Best of Android 2017: Which camera IS the best?

One of the most important areas for every smartphone is how good its camera is, but there are two ways to judge a camera: by whether it is technically good, or whether it looks good. Often, the most accurate smartphone cameras aren’t the ones that produce pictures that look good, so how do you decide which is the best smartphone camera?

For Best of Android 2017, we introduced an all-new method of testing smartphone cameras objectively, but we also wanted to see which smartphone camera looks the best. To do so, we split our camera coverage into two parts. Below we’ll go into which is technically the best, based on all of our data. If you’re interested in which looks the best, check our 10 phone camera shootout post and vote in our poll.

What we tested

Given that modern photography is digital, objectively assessing image quality should be pretty straightforward, right? Wrong.

As recent controversies with scoring objective image data have highlighted, it’s very tough to come up with scores that mean something to the average consumer. To non-enthusiasts, taking a dive into test results can be boring and stressful, and nobody wants that. While the data we collected is much more comprehensive and complicated than what we’re showing here, we picked a few different basic measurements to compare the cameras of our candidate smartphones. Don’t worry, we’ll guide you through what we found without making it any more complicated. No scores out of ten, no hiding measurements behind skewed graphs, just the data and expert analysis from yours truly.

Bear in mind that photography is also an art form; what looks great often isn’t objectively great. For example: Instagram and a bunch of Lightroom presets will add imperfections and characteristics of “bad” cameras for artistic reasons. To most, “perfectly” processed photos will look drab, a bit soft, and somewhat lifeless. I went easy on phones where results suffered due to either a shortcoming of smartphone cameras as a whole, or a limit to human perception.

While we could rip into the hundreds of pages of esoteric results, we don’t really need to go beyond camera sharpness, color performance, noise performance, and video performance.

How we tested

Testing a camera unit objectively means removing as many variables as possible. In short: we had to create a lab specifically for this purpose. If you’d like to know more about that process, you can delve into all the nerdy details here.

With our perfectly blacked-out testing lab done, we then needed the right equipment. For this, we partnered with imaging specialists Imatest in Boulder, CO. I’ve used their systems in the past for other outlets, and their off-the-shelf solution gives users a time-tested way to get rock-solid objective camera test results. It’s our desire to be as accurate as possible, so instead of banging our heads against the wall creating our own wrapper for a MATLAB analysis, we got the right software for the job.

Our data is collected from only a handful of shots of test charts. Here’s a quick rundown:

- The Xrite Colorchecker is a 24-patch chart containing a 6-patch greyscale and an 18-point color range. From this chart, we can measure color error (ΔC 00, saturation corrected), color saturation, white balance, shot noise, and more.

- The SFRPlus chart is a multi-region slanted-edge resolution chart, capable of revealing all sorts of fun performance data. This is how we test the sharpness capabilities of our cameras, but it also allows us to quantify distortion, lens defects, chromatic aberration, and more. We store all of this data, though we’re only covering sharpness here. If it’s warranted later on, we can dredge up our other findings.

- The DSCLabs Megatrumpet 12 is a chart designed to test the video sharpness capabilities of any 4K-capable image sensor. As the camera pans during recording, the incredibly tiny lines disappear, leaving only a blotchy grey area. This gives us a fairly reliable quantification of how much data a given camera can resolve in a unit called line pairs per picture height (LP/PH).

- A randomly-generated spilled-coins chart created with Imatest‘s chart generator function allows us to expose the weaknesses of the noise reduction algorithm that’s present on all consumer cameras. Ever notice how photos you take in low light look blotchy and strange? That’s the noise reduction feature getting confused about what’s noise and what’s detail. With lots of hard, round edges and bright colors, this chart shows how a camera is likely to remove detail in the name of noise reduction.

Results

After testing our candidate phones, it was striking how similar performance was across most cameras. It’s possible this is because a lot of the image sensors in mobile devices are manufactured by Sony, but it could also have a lot to do with the fact that there are very clear limitations to image sensors that small. Sure, image processing has come a long way—it’s incredible that these units can even take a picture, really—but many of the variations in performance seem to have a lot more to do with software than hardware.

Among flagships, camera performance isn’t incredibly different from phone to phone when you strip away the enhancements applied by the camera API. The Nokia 8 has a super-high sharpness rating, but it achieves this with intense software edge-enhancement. Functionally, you should be happy with any camera here (except for the BlackBerry, anyway).

Color

Of everything that can go wrong with a photo, the color is probably most noticeable. Sure, you could miss your focus, but nothing gives you that visceral reaction of disgust like a magenta pall over your selfie, or orange tint to your bar shot.

While smartphone cameras are pretty poor at color accuracy, they’re just good enough to go toe-to-toe with most point and shoots. However, if there’s any theme to the color performance that I found in the lab, it’s that these cameras have an incredibly hard time finding the correct white balance automatically. That in turn has measurable consequences for color accuracy.

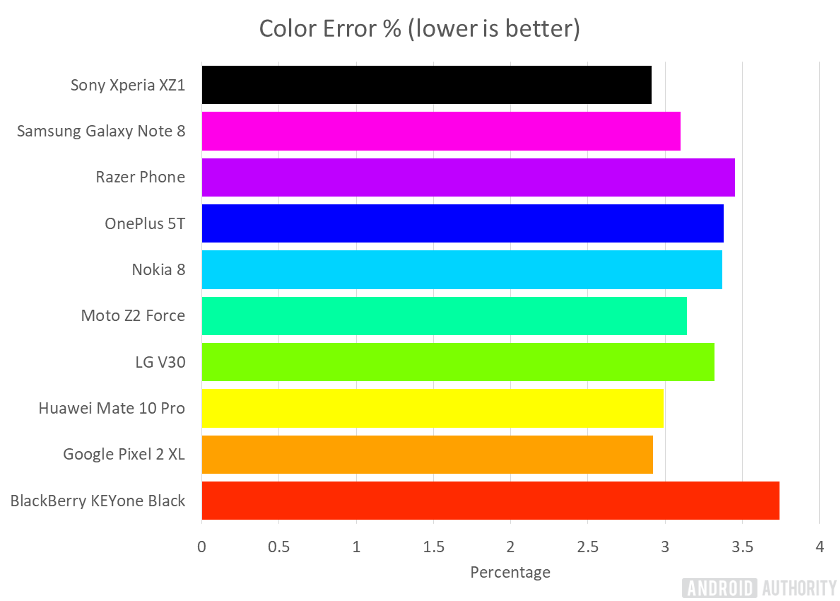

It’s not possible to take manual white balance readings with the stock camera APIs of most smartphones. Despite the greycards, D65 bulbs, and massive sample sizes, these phones all had varying shortcomings in color performance, even in ideal conditions. Let’s start with color accuracy (ΔC 00, saturation corrected). They all did okay, but the Samsung Galaxy Note 8, Google Pixel 2 XL, Sony Xperia XZ1, Moto Z2 Force, and HUAWEI Mate 10 Pro had the best color accuracy by a noticeable margin.

No camera here was truly bad, but I would hesitate to recommend anyone caring about color accuracy to use any of the cameras I didn’t mention just now. Most of those phones just didn’t quite make the cut, either due to shifted colors, or simply a really rough time white balancing. For example, the LG V30 tends to err towards the side of warmer colors, and there’s not a whole lot you can do about it.

The metric we used to track color accuracy has a little “saturation corrected” at the end of it, and that’s no accident. Most casual shooters add a little pop to their shots, commonly by pumping in a little oversaturation of colors. There’s nothing inherently wrong with that. Keeping it tasteful can prevent problems like posterization and clipping, but it shifts color values. We didn’t want to ding any phone unfairly for that, so the metric corrects for saturation before scoring accuracy.

The TL;DR version of this chart is that the photos from the OnePlus 5T, Google Pixel 2 XL, and HUAWEI Mate 10 Pro all have colors that pop just a bit more than the rest of the pack. The other phones aren’t too far behind, however, and no camera undersaturates colors. The key takeaway here is that none of these numbers are very far apart from each other, and that any phone on this list will look more vivid than a shot taken at 100% color saturation. Boring takeaway, sure, but worth exploring.
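For the curious, here is a rough sketch of what “saturation corrected” means in practice. It is not Imatest’s actual calculation: the ΔC 00 metric is based on the CIEDE2000 formula, while this example swaps in a much simpler chroma distance in the a*b* plane, and the patch values are made up for illustration. The function names are hypothetical too.

```python
import math

def chroma(a, b):
    """Chroma is the distance of a color from neutral grey in the a*b* plane."""
    return math.hypot(a, b)

def saturation_percent(measured, reference):
    """Mean measured chroma as a percentage of mean reference chroma."""
    mean_measured = sum(chroma(a, b) for a, b in measured) / len(measured)
    mean_reference = sum(chroma(a, b) for a, b in reference) / len(reference)
    return 100.0 * mean_measured / mean_reference

def mean_chroma_error(measured, reference, correct_saturation=True):
    """Average a*b* distance between measured and reference patches.

    With correct_saturation=True, measured values are first rescaled so the
    overall saturation is 100%, so a uniform color boost is not penalized.
    """
    scale = 1.0
    if correct_saturation:
        scale = 100.0 / saturation_percent(measured, reference)
    errors = [
        math.hypot(ma * scale - ra, mb * scale - rb)
        for (ma, mb), (ra, rb) in zip(measured, reference)
    ]
    return sum(errors) / len(errors)

# Hypothetical (a*, b*) values for three patches; not data from our tests.
reference = [(20.0, 0.0), (0.0, 30.0), (-40.0, 0.0)]
measured = [(24.0, 0.0), (0.0, 36.0), (-48.0, 0.0)]  # every color boosted by 20%

saturation = saturation_percent(measured, reference)
uncorrected = mean_chroma_error(measured, reference, correct_saturation=False)
corrected = mean_chroma_error(measured, reference, correct_saturation=True)
print(saturation, uncorrected, corrected)
```

In this toy case the camera boosts every color by the same amount, so the uncorrected error looks significant while the corrected error drops to essentially zero. A camera that shifts hues, rather than just boosting them, would still show an error after correction.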

Noise

This is probably the trickiest metric to pin down because smartphone cameras live or die by noise reduction algorithms. Essentially, tiny camera sensors have an extremely tough time generating noise-free shots, and often have to rely on low-res sensors to collect enough light. This means that if there’s anything preventing a clean signal from being recorded—a lack of light or internal heat come to mind—you’ll see a bunch of junk in your shot.

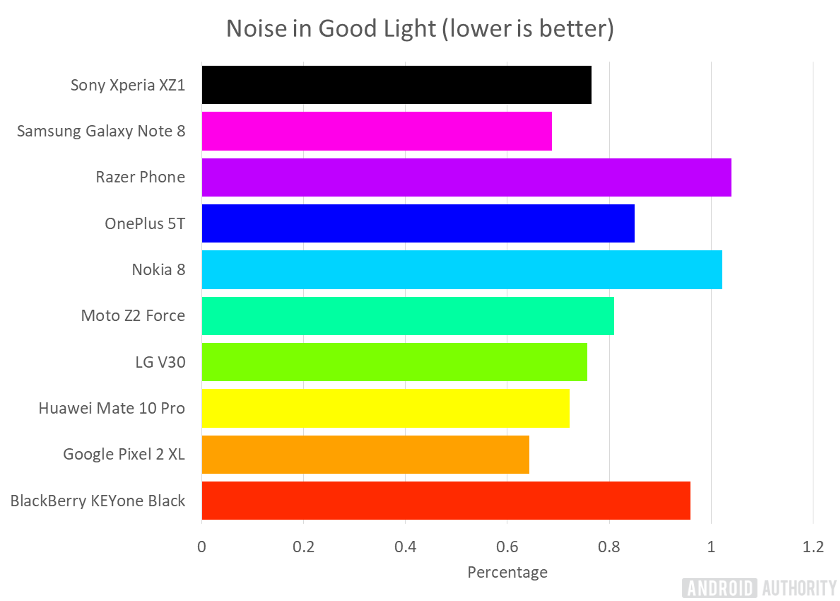

No flagship phone had any issues with shot noise in ideally bright conditions. Despite the Google Pixel 2 XL and the Samsung Galaxy Note 8 beating the competition handily, none of the candidates even approach what we’d consider “bad” or “noticeably worse than others.” For that, you’d typically look for an average noise level of 1-2% at minimum if you’re pixel-peeping.
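To give a feel for what a noise percentage represents, here is a minimal sketch. It assumes noise is expressed as the standard deviation of pixel values in a flat grey patch, as a percentage of the maximum 8-bit level (255). That is a simplification for illustration, and the pixel values are invented rather than taken from our test shots.

```python
import statistics

def noise_percent(patch_pixels, max_level=255):
    """Standard deviation of a uniform patch, as a percentage of max_level."""
    return 100.0 * statistics.pstdev(patch_pixels) / max_level

# Hypothetical pixel values sampled from a grey patch that should be uniform.
clean_patch = [128, 129, 127, 128, 128, 129, 127, 128]
noisy_patch = [120, 136, 124, 132, 128, 118, 138, 128]

clean_noise = noise_percent(clean_patch)
noisy_noise = noise_percent(noisy_patch)
print(round(clean_noise, 2), round(noisy_noise, 2))
```

The first patch lands well under the 1% mark, while the second sits above 2%, which is the territory where pixel-peepers would start to notice.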

Switch to low light, and all bets are off. Smartphone camera sensors are simply too small to perform at the same level as most popular standalone cameras today. Barring a massive drop in resolution or increase in sensor size, there’s really only so much you can expect from a smartphone in this regard. They can’t overcome physics, after all. However, notice that our noise levels are suspiciously low.

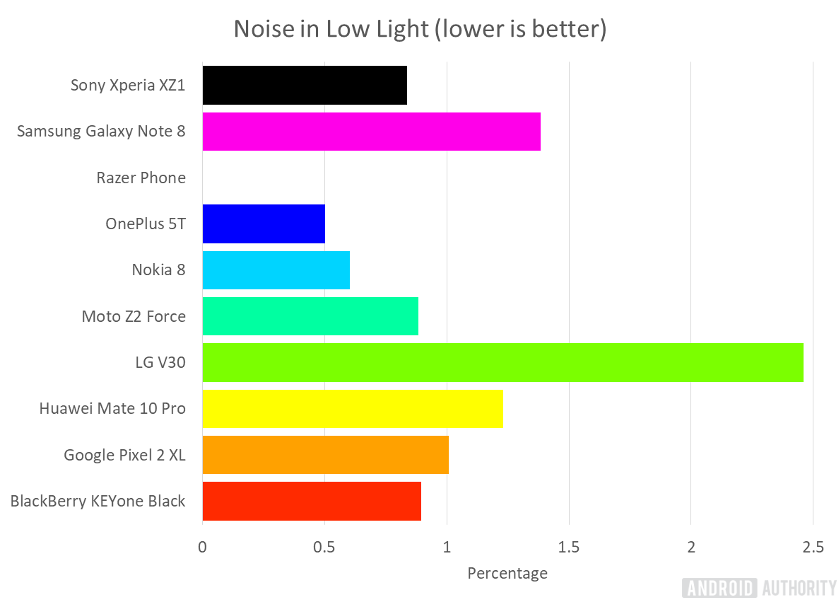

That obviously doesn’t tell the whole story, so we busted out a chart specifically designed to show how badly noise reduction performs. The results were illuminating. Essentially what we’re looking for here is sharp edges, the presence of the tiny circles sprinkled all over the chart, and no other issues like fuzziness, blotchiness where data was averaged out, or false coloration. I peg the OnePlus 5T as the best of the bunch here, despite its long shutter speed, while the rest will be predictably less good at handling dusky situations.

This could all change with an update to any phone’s camera app. If you’re worried about how bad your favorite phone looks here, we’re not even close to done; there’s lots of good stuff to dig into. While processing these files I noticed that many phones appended “LL” in their filenames, indicating that the camera API knew to treat the photo differently than normal. That’s pretty cool, but many cameras still didn’t do so hot.

The LG V30 and the Google Pixel 2 XL showed enormous difficulty in maintaining detail in low light. Both produced different issues. The Pixel 2 XL seemed to err towards a violent destruction of noise at the expense of hard edges. The V30 preserved detail but created a rather strange pattern.

For most cameras, low light means cranking up the ISO (or sensitivity) to make a bright image. Alternatively, you could simply make the shutter speed longer, but that’ll almost always make your shot blurry. While I was impressed with the low light performance of the OnePlus 5T and Nokia 8, the software chose to just use a longer shutter speed when I tested. If this happens when you take a shot, you won’t be able to escape motion blur, and if it doesn’t happen, the noise reduction will be far worse. These results were strange outliers. It doesn’t pass the sniff test, but I’m not about to ignore something I corroborated over several samples. The Samsung Note 8 did well here, but with slightly less shutter speed tomfoolery. Slightly.
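The trade-off between sensitivity and shutter speed is simple arithmetic. As a rough sketch, assuming a fixed aperture and an unchanged scene, image brightness scales with shutter time multiplied by ISO, so lowering one means raising the other by the same factor. The function name and the sample settings below are illustrative, not values recorded from any phone we tested.

```python
import math

def equivalent_shutter(shutter_s, iso_from, iso_to):
    """Shutter time needed at iso_to to match the brightness of
    shutter_s at iso_from (fixed aperture, same scene)."""
    return shutter_s * iso_from / iso_to

# Hypothetical low light shot: 1/30 s at ISO 1600.
base_shutter = 1 / 30
slower = equivalent_shutter(base_shutter, iso_from=1600, iso_to=400)
stops = math.log2(1600 / 400)

print(f"ISO 400 needs {slower:.3f} s, which is {stops:.0f} stops more shutter time")
```

Dropping the ISO by two stops for a cleaner image means holding the phone still for four times as long, which is exactly where motion blur creeps in.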

Sharpness

Sharpness is an easy thing to measure. Performing the tests might be mechanically difficult, but once you get a properly aligned and exposed test shot, you’ll get loads of data from scores of collection points across the frame which can tell you exactly how good your sensor and lens combo is.

This section may seem a little less hardcore than it really should be, but I noticed a few things. First off: we collected a ton of data, but many of the differentiating points between phones really centered around distortion related to the focal length of the lenses, raw sharpness, and software oversharpening. After our initial tests, we ran a couple re-tests to make sure we got accurate data, and here’s what we found:

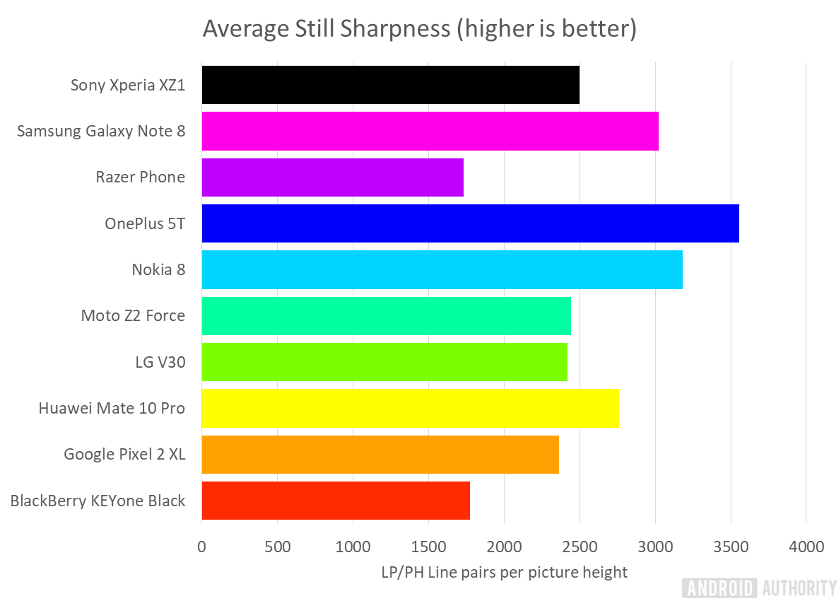

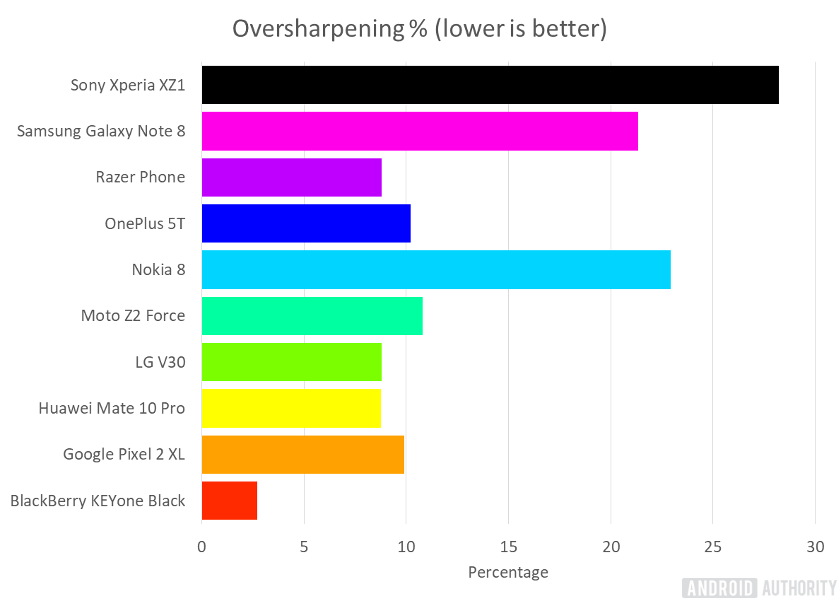

Most of the phones bounce around between 2,000 and 3,000 line widths per picture height (LW/PH), but the Samsung Note 8, Nokia 8, and OnePlus 5T all post scores over 3,000 LW/PH. That’s pretty impressive, but there’s a little more to it than that. Unlike the OnePlus 5T, both the Nokia and Samsung phones rely on heavy oversharpening to get their results. You’re unlikely to really notice it all that much, but oversharpening tends to affect picture quality in subtle ways.
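If the units are unfamiliar, a quick conversion helps. One line pair is a dark line plus a light line, so it counts as two line widths, and dividing by the image height in pixels gives cycles per pixel, where 0.5 is the most any sensor can genuinely resolve. The sketch below assumes a hypothetical 3,024-pixel-tall frame (a common 12MP 4:3 size) purely for illustration; it is not a spec of any phone tested here.

```python
def lwph_to_lpph(lwph):
    """One line pair equals two line widths."""
    return lwph / 2

def lwph_to_cycles_per_pixel(lwph, picture_height_px):
    """Spatial frequency in cycles per pixel; 0.5 is the Nyquist limit."""
    return lwph / (2 * picture_height_px)

score_lwph = 3000          # roughly the top of the range discussed above
picture_height_px = 3024   # hypothetical frame height, for illustration only

line_pairs = lwph_to_lpph(score_lwph)
cycles = lwph_to_cycles_per_pixel(score_lwph, picture_height_px)
print(line_pairs, round(cycles, 3))
```

On a frame that size, 3,000 LW/PH already sits right at the edge of what the pixel grid can hold, which is one reason very high scores tend to point to software sharpening rather than a miracle lens.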

Software oversharpening is a sort of edge-enhancement that imaging processors do to take areas of extreme contrast (hard edges), and push them even further. It’s like using the clarity slider in Photoshop. While some oversharpening will help smartphone cameras overcome the inherent difficulties in tiny camera design, too much will give you a lot of the same effects that the crappy, fake HDR filters do. It will also put noise where it shouldn’t be, and add some bizarre glowing if it’s too extreme. Thankfully, even the worst of the cameras here were pretty average, all things considered.

If you’re having trouble visualizing this, here’s a concrete example. When you see an image with a hard line between black and white, sometimes the camera records a step of grey where the pixels don’t necessarily line up. Oversharpening makes the black side of that image darker, and the white pixels immediately adjacent to that line whiter for the sole purpose of making that edge appear more crisp. In photos, this changes how the detail is preserved, but generally isn’t much of a problem until you go overboard. With a light hand, it can even look good.
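The same idea can be shown with a few numbers. This is a minimal sketch of an unsharp mask, one common sharpening technique, applied to a single row of pixels; it is not the processing any of these phones actually uses, and the pixel values are invented.

```python
def box_blur(row):
    """Three-pixel average, repeating the end pixels at the borders."""
    padded = [row[0]] + list(row) + [row[-1]]
    return [(padded[i] + padded[i + 1] + padded[i + 2]) / 3 for i in range(len(row))]

def unsharp_mask(row, amount=1.0):
    """Add back the difference between each pixel and its blurred version,
    clamped to the 0-255 range."""
    blurred = box_blur(row)
    return [min(255.0, max(0.0, p + amount * (p - b))) for p, b in zip(row, blurred)]

# A dark area, one grey transition pixel, then a bright area.
edge = [50, 50, 50, 128, 200, 200, 200]
sharpened = unsharp_mask(edge)

print([round(v) for v in sharpened])
```

The pixel just on the dark side of the edge gets darker and the pixel just on the bright side gets brighter, while the flat areas farther away are left alone. Raise the `amount` and that overshoot turns into the glowing halo described above.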

All this means that these cameras could very well improve, given time. For example, you may notice that the Razer Phone has been lagging in all these test results; several reviews online point this out too. With a little software TLC, Razer’s camera could be perfectly fine. Notice it doesn’t currently use a lot of enhancements like aggressive noise reduction or oversharpening. Even the color accuracy would benefit from some developer attention to metering the temperature of ambient light. Even if it lags severely now, there’s nothing saying it’ll be like this forever.

Video

Video is also pretty easy to assess, but I have a sneaking suspicion that many of you budding cinematographers are going to be more interested in the specs and features of the cameras rather than the raw performance of the image sensor. Features like the V30’s log shooting profile, for example, may outweigh these results for some.

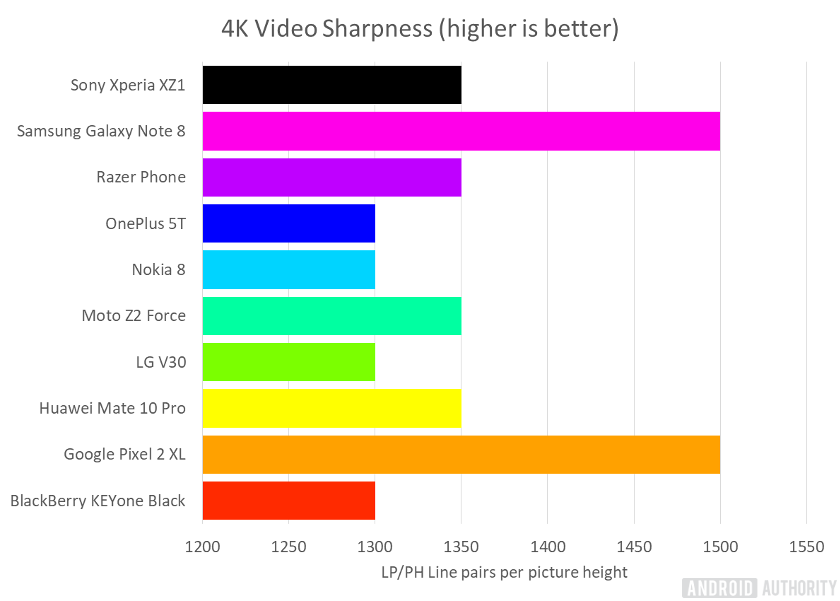

On to business: most of these cameras perform just fine in terms of sharpness. Unsurprisingly, the Google Pixel 2 XL and Samsung Galaxy Note 8 are the standouts. The rest of the pack fall behind, though no camera we tested really reaches the realm of “bad” performance. The Google and Samsung phones are just really, really good. Using our trusty DSCLabs Megatrumpet 4K resolution chart, we found these two cameras performed admirably, and head-and-shoulders above the rest. Both posted measured sharpness of around 1500 LP/PH, which is right around where it should theoretically be for 4K video. The rest of the pack fell behind slightly, but not alarmingly so.

Possible sources of error

I wouldn’t be doing my job if I didn’t make it clear that despite our best efforts, there are a few variables we can’t control. Most smartphone camera systems don’t actually allow you to take a manual white balance reading, which means you have to rely on an intricate system of greycards, D65 bulbs, and the phone’s metering systems performing as they’re expected to.

Sometimes—especially in low light situations—the camera units simply don’t behave in a way that allows them to capture the best results possible. If a camera had troubles even in an ideal situation, that’s an issue. This source of potential error can have consequences for color accuracy, and little else.

The other sources of error revolve around how each mobile device solves the inherent problems of a smartphone camera. Some use longer shutter speeds to gather more light (making motion blur more likely), others ramp up the sensitivity (upping noise, and/or blotchiness). Sometimes the metering systems just don’t perform all that well, which changes the color balance of the shot. These things all happen. It’s enormously difficult to get these settings right automatically, even more so with tiny sensors.

While all this data was collected in sterile conditions, these issues are near-impossible to control. In low light, we modified the EV settings and left the ISO and shutter speeds to the camera to decide. Doing this can cause other problems not evident in our tests.

A note on the difference between objective and subjective

Objective camera testing only tells us so much. It’s useful for people wanting the absolute most out of their equipment, but its value varies from person to person. It’s entirely possible that the best camera for you isn’t the camera with the most clinically accurate performance.

As I mentioned before, camera features on smartphones are really awesome nowadays. If you’re mostly going to be using Instagram, Lightroom CC, or VSCO: you’re probably going to be less concerned about “perfect” results, and more about things like color depth and in-phone adjustments. On top of that, even crappy camera units that shoot in RAW will also allow you to edit your photos if you want. You can also coax a little more quality out of photos if your phone reaches certain performance benchmarks.

For more on the subjective side of assessing image quality, visit our camera shootout, where your vote counts for the People’s Choice Camera of the Year award!

Winners

With a combo of great color, noise, low light performance, and sharpness, we’d recommend the Samsung Galaxy Note 8, and HUAWEI Mate 10 Pro as having the best cameras, objectively speaking. As we illustrated, these cameras aren’t light-years ahead of the pack; they’re just the best of a super-competitive group.

Overall, the Galaxy Note 8 scores 60 points while the Mate 10 Pro ends up just one point behind with 59 points. The Pixel 2 XL comes in a close third with 56 points, while the OnePlus 5T also deserves a mention, coming in fourth with 52 points. The Xperia XZ1 is a further point behind, followed by the Moto Z2 Force, Nokia 8, LG V30 and BlackBerry KEYone Black. The Razer Phone rather surprisingly comes last by a considerable margin.

If you’re looking for another key attribute for the best smartphone, be sure to check out the other entries in the Best of Android 2017 series. Which phone do you think is Phone of the year? Vote in our poll below, as the winner will be crowned People’s Choice Smartphone Of The Year 2017!

Remember, you could win one of the three smartphones that come placed first, second and third overall! To enter, check out all the details in the widget below and for five extra entries, use this unique code: BOACO1.

Credits

Series Contributors: Rob Triggs, Gary Sims, Edgar Cervantes, Sam Moore, Oliver Cragg, David Imel

Series Editors: Nirave Gondhia, Bogdan Petrovan, Chris Thomas