Affiliate links on Android Authority may earn us a commission. Learn more.

Why haven't we seen another 41-megapixel smartphone camera?

The year was 2012. The smartphone market was already well established, but quality mobile photography was still very much in its infancy. Apple and most other manufacturers had only started focusing on it a few years earlier, and mobile photography still had a long way to go. All that changed with the Nokia PureView 808.

Featuring Carl ZEISS optics, an industry-first 41 MP image sensor and powerful software to boot, the PureView 808 was arguably the first smartphone to really push the envelope of mobile photography. Nokia followed it up the next year with the legendary Lumia 1020, which added 3-axis optical image stabilization and a more extensive, updated camera app. While it retained the same 41 MP resolution, the 1020 used an upgraded back-side illuminated sensor, and it ran Windows Phone 8 instead of Nokia's own Symbian operating system.

This interplay of hardware and software put the Lumia 1020 light years ahead of the competition. So why haven’t we seen other smartphones with similar technology since?

Diffraction, Airy disks and image quality

There are potentially many answers to that question. One involves diffraction and requires a slightly technical explanation, so bear with me.

Light waves typically travel in a straight line. When they pass from one medium into another, such as from air into glass or water, they bend and change their trajectory; when they bounce off certain surfaces, they are redirected. Diffraction (not to be confused with refraction) is a different effect: it occurs when light waves encounter an obstacle or pass through an opening and spread out around its edges. The spreading waves then overlap and interfere with one another.

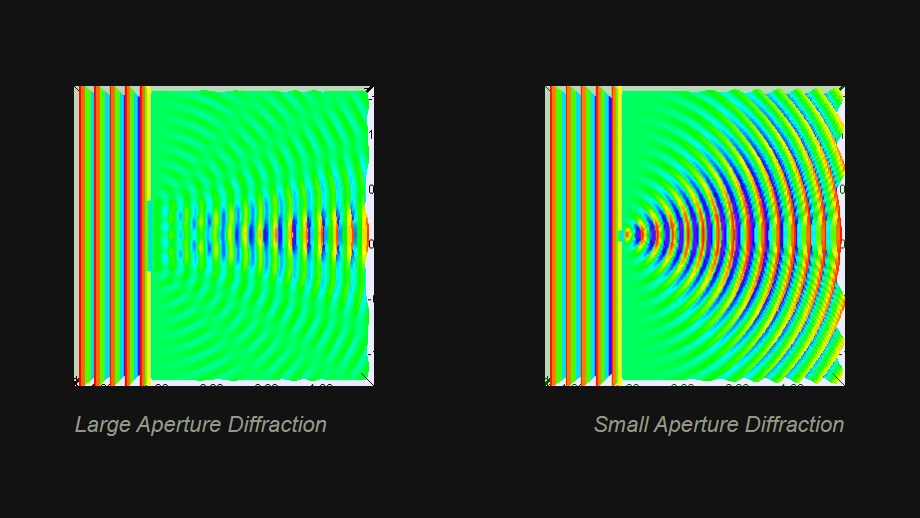

If you imagine the obstacle as a wall with a small round opening in it, light waves passing through the opening will be subject to at least some degree of diffraction. The extent of diffraction depends on the size of the opening relative to the wavelength of the light: a larger opening causes less spreading, while a smaller opening causes more. Something similar occurs inside a camera lens, where the aperture plays the role of the opening. The two images below should help visualize the diffraction phenomenon.
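To put a number on "smaller opening, more diffraction", the standard result for a circular opening is that the first dark ring sits at an angle θ where sin θ ≈ 1.22 λ / D, with λ the wavelength and D the opening's diameter. The sketch below assumes green light at 550 nm; the opening sizes are arbitrary illustrative values, not measurements of any real lens.

```python
import math

def first_dark_ring_angle_deg(wavelength_nm: float, opening_diameter_um: float) -> float:
    """Angular radius (degrees) of the first dark ring for light passing through
    a circular opening, from sin(theta) = 1.22 * wavelength / diameter."""
    wavelength_um = wavelength_nm / 1000.0
    ratio = 1.22 * wavelength_um / opening_diameter_um
    if ratio >= 1.0:
        # Opening is so small that the light spreads over the whole half-space
        return 90.0
    return math.degrees(math.asin(ratio))

# Assumed 550 nm (green) light through progressively smaller openings
for d_um in (100.0, 10.0, 2.0):
    angle = first_dark_ring_angle_deg(550, d_um)
    print(f"{d_um:6.1f} um opening -> first dark ring at {angle:.2f} deg")
```

Shrinking the opening tenfold makes the spread roughly tenfold wider, which is the inverse relationship described above.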

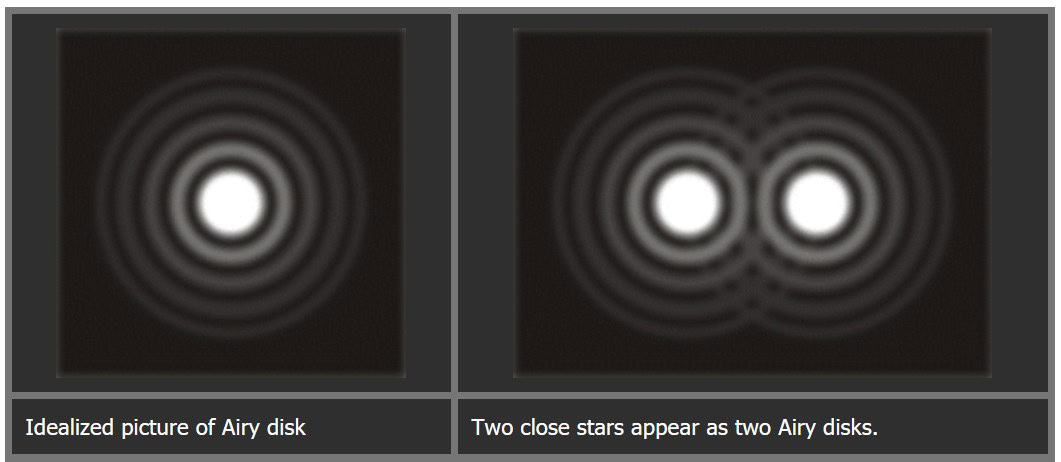

As you can see above, diffracted light waves propagate outwards in a circular pattern. Inside a camera lens, when light passes through the aperture, a similar circular pattern is created on the image sensor: a bright spot in the center, surrounded by concentric rings. The bright spot in the center is called an Airy disk, and the whole pattern is called an Airy pattern. They're named after Sir George Biddell Airy, who described the phenomenon theoretically in 1835. Narrower apertures (higher f-numbers) cause more diffraction, resulting in larger Airy disks.
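For a lens focused at a distant subject, a widely used approximation for the diameter of the Airy disk on the sensor (measured to the first dark ring) ties it directly to the wavelength and the f-number:

$$
d_{\text{Airy}} \approx 2.44\,\lambda\,N, \qquad N = \frac{f}{D}
$$

Here λ is the wavelength of the light, f is the focal length and D is the diameter of the aperture. Stopping down the lens raises N, and the Airy disk grows in proportion.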

When you take a photo, every point of light in the scene is imaged onto the sensor as its own Airy pattern, so the sensor receives many Airy disks at once. The size of these disks and the distance between adjacent ones play an important role in determining the overall detail and sharpness of the final image.
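One common way to decide whether two neighboring Airy disks are still distinguishable is the Rayleigh criterion: two points are just resolved when the center of one disk falls on the first dark ring of the other, i.e. when they are at least one Airy radius (about 1.22 λN) apart. The sketch below assumes 550 nm light; the separations and f-numbers are hypothetical examples.

```python
def airy_radius_um(f_number: float, wavelength_nm: float = 550.0) -> float:
    """Radius of the Airy disk (center to first dark ring) on the sensor, in µm."""
    return 1.22 * (wavelength_nm / 1000.0) * f_number

def resolvable(separation_um: float, f_number: float, wavelength_nm: float = 550.0) -> bool:
    """Rayleigh criterion: two image points are resolved if they are at least
    one Airy radius apart on the sensor."""
    return separation_um >= airy_radius_um(f_number, wavelength_nm)

print(resolvable(1.5, 2.0))  # True: radius at f/2.0 is about 1.34 µm
print(resolvable(1.5, 2.8))  # False: radius at f/2.8 is about 1.88 µm
```

The same 1.5 µm gap on the sensor is resolvable at f/2.0 but not at f/2.8, because stopping down enlarges every Airy disk.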

‘Diffraction-limited’ optical systems

An image sensor is essentially a grid of pixels. When a picture is taken, the sensor is illuminated by light, and the pixels convert the light they receive into electrical signals that are processed into a digital image. On small, high-resolution sensors with densely packed pixels, an Airy disk can be wider than a single pixel. The light from one point in the scene then spreads over several pixels, causing a noticeable loss of sharpness and detail.
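A quick way to see how far one Airy disk spreads is to compare its diameter with the pixel pitch. This sketch uses the Google Pixel figures from the comparison table later in this article (2.7 µm Airy disk, 1.55 µm pixels) and treats pixels as ideal squares with no gaps, which is a simplification.

```python
import math

def pixels_spanned(airy_diameter_um: float, pixel_pitch_um: float) -> float:
    """How many pixel widths one Airy disk covers along a single row."""
    return airy_diameter_um / pixel_pitch_um

def disk_area_in_pixels(airy_diameter_um: float, pixel_pitch_um: float) -> float:
    """Area of the Airy disk expressed in units of one (square) pixel's area."""
    radius = airy_diameter_um / 2.0
    return math.pi * radius ** 2 / pixel_pitch_um ** 2

airy, pitch = 2.7, 1.55  # Google Pixel values from the table
print(f"spans {pixels_spanned(airy, pitch):.2f} pixel widths")
print(f"covers about {disk_area_in_pixels(airy, pitch):.2f} pixel areas")
```

Because a disk rarely lines up exactly with the pixel grid, the number of pixels it actually touches is usually larger than its area alone suggests.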

At narrower apertures, the problem gets worse as neighboring Airy disks grow and begin to overlap. This is what it means when a system is 'diffraction limited': its image quality is capped by diffraction rather than by other factors such as lens imperfections. While you can combat this in a number of ways, there are many complex variables at play, which introduce interesting trade-offs.

Ideally, you want an Airy disk to be small enough that it doesn't spill from one pixel onto many others. On most recent flagships, pixels aren't much smaller than the Airy disks their lenses produce. Because these phones use such small sensors, manufacturers have had to limit resolution to keep Airy disks from spreading across too many pixels. Raising the resolution without also increasing sensor size would shrink each pixel further relative to the Airy disk, seriously damaging image quality. To make matters worse, smaller pixels also capture less light, which hurts low-light performance.
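The effect of adding megapixels to a fixed sensor can be sketched with simple geometry: pixel pitch is roughly the square root of sensor area divided by pixel count. The 6.17 × 4.55 mm active area below is an assumed, commonly quoted figure for a 1/2.3-inch-type sensor, and the Airy disk uses the f/2.0, 550 nm approximation; both are illustrative assumptions.

```python
import math

def pixel_pitch_um(sensor_w_mm: float, sensor_h_mm: float, megapixels: float) -> float:
    """Approximate pitch of square pixels tiling the sensor (ignores borders and gaps)."""
    area_um2 = (sensor_w_mm * 1000.0) * (sensor_h_mm * 1000.0)
    return math.sqrt(area_um2 / (megapixels * 1e6))

# Assumed active area of a 1/2.3-inch-type sensor
W_MM, H_MM = 6.17, 4.55
# Airy disk diameter at f/2.0 with assumed 550 nm light (2.44 * wavelength * N)
AIRY_F2_UM = 2.44 * 0.55 * 2.0

for mp in (12, 16, 41):
    pitch = pixel_pitch_um(W_MM, H_MM, mp)
    print(f"{mp:2d} MP -> {pitch:.2f} um pixels, "
          f"Airy disk spans {AIRY_F2_UM / pitch:.1f} pixel widths")
```

Since each pixel's light-gathering area scales with the square of its pitch, the same loop also shows why low-light performance suffers as resolution climbs.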

It may seem counter-intuitive, but a lower-resolution sensor can sometimes produce better images, simply because larger pixels are the solution to these issues.

But what about sampling?

However, larger pixels aren't great at resolving fine detail. To faithfully reproduce all the information in a source signal, you need to sample it at least twice as often as the highest frequency it contains, a rule known as the Nyquist (or Nyquist-Shannon) sampling theorem. For a camera, that means at least two pixels for every line pair of the finest detail the lens delivers. In simpler terms, a photo recorded at double the resolution of its final output size will look its sharpest.
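The sampling rule translates directly into pixel terms: a sensor with pitch p samples at 1/p pixels per millimeter, so the finest pattern it can record is 1/(2p) line pairs per millimeter. Anything finer folds back ("aliases") into a coarser, false pattern such as moiré. This is a minimal sketch of both ideas; the 1.55 µm pitch comes from the Pixel row of the table later in the article, and the 400 lp/mm test pattern is a hypothetical example.

```python
def nyquist_lp_per_mm(pixel_pitch_um: float) -> float:
    """Finest detail (line pairs per mm) a pixel grid can record: two pixels per line pair."""
    pitch_mm = pixel_pitch_um / 1000.0
    return 1.0 / (2.0 * pitch_mm)

def apparent_frequency(signal_freq: float, sample_rate: float) -> float:
    """Frequency a sampled sine wave appears to have after sampling.
    Frequencies above sample_rate / 2 fold back to a lower, false frequency."""
    return abs(signal_freq - sample_rate * round(signal_freq / sample_rate))

pitch_um = 1.55
samples_per_mm = 1000.0 / pitch_um
print(f"Nyquist limit: {nyquist_lp_per_mm(pitch_um):.0f} lp/mm")
# A hypothetical 400 lp/mm pattern is above the limit, so it shows up as a coarser one
print(f"400 lp/mm is recorded as {apparent_frequency(400.0, samples_per_mm):.0f} lp/mm")
```

The same logic explains why oversampling helps: doubling the sampling rate pushes the aliasing threshold beyond the finest detail the lens can deliver.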

But that only holds for a perfect signal, and in high-resolution smartphone cameras diffraction blurs the image before it ever reaches the pixels. So while the Nokia's sensor could hide some of its shortcomings through its high resolution and oversampling, the images it recorded were nowhere near as sharp as its 41 MP resolution would suggest.
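Oversampling in its simplest form means recording many small pixels and then averaging groups of them into fewer, larger output pixels. The sketch below uses plain block averaging ("binning"); it is an illustrative stand-in, not Nokia's actual PureView processing, which is not described here. Averaging n uncorrelated noisy pixels reduces random noise by roughly a factor of √n, which is part of why the trick masks some weaknesses.

```python
from typing import List

def bin_pixels(image: List[List[float]], factor: int) -> List[List[float]]:
    """Average non-overlapping factor x factor blocks into single output pixels.
    Rows and columns that don't fill a whole block are dropped."""
    rows = len(image) // factor
    cols = len(image[0]) // factor if image else 0
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            block = [image[r * factor + i][c * factor + j]
                     for i in range(factor) for j in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out

# Hypothetical 4 x 4 sensor readout with slightly noisy values
img = [[10, 12, 50, 52],
       [14, 12, 48, 50],
       [90, 92, 20, 22],
       [94, 92, 18, 20]]
print(bin_pixels(img, 2))  # [[12.0, 50.0], [92.0, 20.0]]
```

Binning smooths noise but cannot restore detail that diffraction already blurred before the light reached the pixels.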

Given the space constraints inside a smartphone, image quality loss due to diffraction does become a real problem, especially on smaller sensors with higher resolutions.

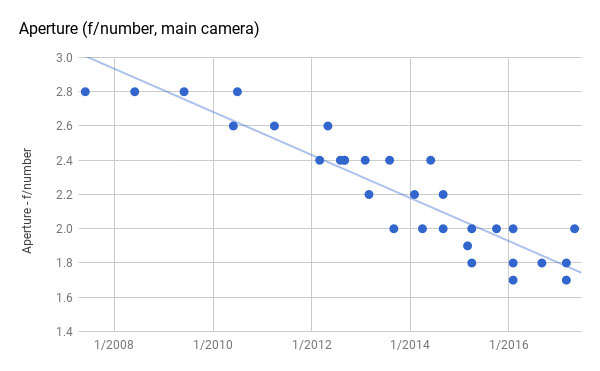

Evolution of smartphone cameras

Smartphones have come a long way, but they can't rewrite the laws of physics. Though the Nokia combined a large sensor with a huge resolution, industry leaders have since chosen to limit sensor resolution to minimize diffraction issues. As the table below shows, the original Pixel, modest as its camera specs may seem, suffers much less from diffraction than the Lumia 1020 did, because its Airy disks are smaller relative to its pixels. That's before you even consider the advancements in image sensor tech since then.

| Smartphone | Aperture | Sensor format (inch) | Airy disk diameter (µm) | Pixel size (µm) |
|---|---|---|---|---|
| Google Pixel/Pixel XL | f/2.0 | 1/2.3 | 2.7 | 1.55 |
| Nokia Lumia 1020 | f/2.2 | 1/1.5 | 2.95 | 1.12 |
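The Airy disk figures in the table are consistent with the approximation d ≈ 2.44 λN evaluated for green light. The article doesn't state the wavelength used, so the 550 nm below is an assumption; this sketch simply recomputes that column from each phone's f-number.

```python
AIRY_FACTOR = 2.44     # diameter to the first dark ring, in units of wavelength * N
WAVELENGTH_UM = 0.55   # assumed: green light, 550 nm

def airy_diameter_um(f_number: float) -> float:
    """Approximate Airy disk diameter on the sensor for a given f-number."""
    return AIRY_FACTOR * WAVELENGTH_UM * f_number

phones = {"Google Pixel/Pixel XL": 2.0, "Nokia Lumia 1020": 2.2}
for name, n in phones.items():
    print(f"{name}: f/{n} -> {airy_diameter_um(n):.2f} um")
```

At f/2.0 this gives about 2.68 µm (the table's 2.7, rounded) and at f/2.2 about 2.95 µm, so the Lumia's slightly narrower aperture alone makes its Airy disks roughly 10% wider.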

Image sensors, hardware ISPs, and AI-powered software algorithms have seen huge improvements over the last decade, but they can only do so much to compensate for image quality loss in a ‘diffraction limited’ optical system. While the Lumia 1020’s sensor had a lot to offer in 2013, sensors on today’s smartphones perform better in just about every way, and use almost 40% less space.

Wrap up

While Nokia's 41 MP sensor used oversampling to mask its issues, it's far cheaper and easier to just make a sensor with a more sensible resolution than to rekindle the Megapixel Wars.

12 MP to 16 MP sensors will continue to be the staple for smartphones for the foreseeable future. Better photographic performance will come from optimizations to the underlying hardware and software ecosystem, rather than from super high-resolution sensors.