Affiliate links on Android Authority may earn us a commission.

Display specs and terms explained: Resolution, contrast, color gamut, and more

Shopping for a new display has never been more confusing. Between a myriad of competing standards and new display specifications, it’s often hard to tell which product is better. Even panels from the same manufacturer can boast vastly different features and specifications.

In this article, we’ve compiled a list of 14 display specifications common across monitors, TVs, and smartphones. Let’s take a quick look at what they mean and which ones you should pay the most attention to.


A comprehensive guide to display specs

Resolution

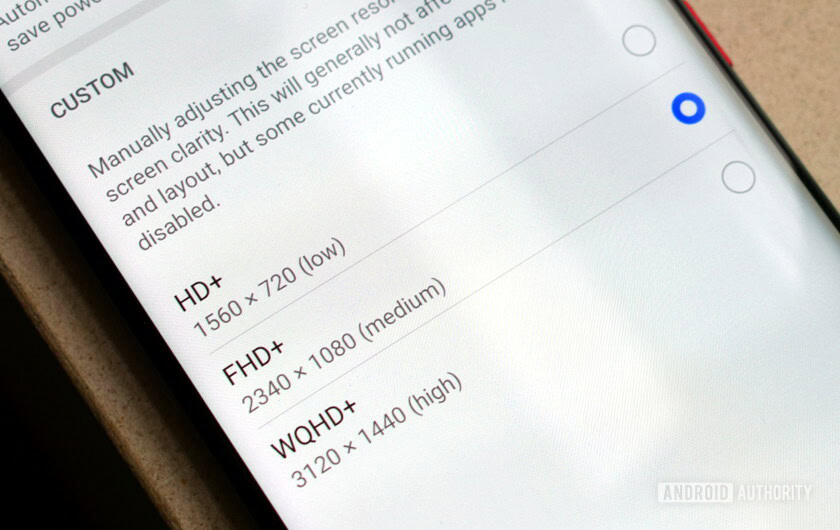

Resolution is by far the single most prominent display specification these days. Marketing buzzwords aside, a display’s resolution is simply the number of pixels in each dimension, horizontal and vertical. For example, 1920 x 1080 indicates that the display is 1920 pixels wide and 1080 pixels tall.

Very broadly speaking, the higher the resolution, the sharper the display, although the ideal resolution depends on your intended use case. A TV, for instance, benefits from a higher-resolution display much more than a smartphone or even a laptop, because its pixels are spread across a much larger screen.

Resolution is a classic example of how a higher number is not always better.

The industry-standard resolution for TVs is now 4K, or 3,840 x 2,160 pixels, also commonly referred to as UHD or 2160p. Finding content at this resolution is not difficult: Netflix, Amazon Prime, and Disney+ all offer 4K content.

Smartphones, on the other hand, are a bit less standardized. Only a tiny percentage of devices, like Sony’s flagship Xperia 1 series, feature a 4K-class display. Other high-end smartphones, such as the Samsung Galaxy S22 Ultra and OnePlus 10 Pro, include 1440p displays. Finally, the vast majority of sub-$1,000 devices have 1080p-class displays.

See also: 1080p vs 1440p: How much does 1440p really affect battery life?

There are two upsides to having a lower-resolution screen on a compact, handheld device: it requires less processing power and, consequently, is more energy-efficient. Take the Nintendo Switch, for example, which has a paltry 720p screen to ease the load on its mobile SoC.
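To put the processing load in perspective, the sketch below simply counts how many pixels each common resolution asks the device to render per frame, relative to 1080p. Treat pixel count as a rough proxy only: the names here are illustrative, and real GPU and power costs also depend on the content, the panel, and the chip.

```python
# Pixel counts for common 16:9 resolutions, compared with 1080p.
# Pixel count is only a rough proxy for rendering load.
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K (2160p)": (3840, 2160),
}


def pixel_count(width: int, height: int) -> int:
    """Total number of pixels the device has to fill every frame."""
    return width * height


baseline = pixel_count(*RESOLUTIONS["1080p"])
for name, (w, h) in RESOLUTIONS.items():
    total = pixel_count(w, h)
    print(f"{name:>11}: {total:>9,} pixels ({total / baseline:.2f}x 1080p)")
```

4K works out to exactly four times the pixels of 1080p, while the Switch’s 720p screen has fewer than half as many.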

The average user will likely not perceive a bump in clarity from 1080p to 4K on a typical 6-inch smartphone display.

In that vein, the vast majority of computer monitors and laptop displays today are 1080p. One reason for this is that 1080p displays are cheaper than their higher-resolution counterparts. More importantly, however, a high-resolution display requires beefier (and more expensive) graphics hardware to power it.

So what’s the ideal resolution? For portable devices like smartphones and laptops, 1080p or even 1440p is likely all you need. It’s only at larger display sizes that you should start to consider 4K a baseline requirement.
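Screen size matters because the same pixels get spread over a different area. A minimal sketch, assuming standard 16:9 pixel dimensions and hypothetical viewing distances (a phone at 12 inches, a TV at 8 feet), computes pixel density (PPI) and pixels per degree of your field of view. The 60 pixels-per-degree figure is a common rule of thumb for 20/20 vision (about one arcminute per pixel), not a hard limit.

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density: pixels along the diagonal divided by the diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


def pixels_per_degree(density_ppi: float, distance_in: float) -> float:
    """Pixels that fit into one degree of your field of view at a given distance."""
    # One degree of view covers 2 * d * tan(0.5 deg) inches at distance d.
    return density_ppi * 2 * distance_in * math.tan(math.radians(0.5))


# (label, width px, height px, diagonal in, viewing distance in); distances are assumptions.
SCENARIOS = [
    ("6-inch phone, 1080p", 1920, 1080, 6, 12),
    ("6-inch phone, 4K", 3840, 2160, 6, 12),
    ("65-inch TV, 1080p", 1920, 1080, 65, 96),
    ("65-inch TV, 4K", 3840, 2160, 65, 96),
]

for label, w, h, diag, dist in SCENARIOS:
    density = ppi(w, h, diag)
    ppd = pixels_per_degree(density, dist)
    print(f"{label:<20} {density:6.1f} ppi  {ppd:6.1f} pixels/degree")
```

Under these assumptions, a 1080p phone already lands above the 60 pixels-per-degree rule of thumb, while a 1080p 65-inch TV viewed from 8 feet falls below it and the 4K version clears it comfortably.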

Aspect ratio

Aspect ratio is another specification that describes the shape of the display. Instead of an exact pixel count like resolution, however, it simply gives you the ratio of the display’s width to its height.

A 1:1 aspect ratio means that the screen has equal horizontal and vertical dimensions. In other words, it would be a square. The most common aspect ratio is 16:9, a rectangle roughly 1.78 times as wide as it is tall.

Unlike many other specifications on this list, one aspect ratio isn’t necessarily better than another. Instead, it almost entirely comes down to personal preference. Different types of content are also better suited to a specific aspect ratio, so it depends on what you will use the display for.

Many films, for instance, are shot in 2.39:1. Incidentally, this is pretty close to most ultrawide displays, which are marketed with an aspect ratio of 21:9. Most streaming content, on the other hand, is produced at 16:9 to match the aspect ratio of televisions.
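Here is a small sketch of the arithmetic behind aspect ratios: reducing a resolution to its simplest ratio, and estimating how much of the screen’s height turns into black bars when 2.39:1 footage is fit to a 16:9 or ultrawide screen. The resolutions are common examples chosen for illustration; note that panels sold as “21:9” are not exactly 21:9 once reduced.

```python
from math import gcd


def simplest_ratio(width_px: int, height_px: int) -> str:
    """Reduce a resolution to its simplest width:height ratio."""
    d = gcd(width_px, height_px)
    return f"{width_px // d}:{height_px // d}"


def letterbox_fraction(content_ratio: float, screen_ratio: float) -> float:
    """Share of the screen's height lost to black bars when wider content is fit to the width."""
    if content_ratio <= screen_ratio:
        return 0.0  # content is not wider than the screen, so no top/bottom bars
    return 1 - screen_ratio / content_ratio


for w, h in [(1920, 1080), (2560, 1080), (3440, 1440)]:
    print(f"{w} x {h} -> {simplest_ratio(w, h)} ({w / h:.2f}:1)")

for name, screen in [("16:9 TV", 16 / 9), ("3440 x 1440 ultrawide", 3440 / 1440)]:
    bars = letterbox_fraction(2.39, screen)
    print(f"2.39:1 film on a {name}: {bars:.0%} of the height is black bars")
```

On a 16:9 screen, roughly a quarter of the height goes to black bars, while a 3440 x 1440 ultrawide (43:18, about 2.39:1) fills the screen almost completely.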

You don’t have much choice when it comes to TV aspect ratios — almost all of them are 16:9.

As for productivity-related use cases, laptop and tablet displays with 16:10 or 3:2 aspect ratios have become increasingly popular of late. Microsoft’s Surface Laptop series, for instance, houses a 3:2 display. These offer more vertical real estate than a typical 16:9 display of the same size, which means you get to see more text or content on the screen without scrolling. If you multitask a lot, however, you may prefer the 21:9 or 32:9 ultrawide aspect ratios since you can have many windows side by side.
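How much extra vertical room does a taller ratio buy? A minimal sketch, assuming a hypothetical 13.5-inch diagonal, works out the physical width and height of the same-size panel at 16:9, 16:10, and 3:2.

```python
import math


def panel_dimensions(diagonal_in: float, ratio_w: int, ratio_h: int) -> tuple[float, float]:
    """Physical width and height (inches) for a given diagonal and aspect ratio."""
    scale = diagonal_in / math.hypot(ratio_w, ratio_h)
    return ratio_w * scale, ratio_h * scale


DIAGONAL = 13.5  # hypothetical laptop screen size, in inches
for rw, rh in [(16, 9), (16, 10), (3, 2)]:
    width, height = panel_dimensions(DIAGONAL, rw, rh)
    print(f"{rw}:{rh}  {width:5.2f} in wide x {height:4.2f} in tall")
```

At this size, the 3:2 panel comes out roughly 13% taller but about 5% narrower than the 16:9 one, which is the trade-off described above.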

Taller aspect ratios like 3:2 allow you to see more content without scrolling, while sacrificing a bit of horizontal real estate.

Smartphone displays, on the other hand, do offer a bit more variety. On the extreme end, you’ll find devices like the Xperia 1 IV with a 21:9 display. As you’d expect, this makes the phone tall and narrow. If you prefer a device that’s short and wide instead, consider a smartphone with an 18:9 screen. Either way, it’s a matter of personal preference.

Viewing angles

A display’s viewing angles are important because they dictate how well you can see the screen off-center. Naturally, looking at a screen head-on is ideal, but that’s not always possible.

A low or narrow viewing angle means that you could lose some brightness and color accuracy simply by moving your head to the left or right. Similarly, placing the display above or below eye level may also affect the perceived image quality. As you can probably guess, this is also not ideal for shared screen viewing.

A display with poor viewing angles will look significantly worse if you view it off-center.

IPS and OLED displays tend to have the widest viewing angles, easily approaching 180° in most cases. On the other hand, VA and TN panels tend to suffer from narrower viewing angles.

However, viewing angle figures on a spec sheet don’t always tell the full story, since the degree of quality degradation can range from minor to severe. Independent reviews are a better way to gauge how a particular display performs in this area.

Brightness

Brightness refers to the amount of light a display can output. In technical terms, it is a measure of luminance.

Naturally, a brighter display makes content stand out more, allowing your eyes to resolve and appreciate more detail. There’s one more advantage to a brighter display — you can use it in the presence of other light sources.

Higher brightness doesn't just make content look better, it also improves visibility in bright conditions.

Take smartphone displays, for example, which have become progressively brighter over the past few years. A big reason for this push is increased sunlight visibility. Only a decade or two ago, many smartphone displays were borderline unusable outdoors.

Brightness is measured in candela per square meter (cd/m²), also known as nits. Some high-end smartphones, like the Samsung Galaxy S22 series, advertise a peak brightness of well over 1,000 nits. At the other end of the spectrum, you’ll find some devices (like budget laptops) that top out at a paltry 250 to 300 nits.

Most high-end displays offer 1000 nits of brightness. This is nearly eye-searingly bright in a dark room, but necessary for direct sunlight.

There are also two measurements to watch out for — peak and sustained brightness. While most manufacturers will boast a product’s peak brightness, that figure only applies to short bursts of light output. In most cases, you’ll have to rely on independent testing to find out a display’s true brightness capability.

There are diminishing returns at the high end, so a reasonable baseline for brightness is around 350 to 400 nits. This should keep the display reasonably usable in bright conditions, like a sunny day or an exceptionally well-lit room.
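One way to see the diminishing returns is Stevens’ power law, a psychophysics approximation that models perceived brightness as luminance raised to a power of roughly 0.33. The sketch below assumes that commonly cited exponent; it is a lab rule of thumb rather than a display specification, and outdoor visibility also depends on how the display competes with reflected sunlight.

```python
# Stevens' power law: perceived brightness ~ luminance ** exponent.
# 0.33 is a commonly cited lab value for brightness; treat it as an approximation.
STEVENS_EXPONENT = 0.33


def perceived_gain(from_nits: float, to_nits: float, exponent: float = STEVENS_EXPONENT) -> float:
    """Ratio of perceived brightness after raising luminance from from_nits to to_nits."""
    return (to_nits / from_nits) ** exponent


for low, high in [(250, 400), (400, 1000), (1000, 2000)]:
    print(f"{low} -> {high} nits: {high / low:.1f}x the light, "
          f"~{perceived_gain(low, high):.2f}x as bright to the eye")
```

Under this model, going from 400 to 1,000 nits (2.5 times the light) looks only about a third brighter.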


Brightness also has an outsized influence on the display’s HDR capabilities, as we’ll discuss soon. In general, the brightest display is often the best option — all else being equal.

Contrast ratio

Contrast ratio is the measured difference between the bright and dark areas of a display. More precisely, it is the ratio between the luminance of the brightest white and the darkest black the display can produce.

Practically speaking, the average contrast ratio lies between 500:1 and 1500:1. This simply means that a white area of the display is 500 (or 1500) times brighter than the black portion. A higher contrast ratio is more desirable because it provides more depth to colors in the image.

If a display doesn’t produce perfect blacks, darker portions of an image may appear gray instead. Naturally, this is not ideal from an image reproduction standpoint. A low contrast ratio also affects our ability to perceive depth and detail, making the entire image appear washed out or flat.

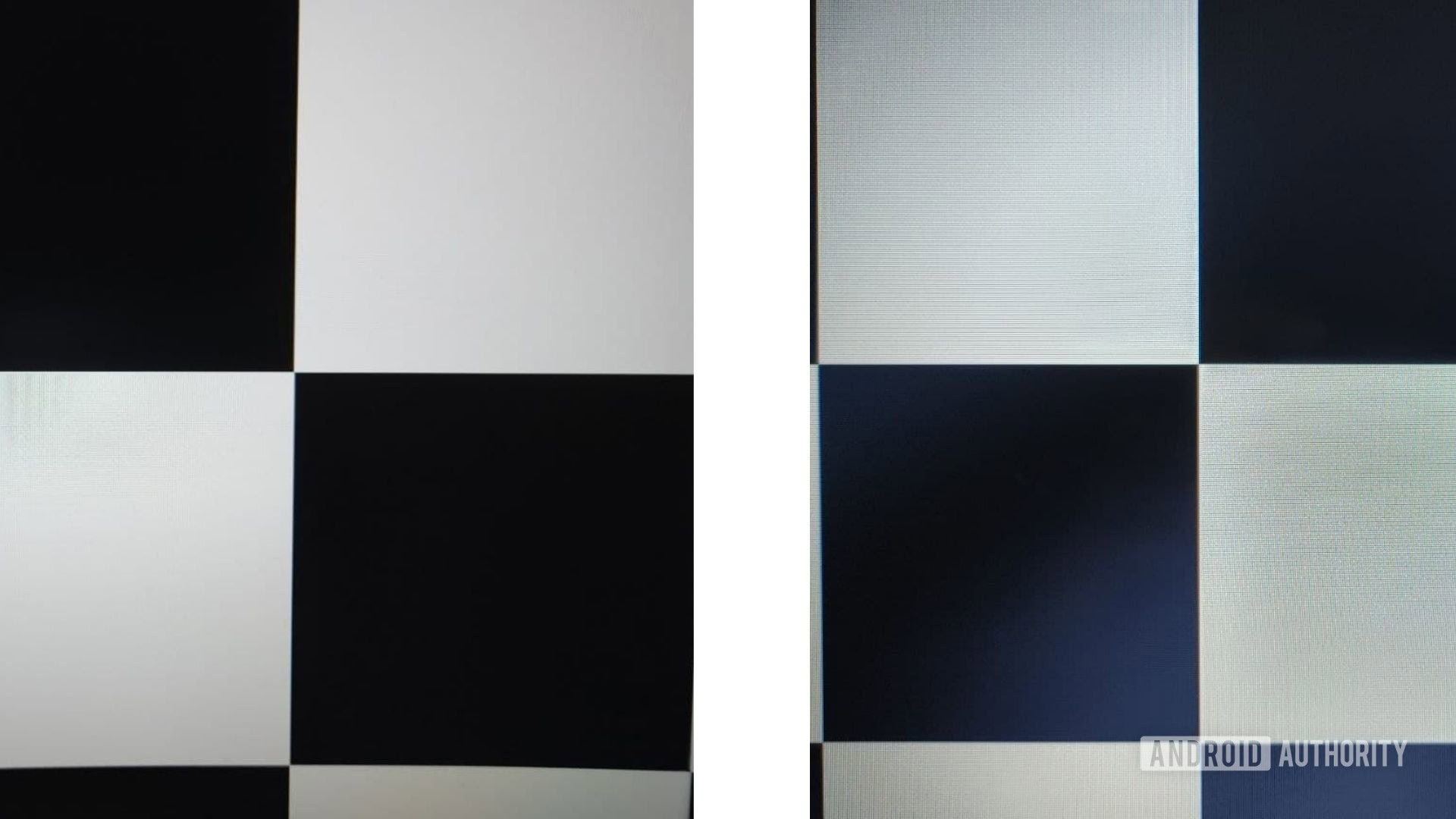

The checkerboard test is a good way to visualize the difference between low and high contrast ratios. The images, captured from two different displays, show a marked difference in contrast levels.

Imagine a dark scene such as a starry night sky. On a display with a low contrast ratio, the sky won’t be pitch black. Consequently, individual stars won’t stand out very much — reducing the perceived quality.

A low contrast ratio is especially apparent when content is viewed in a dark room, where the entire screen will glow even though the image is supposed to look mostly black. In bright rooms, however, your eyes likely won’t be able to tell the difference between a very dark gray and true black, so you could perhaps get away with a lower contrast ratio.
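The room-lighting effect can be sketched with simple arithmetic: contrast ratio is white luminance divided by black luminance, and ambient light reflecting off the screen adds the same amount to both. The panel figures and the 5-nit reflection below are hypothetical values chosen for illustration, not measurements.

```python
def effective_contrast(white_nits: float, black_nits: float, reflected_nits: float = 0.0) -> float:
    """Contrast ratio once ambient light reflected by the screen is added to every pixel."""
    return (white_nits + reflected_nits) / (black_nits + reflected_nits)


# Hypothetical panels: an LCD with 0.4-nit blacks and an OLED-like panel with near-zero blacks.
PANELS = {"LCD (1000:1)": (400, 0.4), "OLED-like": (400, 0.0005)}

for room, reflected in [("dark room", 0.0), ("lit room", 5.0)]:
    for name, (white, black) in PANELS.items():
        ratio = effective_contrast(white, black, reflected)
        print(f"{room:<9} {name:<13} {ratio:>10,.0f}:1")
```

In the dark room, the two panels are worlds apart; once a few nits of room light bounce off the glass, both collapse to somewhere around 75:1 to 80:1, which is why contrast matters most in dim viewing.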

At a minimum, your display should have a contrast ratio above 1000:1. Some displays achieve significantly higher contrast ratios thanks to newer technologies such as local dimming, which we discuss in the following section.

Local dimming

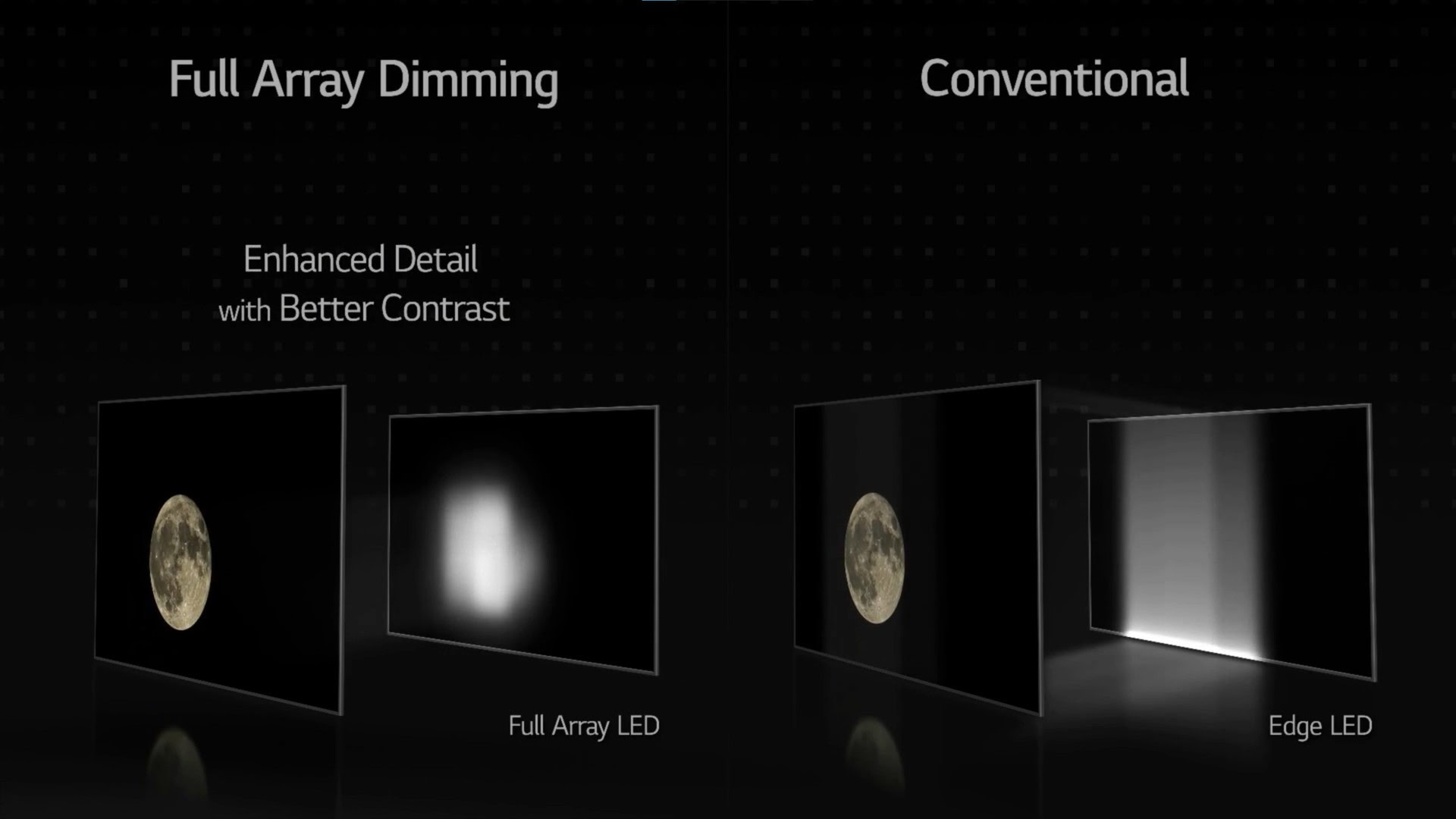

Local dimming is an innovative feature used to improve the contrast ratio of backlit LCD displays.

Displays using OLED technology tend to boast the best contrast, with many manufacturers claiming an “infinite:1” ratio. This is because OLED panels are composed of individual pixels that can completely turn off to achieve true black.

Traditional displays like LCD televisions, however, are not made up of individually lit pixels. Instead, they rely on a backlight that shines through a layer of liquid crystals and color filters to produce an image. If that layer can’t block enough of the backlight, dark areas will appear gray instead of black.

Read more: AMOLED vs LCD: Everything you need to know

Local dimming is a method of improving contrast by splitting the LCD backlight into separate zones. These zones are essentially groups of LEDs that can be dimmed or turned off as required. Consequently, you get deeper blacks simply by dimming or switching off a particular zone’s LEDs.

The effectiveness of a display’s local dimming feature depends primarily on the number of backlight zones. If you have many zones, you get more granular and precise control over how much of the display is illuminated. Fewer zones, on the other hand, will result in a distracting glow or halo around bright objects. This is known as blooming.
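A toy model shows why more zones mean less blooming. This sketch, a one-dimensional simplification rather than any TV maker’s algorithm, drives each zone as bright as its brightest pixel and assumes a pixel set to black still leaks 0.1% of its zone’s backlight (a hypothetical value matching a native 1000:1 panel). Real TVs also scatter light between zones, so actual blooming spreads further than this.

```python
def local_dimming(target, zones, leakage=0.001):
    """Simulate a row of LCD pixels lit by `zones` independently dimmable backlight zones.

    target: desired luminance per pixel, 0.0 (black) to 1.0 (full white).
    leakage: share of the backlight a black pixel still lets through.
    """
    size = len(target) // zones  # assumes the row divides evenly into zones
    shown = []
    for z in range(zones):
        segment = target[z * size:(z + 1) * size]
        backlight = max(segment)  # each zone is driven as bright as its brightest pixel
        # A pixel can dim its zone's backlight but never block it completely.
        shown.extend(max(t, backlight * leakage) for t in segment)
    return shown


def bloomed_pixels(target, shown):
    """Count pixels meant to be black that end up glowing."""
    return sum(1 for t, s in zip(target, shown) if t == 0 and s > 0)


# A single bright star in an otherwise black row of 16 pixels.
row = [0.0] * 16
row[5] = 1.0
for zones in (1, 2, 4, 8, 16):
    glowing = bloomed_pixels(row, local_dimming(row, zones))
    print(f"{zones:>2} zones: {glowing} glowing black pixels")
```

With a single zone (no local dimming), all 15 black pixels glow; with one zone per pixel, none do, which mirrors the self-lit behavior of OLED.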

While local dimming is becoming a pretty common marketing term, pay attention to the number of zones and the implementation. Full-array local dimming (FALD), with LEDs arranged in a grid directly behind the panel, is the most effective implementation of this concept. Edge-lit designs and other backlights with only a handful of zones usually don’t improve contrast as much, if at all.

Read more: OLED vs LCD vs FALD TVs — What are they and which is best?

Gamma

Gamma is a setting that you can usually find buried deep within a display’s settings menu.

Without getting super in-depth, gamma describes how a display maps input signal levels to brightness on the way from black to white. Why is this important? Because signal values aren’t translated 1:1 into display brightness. Instead, the relationship follows a power-law curve: the output brightness is the input signal raised to the power of the gamma value.

Experimenting with various gamma values yields interesting results. At 1.0, where the gamma curve becomes a straight line, you get an image that is extremely bright and flat. Use a very high value like 2.6, though, and the image gets unnaturally dark. In both cases, you lose detail.

The usual target gamma is around 2.2 because it roughly inverts the encoding curve (about 1/2.2) applied to images and video when they are captured. Combined, the two curves reproduce light levels in proportion to the original scene, which is what our eyes expect to see.
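The effect of different gamma values is easy to sketch with a pure power law. This is a simplification: real standards such as sRGB use piecewise curves with a short linear segment near black. The sketch shows how bright a 50% signal appears at several gamma settings, and that encoding with 1/2.2 and then decoding with 2.2 returns the original light level.

```python
def decode(signal: float, gamma: float) -> float:
    """Display side: turn a 0-1 signal value into relative light output."""
    return signal ** gamma


def encode(light: float, gamma: float = 2.2) -> float:
    """Capture side (simplified): the inverse curve, light ** (1 / gamma)."""
    return light ** (1 / gamma)


# How bright a 50% signal appears at different display gamma values.
for g in (1.0, 2.0, 2.2, 2.4, 2.6):
    print(f"gamma {g}: 50% signal -> {decode(0.5, g):.1%} of full brightness")

# Encoding with 1/2.2 and decoding with 2.2 cancel out.
scene_light = 0.18  # an 18% gray card, as a fraction of white
round_trip = decode(encode(scene_light), 2.2)
print(f"round trip: {scene_light} -> {round_trip:.4f}")
```

A 50% signal produces 50% brightness at gamma 1.0 but only around 22% at 2.2 and 16% at 2.6, which is why low values look washed out and high values look dark.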

See also: The importance of gamma

Other common gamma values for displays are 2.0 and 2.4, for bright and dark rooms respectively. This is because your eyes’ perception of contrast depends heavily on the amount of light in the room.

Bit-depth

Bit-depth refers to the amount of color information a display can handle. An 8-bit display, for example, uses 8 bits per color channel, so it can reproduce 2⁸ (or 256) levels each of red, green, and blue. Combined, that gives you a total range of 16.78 million colors!

While that number may sound like a lot, and it absolutely is, you probably need some context. The reason you want a larger range is to ensure that the display can handle slight changes in color.

Take an image of a blue sky, for example. It’s a gradient, which just means that it is made up of different shades of blue. With insufficient color information, the result is rather unflattering. You see distinct bands in the transition between similar colors. We typically call this phenomenon banding.

A display’s bit depth specification doesn’t tell you a whole lot about how it mitigates banding in software. That’s something only independent testing can verify. In theory, though, a 10-bit panel should handle gradients better than an 8-bit one. This is because 10 bits of information equals 2¹⁰, or 1,024, shades each of red, green, and blue.

1,024 (red) x 1,024 (green) x 1,024 (blue) = 1.07 billion colors
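The arithmetic above, plus a rough look at banding, fits in a short sketch. It counts shades and total colors per bit depth, then quantizes a hypothetical narrow gradient (signal values 0.5 to 0.6, like a patch of sky) across a 3,840-pixel-wide row to see how many distinct shades survive and how wide each band becomes. It looks at a single channel and ignores dithering, which real displays and software often use to hide banding.

```python
def color_count(bits_per_channel: int) -> tuple[int, int]:
    """Shades per channel and total colors for a given bit depth per channel."""
    levels = 2 ** bits_per_channel
    return levels, levels ** 3


def gradient_bands(bits_per_channel: int, width_px: int, start: float, end: float) -> tuple[int, float]:
    """Quantize a smooth gradient; return (distinct shades, average band width in pixels)."""
    max_code = 2 ** bits_per_channel - 1
    codes = {
        round((start + (end - start) * x / (width_px - 1)) * max_code)
        for x in range(width_px)
    }
    return len(codes), width_px / len(codes)


for bits in (8, 10):
    levels, total = color_count(bits)
    shades, band = gradient_bands(bits, 3840, 0.5, 0.6)  # hypothetical sky gradient
    print(f"{bits}-bit: {levels:,} shades/channel, {total:,} colors; "
          f"gradient -> {shades} shades, bands ~{band:.0f} px wide")
```

For this gradient, the 10-bit version ends up with roughly four times as many distinct shades, so each band is about a quarter as wide and far harder to spot.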

Remember, though, that to fully appreciate a 10-bit display, you need matching content as well. Luckily, content sources that deliver more color information have become increasingly common of late. Gaming consoles like the PlayStation 5, streaming services, and even UHD Blu-rays all offer 10-bit content. Just remember to enable the HDR option, since the standard output is generally 8-bit.

10-bit displays can handle a lot more color, but most content is still 8-bit.

All in all, if you consume a lot of HDR content, consider picking a display that is capable of 10-bit color, since content mastered for HDR takes advantage of the extended range. For most other use cases, an 8-bit panel will likely be enough.

Color gamut

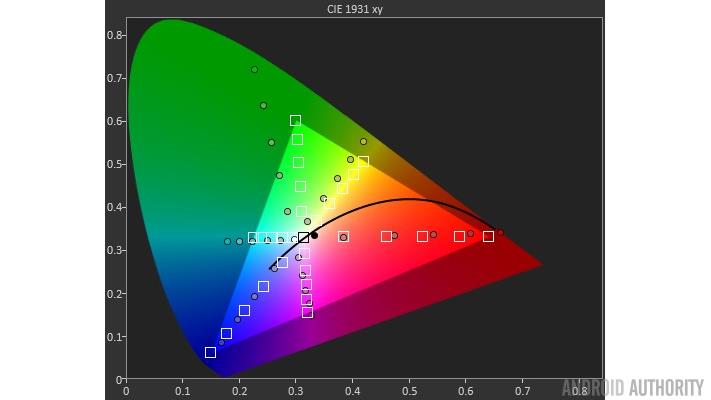

A display’s color gamut specification tells you how much of the visible color spectrum it can reproduce. Think of color gamut as a display’s color palette. Whenever an image needs to be reproduced, the display picks colors from this limited palette.

The visible color spectrum, or what our eyes can see, is commonly represented as a horseshoe shape on the CIE 1931 chromaticity diagram, like the one shown above.

For TVs, the standard color space is Rec. 709. Surprisingly, it only covers about a third of what our eyes can see (like the highlighted portion above). Despite that, it is the color standard adopted by broadcast television and HD video. To that end, consider 95 to 99% coverage of this space a bare minimum rather than a feature.
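Each color space is a triangle on that diagram, defined by the chromaticity coordinates of its red, green, and blue primaries. The sketch below compares the triangle areas of Rec. 709, DCI-P3, and Rec. 2020 using the shoelace formula. Treat this as a rough comparison: a spec sheet’s “coverage” figure is the overlap between the display’s triangle and the target one, and the xy diagram is not perceptually uniform.

```python
# CIE 1931 xy coordinates of each standard's red, green, and blue primaries.
PRIMARIES = {
    "Rec. 709 / sRGB": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec. 2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points):
    """Shoelace formula for the area of the triangle formed by three (x, y) primaries."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


base = triangle_area(PRIMARIES["Rec. 709 / sRGB"])
for name, pts in PRIMARIES.items():
    area = triangle_area(pts)
    print(f"{name:<16} area {area:.4f}  ({area / base:.2f}x Rec. 709)")
```

By this measure, DCI-P3 spans roughly 36% more area than Rec. 709, and Rec. 2020 nearly twice as much.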

In recent years, more expansive color gamuts like DCI-P3 and Rec. 2020 have become key marketing points. Monitors can also offer these wider color gamuts, but you’ll generally only find that feature in professional models. Indeed, if you’re a photographer or video editor, you might benefit from coverage of additional color spaces.

However, most standard content sources like streaming services don’t take advantage of wider color gamuts. That said, HDR is quickly gaining popularity and could make wider color gamuts more accessible.

Like TVs, most computer-related content is designed around the decades-old standard RGB (sRGB) color gamut. sRGB uses the same primaries as Rec. 709, so the two cover the same portion of the color spectrum. Where they differ is in terms of gamma: sRGB approximates a gamma of 2.2, while Rec. 709 video is typically viewed at a gamma of around 2.4, as specified by the later BT.1886 standard. Nevertheless, a display with near 100% coverage of either should serve you well.

Most standard, non-HDR content is mastered for the sRGB or Rec. 709 color space.

About the only devices that still skimp on sRGB coverage these days are low-end laptops. If color accuracy is important to you, consider avoiding displays that cover only 45% or 70% of the sRGB color space.

HDR

HDR, or High Dynamic Range, describes displays that can output a wider range of colors and offer more detail in dark and bright areas alike.

There are three essential components to HDR: brightness, wide color gamut, and contrast ratio. In a nutshell, the best HDR displays tend to offer exceptionally high contrast levels and brightness, in excess of 1,000 nits. They also support a wider color gamut, like the DCI-P3 space.

Read more: Should you buy a phone for HDR?

Smartphones with proper HDR support are common these days. The iPhone 8, for instance, supported Dolby Vision playback as early as 2017. Similarly, the displays on Samsung’s flagship smartphones boast exceptional contrast, brightness, and color gamut coverage.

A good HDR display needs to offer exceptional brightness, contrast, and a wide color gamut.

Unfortunately, though, HDR is another term that has turned into a buzzword in the display technology industry. Still, there are a few terms that can make shopping for an HDR TV or monitor easier.

Dolby Vision and HDR10+ are newer, more advanced formats than HDR10, adding dynamic metadata that lets the display adjust its tone mapping scene by scene. If a television or monitor only supports HDR10, research other aspects of the display too. If it doesn’t support a wide color gamut or get bright enough, it’s likely no good for HDR either.

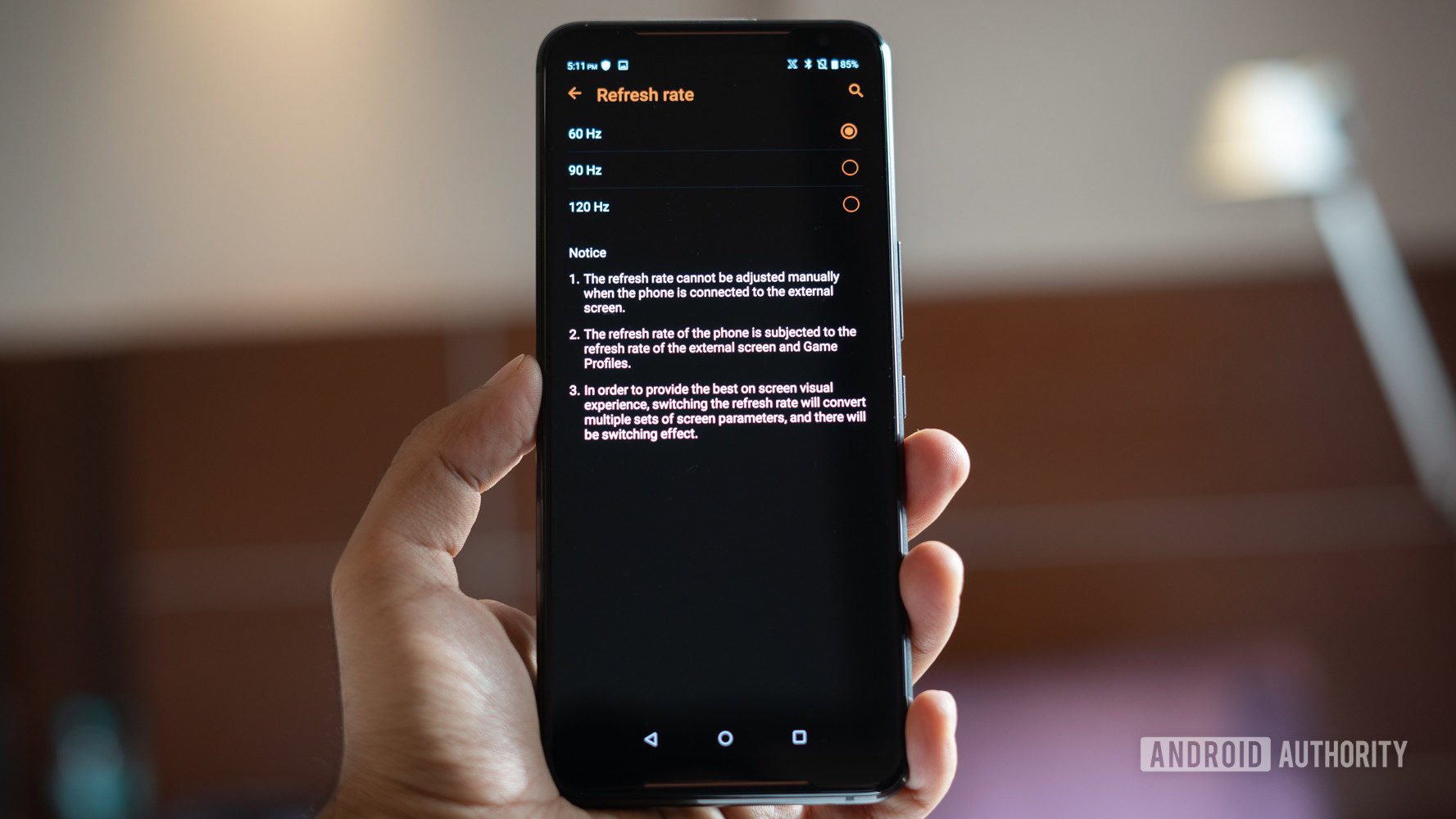

Refresh rate

A display’s refresh rate is the number of times it updates each second. We use Hertz (Hz), the unit of frequency, to measure refresh rate. The vast majority of displays on the market today are 60Hz. That just means they update 60 times per second.

Why does refresh rate matter? The more often the screen refreshes, the smoother animation and motion appear. There are two components to this: the display’s refresh rate and the frame rate of your content, such as a game or video.

Videos are typically encoded at 24 or 30 frames per second. Obviously, your device’s refresh rate should match or exceed this frame rate. However, there are tangible benefits to going beyond that. For one, high frame rate videos do exist. Your smartphone, for example, is likely capable of recording content at 60fps and some sports are broadcast at higher frame rates.

A higher refresh rate gives you the perception of smoothness, especially while interacting with the display.

High refresh rates also offer a smoother experience when you interact with the display. Simply moving a mouse cursor on a 120Hz monitor, for example, will appear noticeably smoother. The same goes for touchscreens, where the display will appear more responsive with a higher refresh rate.

This is why smartphones increasingly ship with displays faster than 60Hz. Almost every manufacturer, including Google, Samsung, Apple, and OnePlus, now offers 90Hz or even 120Hz displays.

Screens that update more frequently also offer gamers a competitive edge. To that end, computer monitors and laptops with refresh rates as high as 360Hz also exist on the market today. However, this is another specification where diminishing returns come into play.

A display's refresh rate offers diminishing returns the higher up you go.

You’ll likely notice a very big difference going from 60Hz to 120Hz. However, the jump to 240Hz and beyond is not as striking.
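The diminishing returns follow directly from frame time, the gap between refreshes, which is 1,000 divided by the refresh rate in milliseconds. A minimal sketch compares how much time each upgrade actually shaves off:

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Time between screen updates, in milliseconds."""
    return 1000 / refresh_hz


for low, high in [(60, 120), (120, 240), (240, 360)]:
    saved = frame_time_ms(low) - frame_time_ms(high)
    print(f"{low} -> {high} Hz: {frame_time_ms(low):.2f} -> "
          f"{frame_time_ms(high):.2f} ms per frame (saves {saved:.2f} ms)")
```

Going from 60Hz to 120Hz cuts about 8.3ms from every frame, while 120Hz to 240Hz saves roughly half that, and 240Hz to 360Hz only about 1.4ms.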

See also: What is refresh rate? What does 60Hz, 90Hz, or 120Hz mean?

Variable refresh rate

As the name suggests, displays with a variable refresh rate (VRR) aren’t bound to a constant refresh rate. Instead, they can dynamically change their refresh rate to match the source content.

When a fixed-refresh display receives frames at an irregular rate, it can end up showing parts of two different frames in a single refresh. This results in a phenomenon called screen tearing. VRR greatly reduces this effect. It can also deliver a smoother experience by eliminating judder and improving frame consistency.
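Judder is easy to see with video. The sketch below works out how many consecutive refreshes each frame of 24fps content stays on screen at a given refresh rate. On a fixed 60Hz display, frames alternate between two and three refreshes, so motion advances unevenly; a 120Hz display, or a VRR display running at a hypothetical 48Hz (an exact multiple of 24), holds every frame for the same amount of time. This is a simplified model that ignores any frame interpolation.

```python
def refreshes_per_frame(fps: int, refresh_hz: int, frames: int = 6) -> list[int]:
    """How many consecutive screen refreshes each source frame stays on screen for."""
    # Frame i is shown from refresh floor(i * hz / fps) up to floor((i + 1) * hz / fps).
    return [(i + 1) * refresh_hz // fps - i * refresh_hz // fps for i in range(frames)]


for hz in (60, 120, 48):
    pattern = refreshes_per_frame(24, hz)
    cadence = "even" if len(set(pattern)) == 1 else "uneven (judder)"
    print(f"24 fps on {hz} Hz: {pattern} -> {cadence}")
```

At 60Hz, the pattern comes out as [2, 3, 2, 3, 2, 3], the classic uneven cadence, while 120Hz gives a steady five refreshes per frame.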

Variable refresh rate technology has its roots in PC gaming. NVIDIA’s G-Sync and AMD’s FreeSync have been the two most prominent implementations for nearly a decade.

Having said that, the technology has recently made its way to consoles and mid- to high-end televisions like LG’s OLED lineup. This is largely thanks to the inclusion of variable refresh rate support in the HDMI 2.1 standard, which both the PlayStation 5 and Xbox Series X support.

Variable refresh rate (VRR) technology benefits gamers by improving frame consistency and reducing judder.

Variable refresh rate technology has also become increasingly popular in the smartphone industry. Reducing the number of screen refreshes when displaying static content allows manufacturers to improve battery life. Take a gallery application, for example. There’s no need to refresh the screen 120 times per second until you swipe to the next picture.

Whether your device’s display needs variable refresh rate support depends on the intended use case. For devices permanently plugged into the wall, you may not notice any benefit outside of gaming.

Response time

Response time refers to the time it takes for a display to transition from one color to another. It is usually measured from black to white or gray to gray (GtG) and quoted in milliseconds.

A lower response time is desirable because it reduces ghosting and blurriness, which appear when the display cannot keep up with fast-moving content.

Slow response times can result in trailing shadows behind fast moving objects.

Most monitors these days claim to have response times around 10ms. That figure is perfectly acceptable for content viewing, especially since at 60Hz, the display only refreshes every 16.67 milliseconds. If the display takes longer than 16.67ms at 60Hz, however, you’ll notice a shadow following moving objects. This is commonly referred to as ghosting.
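The comparison above boils down to dividing the response time by the refresh interval. A minimal sketch, using the simplified threshold from the paragraph (a transition longer than one refresh interval causes ghosting) and example panel figures chosen for illustration:

```python
def transition_share(response_ms: float, refresh_hz: float) -> float:
    """Fraction of one refresh interval a pixel spends changing color."""
    return response_ms / (1000 / refresh_hz)


for response_ms, hz in [(10, 60), (10, 144), (20, 60), (5, 144)]:
    share = transition_share(response_ms, hz)
    verdict = "finishes within the frame" if share <= 1 else "spills into the next frame (ghosting)"
    print(f"{response_ms} ms panel at {hz} Hz: {share:.0%} of a frame, {verdict}")
```

The same 10ms panel that is fine at 60Hz no longer keeps up at 144Hz, where each frame lasts under 7ms, which is why high refresh rate monitors also advertise faster response times.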

Televisions and smartphones tend to have slightly higher response times because of the heavy image processing involved. Still, you’re unlikely to notice the difference while simply browsing the internet or watching videos.

At the other end of the spectrum, you’ll find gaming monitors that advertise 1ms response times. In reality, that number might be closer to 5ms. Still, a lower response time coupled with a high frame rate means that new information gets delivered to your eyes sooner. And in highly competitive scenarios, that’s all it takes to gain an edge over your opponent.


To that end, sub-10 millisecond response times are only necessary if you’re primarily using the display for gaming.

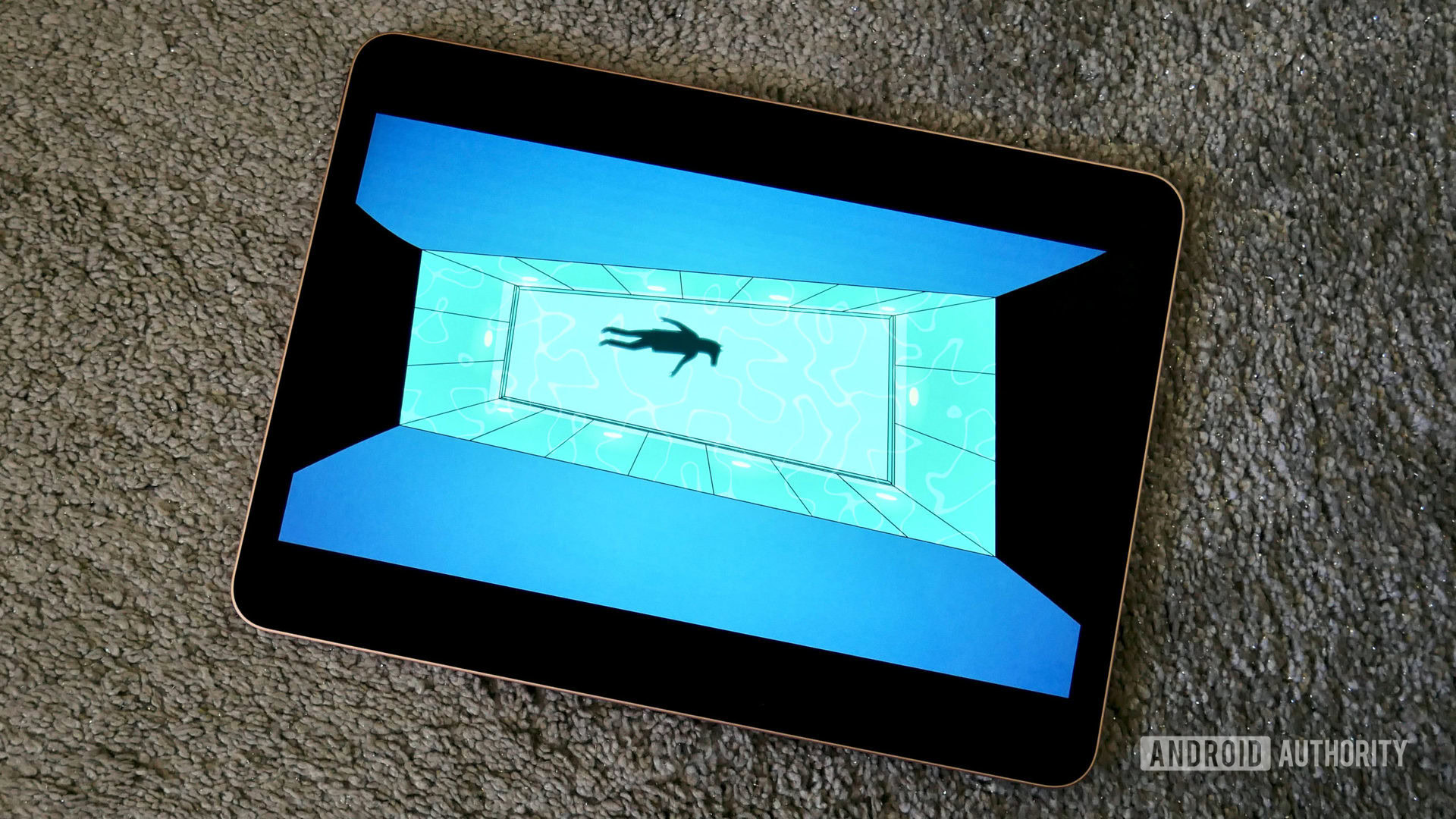

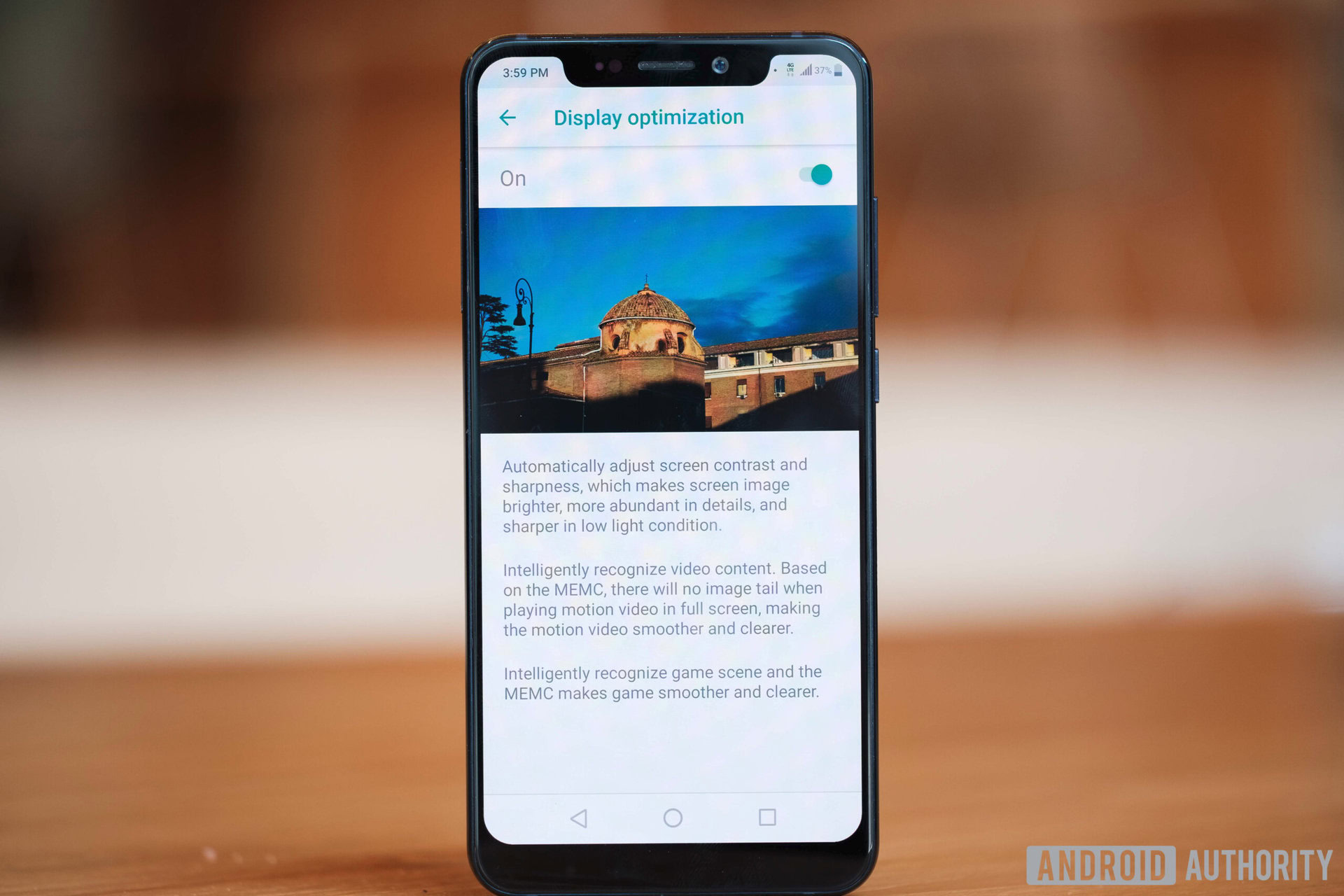

Motion smoothing or MEMC

MEMC is an initialism for Motion Estimation and Motion Compensation. You’ll find this feature on a variety of devices these days, ranging from televisions to smartphones.

In a nutshell, MEMC involves adding artificial frames to make low framerate content appear smoother. The goal is usually to match the content’s frame rate to the refresh rate of the display.

Films are typically shot at 24fps. Video captured on a smartphone might be 30fps. Motion smoothing allows you to double, or even quadruple, this figure. As the name suggests, MEMC estimates how objects move between consecutive frames and uses that motion to generate the in-between frames. The display’s onboard chipset is typically responsible for this function.
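The core idea can be illustrated with a toy, one-dimensional version: estimate how far an object moved between two frames with simple block matching, then draw it halfway along that path. Real MEMC chips perform 2D motion estimation on buffered frames in dedicated hardware, and this sketch is not any manufacturer’s algorithm. Naively blending the two frames is shown for comparison, since it produces a doubled, half-bright object instead of one that actually moved.

```python
def estimate_shift(prev, nxt, max_shift):
    """Block matching: find the shift that maps prev onto nxt with the lowest sum of absolute differences."""
    n = len(prev)
    best_shift, best_cost = 0, float("inf")
    for shift in range(-max_shift, max_shift + 1):
        cost = sum(abs(prev[i] - nxt[i + shift]) for i in range(n) if 0 <= i + shift < n)
        if cost < best_cost:
            best_shift, best_cost = shift, cost
    return best_shift


def interpolate(prev, shift, t):
    """Synthesize an in-between frame by moving prev a fraction t along the motion vector."""
    n = len(prev)
    offset = round(shift * t)
    frame = [0.0] * n
    for i, value in enumerate(prev):
        if 0 <= i + offset < n:
            frame[i + offset] = value
    return frame


# A bright object moves from pixel 2 to pixel 6 between two 24fps frames.
frame_a = [0.0] * 12
frame_b = [0.0] * 12
frame_a[2] = frame_b[6] = 1.0

shift = estimate_shift(frame_a, frame_b, max_shift=5)
memc_mid = interpolate(frame_a, shift, 0.5)
blend_mid = [(a + b) / 2 for a, b in zip(frame_a, frame_b)]  # naive blending for comparison
print("estimated motion:", shift, "pixels")
print("MEMC in-between frame: object at pixel", memc_mid.index(1.0))
print("blended frame:", [i for i, v in enumerate(blend_mid) if v > 0], "(two half-bright copies)")
```

Note that the in-between frame can only be built once the next frame has arrived, so the display has to hold frames back. When the motion estimate is wrong, the interpolated frame shows visible artifacts.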

Read more: Not all 120Hz smartphone displays are made equally — here’s why

Implementations of MEMC vary between manufacturers and even devices. However, even the best ones may look fake or distracting to your eyes. Motion smoothing tends to introduce the so-called soap opera effect, making things look unnaturally smooth. The good news is that you can usually turn it off in the device’s settings.

Motion smoothing can look fake or unnatural to the trained eye. Thankfully, you can turn the feature off!

The extra processing from MEMC may also increase input lag, the delay before new frames appear on screen. To that end, most monitors don’t include the feature. Even smartphone manufacturers like OnePlus limit MEMC to certain apps like video players.

And that’s everything you need to know about display specifications and settings!