What is FreeSync? AMD's display synchronization technology explained

Today’s displays offer a ton of features. We have come from the flickering and laggy displays of yesteryear to super quick displays that respond faster than most of us can notice. However, all those new features brought a new set of problems with them. As display refresh rates and GPU frame rates increased, displays started needing help to keep the two in sync. That’s where AMD FreeSync comes in.

Over the years, there have been many display synchronization technologies that have aimed to solve screen tearing and other display artifacts. Being one of the two major GPU makers, AMD has its own solution. We know it as FreeSync. Let’s take a deeper look at how it works, and whether you should be using it.

What is screen tearing?

One of the most important parameters of a display is the refresh rate, which is the number of times your monitor refreshes every second. Closely related is the frame rate, which is the number of frames the GPU renders per second. When the two fall out of sync, the display can show a jagged horizontal split, which looks like the image on the screen is tearing.

It’s a visual artifact where the display shows information from different frames at once. The same syncing issues can also cause other artifacts like stuttering and juddering.
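To make the mismatch concrete, here is a minimal Python sketch of where a tear would land on screen. It rests on simplifying assumptions that are not from the article: the monitor draws top to bottom over the whole refresh period, a finished frame replaces the old one instantly, and both clocks start at the same moment. The function name `tear_rows` is made up for illustration.

```python
from fractions import Fraction


def tear_rows(refresh_hz: int, fps: int, height_px: int, frames: int) -> list[int]:
    """Return the screen row at which each unsynchronized buffer swap lands.

    Assumptions (illustrative only): scanout runs top to bottom and takes
    the whole refresh period, and a finished frame replaces the old one
    instantly. Row 0 means the swap coincided with the start of a refresh,
    so that frame shows no tear.
    """
    rows = []
    for k in range(1, frames + 1):
        swap_time = Fraction(k, fps)          # moment frame k is finished, in seconds
        phase = (swap_time * refresh_hz) % 1  # fraction of the current scanout already drawn
        rows.append(int(phase * height_px))   # rows above this still show the older frame
    return rows


print(tear_rows(refresh_hz=60, fps=100, height_px=1080, frames=5))
print(tear_rows(refresh_hz=60, fps=60, height_px=1080, frames=5))
```

With a 60 Hz monitor and a GPU running at 100 fps, the first call prints `[648, 216, 864, 432, 0]`: the split jumps around the screen from frame to frame. When both rates are 60 and start together, every swap lands on row 0 and no tear is visible in this simplified model.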

See also: Display specs and terms explained — Resolution, contrast, color gamut, and more

V-Sync and the evolution to FreeSync

Screen synchronization technologies emerged to combat screen tearing and other display artifacts. FreeSync is a fairly recent entrant in this space, and several others came before it. The first milestone was V-Sync, a software-based solution. It worked by making the GPU hold back the frames in its buffer until the monitor was ready to refresh. This was a solid beginning and worked fine on paper, but it came with a glaring downside.

Being a software solution, V-Sync could not synchronize the frame rate and refresh rate quickly enough. Holding finished frames back until the next refresh delays what reaches the screen, and since the screen artifacts occur mostly when the two rates are high, this led to an unacceptable problem: input lag.
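The cost of holding frames back can be put into numbers. The sketch below is only an illustration, assuming refreshes happen at exact multiples of the refresh period starting at time zero. `vsync_wait_ms` is a hypothetical helper, not part of any driver API.

```python
import math
from fractions import Fraction


def vsync_wait_ms(frame_done_ms: Fraction, refresh_hz: int) -> Fraction:
    """Time a finished frame sits in the buffer until the next refresh.

    Assumption (illustrative only): refreshes occur at 0, T, 2T, ... where
    T is the refresh period. Exact fractions avoid rounding surprises.
    """
    period_ms = Fraction(1000, refresh_hz)
    # Index of the first refresh at or after the moment the frame is done.
    next_index = math.ceil(frame_done_ms / period_ms)
    return next_index * period_ms - frame_done_ms


# A frame finished 20 ms in, on a 60 Hz monitor, waits for the 33.3 ms refresh.
print(float(vsync_wait_ms(Fraction(20), 60)))
```

In this example the frame waits roughly 13.3 ms before it is shown, which is time added on top of however long the frame took to render.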

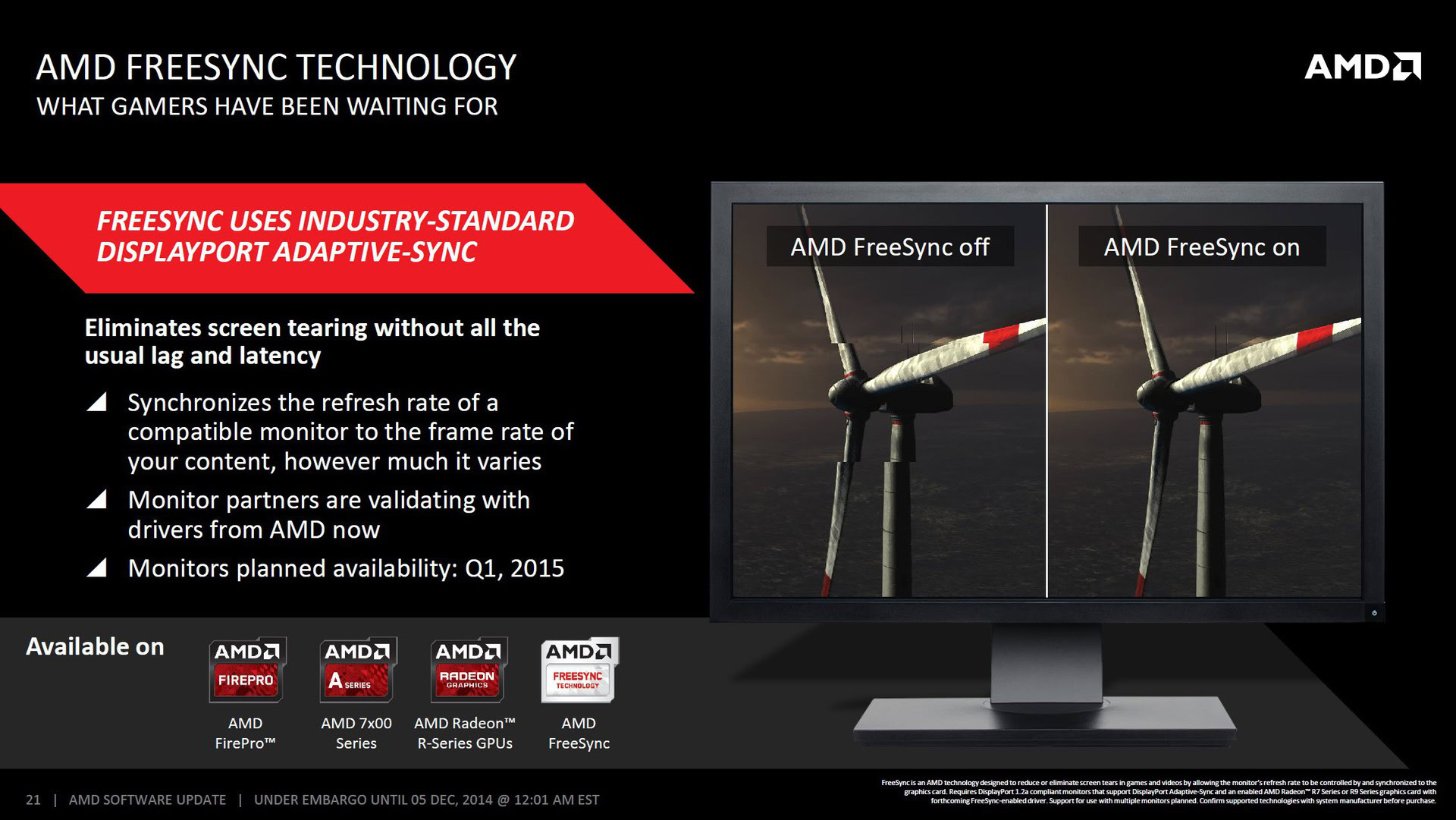

One of the big breakthroughs in this area came from the Video Electronics Standards Association, a.k.a. VESA. VESA introduced Adaptive-Sync in 2014 as an addition to the DisplayPort display standard. AMD in 2015 then released its own solution, based on VESA Adaptive-Sync, called FreeSync.

What is FreeSync?

FreeSync is based on VESA’s Adaptive-Sync and uses dynamic refresh rates to make sure that the refresh rate of the monitor matches the frame rates being put out by the GPU. AMD’s solution has the graphics processor control the refresh rate of the monitor, adjusting it to match the frame rate so that the two rates don’t mismatch.

It relies on a technology that has become quite popular in recent years: variable refresh rate (VRR). With VRR, FreeSync can direct the monitor to lower or raise its refresh rate to match GPU performance. This active switching ensures that the monitor doesn’t refresh in the middle of a frame being pushed out. This takes care of screen artifacts like tearing, stuttering, and other inconsistencies that can pop up when there is a lack of sync.
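As a rough mental model, variable refresh rate means the monitor follows the GPU's frame rate as long as that rate stays inside the range the panel supports. The sketch below shows only that idea. The 48 to 144 Hz range is a hypothetical example, and real drivers and monitors negotiate timing per frame rather than through a function like this.

```python
def vrr_refresh_hz(fps: float, min_hz: float, max_hz: float) -> float:
    """Refresh rate a VRR monitor would run at for a given GPU frame rate.

    Assumption (illustrative only): the panel follows the frame rate exactly
    inside its supported range and is pinned to the nearest limit outside it.
    """
    if min_hz > max_hz:
        raise ValueError("min_hz must not exceed max_hz")
    return max(min_hz, min(fps, max_hz))


# Hypothetical 48-144 Hz panel.
for fps in (30, 90, 200):
    print(fps, "fps ->", vrr_refresh_hz(fps, 48, 144), "Hz")
```

Inside the range the two rates match, so a refresh never starts partway through a frame. Outside the range the panel can no longer follow the GPU, which is where the old sync problems can creep back in.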

FreeSync, of course, needs to forge a connection between the GPU and the monitor. It does this by communicating with the scaler board present in the monitor. It does not need specialized dedicated hardware in the monitor, which makes it easier to implement than other display synchronization technologies.

See also: GPU vs CPU: What’s the difference?

Why do you need FreeSync?

Imagine having a fancy graphics card and monitor, but no guarantee that they’ll work together flawlessly. FreeSync is that guarantee: it eliminates screen tearing and other visual artifacts to give you a smooth experience. So, do you need FreeSync? The answer is yes, especially if you have a gaming setup, unless you’re planning to rely on NVIDIA’s G-Sync instead.

FreeSync is also a free standard, which means FreeSync-enabled monitors don’t come with an extra cost, and it also works with a wide variety of graphics cards.

Alternatively: What is G-Sync?

Does FreeSync make you better at games?

FreeSync makes your gaming experience smoother and helps ensure your GPU and monitor are synchronized. But does it make you better at games? That depends on how you look at it. It eliminates a problem, much like a more stable internet connection would if your ping were too high. So it improves the quality of your gaming experience the way a lower ping would.

It certainly won’t make you a better gamer, as that comes down to your skills. It’s more like a bug fix that makes the game itself better. Think of it not as an advantage, but rather as one disadvantage eliminated.

FreeSync vs FreeSync Premium vs FreeSync Premium Pro monitors and TVs

FreeSync is an AMD technology, and AMD certifies monitors against a set of criteria grouped into three tiers: FreeSync, FreeSync Premium, and FreeSync Premium Pro. While AMD does not require dedicated hardware, it still runs a certification process using these tiers.

FreeSync, the most basic of the three tiers, promises a tear-free experience and low latency. FreeSync Premium also promises these features but raises the bar for the display characteristics. It needs at least a 120 Hz refresh rate at a minimum resolution of Full HD. It also comes with low frame rate compensation (LFC), which kicks in when the frame rate falls below the minimum refresh rate of the monitor. In this case, FreeSync displays each frame more than once so that the effective refresh rate stays above the monitor’s minimum.
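The arithmetic behind low frame rate compensation can be sketched in a few lines. This is an illustration of the general idea only: AMD's actual algorithm is not described in this article, and both the `lfc_plan` name and the example ranges are hypothetical.

```python
from typing import Optional, Tuple


def lfc_plan(fps: int, min_hz: int, max_hz: int) -> Optional[Tuple[int, int]]:
    """Pick how many times to show each frame so the panel stays in range.

    Returns (repeats, refresh_hz), or None when no whole multiple of the
    frame rate fits between min_hz and max_hz. Illustrative sketch only.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps >= min_hz:
        # Already inside (or above) the range: show each frame once.
        return 1, min(fps, max_hz)
    # Smallest whole number of repeats that lifts the rate to min_hz or more.
    repeats = (min_hz + fps - 1) // fps
    refresh_hz = fps * repeats
    if refresh_hz > max_hz:
        return None  # the range is too narrow for frame repetition to help
    return repeats, refresh_hz


print(lfc_plan(30, 48, 144))  # hypothetical 48-144 Hz panel
print(lfc_plan(40, 48, 75))   # hypothetical narrow 48-75 Hz panel
```

On the wide-range panel, a 30 fps game has each frame shown twice, so the monitor runs at 60 Hz and the first call prints `(2, 60)`. On the narrow panel, doubling 40 fps overshoots the 75 Hz ceiling, so the sketch returns `None`. This suggests why a wide refresh range matters for this feature.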

Last is the FreeSync Premium Pro tier. Formerly known as FreeSync 2 HDR, this tier adds an HDR requirement. This means the monitor needs a certification for its color and brightness standards, which include the HDR 400 spec. The display panel needs to hit at least 400 nits of brightness. In addition, this tier requires low latency with SDR as well as HDR.
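The tier rules described above can be summed up as a small decision function. This sketch encodes only the criteria mentioned in this article and assumes the display has already passed the basic FreeSync checks. AMD's real certification involves testing that a function like this cannot capture, and `freesync_tier` is a hypothetical name.

```python
from typing import Optional


def freesync_tier(
    width_px: int,
    height_px: int,
    max_refresh_hz: int,
    has_lfc: bool,
    hdr_peak_nits: Optional[int] = None,
) -> str:
    """Guess the FreeSync tier from the criteria listed in the article.

    Assumption (illustrative only): the display already meets the base
    tier's tear-free and low-latency requirements.
    """
    full_hd_or_better = width_px >= 1920 and height_px >= 1080
    premium = full_hd_or_better and max_refresh_hz >= 120 and has_lfc
    # Premium Pro adds HDR on top of Premium, with at least 400 nits.
    if premium and hdr_peak_nits is not None and hdr_peak_nits >= 400:
        return "FreeSync Premium Pro"
    if premium:
        return "FreeSync Premium"
    return "FreeSync"


print(freesync_tier(1920, 1080, 75, has_lfc=False))
print(freesync_tier(2560, 1440, 144, has_lfc=True))
print(freesync_tier(3840, 2160, 144, has_lfc=True, hdr_peak_nits=600))
```

The three calls print `FreeSync`, `FreeSync Premium`, and `FreeSync Premium Pro` respectively.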

There’s a wide range of monitors that support this technology, and AMD’s certification also extends to TVs. AMD publishes full lists of FreeSync monitors and FreeSync TVs.

FreeSync system requirements

To use AMD FreeSync, you will need a compatible laptop, or a compatible monitor or TV paired with an AMD APU or GPU. You’ll also need the latest graphics driver.

Compatible GPUs include all AMD Radeon GPUs, starting with Radeon RX 200 Series, released in 2013. Radeon consumer graphics products that use GCN 2.0 architecture and later are supported. All Ryzen APUs are also supported. The Xbox Series X/S and Xbox One X/S also support FreeSync. FreeSync runs over a DisplayPort or HDMI connection.

AMD says other GPUs that support DisplayPort Adaptive-Sync, like the NVIDIA GeForce 10 Series and later, should also work fine with FreeSync. NVIDIA supports such displays under its G-Sync Compatible label, so if you have one of those displays, you should be covered.

See also: AMD vs NVIDIA – what’s the best add-in GPU for you?

FreeSync vs G-Sync

G-Sync is NVIDIA’s display synchronization technology. It uses a custom proprietary board instead of the typical scaler board FreeSync uses. FreeSync thus has a solid lead over G-Sync to begin with, given that it doesn’t need dedicated hardware. This means FreeSync has wider support, and it also doesn’t come with royalty fees attached, making it the more affordable option.

Head-to-head: FreeSync vs G-Sync — Which one should you pick?

FreeSync is thus arguably the best display synchronization technology on the market. However, since NVIDIA has more market share with GPUs, G-Sync has become pretty common as well. For someone looking to buy a new monitor but not sure about their choice of GPU, it would make more sense to go for a G-Sync Compatible monitor, since those generally work with FreeSync as well.
