Affiliate links on Android Authority may earn us a commission.

Display specs: the good, the bad, and the completely irrelevant

Let’s talk display specifications. I don’t mean which screen has the highest brightness or contrast numbers, or which uses the latest and greatest technology; I want to talk about the specifications themselves. Which ones are really important? Which ones really don’t matter (at least not nearly as much as marketing departments would have us believe)?

Believe it or not, some of the specs trumpeted the most really don’t have all that much to do with whether or not the display is any good.

Contrast ratio

Take contrast. It’s a pretty simple concept: measure the brightness of the display in a white area and a black area, and the contrast ratio is simply the ratio of those two numbers. Obviously, the bigger the number, the better the display is going to look, right?

A display can only get so bright, and presumably that’s the value you measure for the white. Let’s face it: no real-world display is designed to be eye-searingly bright. So a display’s contrast ratio is pretty much always determined by how dark the blacks get. With the advent of OLEDs, that can be pretty dark, indeed.


OLEDs emit light according to how much current is driven through them, and if you switch the current off completely, no light at all is emitted. Zero or near-zero emission in the “black” state makes for some staggeringly high contrast ratio numbers. Some OLED phones claim contrast ratio specs of a hundred thousand to one or even a million to one, and some makers have even claimed “infinite” contrast for their OLED screens.

The problem is that these numbers are what you’d get if you measured the black level in a totally dark, non-reflecting environment (assuming you could actually measure such low black levels; in practice, this requires some pretty sophisticated equipment). Under normal viewing conditions, even in a fairly dark room, the delivered contrast of most displays is limited by ambient light reflected from the screen (including the display’s own light, bounced back onto its surface by the surroundings). That reflected light, not the panel’s own black level, is what really sets the “black” brightness. Under typical viewing conditions, with a reasonable level of ambient light, most screens deliver an effective contrast of 50:1 to 100:1 at best. Approaching 200:1, let alone exceeding it, is outstanding.
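To see why ambient light swamps the dark-room number, here is a minimal Python sketch. It assumes the screen behaves as an ideal diffuse (Lambertian) reflector, so the reflected luminance is reflectance × illuminance / π; the panel figures (500 nits white, 0.0005 nits black), the 5% reflectance, and the 500-lux office are illustrative assumptions rather than measurements of any real phone, and the function names are hypothetical.

```python
import math


def reflected_luminance(illuminance_lux: float, reflectance: float) -> float:
    """Luminance (cd/m^2, "nits") of ambient light bounced off the screen.

    Assumes an idealized diffuse (Lambertian) reflector: L = R * E / pi.
    Real screens also have a mirror-like (specular) component.
    """
    return reflectance * illuminance_lux / math.pi


def effective_contrast(white_nits: float, black_nits: float,
                       illuminance_lux: float = 0.0, reflectance: float = 0.0) -> float:
    """Contrast once reflected ambient light is added to both white and black."""
    stray = reflected_luminance(illuminance_lux, reflectance)
    denominator = black_nits + stray
    if denominator == 0:
        return math.inf  # zero black in total darkness: the "infinite contrast" claim
    return (white_nits + stray) / denominator


# Hypothetical panel: 500 nits white, 0.0005 nits black (1,000,000:1 in the dark).
dark_room = effective_contrast(500, 0.0005)
# Same panel, assumed 5% reflectance, in an assumed ~500 lux office.
office = effective_contrast(500, 0.0005, illuminance_lux=500, reflectance=0.05)
print(f"dark room: {dark_room:,.0f}:1")
print(f"office:    {office:,.0f}:1")
```

With these assumed numbers, the million-to-one panel delivers only about 64:1 in the office, and a perfectly black ("infinite" contrast) panel does no better, because the reflected light added to both white and black dominates the denominator. Halving the reflectance, by contrast, roughly doubles the effective figure.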

So the bottom line? Beyond a certain level – and definitely by the time you get past the hundreds or low thousands to one – contrast ratio specs as they’re usually quoted are virtually meaningless, unless you do your viewing in a very dark room. What you really should be looking at is the screen’s reflectance (the lower the better) and the actual delivered contrast under real-world conditions.


Color gamut

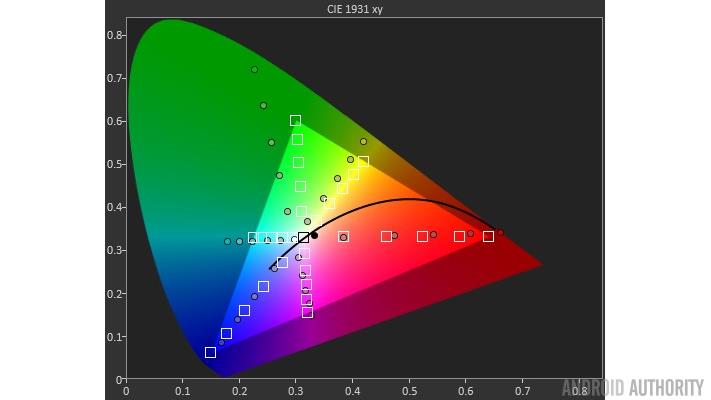

Another spec where the “bigger is always better” mindset leads us astray is color gamut, which, simply put, is the range of colors (or the fraction of the total visible “color space”) the display is capable of producing. Typically, color gamut specs are given as a percentage of a particular reference space or gamut; the traditional reference was the gamut used in the original U.S. color TV standard, the so-called “NTSC gamut.” Some displays claim “105% NTSC” or something similar, which leads us to believe that bigger gamut numbers mean a better display.


In reality, simply providing a larger gamut does nothing for the quality or accuracy of the image. Still pictures and videos are made with a specific set of “color space” specs in mind, including the display gamut. Unless the display matches those specs (or has color management software to compensate), the resulting image won’t be accurate.

Show a given picture on a display with a gamut significantly larger than the one the image was made for, and the colors will look oversaturated and cartoonish.

What you really want is not a display with a big gamut percentage, but one whose gamut closely matches the intended color space of the images you’ll be viewing. Almost all TV programming and digital camera images today are produced for the sRGB/“Rec. 709” gamut, which itself covers only about 72% of the area of the standard NTSC reference. More recent standards, such as the digital cinema DCI-P3 gamut or that of the ultra-high-definition TV “Rec. 2020” standard, are a good deal larger, but the point still isn’t just to get a big percentage number; it’s to match the standard gamut as closely as possible.
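Gamut percentages like these are ratios of triangle areas on a chromaticity diagram. A minimal Python sketch, assuming CIE 1931 xy coordinates and the published red, green, and blue primaries of each standard, computes those areas with the shoelace formula; the dictionary and function names are illustrative only.

```python
# CIE 1931 xy chromaticities of the red, green, and blue primaries.
GAMUTS = {
    "NTSC (1953)": [(0.67, 0.33), (0.21, 0.71), (0.14, 0.08)],
    "sRGB / Rec. 709": [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec. 2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points):
    """Area of a gamut triangle via the shoelace formula."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


def percent_of(gamut, reference="NTSC (1953)"):
    """Gamut area as a percentage of the reference gamut's area (xy diagram)."""
    return 100 * triangle_area(GAMUTS[gamut]) / triangle_area(GAMUTS[reference])


for name in GAMUTS:
    print(f"{name:16s} {percent_of(name):6.1f}% NTSC")
```

In xy coordinates sRGB comes out to roughly 71% of the NTSC area, close to the "about 72%" figure usually quoted (the exact number depends on which chromaticity diagram the comparison uses), while DCI-P3 lands near 96% and Rec. 2020 near 134%. Note what the single percentage hides: it measures area, not position, so two displays with the same "% NTSC" can cover quite different sets of colors, and neither may line up with the triangle your content was made for.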

Color bit depth

While we’re on color-related specs, there’s another one that’s often abused and generally misunderstood. It goes by several names, but usually we see it as “color bit depth” or “number of colors.” This one is pretty simple to grasp: if your display can handle, say, eight bits of data for each of the red, green, and blue primaries, then you have the ability to make 256 different “gray levels” for each of these (since 2⁸ = 256). If that’s the case, then we should be able to make:

256 (reds) x 256 (greens) x 256 (blues) = 16.78 million different colors!

That’s good, right? Clearly more color variety is always better. Why not bump it up to 10 bits of control for each primary? Wow, now we’re up to more than a billion colors!

Not so fast. First of all, “color” is really just a perception; it’s something made up by our own visual systems, and has no real physical existence or meaning. How many different colors are our eyes capable of distinguishing? The answer comes out to be something around a few million, tops. Any claims of distinct colors numbering much greater than this are, from a perceptual standpoint, nonsense.
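The “number of colors” figure is just the per-channel level count cubed. A short Python sketch (the function names are illustrative) makes that arithmetic explicit; keep in mind that it counts distinct RGB code combinations the electronics can carry, not colors a viewer can actually tell apart.

```python
def levels_per_channel(bits: int) -> int:
    """Distinct code values one primary (R, G, or B) can take: 2 ** bits."""
    return 2 ** bits


def total_colors(bits_per_channel: int) -> int:
    """Distinct RGB code combinations: levels(R) x levels(G) x levels(B)."""
    return levels_per_channel(bits_per_channel) ** 3


for bits in (6, 8, 10):
    print(f"{bits:2d} bits/channel: {levels_per_channel(bits):5,d} levels, "
          f"{total_colors(bits):,} code combinations")
```

For 8 bits per channel this gives 256 levels and 16,777,216 combinations; for 10 bits, 1,024 levels and 1,073,741,824 combinations, far more than the few million colors our eyes can distinguish.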


More bits per color (within reason) can be useful in many situations. It’s just that the total “number of colors” isn’t a very helpful way to judge them. Whether or not a display can really produce a given number of visually distinct levels or colors depends on both the number of bits and how well the display matches the desired response, or “gamma,” curve (keep an eye out for our breakdown of this soon).
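One way the response curve and the bit count interact can be sketched in Python. The pipeline here is hypothetical: it assumes a panel whose native response is a gamma of 2.6, which the display electronics digitally remap to a 2.2 target before driving the panel. Re-quantizing that remap to the same 8 bits merges some neighboring input codes, so fewer than 256 distinct levels reach the screen, while carrying two extra output bits preserves all of them.

```python
def distinct_levels_after_remap(exponent: float, out_bits: int = 8, in_bits: int = 8) -> int:
    """Count distinct output codes after a power-law remap of every input code.

    Hypothetical pipeline: each input code is normalized to 0..1, raised to
    `exponent` (to retarget the panel's native gamma), then rounded to an
    `out_bits` code. Input codes that land on the same output code merge.
    """
    in_max = 2 ** in_bits - 1
    out_max = 2 ** out_bits - 1
    outputs = {round(out_max * (code / in_max) ** exponent) for code in range(in_max + 1)}
    return len(outputs)


# Assumed example: native panel gamma 2.6, target gamma 2.2.
retarget = 2.2 / 2.6
print("no remap, 8-bit drive: ", distinct_levels_after_remap(1.0))
print("remap,    8-bit drive: ", distinct_levels_after_remap(retarget))
print("remap,   10-bit drive: ", distinct_levels_after_remap(retarget, out_bits=10))
```

The exponent, bit depths, and function name are illustrative assumptions; real displays may apply such corrections with lookup tables, dithering, or other techniques not modeled here.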

We’ll look at some others in more detail later, but for now here’s my list of the top good – and bad – display specifications:

| # | Don't worry so much about... | Instead, look for... |
|---|---|---|
| 1 | Absolute, "dark room" contrast (beyond 2,000-3,000:1 or so) | Contrast under expected ambient light conditions and low screen reflectance |
| 2 | Huge color gamut percentage numbers | A good match to the color gamut(s) of the color space your images were made for |
| 3 | Huge "number of colors" specs | Good color accuracy numbers (measured in terms of "ΔE*" error; lower is better, and 1.0 or under is essentially perfect) and the correct "gamma" |
| 4 | Standard total/gray-to-gray (GtG) response time specs (as long as they're well under a frame time) | "Moving picture" response time (MPRT) and similar motion-based response specs (moving edge blur, etc.) |
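For the ΔE* figures in the table, the simplest version of the metric (CIE 1976, often written ΔE*ab) is the straight-line distance between the target and measured colors in CIELAB (L*, a*, b*) coordinates. The Python sketch below assumes that formula and uses made-up patch values; reviews and calibration tools often report newer variants such as CIEDE2000, which weight the differences differently and give different numbers for the same measurement.

```python
import math
from statistics import mean


def delta_e_76(lab_target, lab_measured):
    """CIE 1976 color difference: Euclidean distance in CIELAB (L*, a*, b*)."""
    return math.dist(lab_target, lab_measured)


# Hypothetical test patches: (target L*a*b*, measured L*a*b*).
patches = [
    ((50.0, 20.0, -10.0), (50.5, 20.6, -10.3)),
    ((70.0, -15.0, 30.0), (70.0, -15.0, 30.0)),
    ((30.0, 5.0, 5.0), (30.3, 5.4, 5.0)),
]
errors = [delta_e_76(target, measured) for target, measured in patches]
print("per patch:", [f"{e:.2f}" for e in errors])
print(f"average dE*: {mean(errors):.2f}")
```

Here the three hypothetical patches come out at about 0.84, 0, and 0.5, for an average near 0.45, which would fall in the "essentially perfect" range the table describes.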

Wrap-up

These are just a few examples of how looking only at the spec numbers, without understanding what they mean, can lead us astray in judging the overall quality of a display.