What being an “AI first” company means for Google

Published on November 8, 2017

Back at Google I/O 2017, CEO Sundar Pichai outlined the company’s vision as an “AI first” company, with a new focus on contextual information, machine learning, and using intelligent technology to improve customer experience. The launches of the Pixel 2 and 2 XL, the latest batch of Google Home products, and Google Clips offer a glimpse into what this long-term strategic shift could mean. We’ll get to Google’s latest smartphones in a minute, but there’s much more to explore about the company’s strategy.

As part of the Google I/O 2017 keynote, Sundar Pichai announced that the company’s various machine learning and artificial intelligence efforts and teams are being brought together under a new initiative called Google.ai. Google.ai will be focusing not only on research, but on developing tools such as TensorFlow and its new Cloud TPUs, and “applied AI”.

For consumers, Google’s products should end up smarter, seemingly more intelligent, and, most importantly, more useful. We’re already using some of Google’s machine learning tools. Google Photos has built-in algorithms to detect people, places, and objects, which are helpful for organizing your content. RankBrain is used by Google within Search to better understand what people are looking for and how that matches the content it has indexed.

But Google hasn’t been doing all of this work alone: the company has made over 20 corporate acquisitions related to AI so far. Google is leading the field when it comes to snatching up AI tech, followed closely by Microsoft and Apple. Most recently, Google purchased AIMatter, a company that owns a neural network-based image detection and photo editing AI platform and SDK. Its app, Fabby, offers a range of photo effects capable of changing hair color, detecting and altering backgrounds, adjusting make-up, and more, all based on image detection. In 2016, Google acquired Moodstocks for its image recognition software, which can detect household objects and products using your phone camera; it’s like a Shazam for images.

That’s just a taste of the potential of machine learning-powered applications, but Google is also pursuing further development. The company’s open-source TensorFlow software library and tools are among the most useful resources for developers looking to build their own machine learning applications.

TensorFlow at the heart

TensorFlow is essentially a Python code library containing common mathematical operations necessary for machine learning, designed to simplify development. The library allows users to express these mathematical operations as a graph of data flows, representing how data moves between operations. The API also accelerates mathematically intensive neural network and machine learning algorithms across multiple CPUs and GPUs, including optional CUDA extensions for NVIDIA GPUs.
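To make the "graph of data flows" idea concrete, here is a minimal sketch in plain Python. It is not TensorFlow's API: the `Node` class and `evaluate` function are hypothetical names invented for this illustration, and the example only shows the general technique of describing a computation as a graph first and feeding data through it afterwards.

```python
import operator
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple


@dataclass(frozen=True)
class Node:
    """One vertex in the graph: an operation plus the nodes that feed it.

    Hypothetical illustration class, not part of TensorFlow.
    """
    name: str
    op: Optional[Callable[..., float]] = None  # None marks an input placeholder
    inputs: Tuple["Node", ...] = ()


def evaluate(node: Node, feed: Dict[str, float],
             cache: Optional[Dict[str, float]] = None) -> float:
    """Compute a node's value by first evaluating the nodes it depends on."""
    if cache is None:
        cache = {}
    if node.name in cache:          # each node is computed at most once
        return cache[node.name]
    if node.op is None:             # placeholder: read the value from the feed
        value = feed[node.name]
    else:                           # operation: evaluate inputs, then apply op
        args = [evaluate(parent, feed, cache) for parent in node.inputs]
        value = node.op(*args)
    cache[node.name] = value
    return value


# Describe the computation y = w * x + b as a graph. Nothing is computed yet.
x, w, b = Node("x"), Node("w"), Node("b")
product = Node("product", operator.mul, (w, x))
y = Node("y", operator.add, (product, b))

# Only now does data flow through the graph.
result = evaluate(y, {"x": 3.0, "w": 2.0, "b": 1.0})
print(result)
```

Separating the description of the computation from its execution is what lets a library decide where each operation runs, for example on a CPU or a GPU, without the developer rewriting the math.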

TensorFlow is the product of Google’s long-term vision and is now the backbone of its machine learning ambitions. Today’s open-source library started out in 2011 as DistBelief, a proprietary machine learning project used for research and commercial applications inside Google. The Google Brain division, which started DistBelief, began as a Google X project, but its wide use across Google projects, like Search, resulted in a quick graduation to its own division. TensorFlow and Google’s entire “AI first” approach are the result of long-term research, rather than a sudden change in direction.

TensorFlow is now also integrated into Android Oreo through TensorFlow Lite. This version of the library enables app developers to make use of many state-of-the-art machine learning techniques on smartphones, which don’t pack in the performance capabilities of desktop or cloud servers. There are also APIs that allow developers to tap into dedicated neural networking hardware and accelerators included in chips. This could make Android smarter too, with not only more machine-learning-based applications but also more features built into and running on the OS itself.

TensorFlow is powering many machine learning projects, and the inclusion of TensorFlow Lite in Android Oreo shows that Google is looking beyond cloud computing to the edge too.

Google’s efforts to help build a world full of AI products aren’t just about supporting developers though. The company’s recent People+AI Research Initiative (PAIR) is devoted to advancing the research and design of people-centric AI systems, to develop a humanistic approach to artificial intelligence. In other words, Google is making a conscious effort to research and develop AI projects that fit in with our daily lives or professions.

Marriage of hardware and software

Machine learning is an emerging and complicated field, and Google is one of the main companies leading the way. It demands not only new software and development tools, but also hardware to run demanding algorithms. So far, Google has been running its machine learning algorithms in the cloud, offloading the complex processing to its powerful servers. Google is already involved in the hardware business here, having unveiled its second-generation Cloud Tensor Processing Unit (TPU) earlier this year to accelerate machine learning applications efficiently. Google also offers free trials and sells access to its TPU servers through its Cloud Platform, enabling developers and researchers to get machine learning ideas off the ground without having to make the infrastructure investments themselves.

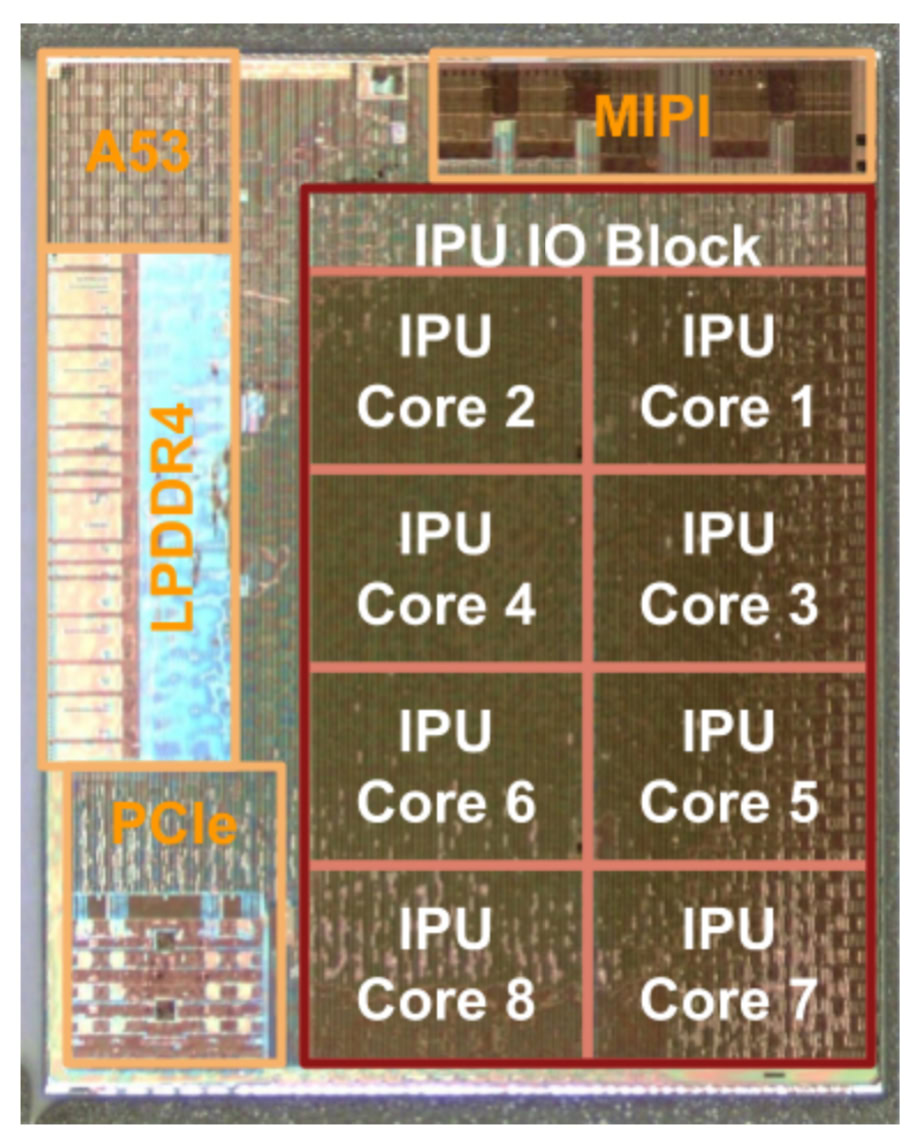

However, not all applications are suitable for cloud processing. Latency-sensitive situations like self-driving cars and real-time image processing, or privacy-sensitive information that you might want to keep on your phone, are better processed at the “edge”, in other words at the point of use rather than on a central server. To perform increasingly complex tasks efficiently, companies including Google, Apple, and HUAWEI are turning to dedicated neural network or AI processing chips. There’s one inside the Google Pixel 2, where a dedicated image processing unit (IPU) is designed to handle advanced image processing algorithms.

Much has been made of Google’s product strategy and whether or not the company wants to sell successful mass products and compete with major consumer electronics companies, or simply show the way forward with smaller batch flagship products. Either way, Google can’t provide all of the world’s machine learning solutions, just like it can’t provide every smartphone app, but the company does have the expertise to show hardware and software developers how to get started.

By providing both hardware and software examples to product developers, Google is showing the industry what can be done, but isn’t necessarily intent on providing everything itself. Just like how the Pixel line isn’t big enough to shake Samsung’s dominant position, Google Lens and Clips are there to demonstrate the type of products that can be built, rather than necessarily being the ones we end up using. That isn’t to say Google isn’t searching for the next big thing, but the open nature of TensorFlow and its Cloud Platform suggests that Google acknowledges that breakthrough products might come from somewhere else.

What’s next?

In many ways, future Google products will be business as usual from a consumer product design standpoint, with data seamlessly being passed to and from the cloud or processed on the edge with dedicated hardware to provide intelligent responses to user inputs. The intelligent stuff will be hidden from us, but what will change is the types of interactions and features we can expect from our products.

Google Clips, for example, demonstrates how products can perform existing functions more intelligently using machine learning. We’re bound to see photography and security use cases subtly benefit quite quickly from machine learning. But potential use cases range from improving the voice recognition and inference capabilities of Google Assistant to real-time language translation, facial recognition, and Samsung’s Bixby product detection.

Although the idea may be to build products that just appear to work better, we will probably eventually see some entirely new machine learning-based products too. Self-driving cars are an obvious example, but computer-assisted medical diagnostics, faster and more reliable airport security, and even banking and financial investments are ripe to benefit from machine learning.

Google’s AI first approach isn’t just about making better use of more advanced machine learning at the company, but also about enabling third parties to develop their own ideas. In this way, Google is looking to be the backbone of a broader AI first shift in computing.