Affiliate links on Android Authority may earn us a commission.

Google.ai and second-gen Cloud TPUs unveiled

Whether you’re aware of it or not, machine learning is a big part of your everyday smartphone usage and the backbone of a number of Google’s software products. As part of the Google I/O 2017 keynote, Sundar Pichai announced that the company’s various machine learning and artificial intelligence efforts and teams are being brought together under a new initiative called Google.ai. Google.ai will focus not only on research but also on developing tools, such as TensorFlow and the new Cloud TPUs, and on “applied AI”, in other words building practical solutions.

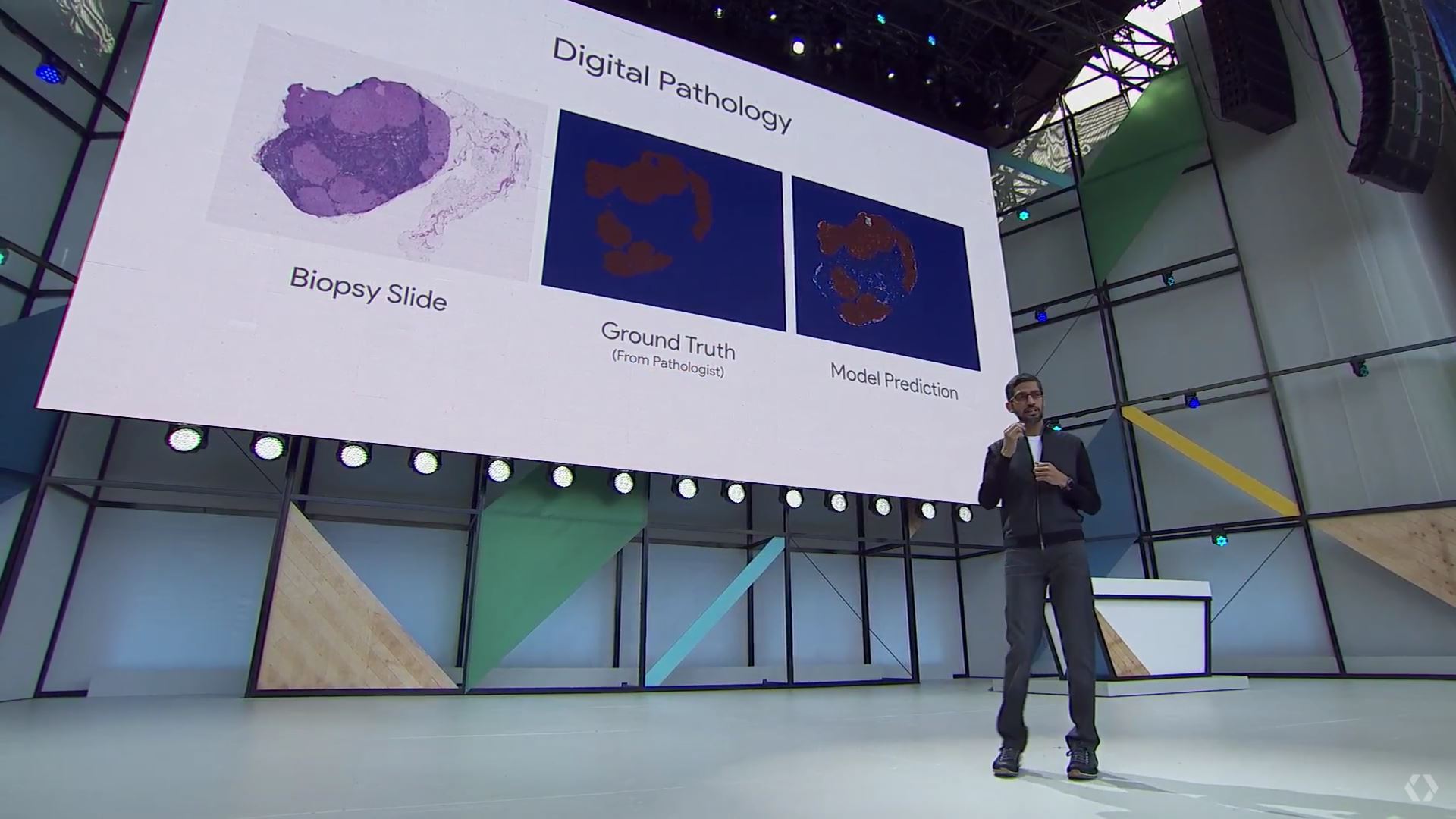

Although machine learning is still in its relative infancy, its tools are already making promising strides in a number of fields, including medical research. During the announcement, Pichai remarked that machine learning is being used to improve the accuracy of DNA sequencing, which helps identify genetic diseases, and that the company helped develop a neural net that can identify cancer spreading to adjacent cells by studying patient images.

Google.ai's AutoML initiative uses neural nets to help design other neural nets, and aims to lower the barrier to AI development.

This is all very promising stuff. To lower the barrier to developing new machine learning models, so that you don’t have to be a PhD researcher to get involved, Google also revealed a little about its AutoML initiative. Pichai described it as using neural nets to help design other neural nets, iteratively narrowing a set of candidate neural nets down to the best-performing design. This approach is based on reinforcement learning.
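To make the idea of “a neural net designing neural nets” a little more concrete, here is a toy sketch of reinforcement-learning-based architecture search, using the REINFORCE policy-gradient rule. It is not Google’s AutoML code: the search space (`num_layers`, `width`, `activation`), the scoring function `toy_reward`, and every parameter value are hypothetical. In a real system each sampled candidate would be trained and scored on validation accuracy, which is exactly what makes the process so computationally expensive. Google’s published research in this area used a recurrent-network controller; this sketch swaps in one independent softmax policy per design decision to stay short.

```python
import math
import random

# Hypothetical search space: names and values are illustrative only,
# not Google's actual AutoML search space.
SEARCH_SPACE = {
    "num_layers": [2, 4, 8],
    "width": [32, 64, 128],
    "activation": ["relu", "tanh"],
}


def toy_reward(arch):
    """Hypothetical stand-in for 'train the candidate net, measure validation accuracy'.

    This made-up score simply pretends the best design is 4 layers, width 64, ReLU.
    """
    reward = 1.0
    reward -= 0.0 if arch["num_layers"] == 4 else 0.3
    reward -= 0.0 if arch["width"] == 64 else 0.3
    reward -= 0.0 if arch["activation"] == "relu" else 0.2
    return reward


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def search(steps=3000, lr=0.1, seed=0):
    rng = random.Random(seed)
    # Controller: one softmax policy (a list of logits) per design decision.
    logits = {name: [0.0] * len(opts) for name, opts in SEARCH_SPACE.items()}
    baseline = None  # moving-average reward, used to reduce gradient variance
    best_arch, best_reward = None, -math.inf

    for _ in range(steps):
        # 1. Sample a candidate architecture from the current policy.
        choices = {}
        for name, opts in SEARCH_SPACE.items():
            probs = softmax(logits[name])
            choices[name] = rng.choices(range(len(opts)), weights=probs)[0]
        arch = {name: SEARCH_SPACE[name][i] for name, i in choices.items()}

        # 2. Score the candidate (toy reward instead of real training).
        reward = toy_reward(arch)
        if reward > best_reward:
            best_arch, best_reward = arch, reward

        # 3. REINFORCE: push up the log-probability of the sampled choices in
        #    proportion to how much better than the baseline they scored.
        if baseline is None:
            baseline = reward
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward
        for name, chosen in choices.items():
            probs = softmax(logits[name])
            for i, p in enumerate(probs):
                grad = (1.0 if i == chosen else 0.0) - p  # d log softmax / d logit
                logits[name][i] += lr * advantage * grad

    # The controller's most likely design once training is done.
    greedy = {
        name: opts[logits[name].index(max(logits[name]))]
        for name, opts in SEARCH_SPACE.items()
    }
    return greedy, best_arch, best_reward


if __name__ == "__main__":
    greedy, best_arch, best_reward = search()
    print("controller's preferred design:", greedy)
    print("best design sampled:", best_arch, "reward:", best_reward)
```

The baseline matters: without it, every sampled design gets reinforced whenever its reward is positive, and the controller learns far more slowly.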

This is a computationally expensive process, but Google believes that by opening up this technology to developers, we could see hundreds of thousands of new applications begin making use of machine learning. To do this, Google is extending support for this type of training to its newly announced second-generation TPUs, known as Cloud TPUs. At Google I/O, Pichai announced that Google’s Cloud Tensor Processing Unit (TPU) hardware will initially be available via Google Compute Engine, which lets customers create and run virtual machines on Google infrastructure and tap Google’s computing resources.

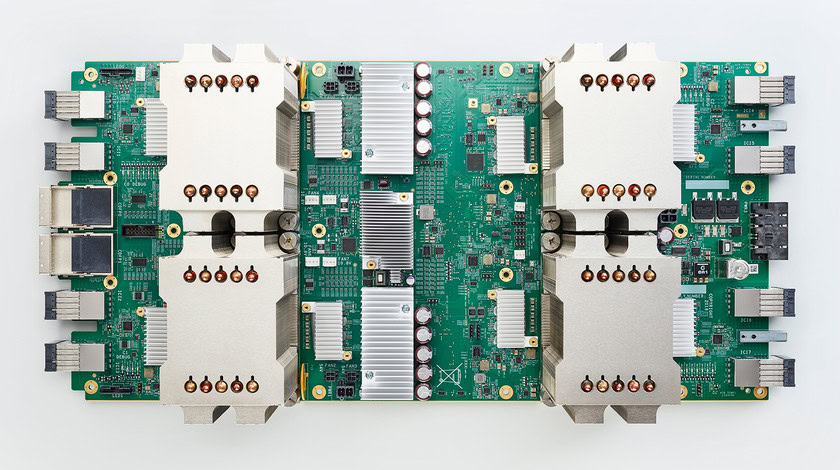

The 2nd gen Cloud TPU can now be used to train computationally intensive AI algorithms.

These TPUs are specifically optimized for machine learning, making them both more powerful and more power-efficient at these types of tasks than traditional CPUs and GPUs. TPUs power virtually all of Google’s impressive intelligent cloud-based products, including language translation and image recognition.

The second-generation TPU can deliver up to 180 teraflops of floating-point performance, and multiple units can be linked together in “pods” for additional power. A single TPU pod contains 64 of the latest Cloud TPUs and can therefore provide up to 11.5 petaflops of compute power for machine learning models. Importantly, the new TPUs now support training as well as inference. This means the hardware can be used to develop (train) computationally intensive AI models, not just for real-time number crunching, and this is what will be powering the AutoML initiative.
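The pod figure follows directly from the per-device number; both are peak figures as announced, not measured throughput on a real workload:

$$
64 \times 180\ \text{TFLOPS} = 11{,}520\ \text{TFLOPS} \approx 11.5\ \text{PFLOPS}
$$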

Of course, these TPUs work with Google’s TensorFlow open-source software library for machine learning. Speaking of which, the company has also unveiled its TensorFlow Research Cloud program, under which it will give researchers free access to a cluster of 1,000 TPUs. Google also says its Cloud TPUs can be mixed and matched with other hardware types, including Skylake CPUs and NVIDIA GPUs, which are often used by machine learning tools.

The merger of several groups under the Google.ai banner certainly shows that the company is committed to its machine learning platform and views these technologies as a key part of its strategy going forward. Google’s latest hardware and tools will hopefully not only enable some interesting new use cases but also open up machine learning development and applications to a range of new developers, which is sure to yield some innovative results. Interesting times ahead.