What is Google's Tensor chip? Everything you need to know

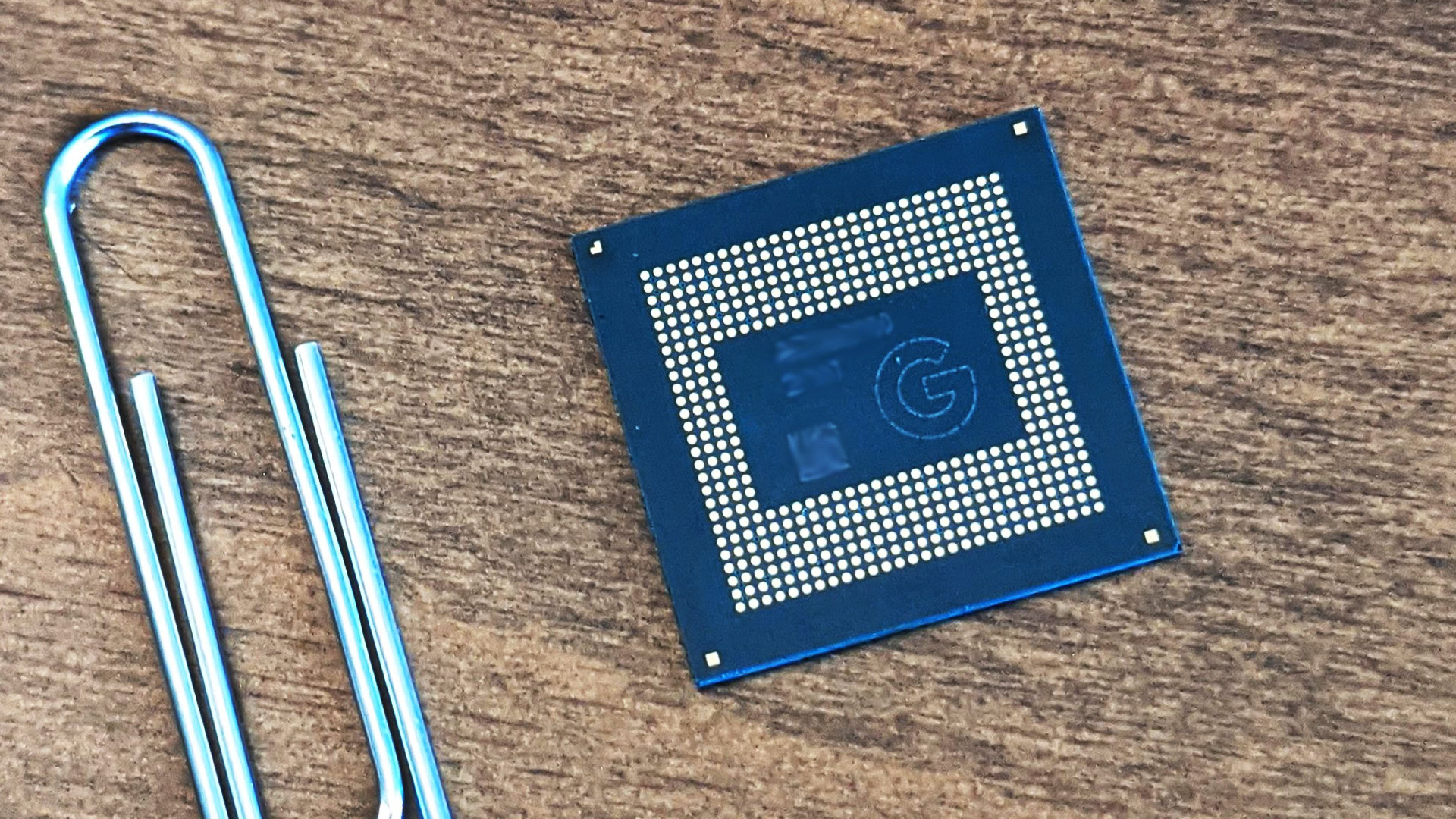

The Pixel 6 was the first smartphone to feature Google’s bespoke mobile system on a chip (SoC), dubbed Google Tensor. While the company dabbled with add-on hardware in the past, like the Pixel Visual Core and Titan series of security chips, the Google Tensor chip represented the company’s first attempt at designing a custom mobile SoC. Or at least part-designing.

Even though Google didn’t develop every component from scratch, the Tensor Processing Unit (TPU) is all in-house, and it’s at the heart of what the company wants to accomplish with the SoC. As expected, Google stated that the processor is laser-focused on enhanced imaging and machine learning (ML) capabilities. To that end, Tensor doesn’t deliver ground-breaking raw power in most applications, but that’s because the company is targeting other use-cases instead.

Given this nuanced approach to chip design, it’s worth taking a closer look at the guts of Google’s first-generation SoC and what the company has accomplished with it. Here’s everything you need to know about Google Tensor.

What is the Google Tensor chip all about?

First and foremost, Tensor is a custom piece of silicon designed by Google to be efficient at the things the company most wants to prioritize, such as machine learning-related workloads. Needless to say, the first-generation Tensor in the Pixel 6 is a significant step up from the chips Google used in the previous-generation mid-range Pixel 5. In fact, it rubs shoulders with flagship SoCs from the likes of Qualcomm and Samsung.

That’s no coincidence, though — we know that Google collaborated with Samsung to co-develop and fabricate the Tensor SoC. And without delving too deep into the specifications, it’s also worth noting that the chip shares many of the Exynos 2100’s underpinnings, from components like the GPU and modem to architectural aspects like clock and power management.

Google won’t admit it, but the Tensor SoC shares many of the Exynos 2100’s underpinnings.

Admittedly, a modest speed bump isn’t all too exciting these days and Google could have obtained similar performance gains without designing its own SoC. After all, many other smartphones using other chips, ranging from earlier Pixel devices to rival flagships, are perfectly fast enough for day-to-day tasks. Thankfully, though, there are plenty of other benefits that aren’t as immediately obvious as raw performance gains.

As we alluded to earlier, the star of the show is Google’s in-house TPU. Google has highlighted that the chip is quicker at handling tasks like real-time language translation for captions, text-to-speech without an internet connection, image processing, and other machine learning-based capabilities. It also allowed the Pixel 6 to apply Google’s HDRNet algorithm to video for the first time, even at qualities as high as 4K 60fps. Bottom line, the TPU allows Google’s coveted machine learning techniques to run more efficiently on the device, shaking the need for a cloud connection. That’s good news for the battery- and security-conscious.

Google’s other custom inclusion is its Titan M2 security core. Tasked with storing and processing your extra sensitive information, such as biometric cryptography, and protecting vital processes like secure boot, it’s a secure enclave that adds a much-needed additional level of security.

How does Google’s chip stack up against the competition?

We knew pretty early on that Google would be licensing off-the-shelf CPU cores from Arm for Tensor. Building a new microarchitecture from scratch is a much bigger endeavor that would require significantly more engineering resources. To that end, the SoC’s basic building blocks may seem familiar if you’ve kept up with flagship chips from Qualcomm and Samsung, except for a few notable differences.

| | Google Tensor (2021) | Google Tensor G2 (2022) | Snapdragon 888 (2021) | Exynos 2100 (2021) |
|---|---|---|---|---|
| CPU | 2x Arm Cortex-X1 (2.80GHz); 2x Arm Cortex-A76 (2.25GHz); 4x Arm Cortex-A55 (1.80GHz) | 2x Arm Cortex-X1 (2.85GHz); 2x Arm Cortex-A78 (2.35GHz); 4x Arm Cortex-A55 (1.80GHz) | 1x Arm Cortex-X1 (2.84GHz, 3GHz for Snapdragon 888 Plus); 3x Arm Cortex-A78 (2.4GHz); 4x Arm Cortex-A55 (1.8GHz) | 1x Arm Cortex-X1 (2.90GHz); 3x Arm Cortex-A78 (2.8GHz); 4x Arm Cortex-A55 (2.2GHz) |
| GPU | Arm Mali-G78 MP20 | Arm Mali-G710 MP7 | Adreno 660 | Arm Mali-G78 MP14 |
| RAM | LPDDR5 | LPDDR5 | LPDDR5 | LPDDR5 |
| ML | Tensor Processing Unit | Next-gen Tensor Processing Unit | Hexagon 780 DSP | Triple NPU + DSP |
| Media Decode | H.264, H.265, VP9, AV1 | H.264, H.265, VP9, AV1 | H.264, H.265, VP9 | H.264, H.265, VP9, AV1 |
| Modem | 4G LTE; 5G sub-6GHz & mmWave | 4G LTE; 5G sub-6GHz & mmWave | 4G LTE; 5G sub-6GHz & mmWave; 7.5Gbps download, 3Gbps upload (integrated Snapdragon X60) | 4G LTE; 5G sub-6GHz & mmWave; 7.35Gbps download, 3.6Gbps upload (integrated Exynos 5123) |
| Process | 5nm | 5nm | 5nm | 5nm |

Unlike other 2021 flagship SoCs like the Exynos 2100 and Snapdragon 888, which feature a single high-performance Cortex-X1 core, Google opted to include two such CPU cores instead. This means that Tensor has an unusual 2+2+4 (big, middle, little) configuration, while its competitors feature a 1+3+4 combo. On paper, this configuration may appear to favor Tensor in more demanding workloads and machine learning tasks, since the Cortex-X1 is an ML number cruncher.

As you may have noticed, though, Google’s SoC skimped on the middle cores in the process, and in more ways than one. Besides the lower count, the company also opted for the significantly older Cortex-A76 cores instead of the better-performing A77 and A78 cores. For context, the latter is used in both the Snapdragon 888 and Samsung’s Exynos 2100 SoCs. As you’d expect from older hardware, the Cortex-A76 simultaneously consumes more power and puts out less performance.
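To make the layout difference concrete, here is a minimal Python sketch that encodes the three 2021 CPU clusters using the core counts and clock speeds from the spec table. The `Cluster` class, the `SOCS` dictionary, and the helper names are illustrative assumptions and not part of any vendor tooling. Core counts and clocks alone say nothing about real-world performance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    core: str    # Arm core name
    count: int   # number of cores in this cluster
    ghz: float   # peak clock speed listed in the spec table


# Figures copied from the comparison table; the structure itself is hypothetical.
SOCS = {
    "Google Tensor": (
        Cluster("Cortex-X1", 2, 2.80),
        Cluster("Cortex-A76", 2, 2.25),
        Cluster("Cortex-A55", 4, 1.80),
    ),
    "Snapdragon 888": (
        Cluster("Cortex-X1", 1, 2.84),
        Cluster("Cortex-A78", 3, 2.40),
        Cluster("Cortex-A55", 4, 1.80),
    ),
    "Exynos 2100": (
        Cluster("Cortex-X1", 1, 2.90),
        Cluster("Cortex-A78", 3, 2.80),
        Cluster("Cortex-A55", 4, 2.20),
    ),
}


def layout(soc: str) -> str:
    """Return the big+middle+little core layout, e.g. '2+2+4'."""
    return "+".join(str(cluster.count) for cluster in SOCS[soc])


def total_cores(soc: str) -> int:
    """Return the total number of CPU cores across all clusters."""
    return sum(cluster.count for cluster in SOCS[soc])


for name in SOCS:
    print(f"{name}: {layout(name)} ({total_cores(name)} cores)")
```

All three chips end up with eight cores in total. Tensor simply shifts one slot from the middle cluster to the big cluster.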

Tensor has a unique core layout relative to the competition. It bundles two high-performance cores but makes some tradeoffs in the process.

This decision to sacrifice middle core performance and efficiency was the subject of much debate and controversy prior to the Pixel 6’s release. Google hasn’t given a reason for using the Cortex-A76. It’s possible that Samsung/Google didn’t have access to the IP when Tensor development began four years ago. Or if this was a conscious decision, it may have been a result of silicon die space and/or power budget limitations. The Cortex-X1 is big, while the A76 is smaller than the A78. With two high-performance cores, it’s possible that Google had no power, space, or thermal budgets left to include the newer A78 cores.

While the company hasn’t been forthcoming about many Tensor-related decisions, a VP at Google Silicon told Ars Technica that including the twin X1 cores was a conscious design choice and that the trade-off was made with ML-related applications in mind.

As for graphics capabilities, Tensor shares the Exynos 2100’s Arm Mali-G78 GPU. However, it is a beefed-up variant, offering 20 cores over the Exynos’ 14. That is roughly 43% more cores, which is once again a significant advantage, in theory anyway.
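The core-count gap is easy to check by hand. The short Python sketch below uses the two GPU core counts from the spec table; the function and constant names are illustrative only. It measures the difference in core count, not in actual graphics performance.

```python
def percent_increase(old: int, new: int) -> float:
    """Return the relative increase from old to new, in percent."""
    return (new - old) / old * 100


EXYNOS_2100_GPU_CORES = 14  # Arm Mali-G78 MP14
TENSOR_GPU_CORES = 20       # Arm Mali-G78 MP20

increase = percent_increase(EXYNOS_2100_GPU_CORES, TENSOR_GPU_CORES)
print(f"{increase:.1f}% more GPU cores")  # prints "42.9% more GPU cores"
```

Six extra cores on a base of 14 works out to about 42.9%.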

As of the Tensor G2, Google contracts Samsung Foundry for chip fabrication.

How does the Google Tensor chip perform?

Despite some clear advantages on paper, if you were hoping for generation-defying performance, you’ll be a bit disappointed here.

While there’s no arguing that Google’s TPU has its advantages for the company’s ML workloads, most real-world use cases like web browsing and media consumption rely exclusively on the traditional CPU cluster instead. When benchmarking CPU workloads, you’ll find that both Qualcomm and Samsung eke out a small lead over Tensor. Still, Tensor is more than powerful enough to handle these tasks with ease.

The GPU in the Tensor does manage to put up a more commendable showing, thanks to the extra cores compared to the Exynos 2100. However, we did notice aggressive thermal throttling in our stress-test benchmarks.

It’s possible that the SoC could perform slightly better in a different chassis than the Pixel 6 series. Even so, the performance on offer is plenty for all but the most dedicated of gamers.

But all of this isn’t exactly new information — we already knew that Tensor wasn’t designed to top benchmark charts. The real question is whether Google has managed to deliver on its promise of improved machine learning capabilities. Unfortunately, that’s not as easily quantified. Still, we were left impressed by the camera and other features Google brought to the table with the Pixel 6. Furthermore, it’s worth noting that other benchmarks showcase the Tensor handily outperforming its closest rivals in natural language processing.

All in all, Tensor is not a massive leap forward in the traditional sense, but its ML capabilities indicate the start of a new era for Google’s custom silicon efforts. And in our Pixel 6 review, we were pleased by its performance in day-to-day tasks, even if it came at the expense of slightly higher heat output.

What has Google accomplished with the Pixel 6 SoC?

AI and ML are at the core of what Google does, and it arguably does them better than everyone else, which is why they are the core focus of Google’s chip. As we’ve noted in many recent SoC releases, raw performance is no longer the most important aspect of mobile SoCs. Heterogeneous compute and workload efficiency are just as important, if not more so, for enabling powerful new software features and product differentiation.

For proof of this fact, look no further than Apple and its own vertical integration success with the iPhone. Over the past few generations, Apple has focused heavily on improving its custom SoCs’ machine learning capabilities. That has paid off — as is evident from the slew of ML-related features introduced alongside the latest iPhone.

With Tensor, Google finally has influence over its hardware and is bringing unique machine learning-enabled experiences to mobile.

Similarly, by stepping outside the Qualcomm ecosystem and picking out its own components, Google gains more control over how and where to dedicate precious silicon space to fulfill its smartphone vision. Qualcomm has to cater to a wide range of partner visions, while Google certainly has no such obligation. Instead, much like Apple’s work on custom silicon, Google is using bespoke hardware to help build bespoke experiences.

Even though Tensor is the first generation of Google’s custom silicon project, we’ve already seen some of those bespoke tools materialize recently. Pixel-only features like Magic Eraser, Real Tone, and even real-time voice dictation on the Pixel are a marked improvement over previous attempts, both by Google and other players in the smartphone industry.

Moreover, Google is touting a massive reduction in power draw with Tensor in these machine learning-related tasks. To that end, you can expect less battery drain while the device performs computationally expensive tasks, like the Pixel’s signature HDR image processing, on-device speech captioning, or translation.

Google is using its bespoke hardware for applications like real-time offline translation and 4K HDR video recording.

Features aside, the Tensor SoC is seemingly also allowing Google to provide a longer software update commitment than ever before. Typically, Android device makers are dependent on Qualcomm’s support roadmap for rolling out long-term updates. Samsung, via Qualcomm, offers three years of OS updates and four years of security updates.

With the Pixel 6 lineup, Google has leapfrogged other Android OEMs by promising five years of security updates — albeit with only the usual three years of Android updates in tow.

Google Tensor SoC: What’s next?

Google CEO Sundar Pichai noted that the Tensor chip was four years in the making, which is an interesting time frame. Google embarked on this project when mobile AI and ML capabilities were still relatively new. The company has always been on the cutting edge of the ML market and often seemed frustrated by the limitations of partner silicon, as seen in the Pixel Visual Core and Neural Core experiments.

Admittedly, Qualcomm and others haven’t sat on their hands for four years. Machine learning, computer imaging, and heterogeneous compute capabilities are at the heart of all the major mobile SoC players, and not just in their premium-tier products either. Still, the Tensor SoC is Google striking out with its own vision for not just machine learning silicon but how hardware design influences product differentiation and software capabilities.

Even though the first generation of Tensor did not break new ground in traditional computing tasks, it does offer us a glimpse at the future of the Pixel series and the smartphone industry in general. The Tensor G2 found in the latest Pixel 7 series introduces a more efficient TPU, slightly better multi-core performance, and improved sustained GPU performance. While this is a smaller upgrade than most other yearly SoC releases, the new Pixel 7 camera features further illustrate that Google’s focus is on the end-user experience rather than chart-topping results.
