Affiliate links on Android Authority may earn us a commission.

What is Google Gemini? A next-gen assistant and language model

With competition in the tech industry heating up, Google has consolidated its generative AI efforts under one umbrella: Gemini. The term originally referred to the search giant’s cutting-edge large language model. But Google has now rebranded its Bard AI chatbot to Gemini as well, matching the name of its underlying language model.

It’s fair to say that Gemini could change the way we use many Google services, from Gmail to Android, in the near future. In fact, you can already replace the Google Assistant with Gemini on your smartphone. Sound interesting? Here’s everything you need to know about Google Gemini, how to use it, and what it can do.

What is Google Gemini? AI model and next-gen Assistant

Gemini is Google’s AI chatbot, next-generation assistant, and language model all rolled into one. In mid-2023, Google introduced Gemini as the name for its latest language model. Then, in February 2024, the company rebranded its Bard chatbot to Gemini and brought other AI features under the same banner.

Let’s start with Gemini, the large language model that goes head-to-head with OpenAI’s GPT-4. The model comes in three sizes: Gemini Nano, Pro, and Ultra. The smallest, Gemini Nano, runs locally on Google’s Pixel smartphones and powers various on-device AI features. The larger Gemini Pro model, meanwhile, is the backbone of most of Google’s cloud-based AI services, including the chatbot of the same name. Finally, the Ultra variant unlocks the model’s full potential. However, it’s currently available only to paying users via Gemini Advanced. More on that in a later section.

On the chatbot side of things, Gemini also refers to Google’s AI chatbot and virtual assistant. You can think of Gemini as the successor to the Google Assistant. The name admittedly sounds a whole lot better than the originally proposed “Google Assistant with Bard”.

More importantly, Gemini fixes some of the Google Assistant’s biggest shortcomings. For one, it can handle back-and-forth conversations in a natural, human-like manner. It can also interact with other Google services such as Maps, Drive, Flights, and YouTube. For example, you can ask the chatbot to summarize a long video or find the cheapest flights between two cities in a given month. That said, don’t expect complete accuracy, as language models tend to hallucinate, or make up information.

How to use Gemini: Android, iPhone, and beyond

Here’s a quick rundown on how to use Google Gemini, depending on your platform and desired use case:

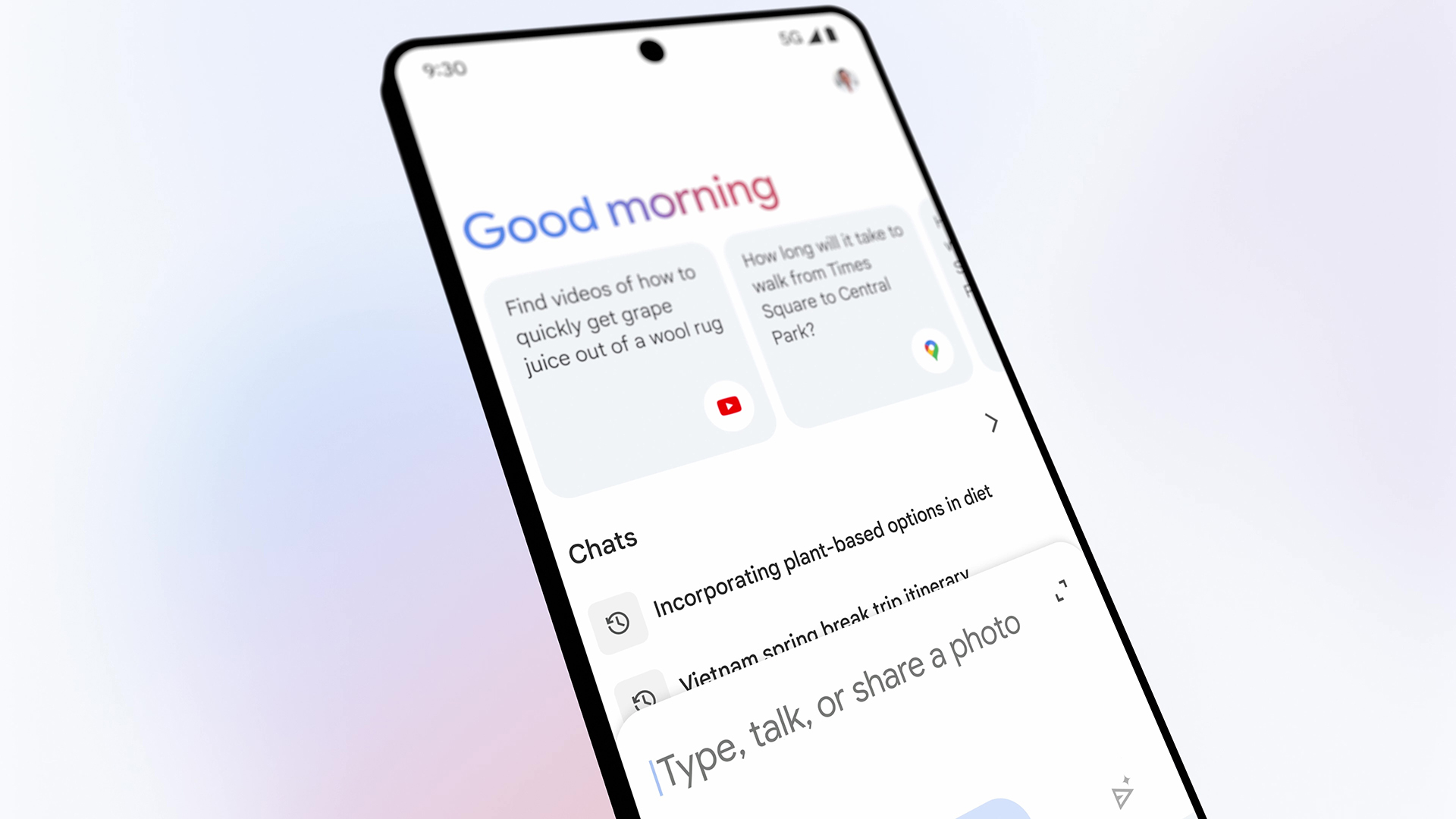

- Gemini on Android: If you have an Android device, you can set Gemini as your default virtual assistant, replacing the Google Assistant. To do so, download the Gemini app from the Play Store. This will allow you to invoke Gemini using the “OK Google” wake word and via existing shortcuts like holding down the power button. Keep in mind that Gemini doesn’t support the Google Assistant’s entire feature set yet, but it can perform basic tasks like fetching the weather and setting alarms.

- Gemini on iOS: You can’t change the default assistant on the iPhone or iPad. There’s no dedicated iOS app for Gemini either, so you’ll have to access it through the Google app instead.

- Gemini on the web: As with Bard previously, you can access Google’s Gemini chatbot by opening a web browser and navigating to gemini.google.com. It won’t offer any Assistant-like features, but you’ll get the full chatbot experience.

- Gemini for Workspace: You can also find the language model in other Google products under the Gemini for Workspace banner. In a nutshell, it enables AI-assisted features like Help me Write in Google Docs and Help me Organize in Sheets. This software suite was originally titled Duet AI for Workspace, but Google decided to bring it under the Gemini umbrella in February 2024.

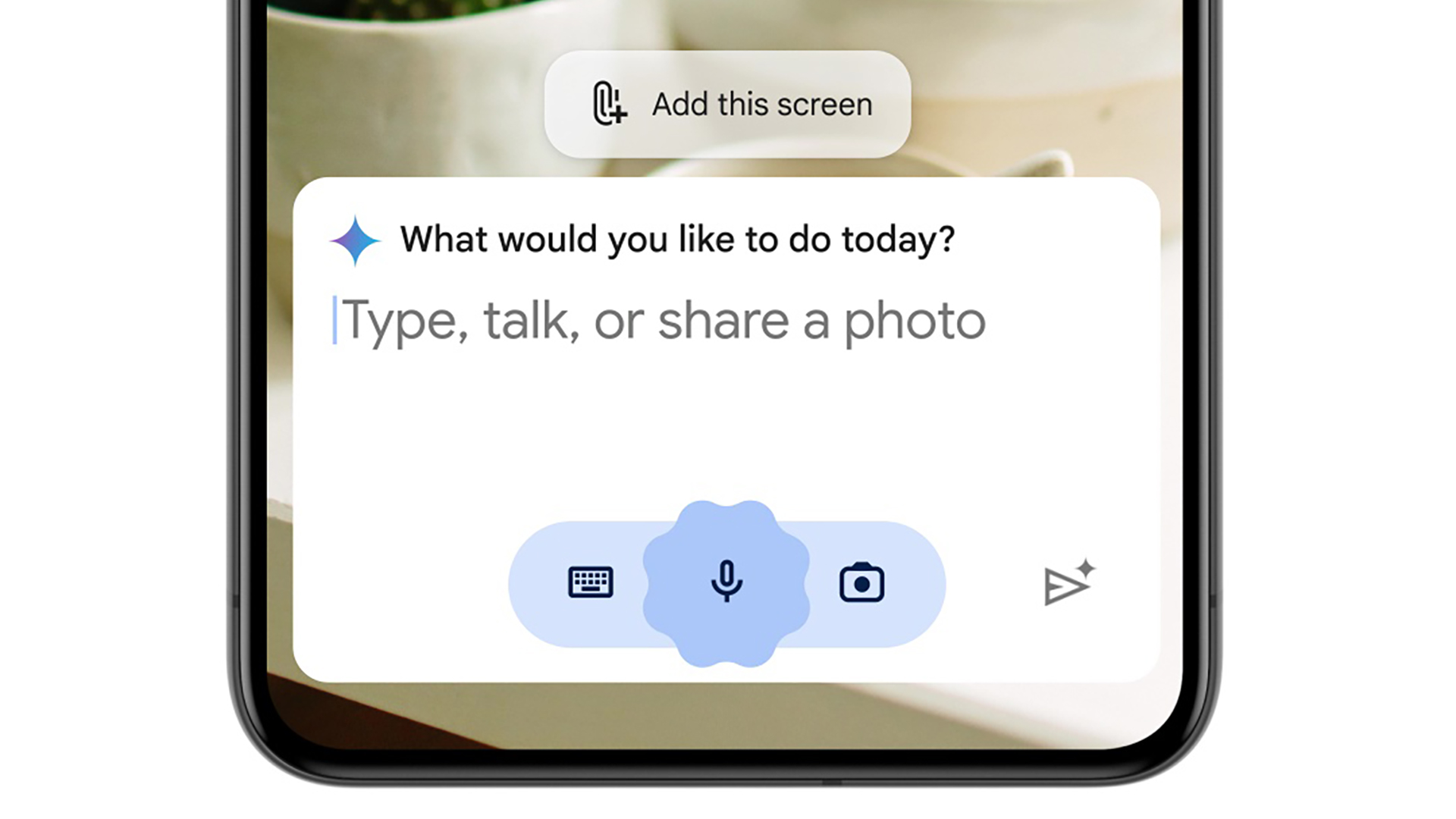

The Android Gemini app offers the most functionality, as it’s meant to replace the Google Assistant entirely. The AI model can even see the contents of your screen when you bring up Gemini using a shortcut like holding down the power button.

I’m hoping Gemini comes to even more Google products and services, including smart speakers, in the future. For now, however, the AI model only replaces the Google Assistant on Android smartphones.

Google Gemini release date and price

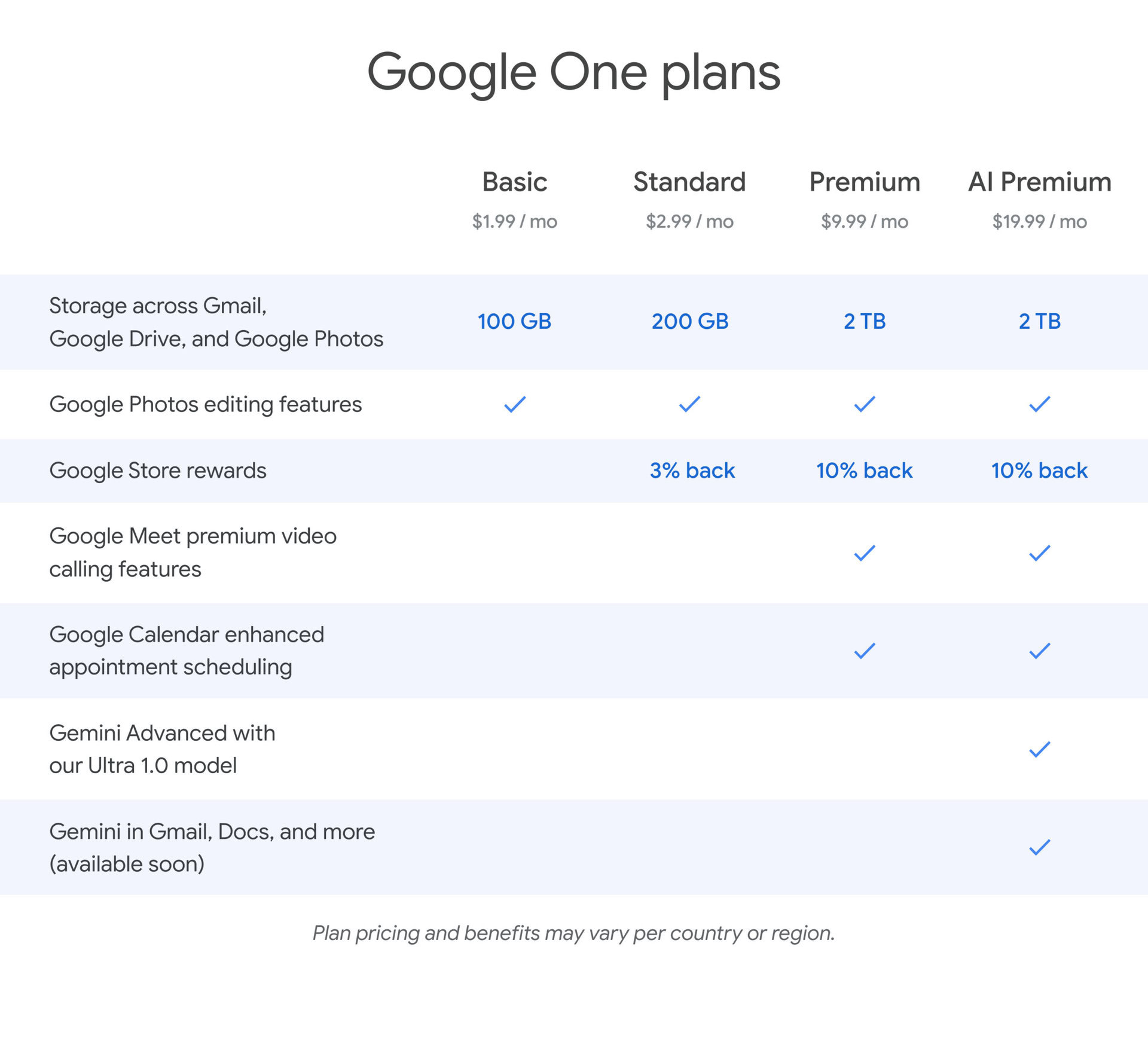

Google released its Gemini 1.0 language model to the public on December 6, 2023. Two months later, on February 8, 2024, the company rebranded its Bard chatbot to Gemini. At the same time, it announced Gemini for Workspace and a new Google One AI Premium subscription.

The Gemini chatbot is available right away in a host of languages across 150 countries and territories. However, the Android app is rolling out slowly, starting with English in the United States, along with Japanese and Korean. Check the Play Store on your Android device to see if you can download the Gemini app.

As for pricing, the most capable Gemini Ultra model is gated behind the aforementioned Google One AI Premium subscription. It costs $20 per month but includes 2TB of Drive storage and other Google One benefits. The silver lining is that you’ll only pay an additional $10 per month if you’re already subscribed to the 2TB Google One tier.

Paying for Gemini Advanced also unlocks other AI features like Help me Write in Gmail and Google Docs. Moreover, Google says to expect even more features, like the ability to upload documents for analysis, in the coming months, so you’re getting more than just a more capable language model for your money. That said, Gemini Advanced is only optimized for English at the moment, unlike the free version of Gemini, which officially supports many more languages.

Gemini vs. GPT-4: Which is the better language model?

Gemini differs from older and some competing large language models in that it’s not trained on text alone. Google says it built Gemini with multimodal capabilities in mind. More specifically, its ability to combine visuals and text allows it to generate more than one kind of data at the same time. This is similar to GPT-4, the reigning champion in the world of language models, which can handle different modalities like vision and speech.

A table in Google’s technical report (pictured below) suggests that Gemini Ultra outperforms GPT-4 on the reported benchmarks, although real-world testing could paint a different picture.