Affiliate links on Android Authority may earn us a commission.

Forget more megapixels, your next phone's camera could offer whiter teeth

Qualcomm and MediaTek have both announced their new generation flagship processors in the last couple of months, set to power 2023’s high-end smartphones. In fact, we’ve already seen phones launching with these chipsets, such as the OnePlus 11, Xiaomi 13 series, and vivo X90 range.

The processors bring increased horsepower, hardware-based ray tracing, and satellite connectivity, but it definitely seems like more of an evolutionary year in terms of classical camera capabilities. Neither MediaTek’s nor Qualcomm’s high-end chips bring major changes to supported photo and video resolutions or frame rates.

But there’s more to camera support than resolution alone, and the two companies do bring quite a few under-the-hood imaging changes, such as pro video capture tech, optimizations for 200MP sensors, and native RGBW camera support. We’re also seeing a trend toward unifying AI and imaging hardware, and this is enabling at least one rather interesting feature in 2023.

More granular recognition

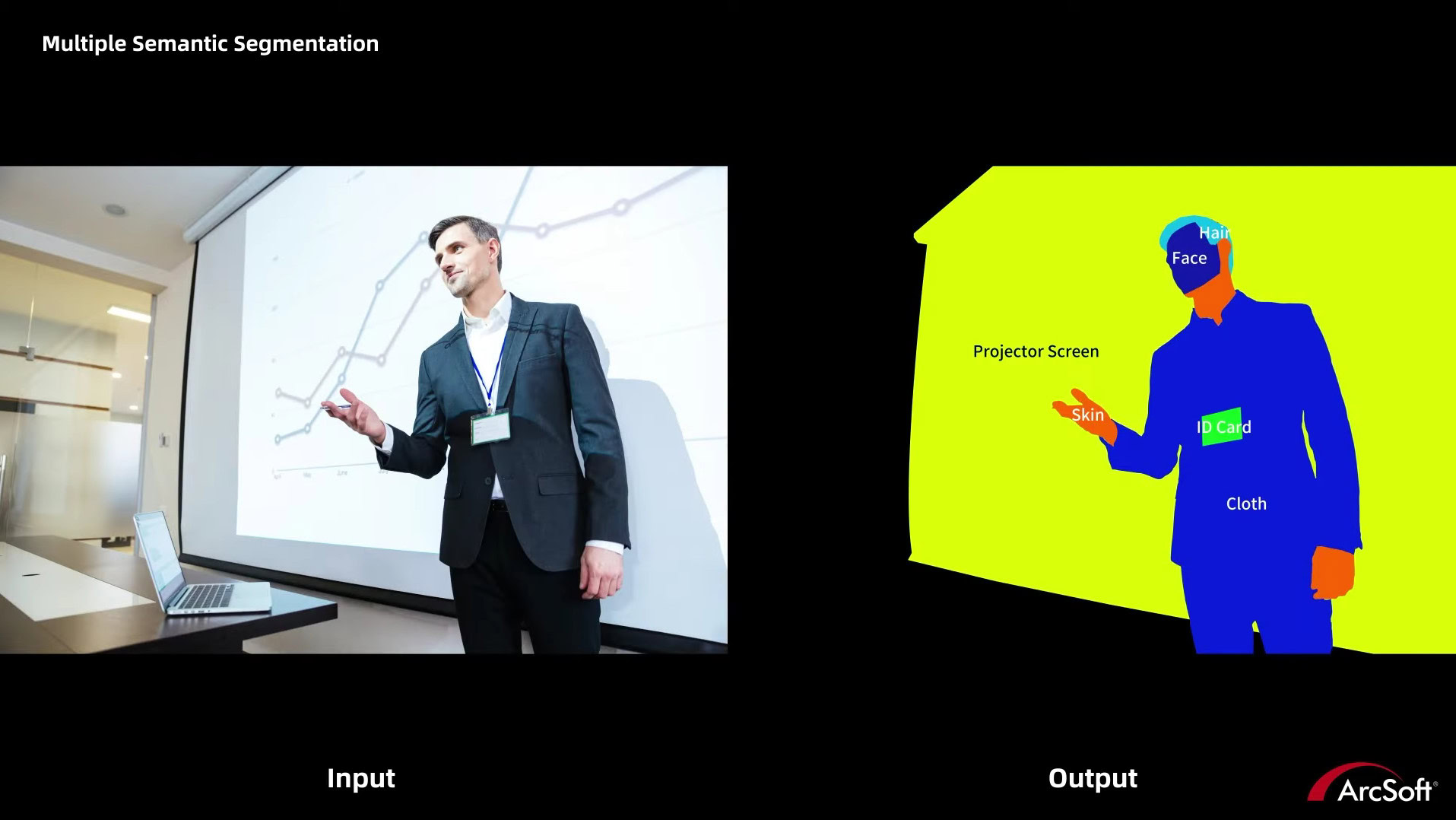

Qualcomm is touting real-time semantic segmentation in the Snapdragon 8 Gen 2. For the uninitiated, semantic segmentation refers to the ability to classify every pixel in a frame, thereby identifying specific objects and subjects within it. It’s a core technology at the heart of many camera modes, as it lets the camera software identify specific scenes or people and then apply image processing accordingly.
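To make the idea concrete, here is a toy Python sketch of what a segmentation network’s output looks like: a label map holding one class ID per pixel, from which a mask for any single class can be derived. The class IDs, the tiny 4×6 frame, and the helper names (`mask_for`, `class_histogram`) are hypothetical; real networks produce far larger maps with their own label sets, and nothing here reflects Qualcomm’s actual implementation.

```python
from collections import Counter

# Hypothetical class IDs; a real segmentation network defines its own label set.
BACKGROUND, PERSON, SKY = 0, 1, 2
CLASS_NAMES = {BACKGROUND: "background", PERSON: "person", SKY: "sky"}

# Toy 4x6 label map, as if produced by a segmentation network:
# each entry is the class ID predicted for that pixel.
label_map = [
    [2, 2, 2, 2, 2, 2],
    [2, 2, 1, 1, 2, 2],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0, 0],
]


def mask_for(labels, class_id):
    """Binary mask: True wherever the pixel was labelled as class_id."""
    return [[px == class_id for px in row] for row in labels]


def class_histogram(labels):
    """Number of pixels assigned to each class ID."""
    return Counter(px for row in labels for px in row)


person_mask = mask_for(label_map, PERSON)
for class_id, count in sorted(class_histogram(label_map).items()):
    print(f"{CLASS_NAMES[class_id]}: {count} pixels")
```

Running it reports 8 background, 6 person, and 10 sky pixels. Camera features then work from masks like `person_mask`, applying a given effect only where the mask is `True`.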

Many smartphone brands use semantic segmentation for single-camera portrait modes, while other brands use it for AI scene recognition (sunsets, landscapes, flowers, food). We’ve even seen some brands like Xiaomi and Google touting the ability to completely change the sky, swapping out a grey sky in your photo for a completely blue sky.
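Single-camera portrait modes broadly follow a simple pattern once a subject mask exists: blur the frame, then keep the original pixels wherever the mask marks the subject. The Python below is a simplified sketch on a grayscale image stored as nested lists; the function names and the plain box blur are our own assumptions, and a shipping portrait mode would likely add refinements such as feathered mask edges.

```python
def box_blur(img, radius=1):
    """Box blur on a 2D list of grayscale values.

    Each output pixel is the mean of its in-bounds neighbours within `radius`,
    so pixels near the border simply average fewer neighbours.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, n = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    n += 1
            out[y][x] = total / n
    return out


def portrait_mode(img, subject_mask, radius=1):
    """Keep subject pixels sharp and swap every other pixel for its blurred value."""
    blurred = box_blur(img, radius)
    return [
        [img[y][x] if subject_mask[y][x] else blurred[y][x] for x in range(len(img[0]))]
        for y in range(len(img))
    ]


# Tiny demo: a bright "subject" pixel in the centre of a dark frame.
frame = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
mask = [[False, False, False], [False, True, False], [False, False, False]]
print(portrait_mode(frame, mask))
```

The subject pixel survives untouched while its brightness bleeds into the blurred surroundings, which is also why an inaccurate mask produces the familiar halo or smeared-hair artifacts around subjects.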


However, Qualcomm is drilling down even deeper. For one, the company confirms its latest take on the solution is fast enough to run in real time, including on video. It also says its solution can identify specific elements such as teeth, hair, facial hair, lips, fabric, and more, which could open the door to some interesting possibilities.

The most obvious one is more accurate portrait mode snaps. Qualcomm’s own video demo, made in conjunction with ArcSoft, shows the ability to more accurately blur challenging backgrounds while keeping trickier subjects in focus.

Another intriguing possibility is that Snapdragon 8 Gen 2 phones could offer more detailed and advanced beautification effects. In fact, Judd Heape, vice president of product management for cameras at Qualcomm, told Android Authority that the tech is initially focused on selfie cameras.

We’ve already seen selfie cameras offer blemish removal, skin smoothing, and shape adjustments as beautification options, but this is just the tip of the iceberg with real-time semantic segmentation.

It’s also theoretically possible that we could see crazy beautification effects such as teeth whitening. After all, this latest tech does offer teeth recognition. Heape concurs, explaining that partners can build their own semantic segmentation networks on top of this technology to detect other things.

“So yes, if you had a network that’s really good at detecting teeth, then that can be fed to the ISP [image signal processor – ed], and the ISP can desaturate the colors in the teeth and turn them from yellow to white. Absolutely, that’s totally a possibility.”
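Heape’s description maps onto a fairly simple operation once a teeth mask exists. Below is a minimal Python sketch, assuming a hypothetical teeth-segmentation network has already produced a boolean mask: it converts only the masked pixels to HSV with the standard-library `colorsys` module, cuts their saturation, and nudges their brightness up slightly. The `whiten_teeth` name and its default strengths are illustrative assumptions; an ISP would do this in dedicated hardware rather than in Python.

```python
import colorsys


def whiten_teeth(pixels, teeth_mask, desaturate=0.7, brighten=0.05):
    """Desaturate (and slightly brighten) only the pixels flagged as teeth.

    pixels: 2D list of (r, g, b) tuples with channels in 0.0-1.0
    teeth_mask: 2D list of bools from a hypothetical teeth-segmentation network
    desaturate: fraction of saturation removed (0 = unchanged, 1 = fully grey)
    brighten: amount added to the HSV value channel, capped at 1.0
    """
    out = []
    for row, mask_row in zip(pixels, teeth_mask):
        new_row = []
        for (r, g, b), is_tooth in zip(row, mask_row):
            if is_tooth:
                h, s, v = colorsys.rgb_to_hsv(r, g, b)
                s *= 1.0 - desaturate       # pull the colour towards neutral
                v = min(1.0, v + brighten)  # slight lift in brightness
                r, g, b = colorsys.hsv_to_rgb(h, s, v)
            new_row.append((r, g, b))
        out.append(new_row)
    return out


# A yellowish "tooth" pixel next to a bluish pixel that is not part of the mask.
image = [[(0.9, 0.85, 0.6), (0.2, 0.4, 0.8)]]
mask = [[True, False]]
print(whiten_teeth(image, mask))
```

Because the change is gated by the mask, lips, gums, and skin keep their original colors, which is exactly why finer-grained segmentation matters for this kind of effect.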

Qualcomm also touts the ability to recognize hair, saying this could be used to render hair in more detail. But it also seems theoretically possible for brands to implement grey hair removal or the ability to completely change your hair color. Heape suggests that grey hair removal might be a tough challenge, particularly if it’s only a few grey hairs in a sea of dark hair. But he still reckons that a complete change in hair color is a possibility, although it might not look realistic.


Manufacturers will, however, need to walk a fine line between providing beautification features that people want and promoting warped beauty standards. After all, we’ve seen many questionable effects and filters over the years, such as face thinning, nose shaping, skin lightening, and eye-widening.

More advanced semantic image segmentation isn’t limited to beautification, though. As Qualcomm’s video shows, the tech could also enable better processing for clothes, offering extra sharpening for your jersey or jacket without affecting the rest of your body. The clip even shows glare being removed from a pair of spectacles.
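Restricting an effect to one class is the key trick here. As a rough sketch, assuming a hypothetical clothing mask, the Python below applies a classic unsharp mask (the original plus the difference between the original and a blurred copy) only to fabric pixels, leaving skin and background as they were. The helper names and parameters are our own; Qualcomm hasn’t detailed how its demo performs the sharpening.

```python
def box_blur(img, radius=1):
    """Mean of each pixel's in-bounds neighbours within `radius` (2D grayscale list)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, n = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    n += 1
            out[y][x] = total / n
    return out


def sharpen_fabric(img, fabric_mask, amount=1.0, radius=1):
    """Unsharp-mask only the pixels labelled as fabric; leave the rest untouched.

    img: 2D list of grayscale values in 0-255
    fabric_mask: 2D list of bools from a hypothetical clothing-segmentation network
    amount: sharpening strength (0 = no change)
    """
    blurred = box_blur(img, radius)
    out = []
    for y, row in enumerate(img):
        new_row = []
        for x, value in enumerate(row):
            if fabric_mask[y][x]:
                # Add back the high-frequency detail (original minus blurred), then clamp.
                value = value + amount * (value - blurred[y][x])
                value = max(0.0, min(255.0, value))
            new_row.append(value)
        out.append(new_row)
    return out


# Demo: only the middle pixel is "fabric", so only it gets sharpened.
row_img = [[0, 100, 0]]
fabric = [[False, True, False]]
print(sharpen_fabric(row_img, fabric))
```

Running the same function with a mask for skin instead would be a one-line change, which hints at why a segmentation network that distinguishes fabric from skin is so useful here.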

Qualcomm also confirmed that real-time semantic segmentation is programmable, so companies can run different neural networks if they have other uses in mind for the tech.

Will this tech come to commercial devices, though?

It’s all well and good for chipmakers to support something like real-time semantic segmentation, but the real question is whether smartphones will actually ship with this tech. After all, smartphone brands have a mixed record when it comes to adopting a chipmaker’s camera features (e.g. unlimited 960fps slow-motion, 120fps 12MP burst mode).

Fortunately, Heape confirmed that this feature was available “right out of the box” to all smartphone brands. “So there’s no licensing fees, there’s nothing else the OEM has to do,” he explained.

“So coming out in 2023 (sic), there will be multiple handsets with this feature, one pretty notable one.”

In other words, this won’t merely be a theoretical feature but one that will arrive in commercial devices in 2023. So you’ll want to keep an eye on future launches from the likes of Samsung, Xiaomi, OPPO, and other brands to see whether real-time semantic segmentation makes an appearance there.

The merging of AI and imaging hardware

Qualcomm’s semantic segmentation improvements are possible thanks to the company’s Hexagon Direct Link feature. This refers to Qualcomm effectively creating a link between the AI silicon and the ISP responsible for camera processing. MediaTek is following a similar route with the Dimensity 9200 chipset, saying it has fused AI and ISP hardware for more efficient 8K/30fps and 4K/60fps video recording with electronic stabilization. Meanwhile, Google’s semi-custom Tensor chips inside Pixel phones also use AI silicon that’s tightly linked to the imaging pipeline.

This merged AI/ISP approach by Qualcomm and MediaTek in particular means that camera data can bypass comparatively slow RAM, enabling more real-time camera processing. Speedy processing doesn’t simply mean less time looking at a “processing” screen before previewing a photo; it could also give us live viewfinder previews of various modes, new photo modes, and new video features.
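A back-of-envelope calculation shows why skipping RAM matters. The Python below estimates the memory traffic created each time a full frame is written out to DRAM by one block (say, the ISP) and read back by another (say, the AI engine). The assumptions are ours and purely illustrative: 4K/60fps video, 2 bytes per pixel, and one such hand-off per frame. Real pipelines use different formats, resolutions, and compression, and these are not Qualcomm or MediaTek figures.

```python
def dram_traffic_bytes_per_s(width, height, fps, bytes_per_pixel, round_trips):
    """DRAM traffic (bytes/s) if every frame is written out and read back
    `round_trips` times between processing blocks (1 round trip = 1 write + 1 read)."""
    frame_bytes = width * height * bytes_per_pixel
    return frame_bytes * fps * 2 * round_trips


# Illustrative assumptions only: 4K/60fps, 2 bytes per pixel.
via_dram = dram_traffic_bytes_per_s(3840, 2160, 60, 2, round_trips=1)  # ISP -> DRAM -> AI engine
direct = dram_traffic_bytes_per_s(3840, 2160, 60, 2, round_trips=0)    # on-chip link, no DRAM hop
print(f"via DRAM: {via_dram / 1e9:.2f} GB/s, direct link: {direct / 1e9:.2f} GB/s")
```

Under those assumptions, a single DRAM round trip adds roughly 2GB/s of memory traffic, along with the power that goes with it, which a direct link between the ISP and AI hardware could avoid. Every extra model run on each frame would multiply that cost.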


Qualcomm and/or MediaTek already promise advanced camera features in their latest SoCs like better bokeh video, video super-resolution, photo unblurring, and better low-light performance. But it’s not a stretch to imagine future features like more detailed and performant AR filters, Magic Eraser functionality for videos, burst mode with HDR for each shot, or multi-frame processing for full-resolution 50MP or 108MP shots.

In fact, we got our first taste of what’s possible when cameras bypassed traditional RAM with 2017’s Sony Xperia XZ Premium. This phone featured a camera sensor with its own dedicated DRAM, enabling native 960fps super slow-motion video for the first time. So we’re keen to see what else is possible with a much faster camera processing pipeline.

Fortunately, this unified approach to AI and ISP hardware won’t be exclusive to flagship devices, as Heape confirmed we can expect it to eventually land in mid-range chipsets.

The groundwork for future smartphone cameras

It’s interesting to see both Qualcomm and MediaTek coming to the same conclusion of unifying AI and imaging hardware. And this could well be the foundation for future smartphone camera developments. So while it doesn’t seem like there are loads of headline-grabbing camera features in today’s high-end chipsets, these chips are still bringing important improvements to the table.

That said, we’re particularly intrigued by this latest step in image segmentation. Between more accurate portrait modes, more granular image processing, and enhanced beautification, real-time semantic segmentation is already enabling some interesting features. But we’re keen to see what else OEMs will come up with thanks to this feature and a more unified approach to AI and imaging hardware.