Affiliate links on Android Authority may earn us a commission.

Is ChatGPT free and open source? We have some alternatives you can try

AI chatbots have become an invaluable tool for many of us, but not everyone’s comfortable using them just yet. ChatGPT saves your data and requires an internet connection, and both of those facts give many people pause. These problems could be overcome if ChatGPT were open source, as that would allow anyone to run it on their own hardware. That’s already possible with some competitors, which we’ll talk about later. But let’s start with ChatGPT: is it free in the same sense as Android and Linux?

Is ChatGPT open source?

No, ChatGPT is not open source software. Moreover, it’s only offered free of cost to end users. If you’re looking to add ChatGPT functionality to your own website or app, you’ll have to pay for each response. ChatGPT’s creator, OpenAI, was founded as a non-profit organization. However, the company’s goals have changed over the years, and it now aims to turn a profit.

OpenAI has a unique advantage at the moment, which means it benefits from keeping ChatGPT closed source. It’s widely believed that the company’s latest GPT-4 language model surpasses the competition, even if Google claims that its Gemini language model comes exceedingly close.

Even if it were open source, though, running a local version of ChatGPT on your own computer would be extremely difficult if not impossible. This is because the technology requires vast amounts of computing power, especially when we’re talking about more complex models like OpenAI’s GPT family.

What are some open source alternatives to ChatGPT?

Meta’s LLaMA 2 is one of the most popular open-source large language models available today. LLaMA stands for Large Language Model Meta AI, so it’s named after Facebook’s parent company. However, it’s worth noting that LLaMA 2 isn’t exactly an open-source ChatGPT alternative for the average user. Meta hasn’t released a product based on LLaMA yet, only the underlying model. But as I’ll show you in a later section, the open-source community has come up with ways to interact with LLaMA even on typical home computers.

Meta may not have the best reputation in the social media space, but the company has made significant open-source contributions over the years. For example, the popular machine learning framework PyTorch was originally developed by Meta’s AI division. Likewise, many developers use Meta’s open-source React JavaScript library to quickly build UI elements for their websites. And now, Meta has become one of the first major companies to release an open-source language model meant to compete with the likes of ChatGPT.

According to Meta, its latest LLaMA 2 language model can keep up with OpenAI’s GPT-4. For context, you need to fork over $20 per month for a ChatGPT Plus subscription to get access to the latest GPT-4 model. All of that is to say Meta’s open-source LLaMA language model can keep up with the industry’s best, provided you learn how to run it yourself.

But if you don’t care about the bleeding edge, there are quite a few open-source language models to choose from. Here are some examples:

- BERT: Google AI’s BERT, short for Bidirectional Encoder Representations from Transformers, was one of the first language models to become publicly available. According to the search giant, BERT performs exceptionally well in question-answering scenarios if you fine-tune the model beforehand. But as you may have guessed, it requires a fair bit of work to get started.

- GPT-NeoX: EleutherAI’s GPT-NeoX is a 20-billion-parameter language model that’s much easier to use. However, it requires large amounts of GPU video memory (VRAM), ruling out most consumer-grade hardware. That said, you can combine multiple graphics cards to reach the 45GB minimum requirement.

- Alpaca: A group of Stanford researchers took Meta’s LLaMA language model and fine-tuned it on instruction-following examples generated through OpenAI’s GPT-3 API. The result is a smaller but highly optimized model that runs on commodity hardware, including my very middle-of-the-road laptop. Alpaca’s accessibility has made it one of the most popular open-source alternatives to ChatGPT.
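
To get a feel for why a model like GPT-NeoX needs so much VRAM, here is a rough back-of-the-envelope sketch in Python. It assumes each parameter is stored as a 16-bit number (2 bytes), which is a common choice but not something stated above. The function names and the 24GB and 16GB card sizes are made up for illustration. The estimate covers only the model’s weights, so it will come in under the real-world requirement.

```python
import math


def estimate_weight_memory_gb(num_parameters: int, bytes_per_parameter: float = 2.0) -> float:
    """Rough memory needed just to hold the model weights, in GB (10^9 bytes).

    Assumption: 2 bytes per parameter (16-bit weights). Real usage is higher
    because the model also needs working memory while it generates text.
    """
    return num_parameters * bytes_per_parameter / 1e9


def cards_needed(required_gb: float, vram_per_card_gb: float) -> int:
    """Smallest number of identical graphics cards whose combined VRAM covers required_gb."""
    return math.ceil(required_gb / vram_per_card_gb)


if __name__ == "__main__":
    # GPT-NeoX has 20 billion parameters, per the list above.
    weights_gb = estimate_weight_memory_gb(20_000_000_000)
    print(f"Weights alone: about {weights_gb:.0f} GB")

    # 45GB is the minimum quoted above; the card sizes are hypothetical examples.
    for card_gb in (24, 16):
        print(f"{card_gb}GB cards needed for 45GB: {cards_needed(45, card_gb)}")
```

Under these assumptions the weights alone come to roughly 40GB, which sits just under the 45GB minimum quoted for GPT-NeoX. It also shows why a single consumer graphics card won’t cut it for a model of this size.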

How to use an open-source AI chatbot offline

Now that you know some of the open-source ChatGPT alternatives out there, you may want to run one of them yourself. There’s good news on that front since the open-source community has developed a number of easy solutions to start chatting with them. Best of all, they also work offline so you don’t need an internet connection.

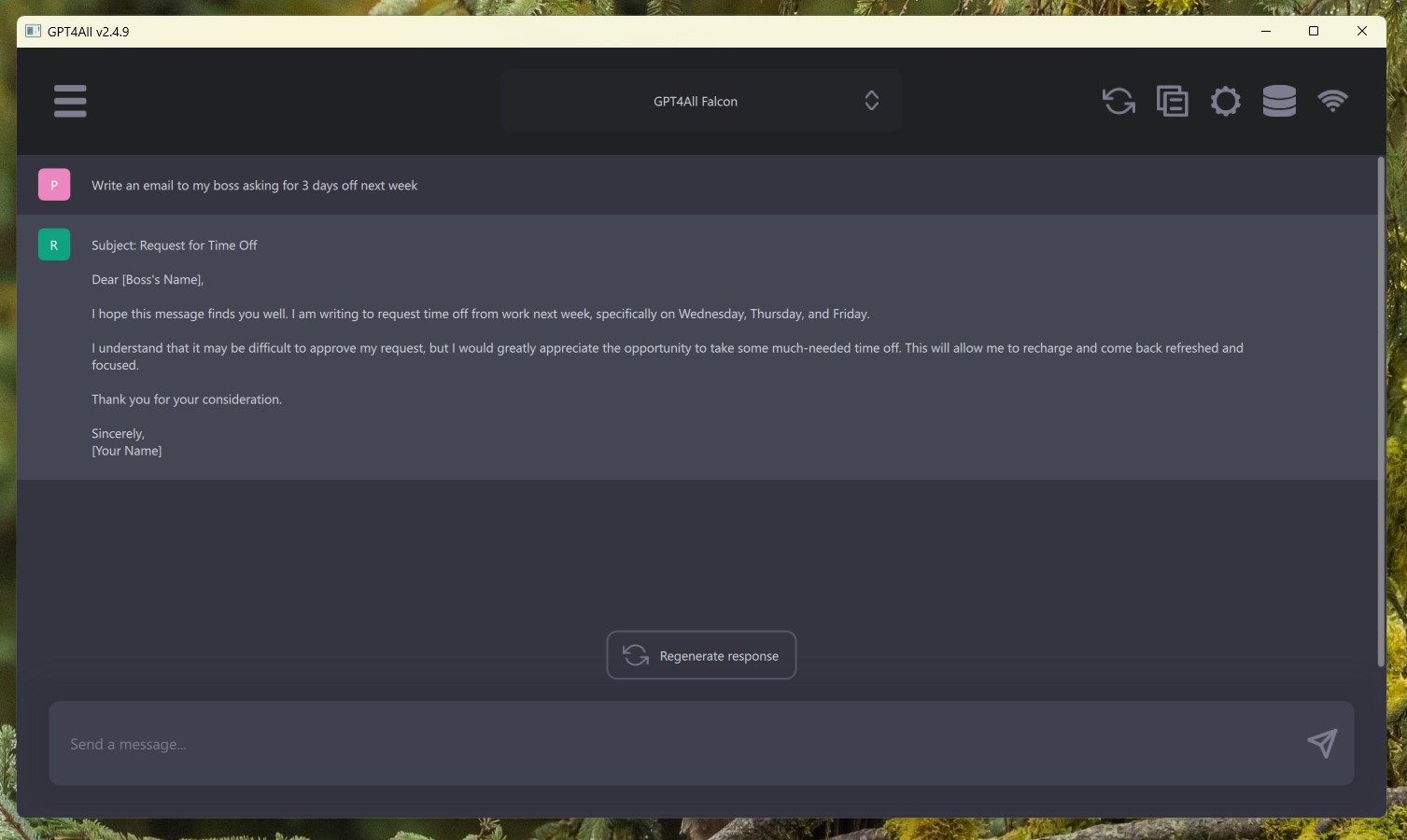

While you can find individual instructions for every major open-source model, I’d recommend using GPT4All instead. It’s a graphical app that lets you train, fine-tune, and chat with various open-source models, including many based on LLaMA. When I tested it on an M1-powered MacBook Air, GPT4All took just a few seconds to generate responses. On average, it was about as quick as the free version of ChatGPT, with a couple of minor slowdowns from time to time. Here’s how you can get started:

- Visit the GPT4All website and click on the download link for your operating system: Windows, macOS, or Ubuntu.

- Follow the instructions to install the software on your computer.

- Open the GPT4All app and select a language model from the list. The app will warn you if you don’t have enough resources, so you can easily skip heavier models.

- Once the model has downloaded, you’re all set to start chatting with it. Simply type in a prompt as you would with ChatGPT and wait for a response.

If you’re using a slower computer or laptop, it may take a few seconds for responses to appear. But that’s the trade-off you have to accept when using an open source language model on your own machine.