Affiliate links on Android Authority may earn us a commission.

A Samsung Galaxy S25 with Google AI silicon sounds bizarre, but is it?

Who doesn’t love a good wild rumor, especially when it blends high-profile phones like the Samsung Galaxy S25 with hot topics like AI? According to the latest whispers, Samsung’s next flagship phone will be powered by a new Exynos 2500 processor (in addition to an as-yet-unannounced Qualcomm Snapdragon 8 Gen 4). The report requires a hefty dose of salt, but the juicy speculation is that the chip will feature a dedicated AI accelerator built by Google, not unlike the TPU found in the Pixel 8’s Tensor G3 processor.

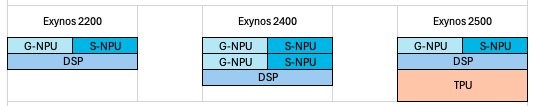

For the past two generations, Samsung has developed its own AI accelerator for Exynos, consisting of big and little AI-focused cores (NPUs) and a more traditional number-crunching digital signal processor (DSP). Adopting a Google-designed accelerator would be a tacit admission that Samsung’s AI silicon is behind the competition, either in performance or in development tools.

Usually, we’d say absolutely no way, but this isn’t an improbable development. Google and Samsung have worked closely on the Tensor chip series and AI software like Galaxy AI. Licensing additional technology between the two would be business as usual. ASUS already uses Google TPUs for its Coral IoT platform, so why not Samsung?

The benefits would, presumably, be more potent AI number-crunching capabilities for the Galaxy S25 series. Whether or not the rumor turns out to be true (we’re still far from convinced), it has drawn my attention to a key inflection point in the current mobile AI arms race that’s worth diving into.

Someone will win the AI race, at some point

The AI landscape has changed a lot in the past year, and so have the hardware requirements for running the latest models and tools. “Basic” early inferencing and cloud-based tools have quickly given way to generative models that can produce swathes of text, images, and even video on device, but they require vastly more specialist compute power to do so. Mobile processor designers have been devoting more and more transistors to NPUs to keep up, with Google, Samsung, Qualcomm, MediaTek, and Apple all pursuing slightly different approaches.

If the rumors are true, Exynos and Tensor might be closer than ever.

There are always winners and losers in an innovative field, and there’s an element of luck in predicting the direction of silicon development so many years in advance. Part of the problem is that AI workloads vary so much, and figuring out which instructions and bit-depths (numerical precisions such as 32-bit floating point, 16-bit floating point, or 8-bit integer) to support in hardware is difficult while applications remain in flux. The changing landscape can make development costs prohibitive, especially if you aren’t selling your silicon far and wide.
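To make “bit-depth” more concrete, here is a minimal Python sketch, assuming a hypothetical 3-billion-parameter on-device model. It shows two trade-offs chip designers weigh: how much memory the weights need at each precision, and how much accuracy 8-bit affine quantization (the general scheme TensorFlow Lite documents for its int8 models) gives up. The helper names and the simple per-tensor min/max scaling are illustrative assumptions, not how any particular NPU works.

```python
# Sketch: what supporting different bit-depths means for on-device AI.
# The model size and helper names are hypothetical, chosen for illustration.


def weight_footprint_gb(num_params: int, bits: int) -> float:
    """Memory needed just to store the weights, in gigabytes (1e9 bytes)."""
    return num_params * bits / 8 / 1e9


def quantize_int8(values: list[float]) -> tuple[list[int], float, int]:
    """Per-tensor affine quantization, where real ~= scale * (q - zero_point)."""
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)  # range must include 0
    scale = (hi - lo) / 255 or 1.0  # 256 int8 levels span the range; avoid /0
    zero_point = round(-128 - lo / scale)  # chosen so lo maps to about -128
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_int8(q: list[int], scale: float, zero_point: int) -> list[float]:
    """Map int8 codes back to approximate real values."""
    return [scale * (x - zero_point) for x in q]


params = 3_000_000_000  # hypothetical ~3B-parameter model
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_footprint_gb(params, bits):.1f} GB")
# → 12.0 GB, 6.0 GB, 3.0 GB, 1.5 GB

weights = [-0.62, -0.1, 0.0, 0.33, 0.91]  # made-up example values
q, scale, zp = quantize_int8(weights)
restored = dequantize_int8(q, scale, zp)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(f"int8 codes {q}, max error {max_err:.4f} (bound: scale/2 = {scale / 2:.4f})")
```

Each halving of the bit-depth halves the weight memory, at the cost of the rounding error printed at the end. Which of these formats a given chip accelerates is exactly the bet designers have to place years in advance.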

Google’s advantage is that it has a hand in both AI software and hardware development, which perhaps keeps its TPU design a little more in tune with changing workloads than some rivals’. There are bound to be benefits to using AI hardware from the same company that brings Gemini Nano models to various smartphones, after all.

Looking slightly further ahead, the future of AI applications won’t be limited to a few proprietary OEM apps that ship with your phone (sorry, Galaxy AI). Consumers will want to take their AI applications across devices and platforms like any other app, which requires giving third-party developers access to AI accelerator hardware.

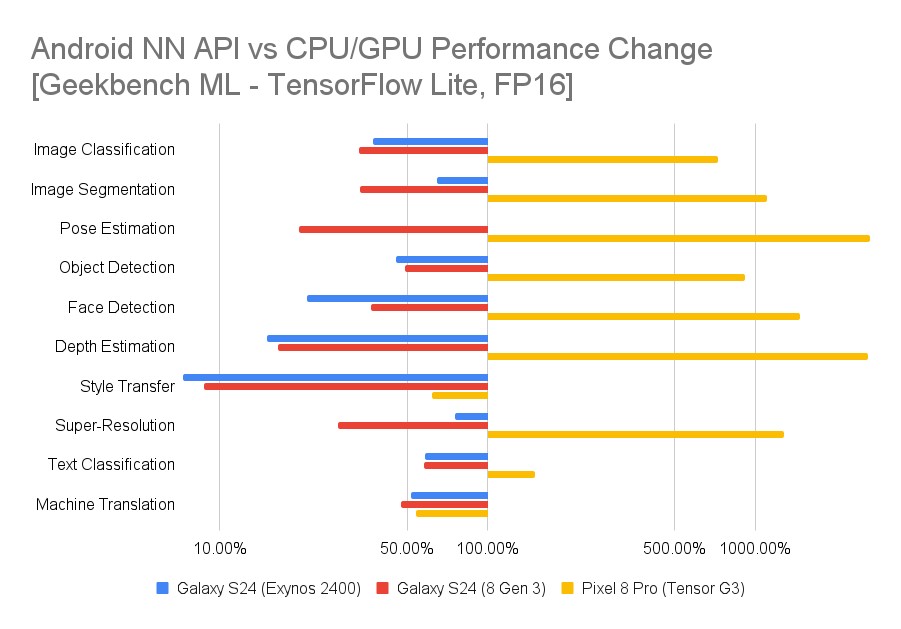

However, when I tested the AI performance of the Exynos and Snapdragon versions of the Samsung Galaxy S24 series using Geekbench ML (admittedly still in development), I found the app couldn’t tap into additional NPU performance via the Android NN API. Running directly on the CPU and GPU was faster, which shouldn’t be the case. Perhaps this is a driver issue, or perhaps some of the best AI phones on the market simply don’t give third-party developers easy access to their AI smarts via a core Android API.

I ran the same test on Google’s Pixel 8 Pro and found a notable uplift in some workloads when using Android NN (except in the 32-bit floating-point tests, which saw no benefit). In other words, developers can run TensorFlow Lite workloads on the Pixel’s TPU for significant performance gains.

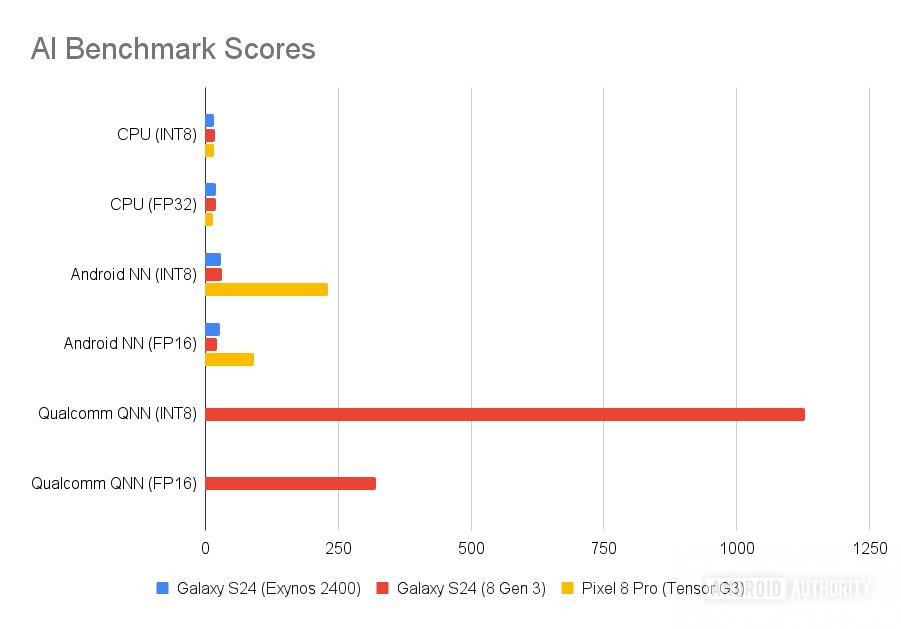

Running Andrey Ignatov’s AI Benchmark (results below) showed a very similar trend. The Android NN API delivered basically no uplift over the CPU cores and scored far below Qualcomm’s QNN framework on the Galaxy S24 Ultra. Interestingly, QNN on the S24 Ultra outclassed the Pixel 8 Pro’s best score by a wide margin, highlighting Qualcomm’s AI compute power. Qualcomm also offers its AI Hub tools, which give developers access to its own on-device AI models. Worryingly, Samsung’s ENN option wouldn’t run on the Exynos Galaxy S24, leaving it stuck with a terrible Android NN-based score.

This isn’t to take issue with the Galaxy S24’s AI performance; it clearly works well enough for Galaxy AI. The point is that developers aren’t going to want to implement three or four different frameworks to deliver the best AI app performance for everyone. This is a broader problem.
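The fragmentation problem is easy to see in code. Below is a minimal Python sketch of the kind of shim an app developer can end up writing: try each acceleration path in turn, skip any that fail to run (as ENN did on the Exynos Galaxy S24), time the ones that work, and keep the fastest, since the Geekbench ML results showed the accelerated path isn’t always quicker than the CPU. The backend labels are just strings, and the run functions are hypothetical stubs, not calls into QNN, ENN, or the Android NN API.

```python
import time
from typing import Callable, Optional

# Each candidate is (label, run_fn). In a real app run_fn would wrap a vendor
# SDK or Android NN API call; here every backend is a hypothetical stub.
Backend = tuple[str, Callable[[list[float]], list[float]]]


def pick_fastest(backends: list[Backend], sample: list[float],
                 runs: int = 5) -> Optional[str]:
    """Return the label of the fastest backend that works, or None."""
    best_name, best_time = None, float("inf")
    for name, run in backends:
        try:
            run(sample)  # warm-up run; also detects backends that fail outright
        except Exception:
            continue  # not usable on this device, so try the next candidate
        start = time.perf_counter()
        for _ in range(runs):
            run(sample)
        elapsed = (time.perf_counter() - start) / runs
        if elapsed < best_time:
            best_name, best_time = name, elapsed
    return best_name


def cpu_backend(x: list[float]) -> list[float]:
    return [v * 2.0 for v in x]  # trivial stand-in for a real model


def missing_vendor_backend(x: list[float]) -> list[float]:
    raise RuntimeError("vendor runtime not available on this device")


def slow_accelerated_backend(x: list[float]) -> list[float]:
    time.sleep(0.01)  # simulates an accelerator path with heavy overhead
    return [v * 2.0 for v in x]


candidates = [("vendor SDK", missing_vendor_backend),
              ("Android NN API", slow_accelerated_backend),
              ("CPU", cpu_backend)]
print(pick_fastest(candidates, [1.0, 2.0, 3.0]))  # → CPU
```

A shim like this hides the symptom, not the cost: someone still has to integrate, test, and maintain each vendor path behind those stubs, which is the burden that puts developers off.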

Hopefully, the above has helped highlight the two halves of the AI silicon challenge: first developing the hardware and then coding the libraries and tools to open up that hardware in a way that developers can and want to build on. That’s a hefty investment, more so when silicon is changing so fast.

AI silicon will eventually coalesce around the most practical model, and I wouldn't bet against Google.

If Google has already done the API legwork with its TPUs, as appears to be the case, that may be reason enough for Samsung to consider Google’s solution over an in-house option for future Exynos chipsets, especially if it believes in a future market for third-party AI apps.

Today’s mobile AI landscape is a Wild West, with brands chasing their own unique hardware and software combinations in a bid to get ahead of the competition. Eventually, the market will settle on the most practical, if not necessarily the most powerful, ideas, and we’ll almost certainly see far more homogeneity between devices. I wouldn’t bet against Google here, even if this shaky AI silicon rumor doesn’t turn out to be true for the Samsung Galaxy S25 series.