Affiliate links on Android Authority may earn us a commission.

AI music on Spotify is exploding: here's how to identify it before you hit play

AI slop has invaded almost every corner of the internet, from social media sites to popular platforms like YouTube. Music streaming services aren’t safe either, with AI-generated music making it onto platforms like Spotify, YouTube Music, and Deezer.

Deezer noted on its blog in September 2025 that 28% of daily uploads on its platform are AI-generated. Meanwhile, Spotify and YouTube users have bemoaned the flood of AI-generated artists on their playlists. While Spotify does not disclose how much of its catalog is AI-generated, confirmed AI artists have racked up millions of monthly listeners on the platform, outperforming many indie bands and artists.

So if you’d prefer that your money goes towards real people, how can you spot AI music on Spotify? There’s no single red flag that will give it away (unless the creator confirms it), but a combination of these features points to AI involvement.


1. Superhuman output

There are a variety of factors that contribute to how prolific an artist or band is. My favorite band, Badflower, releases an album every few years, with the occasional single and EP in between. But AI bands and artists usually release multiple albums within the span of months or even weeks.

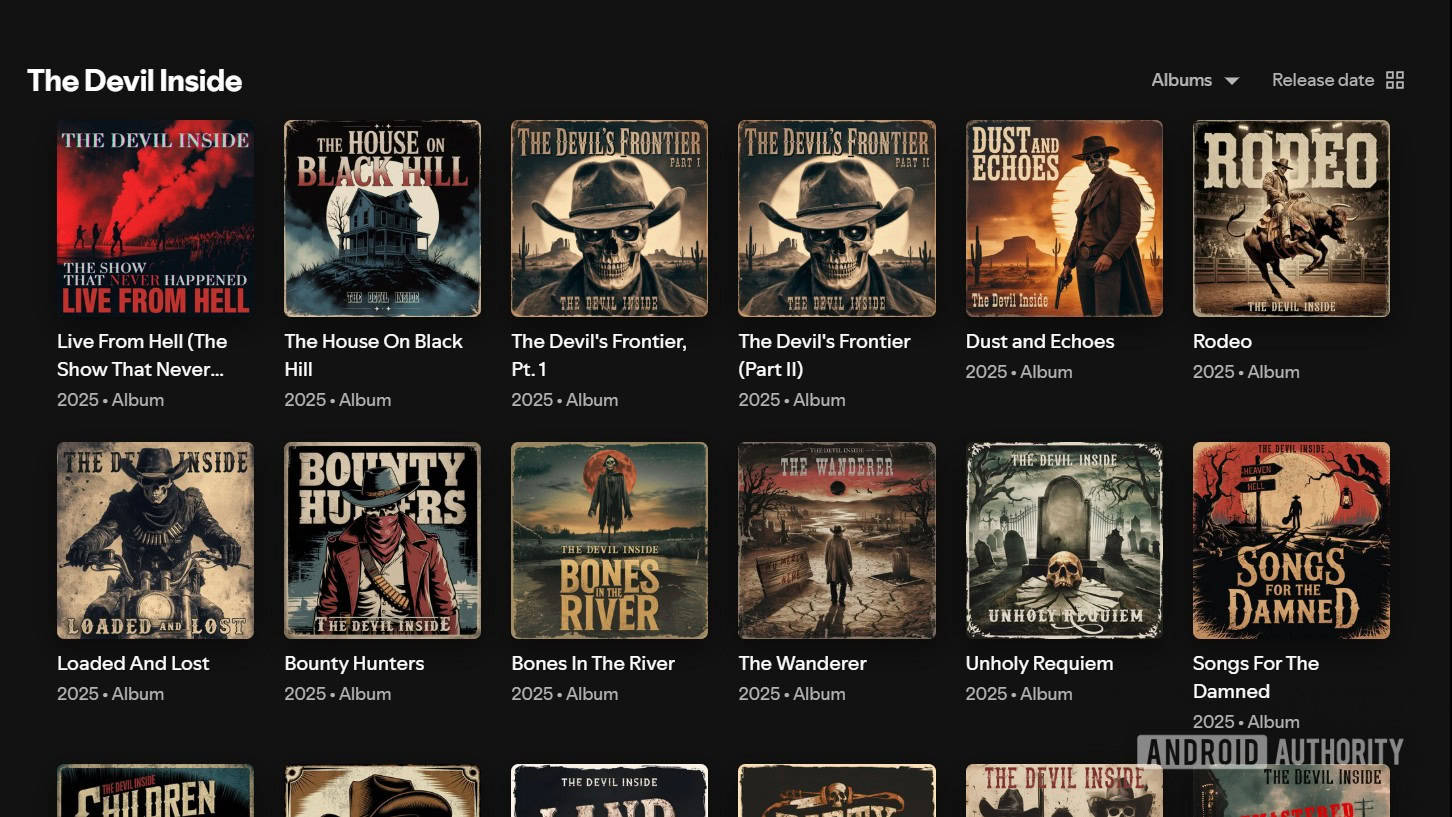

For example, The Devil Inside, an AI project with nearly 200,000 monthly listeners, released 13 albums in 2025. The Velvet Sundown, another confirmed AI band, falls on the more conservative side of the spectrum, having released three albums in its first year on the platform (2025). Aventhis, an AI artist with over 1.2 million monthly listeners, also released three albums in 2025, though some appear to have been removed from the platform.

AI artists have a much higher output than real musicians, with even low-output AI projects rivaling the most prolific human artists.

This kind of output rivals even the most prolific human artists. There are exceptions, of course, because James Brown existed and released five albums in 1968. But if I’m in doubt, I also look at the years that they released their albums.

AI-generated albums like these generally do not predate 2024, because the tools used to produce this content at scale were not widely available before then. Suno AI launched in December 2023, and Udio launched in April 2024.

There is always the chance that a person comes along who is very prolific and also just a new artist, but it’s rare.

2. A lack of live shows, media interviews, or social media posts

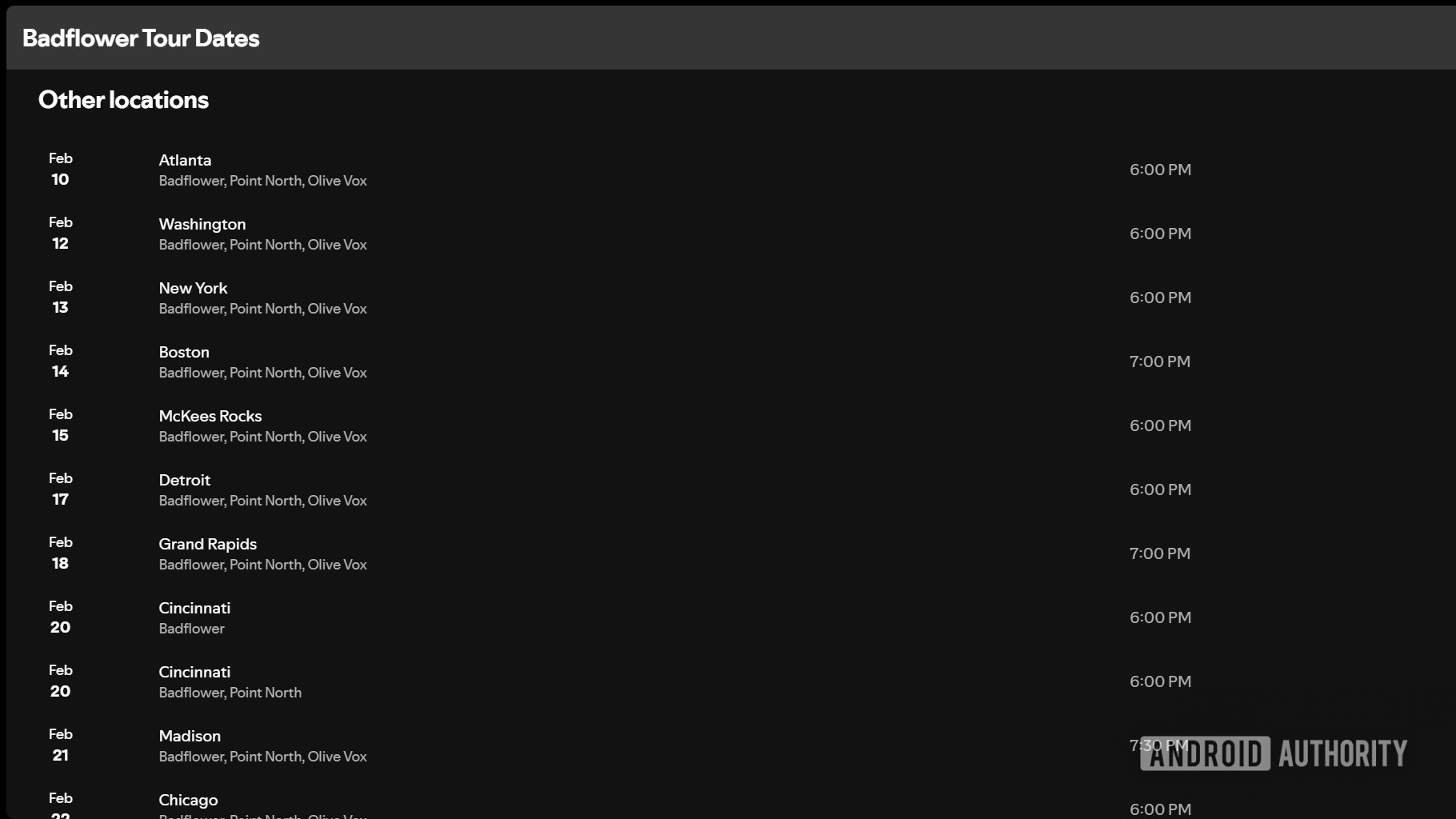

Another way to discern AI artists from real ones is to check the Spotify page for live shows. I’ve found that even very niche, indie artists will have live shows scheduled. Meanwhile, AI artists will have millions of listeners and no shows.

None of the AI projects I visited had live shows scheduled — because after all, there are no people who can perform. This is particularly noticeable for pages that are very successful. For example, Breaking Rust is an AI project with over 2.5 million monthly listeners but no live shows.

The only exception was The Devil Inside — but this seems to be an error. The page listed one live show but linked to a page for a band called Devil Inside that does rock music covers.

AI artists don't have live shows, interviews with media outlets, or an authentic social media presence.

When in doubt, look for media coverage or at least a verifiable social media presence. After all, an artist who is just starting out or has a limited budget may not have the funds or fame for live shows. And some artists, like Taylor Swift, don’t have their shows listed on Spotify.

But I’ve found that many niche artists still have live shows and media coverage, with only the occasional exception. In these cases, the person usually has a social media presence and songs spanning back years.

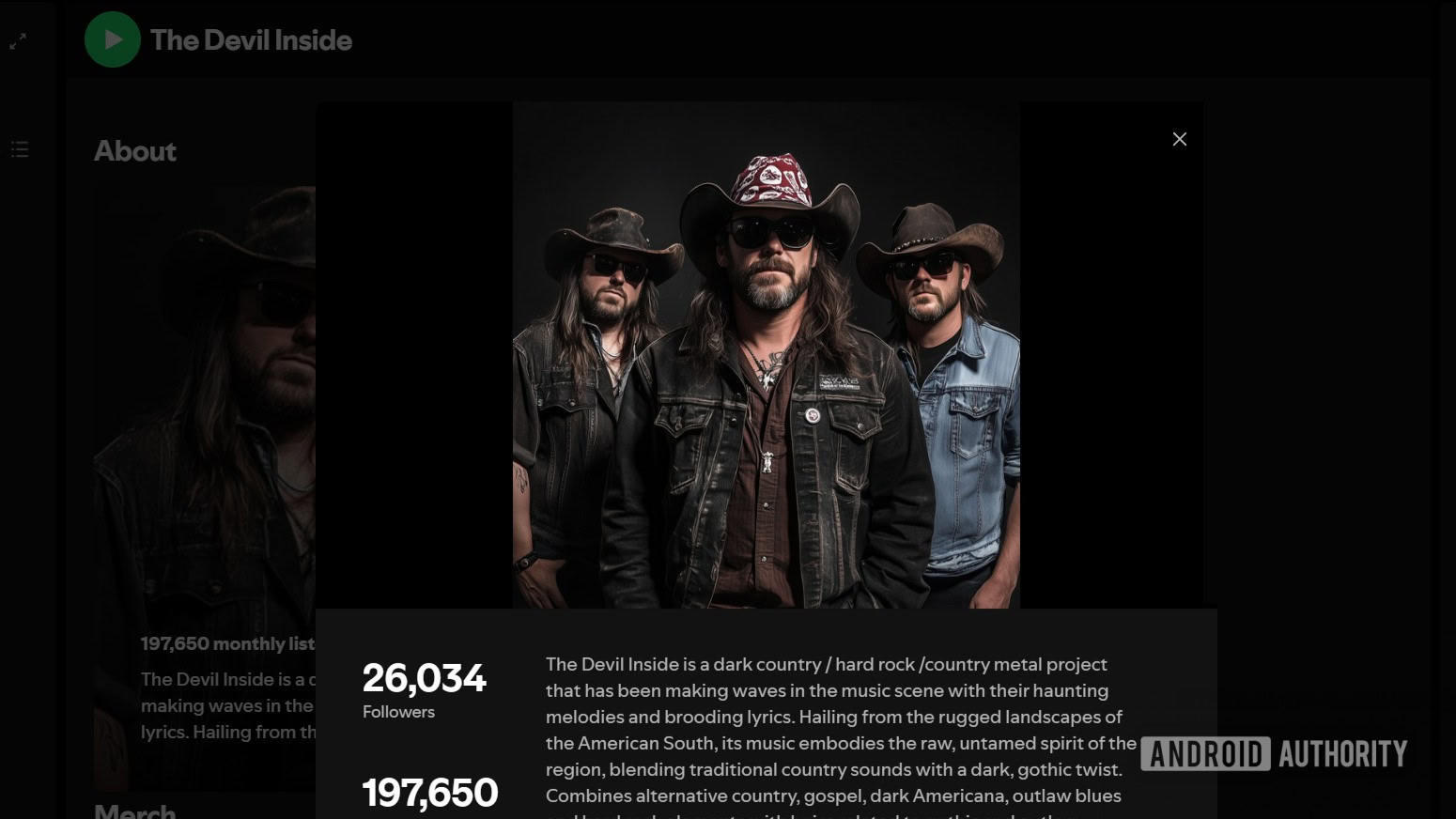

3. AI-generated imagery

AI-generated images are another red flag when it comes to artists and bands on Spotify. This applies to their artist pages as well as their social media pages. For some AI music pages, you can immediately recognize the overly smooth visuals in their bio. This is the case for The Devil Inside.

However, it’s getting more difficult to spot AI images, especially ones that use actual images as a basis. Even AI-generated videos trick some people, especially when there’s limited movement of the subject.

Some of these projects avoid images of the “performers” altogether or delete older images that might contradict newer ones.

AI artists may use AI-generated imagery on profiles and social media, though this is getting more difficult to verify.

For example, Sienna Rose (with over 4.3 million monthly listeners) has been flagged as likely AI-generated. However, the person or people managing the Spotify page reportedly scrubbed earlier albums that depicted an AI-generated redhead on album covers.

You can still see these incarnations on Tidal and Deezer, along with newer music featuring a different singer. But the social media pages linked to the Spotify page now feature someone claiming to be Sienna Rose and denying accusations that the music is AI-generated.

However, suspicions continue because of other red flags — as well as the fact that the short video clips don’t show the woman actually speaking or moving more than a few inches.

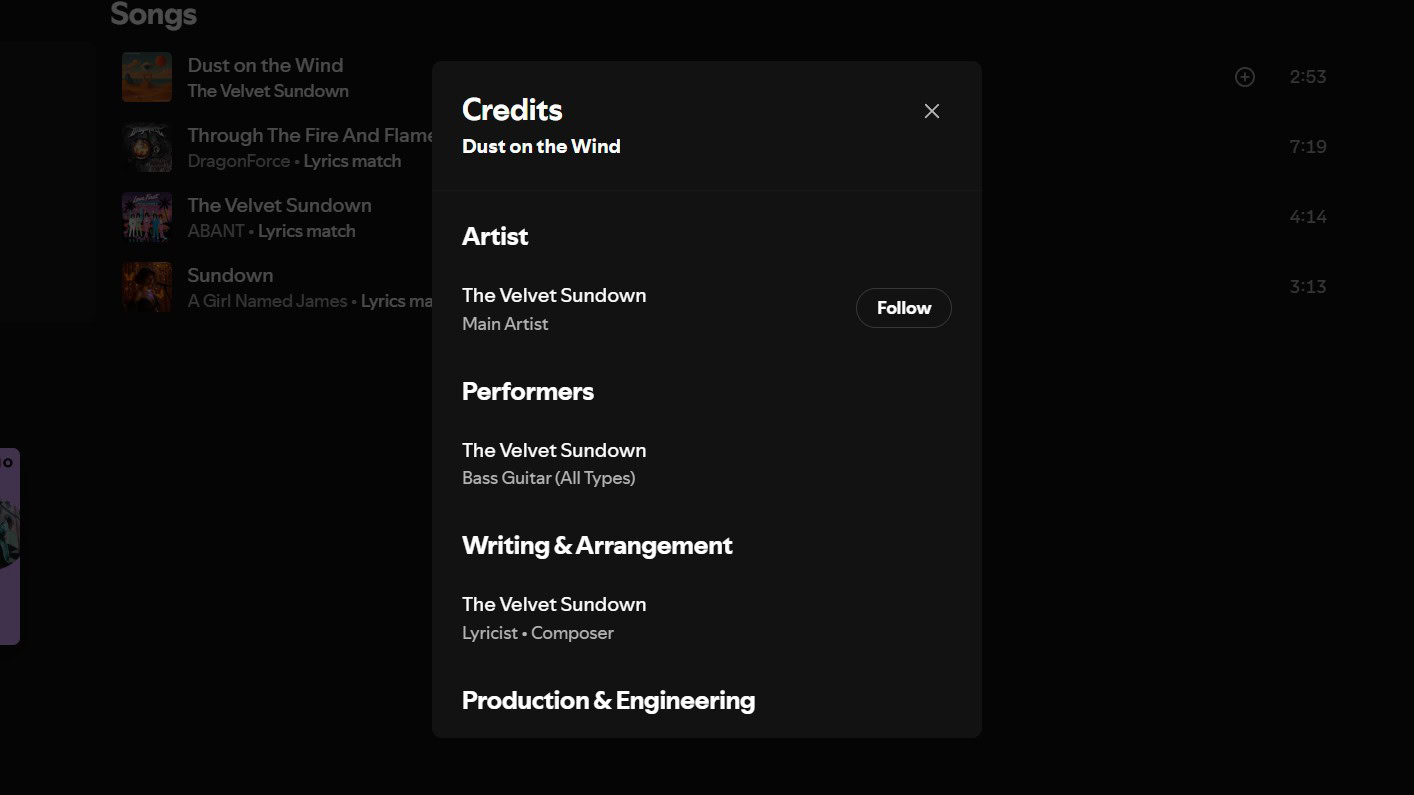

4. Credits with a single creator (or AI acknowledgement in the bio)

For me, one of the biggest signs that music is actually AI-generated lies in the credits. It is quite rare for a song to have a single composer and performer. When it comes to bands, I haven’t encountered an instance where each member isn’t credited.

For AI-generated tracks, however, there is usually only one person listed in the credits. This is because many of these projects are produced by a single person who generates lyrics and music using AI prompts.

Sometimes, a solo indie artist writes, sings, and performs their own music and appears as the only person in the credits. But even in these cases, I’ve found that they have songs with other collaborators (along with other signs that they are real people).

Despite producing dozens or more songs within small timeframes, AI artists usually only have one creator listed in the credits.

On the other hand, an AI artist will release dozens of songs within a few weeks with the work attributed to a single person.

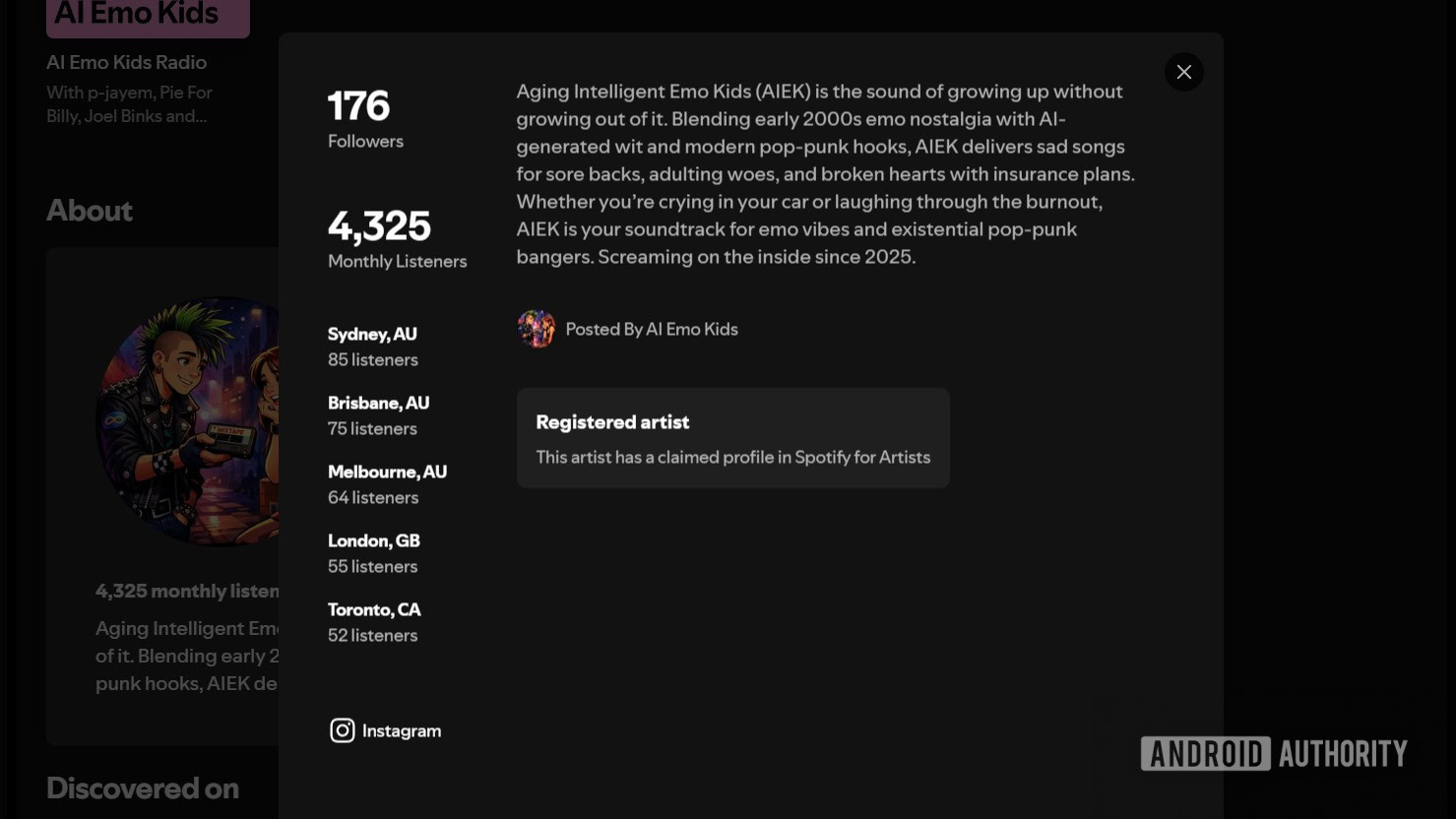

It’s also worth checking the Spotify artist’s biography to see whether the creator confirms that it’s an AI project. Not every creator is trying to trick listeners, and some even include the word “AI” in the artist name. Others have added mentions of AI, or described their projects or characters as “illustrative,” after being flagged as AI.

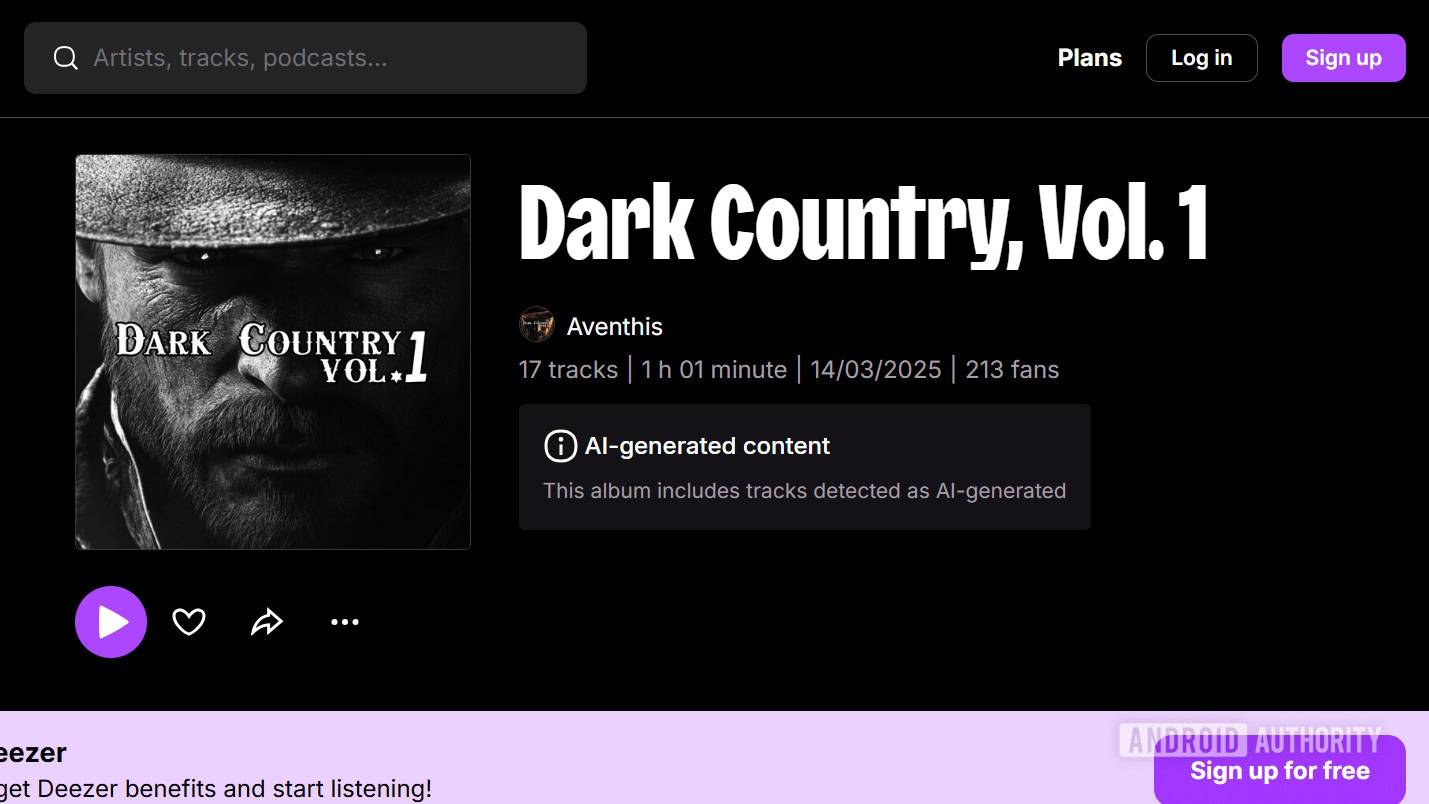

5. The music is flagged on other platforms

Spotify doesn’t flag AI-generated content, but Deezer does. If you visit an album on Deezer, the platform includes a label if its system detects AI-generated songs. So if you suspect that someone on Spotify is creating AI-generated music, it’s worth checking if they have an album on Deezer that is flagged as AI.

This doesn’t mean Deezer’s system is foolproof, but it is the most reliable way for consumers to at least see what AI-detection tools are currently flagging. Other detection tools exist, but most aren’t open to the public.

I actually appreciate Deezer’s transparency around AI-generated music, as it allows us to make an informed choice. Its more detailed release dates also make it easier to check an artist’s output at a glance, whereas Spotify shows only the release year on albums by default.

Deezer flags albums that likely include AI-generated content, making it a useful resource.

There are some publicly available AI detectors, but these aren’t all that reliable. The biggest limitation is that most require you to upload a copy of the song, which isn’t feasible when you’re using a streaming service. Those that accept links rely on short track previews and aren’t very accurate.

Besides Deezer, there’s also the site Soul Over AI. The site is based on community reports, so there’s always the chance that people have incorrectly reported certain music as AI-generated. However, I would say it’s useful for seeing whether a band or artist has acknowledged AI use or what red flags others have spotted.

6. Generic, repetitive music and lyrics

Most of the signs I’ve discussed have nothing to do with the actual music. But those who have encountered AI music in their recommendations say that the blandness of the lyrics and music style tipped them off.

I looked at the lyrics of songs by some AI bands, and some lines are definitely questionable. At other times, though, they’re no blander than the vapid songs I sometimes encounter on the radio. And while some lyrics do sound like nonsense, one of the biggest pop songs of the ’90s included the line “I wanna really, really, really wanna zigazig, ah.”

Bland, repetitive songs and lyrics are usually one of the first things that tip people off, but it's also the least reliable sign.

However, I’ve included it as a sign to look out for, as it is one of the first things that raises suspicion when an artist lands in someone’s recommendations.

If some songs from an artist sound off because they’re repetitive, it’s worth investigating whether you’re getting AI slop so that you can block the artist from future recommendations. Then again, if you keep getting recommendations for a mediocre artist or band you don’t enjoy, it’s worth blocking them whether they’re real or not.


It’s natural to want a simple way to identify AI music, but the reality is that you have to take multiple clues in context together. Once you’re aware of these red flags, it becomes easier to spot.

However, at the scale that AI-generated music is making its way onto streaming platforms, services need to give users more control over their experience. Opting out of AI-generated music in recommendations is one way. I also think that services should label suspected AI-generated music and require artists to declare AI use.

If you don’t mind AI music, that’s perfectly fine. But listeners should be able to make informed choices. Right now, most platforms don’t allow us to do that, and we have to rely on identifying these AI musicians ourselves. If Spotify doesn’t introduce ways for users to take control of their listening experience, I may end up looking at Spotify alternatives instead.
