Affiliate links on Android Authority may earn us a commission.

AI raced out of the blocks at Google I/O 2018, and there's much more to come

Published on May 12, 2018

If there’s one major theme to take away from 2018’s Google I/O, it’s that AI is at the forefront of everything the company is doing. From the unsettlingly impressive Duplex demonstration to the new third-generation Cloud TPUs and the increasingly integrated features in Android P, machine learning is here to stay, and Google is pulling further ahead of its competitors in this space every year.

At the event, a selection of high-profile Googlers also shared their thoughts on the broader topics around AI. A panel discussion between Google’s Greg Corrado, Diane Greene, and Fei-Fei Li, and a presentation by Alphabet Chairman John Hennessy, offered deeper insight into how recent breakthroughs and the thinking at Google are going to shape the future of computing and, by extension, our lives.

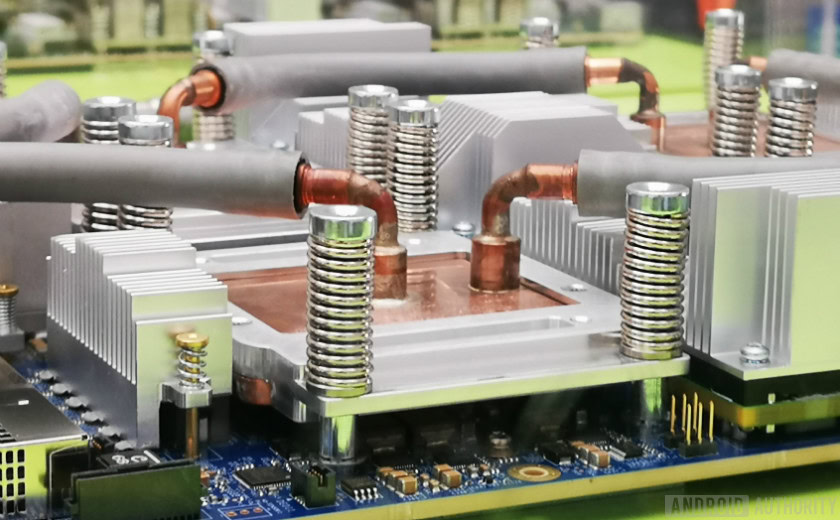

Google’s ambitions for machine learning and AI require a multipronged approach: dedicated machine learning hardware in the cloud in the form of its third-generation Cloud TPU, developer tools such as TensorFlow, and plenty of research taking place both at Google and in conjunction with the wider scientific community.

Hardware on a familiar track

John Hennessy, a veteran of the computer science industry, saved his talk for the final day of I/O, but it was every bit as pertinent as Sundar Pichai’s keynote. Its key themes will be familiar to anyone who has followed tech at almost any point in the past 10 years: the decline of Moore’s Law, the limits of performance efficiency and battery power, and yet an ever-growing need for more computing power to solve increasingly complex problems.

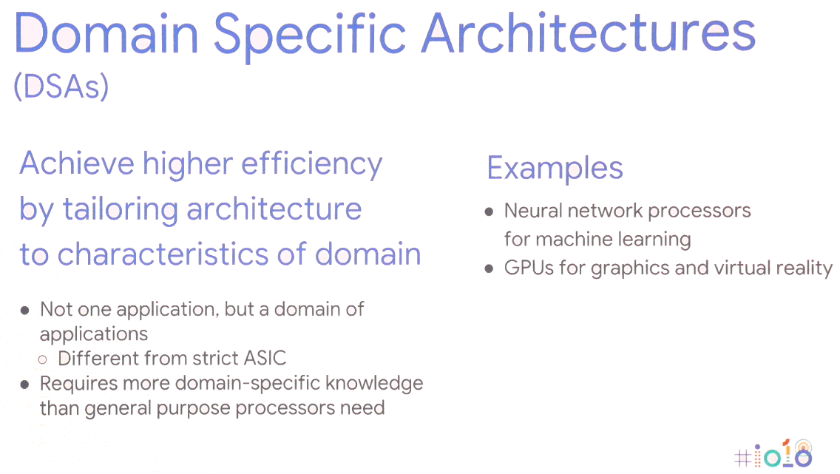

Hennessy’s solution requires a new approach to computing: domain-specific architectures. This means tailoring hardware architectures to specific applications to maximize performance and energy efficiency.

Of course, this isn’t a brand-new idea. We already use GPUs for graphics tasks, and high-end smartphones increasingly include dedicated neural network processors to handle machine learning tasks. Smartphone chips have been heading this way for years, but the approach is now scaling up to servers too. For machine learning, hardware is increasingly being optimized around lower-precision 8- or 16-bit data types rather than 32- or 64-bit floating point, and around a small number of dedicated, highly parallel instructions such as large matrix multiplications. The performance and energy benefits compared with generic CPUs with large instruction sets, and even with parallel GPU compute, speak for themselves. Hennessy sees products continuing to make use of both these heterogeneous SoCs and off-die discrete components, depending on the use case.
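To make the precision point more concrete, here is a minimal sketch of the kind of 8-bit arithmetic this hardware accelerates. It assumes simple symmetric quantization with one scale per matrix (values mapped into the int8 range -127 to 127) and integer accumulation; real chips and frameworks use a variety of schemes, so the function names and the scheme itself are illustrative assumptions, not how any particular NPU or TPU works.

```python
"""Sketch: int8 matrix multiply with wide integer accumulation (symmetric quantization assumed)."""
from typing import List, Tuple

Matrix = List[List[float]]
IntMatrix = List[List[int]]


def quantize(m: Matrix) -> Tuple[IntMatrix, float]:
    """Map floats to integers in [-127, 127] using a single scale for the whole matrix."""
    max_abs = max(abs(x) for row in m for x in row) or 1.0  # avoid dividing by zero
    scale = max_abs / 127.0
    q = [[max(-127, min(127, round(x / scale))) for x in row] for row in m]
    return q, scale


def int8_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Quantize both inputs, multiply with integer arithmetic, then rescale to floats."""
    qa, sa = quantize(a)
    qb, sb = quantize(b)
    n, k, p = len(qa), len(qb), len(qb[0])
    out = []
    for i in range(n):
        row = []
        for j in range(p):
            # int8 x int8 products are summed in a wider integer (int32 on real hardware).
            acc = sum(qa[i][t] * qb[t][j] for t in range(k))
            row.append(acc * sa * sb)  # a single float multiply per output to dequantize
        out.append(row)
    return out


def float_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Full-precision reference result for comparison."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


if __name__ == "__main__":
    a = [[0.5, -1.2, 3.3], [2.0, 0.1, -0.7]]
    b = [[1.0, 0.0], [-0.5, 2.5], [0.25, -1.5]]
    for approx_row, exact_row in zip(int8_matmul(a, b), float_matmul(a, b)):
        print([f"{x:.3f} vs {y:.3f}" for x, y in zip(approx_row, exact_row)])
```

The 8-bit result lands close to, but not exactly on, the full-precision one. For many neural network workloads that small error is an acceptable trade for doing the bulk of the work with cheap integer multiply-accumulate units.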

However, this shift toward a broader range of hardware types brings new problems of its own: growing hardware complexity, the risk of undermining the high-level programming languages that millions of developers rely on, and further fragmentation of platforms like Android.

Machine learning is a revolution, it’s going to change our world.
John Hennessy - Google I/O 2018

Dedicated machine learning hardware is useless if it’s prohibitively difficult to program for, or if its performance is wasted by inefficient programming languages. Hennessy gave the example of matrix multiplication: code written in C ran 47x faster than the same computation in the more user-friendly Python, and a series of further optimizations, ending with Intel’s AVX vector extensions, pushed the speedup over Python as high as 62,806x. But simply demanding that professionals switch to lower-level programming isn’t a viable option. Instead, he suggests that compilers will need a rethink to ensure that programs run as efficiently as possible regardless of the programming language. The gap may never close fully, but even closing 25 percent of it would greatly improve performance.
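Much of that kind of speedup comes from restructuring the same arithmetic, which is exactly the sort of work a smarter compiler could automate. As a rough illustration, the sketch below applies one such transformation, loop tiling (blocking), by hand to a naive matrix multiply. It is written in Python only for readability: the payoff from tiling comes from better cache reuse in compiled code, so this Python version should not be expected to get meaningfully faster. The default tile size of 2 is an arbitrary assumption chosen for the small example.

```python
"""Sketch: the same matrix multiply, naive and loop-tiled (blocked)."""
from typing import List

Matrix = List[List[float]]


def matmul_naive(a: Matrix, b: Matrix) -> Matrix:
    """Textbook i-j-k triple loop."""
    n, k, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i in range(n):
        for j in range(p):
            for t in range(k):
                c[i][j] += a[i][t] * b[t][j]
    return c


def matmul_tiled(a: Matrix, b: Matrix, tile: int = 2) -> Matrix:
    """Same arithmetic, iterated tile by tile so that, in a compiled language,
    each small block of a and b is reused while it is still in cache."""
    n, k, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i0 in range(0, n, tile):
        for t0 in range(0, k, tile):
            for j0 in range(0, p, tile):
                # min() handles edge tiles when sizes aren't multiples of the tile size.
                for i in range(i0, min(i0 + tile, n)):
                    for t in range(t0, min(t0 + tile, k)):
                        a_it = a[i][t]  # hoisted out of the innermost loop
                        for j in range(j0, min(j0 + tile, p)):
                            c[i][j] += a_it * b[t][j]
    return c
```

Both functions compute the same product; only the loop structure differs. A compiler that performs this kind of rewrite automatically spares the programmer from doing it by hand.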

This also extends to the way Hennessy envisions future chip design. Rather than relying on hardware scheduling and power-hungry, speculative out-of-order execution, compilers may eventually play a greater role in scheduling machine learning tasks. Letting the compiler decide ahead of time which operations are processed in parallel, rather than working it out at runtime, is less flexible but could result in better performance.
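The idea of compile-time scheduling can be sketched in a few lines. Below, a hypothetical compiler pass takes a small dependency graph of operations and, before the program ever runs, groups them into "bundles" whose members don't depend on each other, loosely in the spirit of VLIW-style static scheduling. The operation names and the simple as-soon-as-possible policy are illustrative assumptions; real schedulers also account for instruction latencies and the number of available execution units.

```python
"""Sketch: compile-time ("static") as-soon-as-possible scheduling of a dependency graph."""
from typing import Dict, List


def schedule_asap(deps: Dict[str, List[str]]) -> List[List[str]]:
    """Group operations into steps. Every op in a step depends only on ops in earlier
    steps, so the ops within one step could be issued in parallel."""
    step: Dict[str, int] = {}

    def level(op: str, visiting: frozenset = frozenset()) -> int:
        if op in step:
            return step[op]
        if op in visiting:
            raise ValueError(f"cycle detected at {op!r}")
        preds = deps.get(op, [])  # ops with no entry are treated as having no inputs
        step[op] = 1 + max((level(p, visiting | {op}) for p in preds), default=-1)
        return step[op]

    for op in deps:
        level(op)
    bundles: List[List[str]] = [[] for _ in range(max(step.values(), default=-1) + 1)]
    for op in sorted(step):  # sorted for a deterministic order within each bundle
        bundles[step[op]].append(op)
    return bundles


if __name__ == "__main__":
    # (a * b) + (c * d): the two multiplies are independent and can share a step.
    graph = {"mul1": ["load_a", "load_b"], "mul2": ["load_c", "load_d"],
             "add": ["mul1", "mul2"]}
    for i, bundle in enumerate(schedule_asap(graph)):
        print(i, bundle)
```

Because the schedule is fixed before execution, the hardware no longer needs power-hungry logic to discover this parallelism on the fly. The price is that the schedule can't adapt to conditions known only at runtime.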

The added benefit is that smarter compilers should also be able to map code effectively to the many different architectures out there, so the same software runs as efficiently as possible across different pieces of hardware with different performance targets.
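One way to picture this retargeting is a compiler that lowers the same high-level operation differently for each chip. In the toy sketch below, a dot product is split into chunks that match each target's vector width. The target names and widths are hypothetical, and each chunk's `sum` merely stands in for a single vector multiply-accumulate instruction. The result is identical on every target; only the number of simulated vector operations changes.

```python
"""Sketch: lowering one high-level op (a dot product) for targets with different vector widths."""
from typing import Dict, List, Tuple

# Hypothetical targets and their vector widths (elements processed per instruction).
TARGET_VECTOR_WIDTH: Dict[str, int] = {"scalar_cpu": 1, "simd_cpu": 8, "npu": 32}


def lower_dot(x: List[int], y: List[int], target: str) -> Tuple[int, int]:
    """Return (result, number of simulated vector ops) for the given target."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    width = TARGET_VECTOR_WIDTH[target]
    total, vector_ops = 0, 0
    for start in range(0, len(x), width):
        # Each chunk stands in for one multiply-accumulate instruction of this width;
        # the final chunk may be partial when len(x) isn't a multiple of width.
        chunk = zip(x[start:start + width], y[start:start + width])
        total += sum(a * b for a, b in chunk)
        vector_ops += 1
    return total, vector_ops


if __name__ == "__main__":
    x, y = list(range(100)), [2] * 100
    for target in TARGET_VECTOR_WIDTH:
        print(target, lower_dot(x, y, target))
```

The program's meaning stays fixed while the lowering adapts to each target. That separation is what would let the same software run well on a phone NPU, a desktop CPU, or a server accelerator.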

The potential shifts in software don’t stop there. Operating systems and kernels may also need to be rethought to better cater to machine learning applications and the wide variety of hardware configurations that will likely end up in the wild. Even so, the hardware already on the market today, like smartphone NPUs and Google’s Cloud TPUs, is very much a part of Google’s vision for how machine learning will play out in the long term.

AI as integral as the internet

Machine learning has been around for a long time, but it’s only recent breakthroughs that have made today’s “AI” trend the hot topic that it is. The convergence of more powerful compute hardware, big data to drive statistical learning algorithms, and advances in deep learning algorithms have been the driving factors. However, the big machine learning problem, at least from a consumer standpoint, seems to be that the hardware is already here but the killer applications remain elusive.

Google doesn’t seem to believe that the success of machine learning hinges on a single killer application, though. Instead, a panel discussion between Google’s Greg Corrado, Diane Greene, and Fei-Fei Li suggested that AI will become an integral part of new and existing industries, augmenting human capabilities and eventually becoming as commonplace as the internet in both its accessibility and importance.

Today, AI adds spice to products like smartphones, but the next step is to integrate its benefits into the core of how products work. Googlers seem particularly keen for AI to reach the industries that can benefit humanity the most and to help answer the most challenging questions of our time. There was a lot of talk at I/O about the benefits for medicine and research, but machine learning is likely to appear in a wide variety of industries, including agriculture, banking, and finance. For all the attention Google has been giving to Assistant’s smart capabilities, it’s the more subtle, behind-the-scenes use cases across industries that could end up making the biggest changes to people’s lives.

Knowledge about AI will be key to businesses, just as servers and networking are understood by everyone from IT departments to CEOs today.

Eventually, AI could be used to help take humans out of hazardous working environments and improve productivity. But as the Google Duplex demo showcased, this could end up replacing humans in many roles too. As these potential use cases become more advanced and contentious, the machine learning industry is going to work together with lawmakers, ethicists, and historians to ensure that AI ends up having the desired impact.

Although a lot of industry-based machine learning will happen behind the scenes, consumer-facing AI is going to keep advancing too, with a specific focus on a more humanistic approach. In other words, AI will gradually get better at understanding human needs, and eventually human characteristics and emotions, in order to communicate better and help solve problems.

Lowering the bar to development

Google I/O 2018 demonstrated just how far ahead of its competitors the company is in machine learning. For some, the prospect of a Google monopoly on AI is worrying, but fortunately the company is working to ensure that its technology is widely available and increasingly easy for third-party developers to implement. AI will be for everyone, if Googler sentiments are to be believed.

Advances in TensorFlow and TensorFlow Lite are already making it simpler for programmers to code their machine learning algorithms, so more time can be spent optimizing the task and less time sorting out bugs in the code. TensorFlow Lite is already optimized to run inference on smartphones, and on-device training is planned for the future too.

Google’s developer-friendly ethos can also be seen in the announcement of the new ML Kit development platform. With ML Kit there’s no need to design custom models: programmers simply feed in their data, and Google’s platform supplies a suitable model for the app. The Base APIs currently support image labeling, text recognition, face detection, barcode scanning, and landmark detection, with smart reply to follow. ML Kit will likely expand to encompass additional APIs in the future.

Machine learning is a complex subject, but Google is aiming to lower the barriers to entry.

Machine learning and basic AI are already here, and although we might not have seen a killer application yet, the technology is becoming increasingly fundamental across a huge range of Google’s software products. Between TensorFlow and ML Kit, Android Neural Networks API (NNAPI) support, and improved Cloud TPUs for training, the company is set up to power the huge growth in third-party machine learning applications that is right around the corner.

Google is undoubtedly an AI-first company.