Affiliate links on Android Authority may earn us a commission.

Google Camera: All the features that you get on Google Pixel smartphones

Google Pixel smartphones have long been some of the best Android smartphones you can buy, but their place at the top is surprising if you take a deeper look at the spec sheet. Pixels rarely have top-of-the-line specifications and often trail behind most market leaders. However, thanks to some nifty software magic, Google extracts the most value possible out of its hardware. We can see this in action with the Google Camera app on Pixel smartphones, which enables some cool photography features. It is easily one of the best Android camera apps out there. Here are all the features that you get on the Google Camera app.

What is Google Camera?

Google Camera is the default camera application shipped on Google Pixel smartphones. Most OEMs ship their own camera app as part of their Android skin, and Google is no different.

What makes Google Camera unique is that it can extract the best results out of dated camera hardware often found on Pixel smartphones. The Google Camera app contains most of the algorithms responsible for Google’s software magic on photos.

These software optimizations are so potent that third-party modders regularly attempt to port the latest Google Camera app from Pixel devices to other Android smartphones, improving the photography prowess of their non-Pixel hardware.

The Google Camera app was initially released to the public on the Google Play Store. But those days are long gone. Google Camera is now exclusive to Pixel smartphones. If you spot the app on a non-Pixel smartphone, it will likely be a third-party Google Camera port (often called “GCam” in this context).

Google Camera v9.0 vs. v9.1

Google recently overhauled the Google Camera UI for the first time since the Pixel 4. The new UI is available in version 9.0 and above. Notably, this version of the app requires Android 14, so if your Pixel isn’t eligible for the Android 14 update, you won’t be able to use Google Camera v9.0.

The biggest change in the new update is a toggle that switches between photo and video modes. Depending on which one you pick, you see the options available for photos or for videos. Below are the options listed for both modes in the new Google Camera app.

- Photo: Action Pan, Long Exposure, Portrait, Photo (main), Night Sight, Panorama, Photo Sphere (missing on the Pixel 8 series)

- Video: Pan, Video (main), Slow motion, Time Lapse, Blur

Note that the Photo Sphere mode has been removed from the Pixel 8 series.

Elsewhere, the new Google Camera app moves the photo and video settings overlays to the middle of the screen. You can access them from the settings button in the bottom left corner of the app.

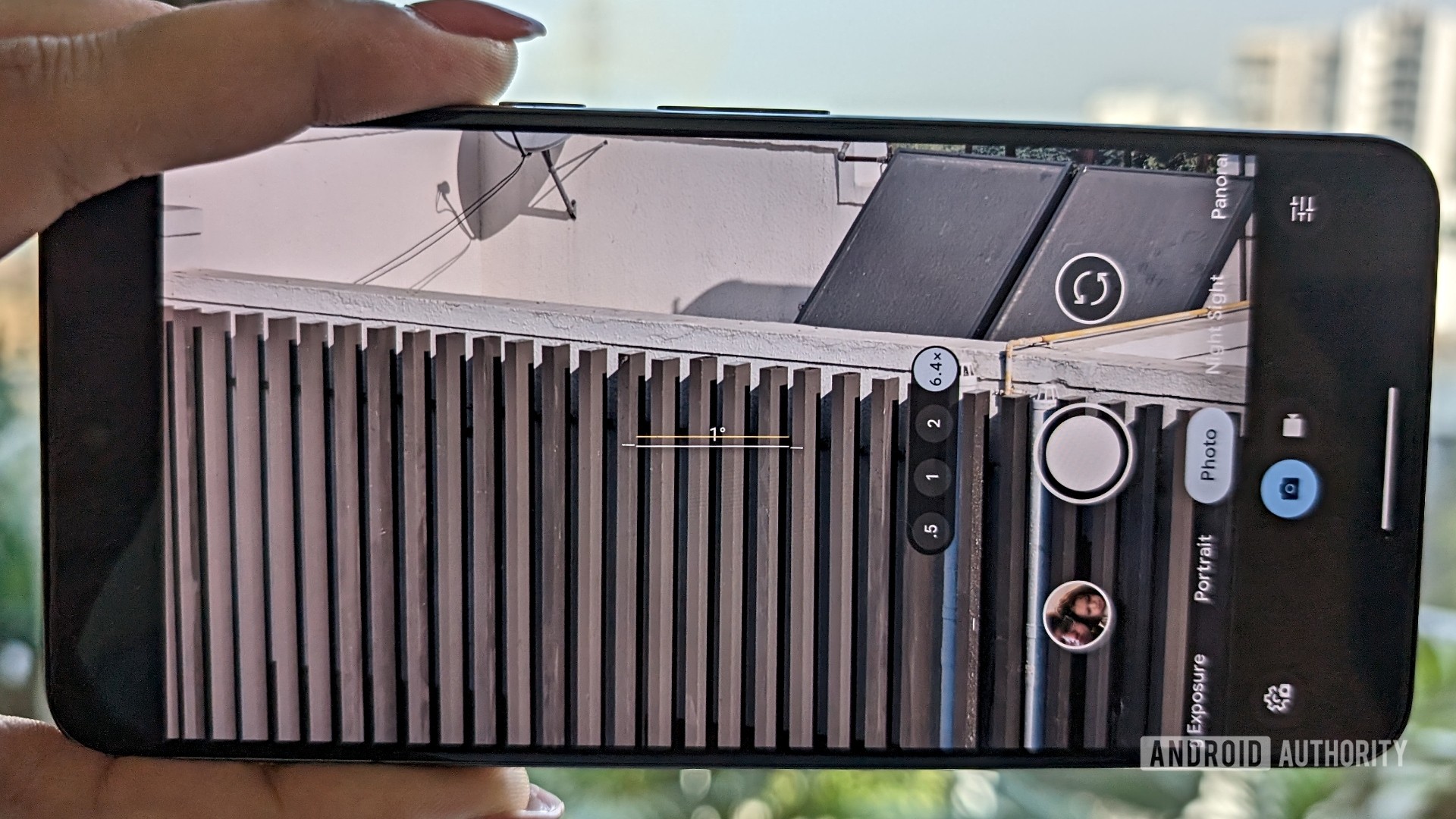

Google Camera v9.1 on Pixel 8 series

The Pixel 8 series gets all of these changes and then some. The new Google flagships ship with Google Camera v9.1, which moves the photo and video settings to the bottom of the screen. Unfortunately, this version also removes the brightness, shadow, and color temperature sliders from the main camera UI. You now have to open these sliders from a new slider menu at the bottom right of the screen.

The Pixel 8 Pro also exclusively features a new Pro mode, a first for the Pixel phone lineup. It lets you quickly change the resolution of photos, capture in RAW, and manually select the lens (Wide, Ultrawide, or Telephoto) you want to use.

Google Camera: Features

Google Camera has many features for photos and videos, though photo features far outnumber video ones. Below, we explain all the Google Camera features you get on Pixel phones.

HDR Plus

The highlight feature of the Google Camera app is HDR Plus, which was added around the release of the Nexus 6. HDR Plus is the engine behind HDR imaging in the Google Camera app. In its early announcement posts in 2014, the company said it uses “computational photography” for HDR Plus.

When you press the shutter button in the Google Camera app, HDR Plus captures a rapid burst of three to 15 pictures and combines them into one.

In low-light scenes, HDR Plus takes a burst of shots with short exposure times, aligns them algorithmically, and then replaces each pixel with the average color at that position across the burst. Using short exposures reduces blur, while averaging shots reduces noise.
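The averaging step can be illustrated with a toy simulation. This is a minimal sketch, not Google’s implementation: it assumes the frames are already perfectly aligned, models each frame as a single pixel’s true brightness plus random Gaussian noise, and the helper names (`capture_burst`, `merge_burst`, `merged_noise`) are hypothetical. Averaging N frames should cut the noise by roughly a factor of √N.

```python
import random
import statistics

def capture_burst(true_value, n_frames, noise_sigma, rng):
    """Simulate n aligned short-exposure readings of one pixel (toy noise model)."""
    return [true_value + rng.gauss(0.0, noise_sigma) for _ in range(n_frames)]

def merge_burst(frames):
    """Replace the pixel with its average value across the burst."""
    return sum(frames) / len(frames)

def merged_noise(n_frames, noise_sigma=10.0, trials=2000, seed=0):
    """Estimate the noise (standard deviation) left after merging n frames."""
    rng = random.Random(seed)
    merged = [merge_burst(capture_burst(100.0, n_frames, noise_sigma, rng))
              for _ in range(trials)]
    return statistics.pstdev(merged)

for n in (1, 4, 9, 15):
    # Theory predicts 10 / sqrt(n); the estimates should land close to that.
    print(f"{n:2d} frames: noise ≈ {merged_noise(n):.2f}")
```

Short exposures keep each frame sharp but noisy; the merge is what trades that noise away.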

In scenes with high dynamic range, HDR Plus follows the same technique: it underexposes each frame to avoid blowing out the highlights, then merges enough frames to reduce noise in the shadows.

However, because every frame in the burst is underexposed, the shadows can still remain noisy. This is where the Google Camera app uses exposure bracketing: it captures frames at two different exposures and combines them.
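A simplified per-pixel rule conveys the idea behind combining two exposures. This sketch is an illustrative assumption, not Google’s published algorithm: it uses a noise-free linear model with pixel values normalized so the sensor clips at 1.0, an exposure ratio of 4, and hypothetical helpers `expose` and `merge_bracketed`. Where the long exposure is unclipped it is used (on a real sensor it would have cleaner shadows); where it clipped, the short exposure supplies the highlight detail.

```python
CLIP = 1.0  # normalized sensor saturation level in this toy model

def expose(scene, gain):
    """Capture a frame: scale scene radiance by the exposure gain, then clip."""
    return [min(value * gain, CLIP) for value in scene]

def merge_bracketed(short_frame, long_frame, exposure_ratio):
    """Combine a short and a long exposure into one linear image.

    Unclipped long-exposure pixels are scaled back to the short exposure's
    brightness; clipped ones fall back to the short exposure.
    """
    merged = []
    for short_px, long_px in zip(short_frame, long_frame):
        if long_px < CLIP:
            merged.append(long_px / exposure_ratio)
        else:
            merged.append(short_px)
    return merged

scene = [0.01, 0.05, 0.2, 0.6]      # true radiance: deep shadow to bright sky
ratio = 4.0
short_frame = expose(scene, 1.0)
long_frame = expose(scene, ratio)   # the 0.6 pixel clips at 1.0 here
print(merge_bracketed(short_frame, long_frame, ratio))
```

A real pipeline would blend smoothly near the clipping point instead of switching at a hard threshold; the threshold keeps the sketch short.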

HDR Plus with Bracketing is the highlight feature of the Google Camera app.

Exposure bracketing gets more complicated when combined with Zero Shutter Lag (ZSL, more on this feature below). HDR Plus reconciles the two by capturing frames both before and after the shutter press. One of the shorter-exposure frames serves as the reference frame to avoid clipped highlights and motion blur. The other frames are aligned to this reference, merged, and then de-ghosted through a spatial merge algorithm that decides, per pixel, whether image content should be merged or not.
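The per-pixel merge decision can be sketched with a simple threshold test. This is a minimal illustration under stated assumptions, not Google’s spatial merge algorithm: the frames are already-aligned 1-D rows, `merge_with_deghosting` and its `threshold` parameter are hypothetical, and a hard cutoff stands in for the more sophisticated, noise-aware weighting a real pipeline would use. A pixel from another frame is merged only if it roughly agrees with the reference frame; large differences are treated as motion and rejected, which prevents ghosting.

```python
def merge_with_deghosting(reference, others, threshold):
    """Merge aligned frames into the reference frame, pixel by pixel.

    A pixel from another frame only contributes if it is within `threshold`
    of the reference; larger differences are treated as motion (a ghost).
    """
    merged = []
    for i, ref_px in enumerate(reference):
        samples = [ref_px]
        for frame in others:
            if abs(frame[i] - ref_px) <= threshold:
                samples.append(frame[i])
        merged.append(sum(samples) / len(samples))
    return merged

reference = [0.50, 0.50, 0.50]      # the short-exposure reference frame
others = [
    [0.52, 0.48, 0.95],             # pixel 2 differs a lot: something moved
    [0.48, 0.52, 0.10],
]
print(merge_with_deghosting(reference, others, threshold=0.1))
```

Pixels 0 and 1 get averaged across all three frames, while pixel 2 keeps only the reference value, so the moving object does not leave a semi-transparent ghost.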

If all of this sounds complicated and confusing to you as a user, fret not. The Google Camera app doesn’t require you to worry about these details. You just have to click photos; Google’s algorithms will handle the rest.

Here are some camera samples from the Pixel 8 Pro’s primary camera:

Night Sight

Night Sight is essentially HDR Plus for very low light. Because so little light is available, exposures can be much longer and bursts can contain more frames. A night shot therefore takes longer to capture, and the camera has to compensate for more motion.

You can expect a Night Sight photo to take about one to three seconds, and we’d advise you to wait another second after pressing the shutter button. Pixel smartphones will automatically enable Night Sight when it is dark, though you can manually toggle the mode if necessary. Note that Night Sight does not work if you have Flash turned on.

On newer Pixel smartphones, the denoising step of HDR Plus in Night Sight uses new neural networks that run on the Tensor processor, which has made Night Sight shots faster.

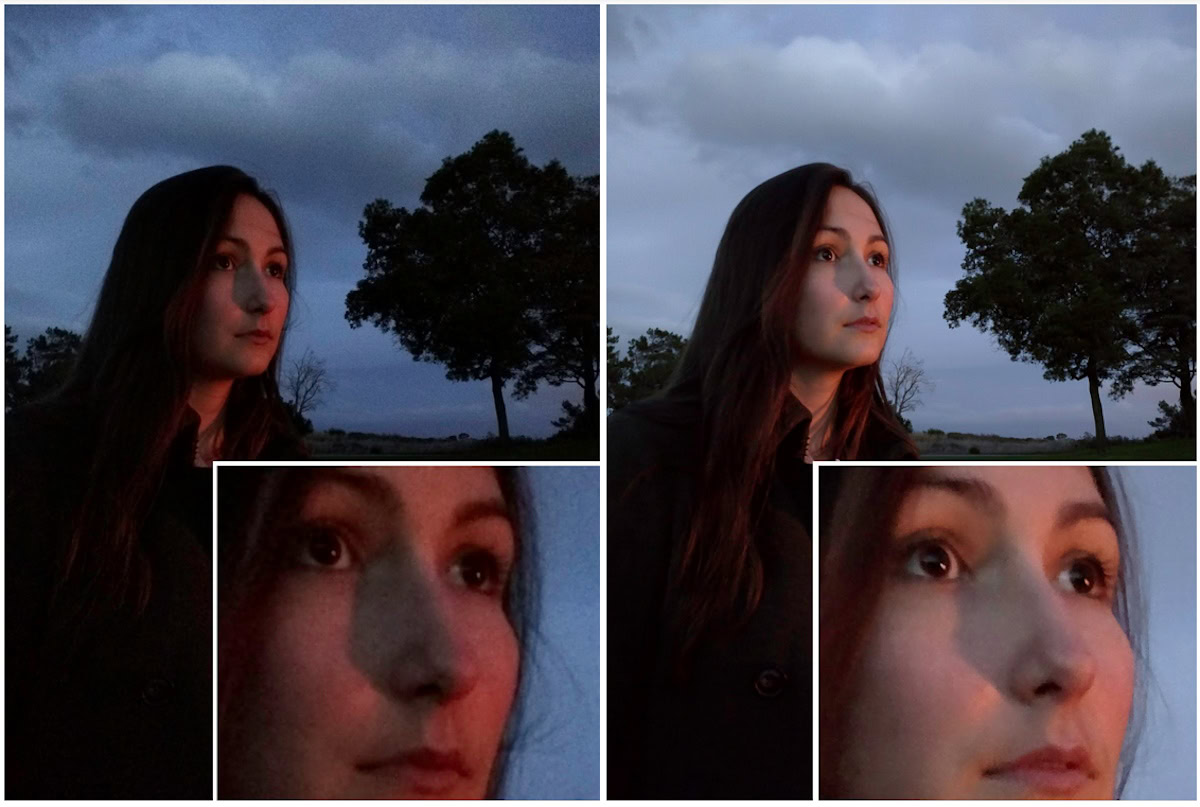

Here are some Night Sight samples from the Pixel 8 Pro:

On the new Pixel 8 series, you can now record longer timelapse videos in low light. Set your phone down steadily, preferably on a tripod, and set the Night Sight option in Time Lapse mode to Auto. You can then shoot a long timelapse video with the added benefit of Night Sight processing, which gives you much better quality than the regular Time Lapse mode alone.

Astrophotography

Astrophotography on Google Camera takes the principles behind long-exposure HDR Plus and pushes them even further than Night Sight.

You need to mount your Pixel smartphone on a tripod and be in practically pitch-black conditions (away from city lights) with your phone pointed toward the clear sky. Once your Pixel phone determines the conditions to be right, it will show a message “Astrophotography on.”

In this mode, the Pixel phone takes 16 photos with an exposure of 16 seconds each and merges them into one detailed photograph. You can also create a cool one-second astrophotography timelapse from the frames of this capture.
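Using the figures above, the total exposure time of an Astrophotography capture works out as follows. This is plain arithmetic on the article’s numbers; the actual capture time can vary with conditions.

```python
FRAMES = 16              # number of photos, per the figures above
SECONDS_PER_FRAME = 16   # exposure time of each photo, in seconds

total_seconds = FRAMES * SECONDS_PER_FRAME
minutes, seconds = divmod(total_seconds, 60)
print(f"Total exposure: {total_seconds} s ({minutes} min {seconds} s)")
# prints "Total exposure: 256 s (4 min 16 s)"
```

A capture spanning several minutes is why the phone has to sit still on a tripod for the whole shot.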

Below is an example of an Astrophotography shot taken with the Pixel 7 Pro:

Zero Shutter Lag (ZSL)

Zero Shutter Lag has long been an invisible feature in the Google Camera experience. The philosophy with Zero Shutter Lag is self-explanatory: What you click should be immediately captured. Users should be able to click the shutter button and forget about the image if they wish. The task should be done right at the button press, requiring no further waiting for processing to complete.

However, this is easier said than done, especially considering features like HDR Plus (combining a burst of images) and pixel binning (combining adjacent pixels) are inherently compute-intensive.

ZSL gets around this by capturing frames before the shutter is pressed! In some situations, like HDR Plus, longer exposures are captured after the shutter is pressed, though this experience is often hidden from the viewfinder.
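A common way to capture frames “before” the shutter press is to keep the most recent viewfinder frames in a fixed-size ring buffer. The sketch below assumes that design; the class and method names are hypothetical and do not come from Google Camera or the Android camera APIs. When the buffer is full, each new frame evicts the oldest one, so a shutter press can return frames that already exist.

```python
from collections import deque

class ZeroShutterLagBuffer:
    """Toy ring buffer of recent viewfinder frames (hypothetical model)."""

    def __init__(self, capacity):
        # A deque with maxlen silently drops the oldest frame when full.
        self.frames = deque(maxlen=capacity)

    def on_new_frame(self, frame):
        """Called for every viewfinder frame, before any shutter press."""
        self.frames.append(frame)

    def on_shutter_press(self, n):
        """Return the n most recent frames, captured before the press."""
        if n <= 0:
            return []
        return list(self.frames)[-n:]

buffer = ZeroShutterLagBuffer(capacity=8)
for timestamp in range(20):          # the viewfinder streams frames 0..19
    buffer.on_new_frame(f"frame@{timestamp}")
print(buffer.on_shutter_press(3))    # ['frame@17', 'frame@18', 'frame@19']
```

The trade-off is memory: the buffer must hold full-resolution frames continuously, which is one reason ZSL gets harder as capture pipelines grow more demanding.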

ZSL used to be a more vital feature when phone processors were slow and needed a lot of time to process an image. Zero Shutter Lag is no longer advertised as prominently, as it is now practically standard across the smartphone ecosystem, at least in good lighting conditions.

ZSL is also fairly challenging to orchestrate in current times, where our reliance on computational photography is at an all-time high. It gets further eclipsed by features like Night Sight and Astrophotography that intentionally take multiple seconds to capture photos.

Super Res Zoom

Historically, Pixels didn’t have the latest camera hardware, so Google had to rely on software magic to meet customer expectations. For instance, Google resisted adding a telephoto camera for optical zoom on the Pixel for quite some time and instead developed Super Res Zoom, a feature that mimics optical zoom using digital zoom.

Super Res Zoom was introduced with the Pixel 3. On that phone, the feature merged many frames into a higher-resolution picture (multi-frame super-resolution) instead of upscaling a crop of a single image, as conventional digital zoom does. This technique let the single-camera Pixel 3 capture detail at 2x that was surprisingly good for digital zoom.

Super Res Zoom salvages the results of digital zoom.

With Super Res Zoom, you get more detail if you pinch to zoom by 2x before taking a photo than if you digitally crop the image by 2x after taking it.

When Google finally made the jump to a telephoto lens with the Pixel 4 series, it used HDR Plus techniques on the telephoto lens to achieve even better results.

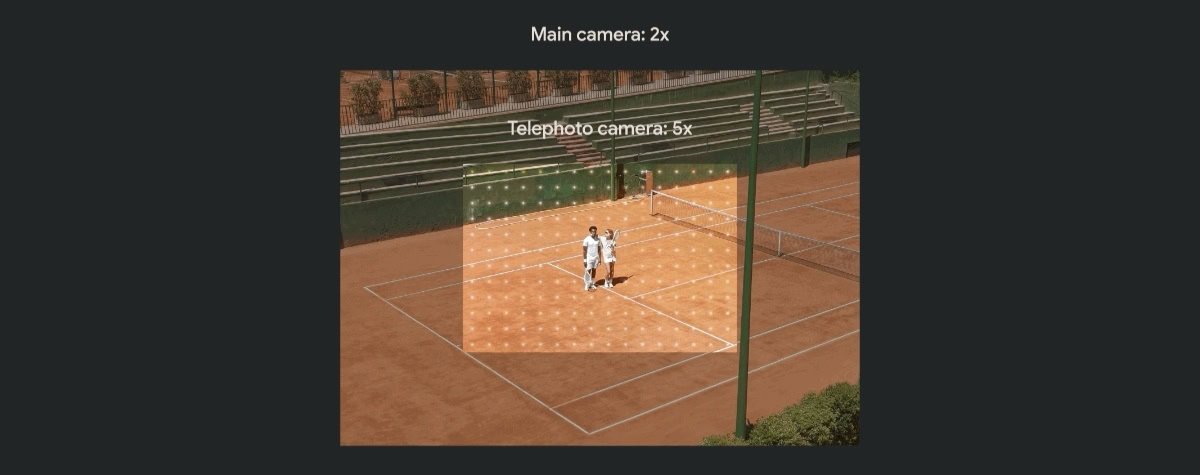

Google switched lanes more recently, adapting to the larger primary and telephoto sensors on the Pixel 7 Pro and Pixel 8 Pro for its Super Res Zoom feature. For 2x zoom, Google crops into the inner portion of the 50MP primary sensor to produce 12.5MP photos. It then applies remosaicing algorithms and uses HDR Plus with bracketing to reduce noise.
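The 12.5MP figure follows from how cropping works: zooming in by a factor k keeps 1/k of the sensor’s width and 1/k of its height, so the pixel count drops by k². A quick check, with `cropped_megapixels` as a hypothetical helper:

```python
def cropped_megapixels(sensor_mp, zoom_factor):
    """Megapixels left after a centered crop that zooms in by zoom_factor.

    The crop keeps 1/zoom_factor of the width and 1/zoom_factor of the
    height, so the pixel count shrinks by zoom_factor squared.
    """
    return sensor_mp / zoom_factor ** 2

print(cropped_megapixels(50, 2))  # 12.5, matching the 2x figure above
```

Because the crop discards three quarters of the sensor’s pixels at 2x, the remosaicing and bracketed HDR Plus steps are what keep the result sharp and clean.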

For 5x zoom, Google uses a crop of the 48MP telephoto sensor and the same techniques. For zoom outside of 2x and 5x, Google uses Fusion Zoom, a machine-learning algorithm that merges images from multiple cameras into one.

Once again, you, as a user, do not have to worry about any of this. Just pinch to zoom in, press the shutter button, and let the Camera app figure out the rest.

Here are some zoom samples from the Pixel 8 Pro and Pixel 7 Pro:

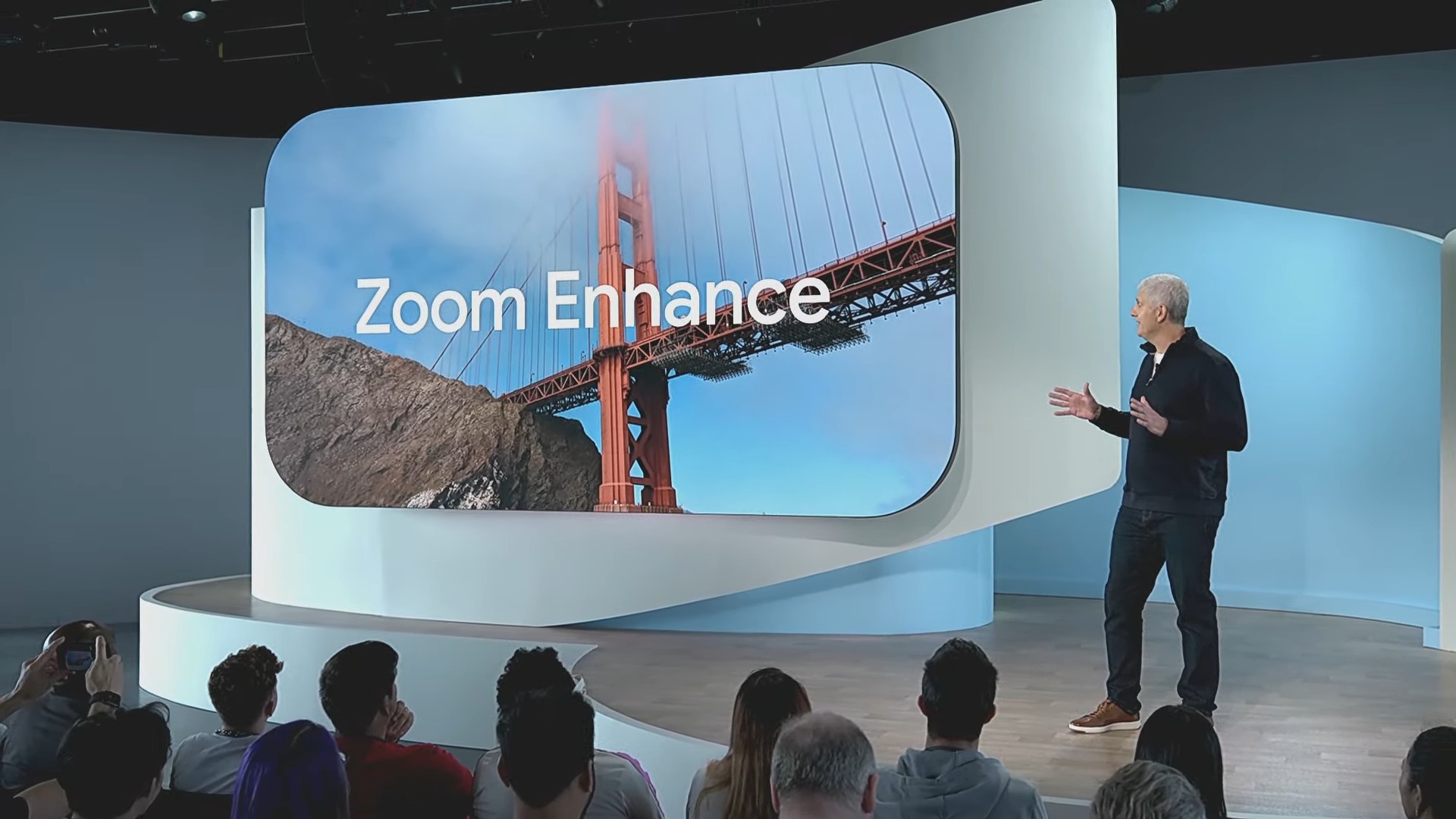

Zoom Enhance

Google revealed the Zoom Enhance feature during the Pixel 8 series launch. The upcoming feature will let you further enhance the detail of a zoomed-in image using generative AI. It relies on a custom generative AI image model that runs on-device on the Pixel 8 Pro. Google’s mention of “on-device” suggests that you’ll be able to use Zoom Enhance offline too. The feature will come to the Pixel 8 Pro first, and there’s no word yet on it trickling down to older Pixels.

Portrait Mode

Portrait Mode takes photos with a shallow depth of field, so the subject draws all the attention while the background stays blurred.

Smartphones usually use two cameras located next to each other to capture depth information (just like our eyes!). Without this depth information, the phone would have difficulty separating the subject from the background.

However, Google managed to do an excellent job with Portrait Mode on the Pixel 2 with just one camera on the front and one on the back. The company used computational photography and machine learning to overcome these hardware limitations.

Portrait mode on single-camera setups (like the front camera) starts with an HDR Plus image. The Camera app then uses machine learning to generate a segmentation mask that identifies common subjects like people and pets.

If depth information is available in some way (for example, when multiple cameras are available, as on the back), the app also generates a depth map, which helps it apply the blur accurately.
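Once a segmentation mask exists, the final compositing step can be sketched as a per-pixel blend between the sharp image and a blurred copy. This is a minimal illustration under assumptions, not Google’s renderer: it works on a single row of grayscale pixels, takes the mask as given (values from 0.0 for background to 1.0 for subject), and uses a simple box blur; `box_blur` and `portrait_composite` are hypothetical helpers. A depth map would let the blur strength vary with distance instead of being uniform.

```python
def box_blur(row, radius):
    """Blur a 1-D row of pixel values with a simple moving average."""
    blurred = []
    for i in range(len(row)):
        lo, hi = max(0, i - radius), min(len(row), i + radius + 1)
        window = row[lo:hi]
        blurred.append(sum(window) / len(window))
    return blurred

def portrait_composite(row, subject_mask, radius=2):
    """Keep subject pixels sharp and swap background pixels for blurred ones.

    Fractional mask values blend the sharp and blurred pixels, which softens
    the transition at the subject's edges.
    """
    blurred = box_blur(row, radius)
    return [m * sharp + (1.0 - m) * soft
            for sharp, soft, m in zip(row, blurred, subject_mask)]

row = [0, 100, 0, 100, 50, 50, 50, 50]   # busy background, then the subject
mask = [0, 0, 0, 0, 1, 1, 1, 1]          # 1.0 where the mask found the subject
print(portrait_composite(row, mask))
```

The quality of the final photo depends mostly on the mask: any background pixel the model mislabels as subject stays sharp, which is why accurate segmentation matters so much for single-camera Portrait Mode.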

Real Tone

Real Tone is Google’s effort to make photography more inclusive for darker skin tones. It attempts to counter the long-standing skin tone bias in photography, giving people of color a chance to be photographed more authentically.

As part of the Real Tone initiative, Google has improved face detection in low-light conditions. The AI and machine learning models that Google trains are now fed a larger, more diverse data set. The company has also improved how white balance and automatic exposure work to better accommodate a broader range of skin tones.

Starting with the Pixel 7 series, the company also uses a new color scale (called the “Monk Skin Tone Scale”) that better reflects the full range of skin tones.

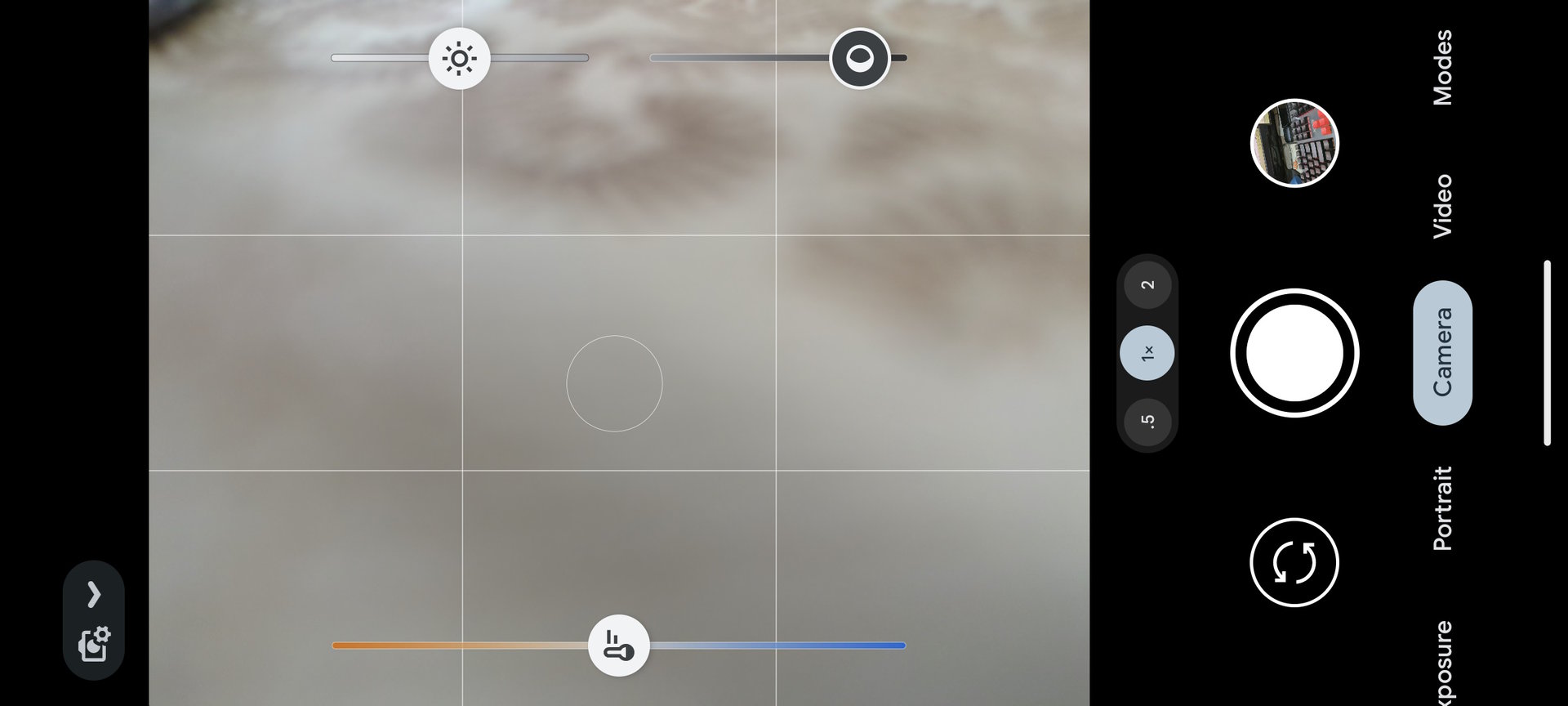

Dual Exposure Controls and Color Temperature Control

Very little thinking is involved in clicking a good photo on a Pixel smartphone, but if you want some control over your photos and don’t have access to the Pro mode on the Pixel 8 Pro, Google gives you three settings that you can play with:

- Dual Exposure Controls:

  - Brightness: Changes the overall exposure. It can be used to recover more detail in bright skies or to intentionally blow out the background if needed.

  - Shadows: Changes only the dark areas. It manipulates the tone mapping instead of the exposure, which is helpful in high-contrast scenes, letting users boost or reduce shadows.

- Color Temperature Control: Changes the scene’s color temperature to make it warmer or cooler.

You can access all three sliders by long-pressing the viewfinder in Photo mode on the Google Camera app. These settings coexist with HDR Plus, so you can still use computational photography features while adjusting specific settings to better suit your taste. In Google Camera v9.1, these settings live in a separate menu at the bottom right of the app screen.

Computational RAW

Google adopted “Computational RAW” with the Pixel 3, though the term isn’t frequently used in their marketing.

With the Google Camera app, you can save RAW image files (.dng) alongside processed image files (.jpg). RAW files traditionally allow you a wider range of adjustments for settings like exposure, highlights, shadows, and more.

But the trick here on the Google Camera app is that these RAW image files aren’t entirely raw and untouched. Google processes the RAW file through its computational photography pipeline before saving it.

RAW on Google Camera is Computational RAW.

This approach may alarm purists and hobbyists who want an untouched, unprocessed RAW file. But try as you may, you will find it extremely difficult to get a better result by processing the RAW file yourself than Google’s heavily processed JPGs.

Computational RAW is the middle ground: it applies some of Google’s software magic and lets you apply some of your own on top. The results combine Google’s processing expertise with your own vision.

Macro Focus

Macro Focus is one feature that relies heavily on hardware. It uses the Pixel 7 Pro’s autofocus-equipped ultrawide lens, letting you focus on subjects as close as 3cm away. When you get close to a subject, the Pixel 7 Pro switches from the main camera to the ultrawide and lets you take a macro photo.

You can also take a macro focus video on the Pixel 7 Pro.

Long Exposure Mode

Long Exposure mode on Pixel smartphones offers a creative take on long exposure shots. It simulates a longer exposure and adds a blur effect to either the background or the moving parts of the image.

Most newer Pixel phones have two blur effects: Action Pan and Long Exposure. Pixel 6a, Pixel 7a, and Pixel Fold users do not have the Action Pan effect.

Action Pan works best for moving subjects against a stationary background (where the background gets blurred), while Long Exposure is better for motion-based scenes (where the moving object is blurred).
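One way to simulate these effects computationally is to average a burst of frames; the difference between the two lies in what the frames are aligned to. This sketch is an illustrative assumption, since the article does not describe how Pixel phones produce these effects: it uses 1-D grayscale rows, treats the subject’s motion as known exactly (a real camera would have to estimate it), and aligns frames with a circular shift. Averaging unaligned frames smears the moving subject (Long Exposure); aligning on the subject first keeps it sharp and smears the background instead (Action Pan).

```python
def average_frames(frames):
    """Average frames pixel by pixel, like one long exposure."""
    n = len(frames)
    return [sum(column) / n for column in zip(*frames)]

def shift_left(frame, offset):
    """Circularly shift a 1-D frame left by `offset` pixels (toy alignment)."""
    return frame[offset:] + frame[:offset]

background = [10, 20, 10, 20, 10, 20, 10, 20]   # textured, stationary scene
frames = []
for t in range(4):
    row = list(background)
    row[2 + t] = 100        # a bright subject moves one pixel right per frame
    frames.append(row)

# Long Exposure: average as captured; the subject smears, background stays sharp.
long_exposure = average_frames(frames)

# Action Pan: undo the subject's motion first; subject stays sharp, background blurs.
action_pan = average_frames([shift_left(f, t) for t, f in enumerate(frames)])

print(long_exposure)
print(action_pan)
```

In the Long Exposure result, the subject’s brightness is spread across four pixels while the untouched background keeps its 10/20 pattern; in the Action Pan result, the subject stays at full brightness and the background pattern averages out to a flat blur.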

Motion Photos

Google Camera has a Motion Photos feature, which records a short, silent video when capturing a photo. It adds life to a still image and captures candid moments before and after a shot. Apple offers a similar feature on iOS called Live Photos.

Motion Photos is different from Motion Mode (the mode that houses Action Pan and Long Exposure). You can export Motion Photos as videos.

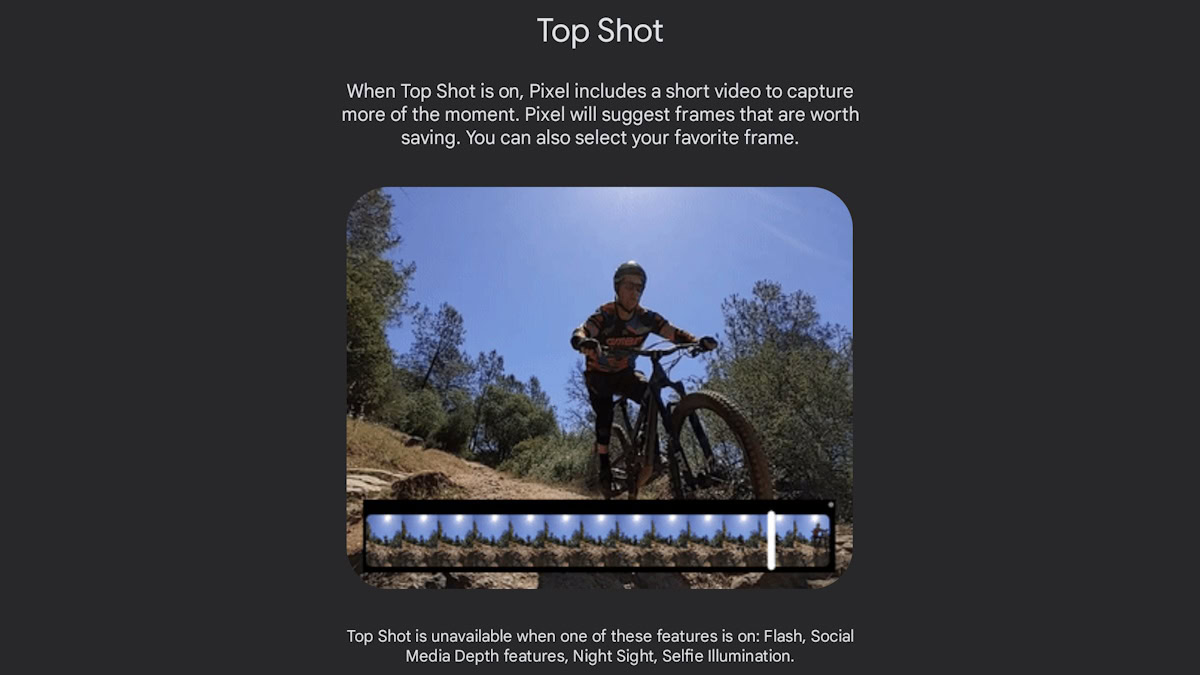

Top Shot

If one thing is clear so far, it’s that the Google Camera app takes a lot of photos all the time, even when you click just one. Top Shot puts these extra frames to use; to enable it, set Top Shot to Auto or On.

Top Shot lets you save alternative shots from a Motion Photo or video. The camera app captures many frames before and after you tap the shutter button and then recommends a better-quality photo than the one you clicked, such as one where everyone in the image is smiling and no one is blinking.
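The recommendation step boils down to scoring each captured frame and picking the best one. The sketch below is hypothetical: the article does not describe how Top Shot rates frames, so the quality signals (`sharpness`, `eyes_open`, `smiling`) and their weights are illustrative assumptions; in practice such signals would likely come from on-device machine learning models.

```python
from dataclasses import dataclass

@dataclass
class Frame:
    """A candidate frame with hypothetical per-frame quality signals."""
    timestamp_ms: int     # relative to the shutter press
    sharpness: float      # 0.0 (blurry) to 1.0 (sharp)
    eyes_open: float      # fraction of detected faces with open eyes
    smiling: float        # fraction of detected faces that are smiling

def score(frame):
    """Hypothetical weighted score; the weights are illustrative only."""
    return 0.4 * frame.sharpness + 0.4 * frame.eyes_open + 0.2 * frame.smiling

def recommend_top_shot(frames):
    """Return the highest-scoring frame from the burst around the shutter press."""
    return max(frames, key=score)

burst = [
    Frame(-300, sharpness=0.9, eyes_open=1.0, smiling=1.0),
    Frame(0, sharpness=0.95, eyes_open=0.5, smiling=1.0),   # tapped frame: a blink
    Frame(200, sharpness=0.6, eyes_open=1.0, smiling=0.5),
]
print(recommend_top_shot(burst).timestamp_ms)   # -300
```

Here the frame captured 300 ms before the tap wins, even though the tapped frame is slightly sharper, because nobody in it is blinking.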

Note that Top Shot is unavailable when you have enabled Flash, Night Sight, Selfie Illumination, or Social Media Depth features.

Frequent Faces

The Google Camera app saves data about the faces you photograph or record frequently if you turn on the Frequent Faces feature. The face data is saved on your Pixel smartphone and not sent to Google. When you turn off the feature, the data is deleted.

With Frequent Faces, the Google Camera app on Pixel 4 and later devices identifies and recommends better shots of faces you capture often. So you will get fewer blinking eyes and more smiling faces when using the Top Shot feature.

Frequent Faces also taps into Real Tone, offering better auto white balance for these recognized subjects.

Long Shot

Like other camera apps, Google Camera also lets you record videos in photo mode: just long-press the shutter button in photo mode to start recording.

Palm Timer

Google Camera includes three-second and 10-second timer settings. Activating either timer also activates Palm Timer. Once you have framed yourself in the photo, raise your palm toward the camera, and the timer will begin counting down.

Guided Frame

Guided Frame is an accessibility feature on the Google Camera app designed for blind and low-vision users. It uses Google’s TalkBack screen reader to audibly guide you through framing and taking a selfie. On the Pixel 8 series, the feature now works with both the front and rear cameras and recognizes more than just faces.

Panorama and Photo Sphere

The Google Camera app also includes Panorama and Photo Sphere modes. Panorama stitches multiple images into one long image. Photo Sphere stitches multiple images into an “image sphere” that shows everything around you. Unfortunately, the Pixel 8 series lacks the Photo Sphere option.

Dual Screen Preview

Dual Screen Preview uses the Google Pixel Fold’s outer display to show a preview of the camera viewfinder, while you take the photo using the controls and viewfinder on the inner display. This makes it easier for subjects to adjust their poses and framing to get the perfect shot.

Google Camera: Video features

The Google Camera app’s primary focus is on photos, and the extensive feature list and years of improvements attest to this attention. Video is also crucial to the Google Camera experience, but it doesn’t receive the same love. Still, Pixel phones with the Google Camera app can take excellent photos and videos.

The Google Camera app can record video at up to 4K 60fps across all lenses, though this capability varies depending on your Pixel phone. You can record with the H.264 (default) or H.265 codec. You can also choose to record in 10-bit HDR.

There are a few stabilization options available within Google Camera:

- Standard: Uses optical image stabilization (OIS, if present), electronic image stabilization (EIS), or both.

- Locked: Uses EIS on the telephoto lens or 2x zoom if 2x telephoto is not present.

- Active: Uses EIS on the wide-angle lens.

- Cinematic Pan: For dramatic and smooth panning shots.

There is also a dedicated Cinematic Blur video mode, which is like Portrait mode but for videos. Further, you also get the usual slow-motion and time-lapse video capabilities.

Google added Video Boost with Night Sight on the Pixel 8 Pro. When you use the new feature, you’ll still receive a high-quality video right away, but the video will also be uploaded to the cloud, where Google’s computational photography models are applied to the entire video to give it HDR Plus quality. This process also makes Night Sight Video possible for the first time on Pixel phones.

Google Camera: Availability

For devices other than Google Pixels, there are unofficial GCam ports. Third-party enthusiasts modify the Google Camera app and make it run on unsupported phones. They also tweak some of the myriad processing values to get subjectively different results that suit the hardware output from a certain class of phones.

While you can use GCam ports to get the Google Camera experience on your non-Pixel device, installing unknown APKs always carries risk, and we recommend against it. Be careful with what you install on your phone: don’t install random APKs found on the internet, and only install apps from official sources and developers you trust.

FAQs

Is the Google Camera app free?

Yes, the Google Camera app is free, as it comes pre-installed on Pixel smartphones.

Can I install the Google Camera app on any phone?

The official Google Camera app comes pre-installed on Pixel smartphones. If your phone does not have the app pre-installed, you cannot officially install the Google Camera app. Instead, you can install unofficial Google Camera ports at your own risk.