I asked Bard and ChatGPT to plan me a trip and one result really impressed me

Google recently gave its Bard chatbot a major makeover in the form of an upgrade to the new Gemini Pro large language model. According to Google, Gemini represents a significant step forward, and the current Gemini Pro variant is considerably more advanced than GPT-3.5. After spending the last few weeks regularly using ChatGPT and Bard side by side for the same requests, I’ve come away with a pretty clear picture of how the two LLMs differ.

While I’m still working on my full Bard vs ChatGPT comparison, there are a few areas where Bard shines brighter. It turns out trip planning is one of them.

My fictional trip to Portland, Oregon

So, I asked each LLM to help me plan a trip to Portland, Oregon in July. The reality is that not everyone understands the importance of clarity and specificity when it comes to using these models, so I decided to replicate this with vague details. At first, all I said was I wanted to go to Portland, Oregon, and the surrounding area in July.

After each model gave me suggestions, I started “realizing” I needed to give it more context, like the fact I have three kids and they would want to do some fun activities too. From there, I asked follow-up questions like what festivals were going on. As it told me more, I asked each model to incorporate these new activities into the schedule.

Keep in mind that while I tried to ask the same questions, often each LLM would react in a way that would require me to provide follow-up. While I used the same general process, there were a few differences in some of the prompts.

How many questions did it take to get to an answer that satisfied what I was looking for and was customized for my family? Seventeen for ChatGPT and eleven for Bard. Bard wasn’t just faster; I liked the results a bit more.

Of course, Bard wasn’t perfect. Let’s start with the negative: it kept cutting off partway through its answers. I asked it to plan the trip over eight days, and on the first attempt, it only filled out six days fully, and the seventh was barely started. Whenever I asked it to add new hotspots to the agenda and produce a new version, Bard would cut off again. I imagine I’m running up against a character limit. The good news is you can just ask it to fill out the rest in a follow-up response, so it’s not a big deal.

As for what I like about it? There were a few things.

Bard vs ChatGPT for trip advice: Bard does the better job

Aside from its speed in answering my question, Bard had a lot of positives going for it:

- I felt it did a better job of integrating my suggestions and gave a more human-curated experience than ChatGPT could provide without additional prompting.

- Bard more consistently “remembers” what you asked it before, while ChatGPT often needed me to re-add things I’d asked for in previous steps.

- Bard’s inclusion of thumbnails is a nice touch, as it helps you get a better idea of what it’s talking about.

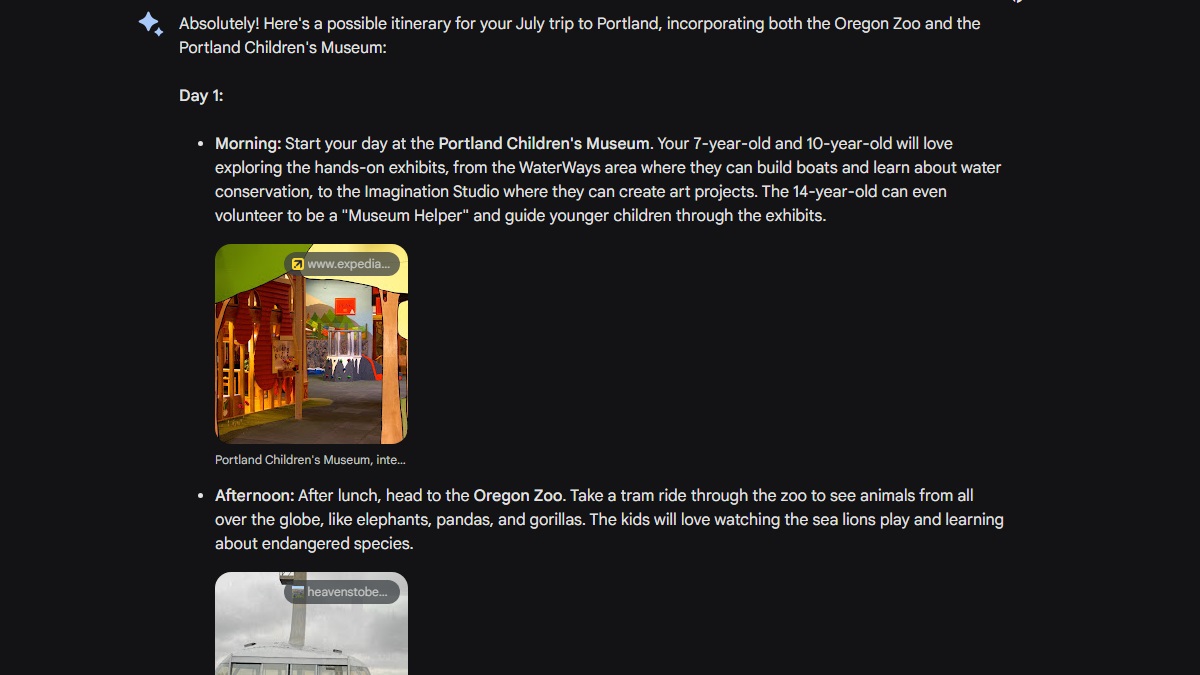

To better illustrate how each handles integrating my suggestions, I told both chatbots that my kids were 7, 10, and 14 and that I wanted to include activities that would work for all ages.

For its part, ChatGPT did include family-friendly activities, but I felt Bard did a much better job of spreading the load throughout the week. Things felt snug, but never too packed. But what really blew me away is Bard didn’t just consider my kids; it even specifically mentioned activities each age might like. You can see what I mean in the image directly below.

Let’s jump into the experience I had with ChatGPT and Bard, respectively, to better illustrate just how differently the two LLMs handle the task. Note: I won’t walk through every step, but I will give you the basic gist.

Using ChatGPT to make my travel itinerary

Let’s walk through the process with ChatGPT. For the first question, I asked it to “help me plan a trip in July to the Portland Oregon area. I want to focus both on things to do in the city and the surrounding area.” It responded with a list broken into morning, lunch, afternoon, dinner, and evening. There were basic descriptions included and a more guide-like style. The only problem? It only did 3 days of activities, despite claiming it was a trip for seven days.

I asked it to correct the problem. Then I asked it about kid activities, with the same prompt as I would use with Bard. It gave me suggestions, and then I asked it to include the Children’s Museum and Oregon Zoo in the schedule.

The result had a nice mix of activities, but it was extremely overpacked. I asked it to ease off and spread things over eight days instead.

Around that time, I decided to think about festivals. “Are there any festivals going on in July that would be worth going to?” It gave me some options, and I settled on the trip running from July 1st to July 8th. I asked it to add that all into the schedule, and it gave me a new schedule but this time left out descriptions entirely.

“Can you give me more information about the places on the agenda?” I asked. It responded with a list of info, and I had to tell it to integrate this into my schedule. From there, I asked it to make the experience more personal to see if it could provide the same style and level I experienced with Bard.

It gave me a pretty close alternative. Unfortunately, it also had me going to the zoo and museum on the same day. Before I could fix it, I noticed another error. Despite me telling it that I was going on the trip over the 4th of July week, it didn’t incorporate any local activities!

I asked it to address this, and it gave me a new version, but it listed day 4 (July 4th) and Independence Day as two separate entries with different activities. No way I’m going to some waterfall outside of Portland and making it back for festivities on the same day! So I fixed this next. It spat out a new schedule but removed the descriptions and went back to the vague format.

I reminded it that I wanted this with personalized descriptions, and finally, it gave me another version. I then remembered I needed to fix the zoo and museum day error. I brought it up, and it said it “fixed” it, but it changed literally nothing. I mentioned it once more, and it apologized. This time it removed the zoo completely from the schedule.

I asked it to add the zoo back in and make sure it was on a different day from the museum. It again gave me the same result with the zoo still missing. I asked a final time for it to add the zoo in on another day. Success!

As you can see, I was able to get ChatGPT to do what I wanted, but it required more work to get there.

Using Bard to make my travel itinerary

My experience with Bard started the same way as it did with ChatGPT. “Help me plan a trip in July to the Portland Oregon area. I want to focus both on things to do in the city and the surrounding area.” It immediately responded by telling me this is a great time of the year to visit. The weather is nice, there are summer events, and so on. This felt much warmer and more personal than what ChatGPT produces by default.

I looked through the list and saw there were no kid activities. Granted, I didn’t ask for any, but the reality is most genuine AI conversations start like this: Someone asks a vague question, gets a narrow response, and then asks something more detailed. I wanted to see how each LLM handled this process. So I told it about my kids and their ages. At first, it just gave me kids’ activities in the area, so I asked it to incorporate them into the schedule.

It did so beautifully. After looking through the schedule, I realized it was a bit packed and asked it to add more restful activities and breaks. It handled this task well with one exception: it cut off day 7. This likely had to do with the AI’s character limit and was easy to fix by just asking it for the rest.

I finally had a solid-looking schedule, but I realized I should think about doing this around the 4th and incorporate local events. I asked it what festivals were going on in July; it talked about Oregon’s 4th of July events, among others. I then told it to make the schedule run from July 1st to the 8th and add Independence Day festivities.

It missed filling out the last two days again. I asked it for more, and it gave me very few activities to choose from. I soon realized I’d exhausted most options that would be family-friendly for kids across our age spectrum. This helped me save a day, as I ended up shortening the trip to just 7 days.

Other than having to ask for the last day or so, Bard finally had the result I was looking for. From there, I performed a fact check and a few other things. Had this been a real vacation, I’d still need to do some manual work and planning, but this would have saved me a ton of time.

Was there anything ChatGPT did better?

Actually yes, ChatGPT has accuracy on its side. Because it didn’t go as specific in its suggestions, it was able to provide an experience that held up perfectly to my fact-checking. The places that it said to visit exist and were within a reasonable enough distance from Portland as well.

Google Bard tends to make things up more often in my interactions, and its trip planning wasn’t immune to this either. It told me to visit one of two places for coffee: Proud Coffee or Coffeehouse Northwest. From what I can gather, the first option was meant to be Proud Mary Cafe, while the other is permanently closed. That wasn’t the only instance. At one point it said Hawthorne Avenue, instead of Boulevard or District.

While this isn’t making stuff up, it also packed two outdoor events into the same day. I wouldn’t have minded this, but they are three hours apart, and yet I’m supposed to get from there back to the city in time for bed? The good news is that I could always manually adjust this schedule easily if this were a real vacation plan. For example, I cut back to seven days originally, so I could easily add back that day and turn the day I leave the city limits into a two-parter. I could rent a cabin or something in between the two days.

Which one should you use for trip planning?

Overall, I would probably use both AI tools on a real trip. They handle the process differently and yield different results, which I could then combine manually into the perfect schedule. I feel like running the same inquiries through both tools is more likely to provide the best result.

Of course, most of you aren’t going to do that. For those who just want a brief list of suggestions and prefer more manual interaction, ChatGPT can handle this well and without any glaring mistakes. For those who don’t mind fact-checking but want a more detail-oriented itinerary that feels more human-curated? Bard is great, just remember that you shouldn’t necessarily trust the suggestions it spits out.

Of course, I only did this fully with the one vacation in the example above. To make this a bit fairer, I quickly ran through a few other simple trips. In almost every instance, ChatGPT took a bit more manual interaction to get to where I wanted, and my general impressions still favored Bard.

Lastly, you might be thinking that I could have been more precise or clearer with my prompts and gotten much better results from ChatGPT. This is likely true, but also kind of the point. Most users don’t think through their prompts, so the advantage goes to the LLM that better understands these lower-quality prompts. Ultimately, Bard is the easier trip-planning tool for the average user, even if you can get pretty much the same results from ChatGPT with the right prompts.