
I spoke to Arm to find out why your Android phone needs all that AI power

Since the generative AI boom kicked off in 2023, skepticism has grown among tech enthusiasts: Do we actually need so much AI processing power on our Android phones?

It’s a fair question. After all, the most transformative AI tools, from ChatGPT to NotebookLM, run in the cloud. AI today splits into “visible AI” (the chatbots we communicate with) and “invisible AI” (the magical features many don’t realize use AI), and the visible tools most people associate with AI do their heavy lifting remotely. That perception has fed AI fatigue: many users see high-end NPUs as expensive overkill for features that require an internet connection anyway.

To understand why local AI matters, I sat down with Chris Bergey, the Executive Vice President of Arm’s Edge AI Business Unit. The company designs the Arm architecture used by billions of devices worldwide, placing it at the center of local AI processing. Here are a few takeaways from our conversation regarding the future of AI on Android, the importance of a hybrid AI approach, and what innovations to expect in 2026.

Arm’s work on AI didn’t start with ChatGPT

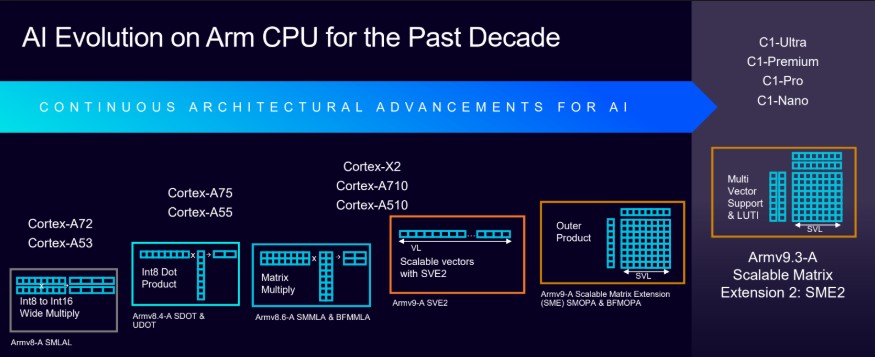

Bergey clarified that while AI exploded in popularity in 2023, Arm began a major architectural shift much earlier. The company started introducing AI-focused features into its architecture as early as 2017, and its latest CPU designs go further with matrix engines built to accelerate these workloads.

In recent years, Arm has championed heterogeneous computing: rather than offloading all AI processing to a dedicated Neural Processing Unit (NPU), the CPU, GPU, and NPU work in tandem. Bergey noted that modern photography illustrates this well: when you snap a photo on most smartphones, the CPU, GPU, and NPU are all involved in processing the image. The industry now aims to apply the same approach to LLMs and agentic AI.

The case for on-device AI

If the best AI models run in the cloud, why bother with on-device AI? The answer lies in latency and reliability. Bergey shared an anecdote about a conversation with a major handset manufacturer who insisted that for AI to become a primary user interface, it cannot fail when you hit a cellular dead spot on the highway. “All it takes is for a few times for that to happen and you go, ‘I can’t leverage this AI use case. I want to go back to my old way,'” Bergey told me.

Then there is the cost factor. Bergey highlighted concerns from game developers about AI-driven agents such as NPCs. Some developers hesitate to run them in the cloud, fearing a massive bill for token usage at the end of the month. They are only comfortable moving forward when costs stay low and predictable, which on-device processing makes possible because there is no per-token charge.
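To make the developers’ worry concrete, here is a back-of-the-envelope sketch of how a cloud token bill scales with a game’s player base. Every number in it is a hypothetical placeholder, not a real price or usage figure from Arm or any developer; the only point is that the bill grows linearly with usage, while on-device inference carries no per-token charge.

```python
def monthly_cloud_cost(players: int, sessions_per_player: int,
                       tokens_per_session: int,
                       usd_per_million_tokens: float) -> float:
    """Estimate a monthly cloud bill for AI-driven NPCs.

    All inputs are hypothetical; real pricing varies by provider and model.
    """
    total_tokens = players * sessions_per_player * tokens_per_session
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Hypothetical scenario: the same game before and after it goes viral.
small = monthly_cloud_cost(players=10_000, sessions_per_player=20,
                           tokens_per_session=5_000, usd_per_million_tokens=1.0)
viral = monthly_cloud_cost(players=1_000_000, sessions_per_player=20,
                           tokens_per_session=5_000, usd_per_million_tokens=1.0)
print(f"10k players: ${small:,.0f}/month")
print(f"1M players:  ${viral:,.0f}/month")
```

A 100x jump in players produces a 100x jump in the bill, which is exactly the unpredictability the developers described; running the model locally removes that variable cost.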

Neural graphics: The future of mobile gaming

An interesting divergence between desktop and mobile AI emerges in the realm of neural graphics. On a desktop PC with an NVIDIA graphics card, AI upscaling (like DLSS) is often used to push raw frame rates higher.

On mobile, the priority shifts. Bergey explained that Arm’s focus with neural graphics is leveraging AI to drastically reduce power consumption. By using techniques like super sampling and neural ray denoising, a mobile GPU can deliver a high-fidelity experience without draining the battery in an hour.
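A simplified way to see why upscaling can save power: if shading cost scales roughly with the number of pixels rendered, then rendering at a fraction s of the output resolution on each axis shades only about s² as many pixels, and the upscaler reconstructs the rest. This sketch ignores the upscaler’s own cost and non-pixel work such as geometry, and the 2400x1080 display is a hypothetical example, so treat it as an illustration rather than Arm’s power model.

```python
def shaded_pixel_fraction(out_w: int, out_h: int, render_scale: float) -> float:
    """Fraction of output pixels actually shaded when rendering at
    `render_scale` per axis and upscaling to out_w x out_h (simplified model)."""
    render_w = round(out_w * render_scale)
    render_h = round(out_h * render_scale)
    return (render_w * render_h) / (out_w * out_h)


# Hypothetical 2400x1080 phone display at a few internal render scales.
for scale in (1.0, 0.75, 0.5):
    frac = shaded_pixel_fraction(2400, 1080, scale)
    print(f"render scale {scale:.2f}: {frac:.0%} of pixels shaded")
```

Halving the resolution on each axis cuts the shaded pixels to a quarter, which is why a cheap, accurate upscaler can translate into meaningful battery savings on a phone.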

Looking ahead to 2026

As we approach 2026, Bergey predicts a move toward a hybrid AI model. While the cloud will handle training and massive models, “invisible AI” — the ambient computing that manages your calendar or anticipates your needs — must live on the edge to ensure speed and privacy.
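The hybrid split can be sketched as a simple routing rule: keep privacy-sensitive or latency-critical requests on the device, fall back to the local model when there is no connection, and send only the requests that genuinely need a large model to the cloud. The `Request` fields and the decision order are illustrative assumptions, not an Arm or Android API.

```python
from dataclasses import dataclass


@dataclass
class Request:
    needs_large_model: bool   # hypothetical: e.g. long-context reasoning beyond the local model
    privacy_sensitive: bool   # hypothetical: e.g. personal calendar or messages
    latency_critical: bool    # hypothetical: e.g. an ambient assistant reacting in real time


def route(req: Request, online: bool) -> str:
    """Return 'device' or 'cloud' for a request (hypothetical policy)."""
    if req.privacy_sensitive or req.latency_critical:
        return "device"
    if not online:
        # Cellular dead spot: degrade gracefully instead of failing outright.
        return "device"
    return "cloud" if req.needs_large_model else "device"


print(route(Request(needs_large_model=True, privacy_sensitive=False,
                    latency_critical=False), online=True))
```

Defaulting to the device whenever the cloud is not strictly needed reflects the reliability argument: the experience stays usable even when the connection is not.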

He also pointed to a renaissance in wearables. After many early smartwatches ended up in drawers, Bergey sees new form factors reviving the category, from “facial computing” (glasses) to rings and neural bands, though he admits that weight, thermal, and power constraints make these some of the hardest engineering problems to solve.

Our full conversation covers much more, including a deep dive into how Arm is simplifying development with Kleidi libraries, the future of “agentic” AI, and predictions for CES 2026.

You can watch the full video interview above or read the complete transcript below.

00:00 – Mishaal Rahman: So just to start, can you briefly introduce yourself and the overall mission of Arm’s Edge AI business unit? For our readers who only see Arm on their phone’s spec sheet, but they don’t really fully grasp the business model, where exactly does your team sit in the AI stack?

00:18 – Chris Bergey: Mishaal, thanks for having me. It’s great to be here. Wow, you gave me a very broad first question. So, I do run the Edge AI business unit. We recently consolidated. We had about four business units before, and now we’ve reconsolidated around Edge AI, Cloud AI, and physical AI. Obviously, a lot of what I think we’re going to talk about today is the impact of AI and how that’s changing the future of computing. Arm is present in most smartphones, and not just in a single processor: not only in the processors running the Android system or the iOS system, but also in the Wi-Fi controllers and many other aspects of computing. That’s just part of Arm’s broad, broad reach. I think to date there are over 400 billion Arm* processors that have been shipped through our various partners, and that goes across many different marketplaces from IoT to client computing, data centers, automotive, all those kinds of things.

*Editor’s note: Chris stated that there are over 400 billion Arm processors that have been shipped to date, but a spokesperson for Arm followed up to clarify that the company has actually shipped over 325 billion processors.

But what we focus on is, first off, the Arm architecture. Arm has existed for almost 35 years, or I guess we just hit our 35th anniversary. Much of that actually came from a low-power background. Apple, for example, was an early investor in Arm back 35 years ago, and the Apple Newton was a product that I got a chance to play with. Not the most successful product, but clearly a glimpse of what was to come. Products like the Apple Newton and the Nintendo DS were based on Arm. So we have this history of low power, and of course that’s come into play in many different markets from IoT to smartphones and computing. Now, with AI, the power consumption of computing becomes super important, so we’ve made significant strides in the data center as well as in other markets. So what I’m focused on is, one, the architecture. We have a whole set of companies that license the architecture from us, or that take our implementations as RTL (register-transfer level), which is used in designing chips. They combine that RTL with their system IP and their special sauce and create chips that get built into so many consumer electronics devices today.

03:08 – Mishaal Rahman: Awesome. You guys, Arm, you’ve been doing your thing for quite a while now. With this recent boom in AI, people kind of think of it as a recent, a new innovation. But actually, AI has been a thing that’s been around for quite a while as well. It didn’t start with 2023 and ChatGPT. It’s been around for a long time. And I think you’ve talked about this in previous interviews. There has been AI before the LLM, before the ChatGPT, before Gemini, before all that. I want to ask you, what impact has Arm had on the pre-LLM era of AI? How have Arm chips been enabling AI before the AI boom as we came to know it today?

03:56 – Chris Bergey: That’s a great question. You’re right. AI, and a lot of complex math that we were doing before that wasn’t branded AI, was clearly doing a lot of things in multimedia and the like. But I think there are two key areas. One is the architecture itself. As I mentioned, we have the Arm architecture. We believe it’s the most advanced computing architecture out there. Thanks to our partnerships, we’ve been very aggressive in driving future innovation around security as well as around complex math and a lot of the AI acceleration. That goes from the way we’re doing vector acceleration to some of our latest announced products, which now have matrix engines in them: the CPU cluster, made up of several different CPUs, is able to leverage the same matrix engine in the cluster to help improve the performance of AI workloads.

What’s unique about that is CPUs are often thought of as general purpose and they are. But they’re also quite easy to program. And so that’s a programming model that a lot of people understand and a lot of people can leverage and is very consistent because of the footprint of Arm computing. So the fact we were able to do this matrix acceleration in the Arm architecture and a programming model that is the standard as you would program any kind of other application, that has been quite unique and quite powerful for us.

Of course, there are still other accelerators often in these systems, whether that be a GPU or a neural processing unit. Arm has actually come up with several different NPUs that we provide in the super-low-power area. One I like to point to, for your listeners who are familiar with the new Meta glasses that have a display, is the neural wristband that is starting to do neural sensing at your wrist. That is actually powered by one of Arm’s latest neural processors as well. So we’ve been in this for a while, as you said, way before 2023, and I guess we really started introducing some of these features back in 2017. So it’s been almost a decade.

06:30 – Mishaal Rahman: Wow. And with that early head start on preparing and optimizing for early AI machine learning workloads, what are some of the biggest benefits that you’ve seen from those early optimizations in terms of how you’re preparing Arm chips to run some of the new massively increased demanding workloads we’re seeing from the new LLM era of AI?

06:56 – Chris Bergey: Well, I think that one thing that Arm has done is really set a standard around the way that many chips are architected. And that goes beyond the CPU. Many of Arm’s protocols around how do you build extensions to various multimedia items or to memory systems and all those kinds of things is something that we’ve been working with our partners on in refining and creating a very rich ecosystem of those things. That’s one of the key building blocks right now as you get to AI because obviously the matrix computing and some of that can be super important. We’ve also, I think many of your listeners are aware of the importance of memory in AI and just the size of the model as well as the bandwidth associated with the model. And so the ability for our partners to be able to create these different architectures that can scale, because as I mentioned earlier on, we’ve got solutions that are scaling all the way down to well less than a dollar to super high computing price points. So I think giving the ecosystem those building blocks, that’s one.

The second was just learning from the workloads. Users and partners come to us with workloads and say, “Hey, we need tools to do this, and can you accelerate that?” And that’s really been a lot of the evolution of the architecture. If you look at areas like our vector extensions or our matrix engines, those have been inspired by a lot of our partners’ feedback and what software developers have told us is important. We’re quite proud that right now we have a software developer ecosystem of more than 20 million developers, which is probably the largest out there. And so we get a lot of really good feedback on workloads that people are playing with for the future.

08:57 – Mishaal Rahman: Awesome. I did want to ask you about the developer ecosystem and kind of the optimizations you’re doing to improve the way developers work with AI, but I did want to touch back on first the future, the transition we’re seeing in the current era of AI. So right now, since 2023, a lot of the focus has been on passive interactions with AI chatbots like ChatGPT and things like that and kind of workloads that are very transient. Things like they happen, you tell an AI to do something, it does something, and then it executes that task. And then you don’t really interact with it until the next time you ask it to do something.

Versus now we’re starting to see a lot more AI that is running all the time in the background. It’s like processing all this stuff as you’re doing it and then executing tasks in the background. From a silicon perspective, how has the architectural demand of an always-on AI been different? Like what kind of optimizations are you making to ensure that these tasks can be run efficiently in terms of both battery usage and memory usage so that you can have an always-on AI in the background processing whatever you want without completely destroying the longevity of a mobile device?

10:05 – Chris Bergey: That’s a great question and it continues to be an evolution. One thing that Arm has been quite aggressive on for over a decade now is what we call heterogeneous computing. Heterogeneous computing means that you may not have a single monolithic computing engine. One of Arm’s biggest innovations, which we brought to market over a decade ago, was this idea of big.LITTLE. You had big cores and little cores that used the same instruction set, so software could literally swap from one core to the other: take advantage of performance when you needed it, at higher power consumption, but also drop to lower power consumption when that was all that was required, because something was running as a task in the background and stuff like that. Now we obviously know that most smartphones ship with at least six to 12 computing cores just for handling the OS, etc. So that concept has grown, and it continues to be fundamental.

We’ve now started to see where people want to start leveraging GPU or they want to start leveraging NPU. As I mentioned, when you take a picture today in most smartphones, actually the CPU, the GPU, and the NPU are often involved with many of the computational elements that the picture taking is obviously advanced so much and is such a competitive area right now where so many phone manufacturers take great pride in the type of photos. And so that’s actually an example of where you’re using all these different computation elements but people are not necessarily aware of where that computation is happening.

And then you brought up the good point of ambient computing or ambient AI or what have you: the idea of it running in the background and just doing smart things, advising you and helping you make smarter decisions. As we get to agentic AI and those kinds of things, you’re going to see even more of that. And it is hard for software developers to do that. That is where we’ve spent a lot of time providing tools and a lot of the abstractions, as well as working very closely with many of the OS vendors, whether that be Google or Microsoft or general Linux distributions. Because if we can abstract that away from users and get it right between Arm and these OS vendors, then a broader audience doesn’t have to worry about the system doing the right thing at the right time.

13:02 – Mishaal Rahman: Yeah, I did want to follow up on the developer aspect. As you mentioned, there are tools for developers to build, to optimize their machine learning AI workloads for the particular hardware that they’re running their application on. But there are dozens of tools, dozens of libraries, dozens of frameworks, so many different variations of hardware that they have to target and support. It kind of feels like it’s a bit of a mess and really difficult for a developer to wrap their heads around on how exactly do they reach the broadest number of devices and actually get their application, their task working optimally on as many devices as possible. What is Arm doing to make that easier for developers?

13:44 – Chris Bergey: It’s a great question. You can think about it this way: OS applications have a certain level of maturity today, and AI definitely has a different level of maturity. We work very closely with the OS distributions I mentioned to get as much of that integrated into the higher-level stacks as possible. A good example is SME2, which I mentioned earlier, our new matrix engine. What we do is create Kleidi libraries. Kleidi libraries basically allow developers to target the Kleidi library, and the Kleidi library will understand, “Oh, this piece of hardware I’m running on today is SME2-enabled, so I can use that matrix engine,” or, “Maybe it only has SVE2, which is a vector extension, so we’re going to use that.” So we try to abstract that as much as possible. And then we work with many of the vendors that abstract those things further, for example with Google and XNNPACK, if you’re familiar with that, which is [a library of optimized neural network inference operators]. And so now developers can work at higher levels. I think one of the biggest challenges right now around AI is that some of this hardware is outside of the CPU, and the CPU is easier to program and more general purpose.

NPU is much more kind of focused on AI-only workloads but then the programming model can be quite unique and the capabilities and dependencies between operators and actually how the model works and all those kinds of things. And then GPUs are kind of somewhere in between. And so that is an area that the industry is really working towards different models, I guess how to solve that. And if you use like the Android example, obviously AICore is a core deliverable that Google delivers that basically provides a lot of the Gemini services. So that allows developers to work at a much higher level and leverage those services. And that gets built on top of core Google services which gets built on core Arm services which goes all the way down to the hardware. So much of, I think for many developers that is the level at which they want to abstract.

But you’re right, as you get deeper and deeper, or if you say, “Hey, I don’t want to use Google’s large language model, I want to run my own,” you do need a different level of capabilities. We try to do as much as we can in documenting that and providing developers help at developer.arm.com. Those are the kinds of resources you can go to, and obviously Google and many others provide a lot of developer outreach as well.
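Bergey’s description of Kleidi picking the SME2 matrix engine when it is available and otherwise falling back to SVE2 follows a common runtime-dispatch pattern. The sketch below illustrates that pattern only; it is not KleidiAI’s actual API. It reads the feature flags the Linux kernel reports for arm64 CPUs in /proc/cpuinfo (such as `sve2`, `sme2`, and `asimd` for NEON) and picks the most capable kernel. The kernel names are hypothetical placeholders, and native code would more typically query the kernel’s hardware capability bits via `getauxval()`.

```python
def cpu_features(cpuinfo_text: str) -> set[str]:
    """Collect flags from the 'Features' lines of arm64 Linux /proc/cpuinfo."""
    features: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "Features":
            features.update(value.split())
    return features


# Ordered from most to least capable; kernel names are hypothetical placeholders.
KERNELS = [
    ("sme2", "matmul_sme2"),   # matrix engine
    ("sve2", "matmul_sve2"),   # scalable vector extension
    ("asimd", "matmul_neon"),  # baseline Advanced SIMD
]


def select_kernel(features: set[str]) -> str:
    """Pick the first kernel whose required feature flag is present."""
    for flag, kernel in KERNELS:
        if flag in features:
            return kernel
    return "matmul_scalar"  # portable fallback for any CPU


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            text = f.read()
    except OSError:
        text = ""  # not Linux, or cpuinfo unavailable
    print(select_kernel(cpu_features(text)))
```

The application calls one entry point and the library resolves the best path once at startup, which is the kind of abstraction that lets developers ignore which extensions a given phone supports.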

16:47 – Mishaal Rahman: Awesome. So what would you say to developers who are skeptical of the whole idea of on-device AI? I know there are some people in the AI space who feel that there’s not really much benefit to having on-device AI, because most of the key features, the features that people actually interact with right now, are all being run in the cloud. What are some features that you think can only realistically be done on the edge?

17:17 – Chris Bergey: Yeah, this is a great question and it’s a fun one and something that will continue to get discussed I’m sure for years to come. A couple insights there. We all understand that interacting with AI is quite powerful and that’s what everyone kind of went through many of those eye opening moments when they first played with Gemini or first played with OpenAI chatbot and those kind of things. But I also would say it doesn’t necessarily feel like you’re necessarily interacting with a human, right? The chat experience, stuff like that, there’s still latencies and those kinds of things. It’s one thing when you’re sitting in front of a desk and have a super high speed link and are able to do those things. But I also, as these services become more and more important, the actual sensitivity to that latency becomes more.

So in speaking to one of the largest handset makers, I asked that question: “Hey, why can’t we just do it in the cloud?” And they said, “Look, we want that experience to be so good that it needs to be good all the time.” When you’re driving down the highway and you hit a cellular dead spot, and now that service is janky or you’re frustrated, all it takes is for a few times for that to happen and you go, “I can’t leverage this AI use case. I want to go back to my old way” and those kinds of things. So there is a lot of sensitivity to that, and I can tell you that we work so hard with so many of the phone manufacturers to make sure you don’t have jankiness and that scrolling is smooth and all those kinds of things. Well, imagine if AI becomes so important, because it has changed the way we interact with these devices, that you have that same sensitivity to latencies, which may or may not be due to the handset manufacturer. So I think that clearly is one.

Two, another big one we hear about is cost. We are in a situation where the cost per token is coming down significantly. But it’s very interesting, the work we’ve been doing with the gaming community, where there is an opportunity in many of these gaming engines to start putting more AI agents in the game. So the adversary you’re playing against, or whatever, becomes more and more AI-generated, and that can be token-driven and all those kinds of things. We were talking to developers about, “Well, why are you not enabling that?” And they said, “Because I am worried that at the end of the month I’m going to get this bill for all of the tokens my game utilized, and I’m only going to be comfortable when I know that I can do that on device.” So I think the answer is always going to be hybrid, right?

Just to be clear, I think the cloud is going to play a huge role in training, but as expectations on experience rise, it’s just going to need that local acceleration. Another example I like to use: if you give a child, say a 10-year-old today, a piece of electronics, the first thing they try to do is touch the screen, right? They’re like, “Well, wait, I want to interact with this,” because they don’t know anything that wasn’t a touch screen. Unfortunately for people like you and me, touch screens were a very unique thing that only came into broad usage about 20 years ago. But I think that UI experience, or that AI experience, where you’re literally just having a conversation like you and I are, is just going to be an expectation. So the question is going to be, okay, how much of that do you do locally? And we’re not even getting into privacy and all those kinds of things. So I think there’s going to be a lot of on-device AI. I think the cloud is still going to play a huge part in that. And as the models get more capable and shrink, we’re going to find some happy medium, and it’ll continue to get pushed on both sides as things evolve.

21:55 – Mishaal Rahman: As I mentioned before, one of the reasons people are so skeptical of on-device AI right now is that most of the transformative experiences people associate with the new era of AI are happening in the cloud. But as you mentioned, a lot of improvements are being made in shrinking these models down and running them on device, and every year we’re seeing architectural improvements in the performance of AI models on device. With the latest Arm C1-Ultra, for example, I think Arm announced a 5X performance uplift and 3X improved efficiency in AI workloads.

How long do you think it will take to kind of have some of these really incredible transformative experiences we’re seeing today that are being run on the cloud? How long do you think it’ll take for those kinds of experiences to translate and actually be running locally on our mobile devices? Like three, five years out from now? Two years? Like how long do you think it’ll take?

22:55 – Chris Bergey: I think generally model migration at this point in time is less than two years. There are some memory limitations there, just to be clear, and I may even be undercalling it, right? But if you think about some of the most powerful stuff being done in the cloud, that’s been shrinking at such a rate that we’re seeing that kind of transition, that kind of change. But again, there are some exciting use cases happening, yet I think we’re still early on some of the more interactive ones. A lot of what’s happening now is knowledge distillation and those kinds of things, which benefit from larger and larger data sets.

When you’re talking about the way you interact with things and getting rid of, imagine shipping a phone without a UI or without a touch screen or something like that. I mean when you get to that kind of a product, the use model and all those things just kind of totally changes. And so again, I think we’ve not gotten to that phase. And thanks for calling out our latest Lumex platform and yes, we’re very proud of the computing power that we’re putting out there and I think there’s some awesome products that are being launched late this year and early next year based on that. And so we’ll keep pushing those computing capabilities.

24:37 – Mishaal Rahman: Yeah, good to hear. One follow up question I had is, most of these AI improvements, we’re kind of seeing it on the flagship level on mobile devices. So devices with the highest end CPUs based on the latest Arm designs. And we’re also seeing as you mentioned earlier in this interview that we are seeing a new category of devices. We are seeing glasses where they have Arm chips, they are capable of processing audio and potentially through the camera what you’re seeing. But they definitely cannot have the same level of performance in AI processing as your mobile smartphone. You just can’t strap that amount of computing power onto a device the size of your glasses and expect it to be actually kind of wieldy. It’s going to be too heavy, too bulky, maybe require a huge battery pack, you know, it’s just not really feasible right now.

So I wanted to ask you, what is Arm doing to kind of optimize these workloads to get us to a point where maybe we can run all of this on device and have everything be completely standalone on glasses and not just have it be completely dependent on a standalone compute puck or your smartphone?

25:52 – Chris Bergey: The good news, bad news is that we’re going to keep bringing more computing, but I think the use cases will continue to consume all that we can provide and people are going to want more. So glasses are an amazing platform and I think we’re really seeing incredible amount of interest. As you mentioned, Meta has done an amazing job and I’m a very active user of Meta glasses and I think they’ve done an amazing job. They’ve really invested and now you’re starting to see a wider ecosystem. It is just such a hard problem. I got to tell you that the amount of weight and power that you can put in there and the sensitivity to temperature; facial computing or things that are on your face, it is a very difficult problem. And then you have all the fashion elements of it or whatever. But I think we are delivering today; those are Arm-CPU based and will continue to be.

And we’re continuing to evolve. How can we get lower and lower power, or get the same amount of power but double the computing, or whatever those kinds of elements? So we definitely have a lot of work going on there. But again, I think it’s a good example for the question, “Is the answer device or cloud?” Because you probably are going to have some type of hybrid step in between, where you’re able to do some additional offload of processing but you’re not going all the way to the cloud. In those kinds of devices today it’s mostly image capture and those kinds of things, and there are some good audio transactions. When you get into video and things like this, you get into a lot more split rendering, and there are a lot of technologies that start coming into play. But yeah, glasses are a super cool place of innovation, and we’re super excited about what the partners are turning out in that area.

The other thing that’s interesting to me around the XR platform, or AR platform, whatever you want to call it, is how it’s brought back wearables. You know, I think it was in 2014 that Apple launched the [Apple Watch], and I wear an electronic watch here, but they were hot, and while people still use them, I think many of them found their way into drawers and didn’t come back out. Now we’re starting to see again, with these new form factors, how people want to use wearables to interact, right? Whether it’s the ring, whether it’s the neural band I mentioned, and just this idea of different gestures and how that’s going to change computing.

So as much as there’s the question of how much performance you can give me, there’s this whole new kind of on-body sensor computing, and that’s become quite interesting again. It’s kind of a renaissance of figuring out how to do more wearables, because their capabilities can be more impactful now.

29:11 – Mishaal Rahman: Yeah, I feel like we’re kind of also in the renaissance of AI. AI is being put everywhere in every single product. You open up a new Google app, there’s probably like three different buttons to access Gemini in there. It’s everywhere now.

29:27 – Chris Bergey: But it is a computing limited app, right? So I mean that’s where you know that we’re still not able to give as much memory, memory bandwidth, computing power versus what the experience you’d like to deliver. And so that is why I think we’re still in the early days of what we’re going to expect from these devices in the future.

29:54 – Mishaal Rahman: Well, the people who are already getting AI fatigue will not be happy to hear that, because a lot of people feel like we already have too much AI and that it’s being shoehorned into products that don’t need it. Do you think this kind of skepticism is warranted towards Edge AI? What would you say to people who feel that there’s too much AI going on? Do you think AI at the edge will help alleviate their concerns? Is it a better approach for AI?

30:23 – Chris Bergey: I mean, I think that unfortunately this is a natural cycle and maybe us technologists are part of the problem. But you know, probably people would have made similar comments about how stupid internet commerce was, you know, 15 years ago, right? I mean some of those early companies like WebTV and Webvan and all these companies failed, right? And the concept was right, the timing was wrong or whatever. And so I just think AI is going to be like that. And I think you do have those moments where the technology becomes personal to you, right? Something it does something that you go, “Wow, that would have taken me a long time to do or I would have not been able to do that before.” And I think there’s a lot of very interesting tools out there.

NotebookLM is one that I like a lot just on kind of like trying to consume data and figure out how do I want to consume that data and how can I do it, how can I take this super technical thing and absorb it in a way that’s not going to put me to sleep after 15 minutes. And some of those activities I just think they are really really cool.

We’ve started to see people use AI to help others see when they couldn’t see before or hear when they couldn’t hear before, augmenting hearing aids and all these kinds of things. Those things are transformative. One of the ones that I get excited about is imagining being able to put something like Gemini in $100 phones in villages where the educational options for people are limited, right? That changes the trajectory of civilization and impacts people in huge ways.

So yes, we’re going to come up with some dumb ways to use AI that you’re not going to love, but I think that on the whole it’s going to really change things, and when we look back on it, doubting it will sound as silly as saying, “Wow, I really don’t think internet commerce is going to be a thing.”

33:00 – Mishaal Rahman: Yeah, the point I was trying to get at is that we have these two tiers, these two categories of AI. We have the visible AI, the chatbots, the things where, when you’re interacting with them, you know it’s AI. That’s what people think of when they think of AI: the chatbots. Then you have the invisible AI: all those features that use a lot of the same AI technologies, the same principles, the same accelerators and so on, but run invisibly to the user. They’re just doing things for you. And yeah, a lot of that stuff is happening on the edge, and it’s really important. But people tend to associate AI with the chatbot that occasionally hallucinates and gives you incorrect information.

33:36 – Chris Bergey: Yeah, but it’s a natural evolution, right? Just to take your chatbot example a little further: when those first chatbots became available for technical support, the experience was difficult, right? It was like the old telephone menus where you’d be hitting zero all the time and it would keep asking you questions, and you’d think, “No, I just need to talk to somebody.” But now I would say that I actually prefer the chatbot experience for resolving my issue over waiting on hold to talk to somebody, right? And I think that’s just one example of how, when a technology is new, it’s a struggle and it has a lot of weaknesses. But in the end it becomes more seamless, more intelligent, and more fulfilling, whether in customer satisfaction, cost, or capabilities.

And like you said, I really agree with you. There’s the visible AI, which is like, “Hey Google, tell me this.” And then there’s the “Wow, that just showed up in my calendar and it was correct,” or “How did my phone remember that I needed to do X, Y, or Z?” And you won’t remember those things.

34:55 – Mishaal Rahman: Very cool. I want to pivot ahead to next year, to the stuff we might be seeing next year. When we were talking about gaming earlier, you mentioned that one of the use cases we’re seeing for AI in gaming is AI agents, maybe interactive NPCs powered by AI. But another thing I remember Arm announcing earlier this year was neural graphics coming to mobile, with neural accelerators coming to Arm GPUs. Can you talk a bit more about that and how big of a shift it’ll be for mobile gaming in the future?

35:31 – Chris Bergey: So, yes, we’re really excited about neural graphics. AI has been used in graphics quite a bit, right? I remember sitting at CES last year, and Jensen in his keynote was showing off their latest graphics card and how it was ray tracing. I think it was 30 million ray traces a second or whatever it was. It was a ridiculous number. And he basically said, “Computer scientists would have said that was impossible just two years ago.” And he said, “It’s possible today, but the reason it’s possible is that only about 2 million of those pixels are ray traced and the others are AI interpolated,” right? That’s how you can create the most amazing scene, and I would say it’s a great application of that.

And so in mobile, we’re not necessarily going for that end of the spectrum from a performance point of view, but power is becoming super important. What we see you can do with neural graphics is create an experience, whether it’s watching a video, a gaming experience, or whatever, where using AI drastically reduces the amount of power the GPU consumes. It uses all kinds of techniques, like super sampling, frame insertion, and neural ray denoising, that really allow you to create a great experience. That’s going to be a huge area where you’re going to see a lot of innovation coming from Arm, and I think across the industry, as you think about what these devices are going to be capable of in the future. So we’re super excited about it. We’re working closely with Khronos, a standards body that drives a lot of this, and we think it’s the future.

37:38 – Mishaal Rahman: Yeah. I mean, it’s already widely used in desktop and PC gaming, you know, with AI features to upscale and interpolate frames. The impact there is better FPS, better frame rates for gamers. Reduced power consumption is not really as important in desktop gaming, but I can see it having a huge impact on mobile gaming. So yeah, I’m excited to see that happen.

38:00 – Chris Bergey: Yeah, imagine that you can play a game at whatever your chosen resolution is for 50% longer, or whatever those kinds of metrics are. You’re right. And again, I think this is typical: we have these technologies that can be applied in different ways and have a very different value proposition in each. And so that’s going to be the future of mobile gaming.

38:22 – Mishaal Rahman: And speaking of the future, you mentioned earlier that you were at CES earlier this year, I guess?

38:28 – Chris Bergey: January 2025. And I guess in a few weeks again…

38:33 – Mishaal Rahman: …in a few weeks we’re going to be back at CES in Vegas. Are you going to be at CES?

38:37 – Chris Bergey: I will be there. I will be there.

38:39 – Mishaal Rahman: So if you had to make one prediction about the key AI-related headline we’ll see at CES, like the key AI trends, new industries, or new AI-related features, something that would surprise people today, what would it be?

38:56 – Chris Bergey: Whoo, there are a lot of things, but I get excited about some of the biometric stuff that’s coming, as I hinted at. I think there are ways we can put sensors on bodies and do things that really enhance people’s lives. What personally gets me excited is that we’re allowing people to do things that many people take for granted but others can’t. If we can enable some of that, I think it’s just super exciting. I also love the way that AI can help educate the next generation in a positive way. We need to make sure we have all the safety controls and all those kinds of things, but I just love the way that AI and technology can help democratize the world.

39:53 – Mishaal Rahman: Awesome. Well, I’m looking forward to seeing all the new AI innovations coming out at CES. I’m sure there will be a ton, and the people who are tired of hearing about AI will continue to be tired of it, because it is the future. It’s going to be everywhere. And with CES being at the forefront of all things tech, AI is going to be there. So be prepared, strap in, there’s going to be a ton to talk about in a couple of weeks.

Thank you so much, Chris, for joining me on this little interview to talk about AI and Arm’s place in the AI race. Everyone, this is Chris Bergey, Executive Vice President of Arm’s Edge AI Business Unit. Thank you again for taking the time to do this interview with me on the Android Authority channel. If you want to catch the show notes and the full transcript, I’ll have an article on the website that you can read at your own pace. And Chris, where would you like to point people? Is there a particular website or social media channel you want to direct them to?

40:56 – Chris Bergey: As I mentioned, there’s all kinds of good stuff on developer.arm.com about developing with Arm technologies, and come on by our website, arm.com, and check us out. I really appreciate all the work that Android Authority is doing, and Mishaal, I think you’ve been a great addition to the team over the last year, or I guess it’s almost been a year.

41:23 – Mishaal Rahman: Yeah. Thank you. It’s been a huge honor to work on the Android Authority team, mostly covering Android. I do talk about chipsets from time to time, and it was great having this opportunity to talk about AI with you. So thank you.

41:40 – Chris Bergey: Thanks Mishaal. Happy 2026.

41:43 – Mishaal Rahman: Happy 2026.
