Affiliate links on Android Authority may earn us a commission.

How to use gestures and gesture-based navigation in your Android app

Out-of-the-box, Android’s standard UI components support a range of Android gestures, but occasionally your app may need to support more than just onClick!

In this Android gesture tutorial, we’ll be covering everything you need to implement a range of Android gestures. We’ll be creating a range of simple applications that provide an insight into the core concepts of touch gestures, including how Android records the “lifecycle” of a gesture, and how it tracks the movement of individual fingers in a multi-touch interaction.


To help demonstrate how this information might translate into real-world projects, we’ll also be creating an application that allows the user to zoom in and out of an image using the pinch gesture. Finally, since Android 10 overhauls Android’s gesture support, we’ll be looking at how you can update your applications to support Android’s new gesture-based navigation, including how to ensure your app’s own gestures don’t conflict with Android 10’s system-wide gestures.


What are touch gestures?

Touch gestures allow users to interact with your app using touch.

Android supports a range of touch gestures, including tap, double tap, pinch, swipe, scroll, long press, drag and fling. Although drag and fling are similar, drag is the type of scrolling that occurs when a user drags their finger across the touchscreen, while a fling gesture occurs when the user drags and then lifts their finger quickly.

Android gestures can be divided into the following categories:

- Navigation gestures. These allow the user to move around your application, and can be used to supplement other input methods, such as navigation drawers and menus.

- Action gestures. As the name suggests, action gestures allow the user to complete an action.

- Transform gestures. These allow the user to change an element’s size, position and rotation, for example pinching to zoom into an image or map.

In Android, the individual fingers or other objects that perform a touch gesture are referred to as pointers.

MotionEvents: Understanding the gesture lifecycle

A touch event starts when the user places one or more pointers on the device’s touchscreen, and ends when they remove these pointer(s) from the screen. Every Android gesture is built from this sequence.

While one or more pointers are in contact with the screen, MotionEvent objects gather information about the touch event. This information includes the touch event’s movement, in terms of X and Y coordinates, and the pressure and size of the contact area.

A MotionEvent also describes the touch event’s state, via an action code. Android supports a long list of action codes, but some of the core action codes include:

- ACTION_DOWN. A touch event has started. This is the location where the pointer first makes contact with the screen.

- ACTION_MOVE. A change has occurred during the touch event (between ACTION_DOWN and ACTION_UP). An ACTION_MOVE contains the pointer’s most recent X and Y coordinates, along with any intermediate points since the last DOWN or MOVE event.

- ACTION_UP. The touch event has finished. This contains the final release location. Assuming the gesture isn’t cancelled, all touch events conclude with ACTION_UP.

- ACTION_CANCEL. The gesture was cancelled, and the View won’t receive any further MotionEvents for it. You should treat an ACTION_CANCEL like an ACTION_UP event (end the gesture and reset any state you’re tracking), but without performing the action that a completed gesture would normally trigger.

The MotionEvent objects transmit the action code and axis values to the onTouchEvent() callback method of the View that received this touch event. You can use this information to interpret the pattern of the touch gesture, and react accordingly. Note that each MotionEvent object will contain information about all the pointers that are currently active, even if those pointers haven’t moved since the previous MotionEvent was delivered.

While Android does try to deliver a consistent stream of MotionEvents, it’s possible for an event to be dropped or modified before it’s delivered successfully. To provide a good user experience, your app should be able to handle inconsistent MotionEvents, for example if it receives an ACTION_DOWN event without receiving an ACTION_UP for the “previous” gesture. This is an important consideration for our Android gesture tutorial.
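To make the ACTION_CANCEL advice and the “missing ACTION_UP” scenario more concrete, here’s a minimal sketch in plain Java that models a single-finger tap, so the logic can be unit-tested without a device. The TapGesture class and its methods are hypothetical, and its int constants are assumed to mirror the values of MotionEvent.ACTION_DOWN (0), ACTION_UP (1), ACTION_MOVE (2) and ACTION_CANCEL (3). In a real app, you’d feed it the result of event.getActionMasked() from onTouchEvent().

```java
/**
 * Hypothetical plain-Java model of one single-pointer tap gesture.
 * The constants are assumed to mirror MotionEvent's action codes.
 */
final class TapGesture {
    static final int ACTION_DOWN = 0;
    static final int ACTION_UP = 1;
    static final int ACTION_MOVE = 2;
    static final int ACTION_CANCEL = 3;

    private boolean inProgress = false;
    private int tapsPerformed = 0;

    /** Feeds one action code into the model; returns true if the event was consumed. */
    boolean onAction(int action) {
        switch (action) {
            case ACTION_DOWN:
                // A DOWN always starts a fresh gesture, even if the previous
                // gesture never received its UP (an inconsistent event stream)
                inProgress = true;
                return true;
            case ACTION_MOVE:
                // Only interested in moves that belong to a gesture we saw start
                return inProgress;
            case ACTION_UP:
                if (inProgress) {
                    // Only a gesture that completes normally performs the tap action
                    tapsPerformed++;
                }
                inProgress = false;
                return true;
            case ACTION_CANCEL:
                // Reset state as if the gesture ended, but don't perform the action
                inProgress = false;
                return true;
            default:
                return false;
        }
    }

    int tapsPerformed() {
        return tapsPerformed;
    }

    boolean inProgress() {
        return inProgress;
    }
}
```

Note how a fresh ACTION_DOWN simply restarts the gesture, rather than assuming the previous gesture ended cleanly.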

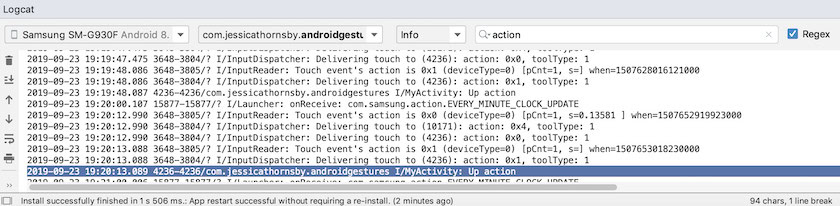

To help illustrate the “lifecycle” of a touch gesture, let’s create an application that retrieves the action code for each MotionEvent object and then prints this information to Android Studio’s Logcat.

In the following code, we’re intercepting each touch event by overriding the onTouchEvent() method, printing a message to Logcat for each action code we’re interested in, and then returning one of the following values:

- True. Our application has handled (consumed) this touch event.

- False. Our application hasn’t handled this touch event, so it will continue to be passed through the stack until another onTouchEvent() returns true.

The onTouchEvent() method will be triggered every time a pointer’s position, pressure or contact area changes.

In the following code, I’m also using getActionMasked() to retrieve the action being performed:

```java
import androidx.appcompat.app.AppCompatActivity;

import android.os.Bundle;
import android.util.Log;
import android.view.MotionEvent;

public class MainActivity extends AppCompatActivity {

    private static final String TAG = "MyActivity";

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
    }

    @Override
    public boolean onTouchEvent(MotionEvent event) {
        // getActionMasked() strips the pointer index bits, leaving just the action code
        int myAction = event.getActionMasked();

        switch (myAction) {
            case MotionEvent.ACTION_UP:
                Log.d(TAG, "Up action");
                return true;
            case MotionEvent.ACTION_DOWN:
                Log.d(TAG, "Down action");
                return true;
            case MotionEvent.ACTION_MOVE:
                Log.d(TAG, "Move action");
                return true;
            case MotionEvent.ACTION_CANCEL:
                Log.d(TAG, "Cancel action");
                return true;
            default:
                // Let the superclass handle any action codes we're not interested in
                return super.onTouchEvent(event);
        }
    }
}
```

Install this application on your physical Android smartphone or tablet, and experiment by performing various touch gestures. Android Studio should print different messages to Logcat, based on where you are in the touch gesture’s lifecycle.

OnTouchListener: Capturing touch events for specific Views

You can also listen for touch events by using the setOnTouchListener() method to attach a View.OnTouchListener to your View object. The setOnTouchListener() method registers a callback that will be invoked every time a touch event is sent to its attached View, for example here we’re invoking a callback every time the user touches an ImageView:

```java
// Requires the android.view.MotionEvent, android.view.View and android.widget.ImageView imports
ImageView imageView = findViewById(R.id.my_imageView);

imageView.setOnTouchListener(new View.OnTouchListener() {
    @Override
    public boolean onTouch(View v, MotionEvent event) {
        // To do: Respond to touch event
        // Returning true tells Android that this listener consumed the event
        return true;
    }
});
```

Note that if you use View.OnTouchListener, then your listener should not return false for the ACTION_DOWN event. Since ACTION_DOWN is the starting point for all touch events, returning false tells Android that your listener isn’t interested in this gesture, and the listener won’t be called for any of its subsequent events.

Touch slop: Recording movement-based gestures

Touch gestures aren’t always precise! For example, it’s easy for your finger to shift slightly when you’re just trying to tap a button, especially if you’re using your smartphone or tablet on the go, or you have manual dexterity issues.

To help prevent accidental scrolling, Android gestures use the concept of “touch slop”, which is the distance, in pixels, that a pointer can travel before a non-movement-based gesture, such as a tap, becomes a movement-based gesture, such as a drag.
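On a device, you don’t need to hard-code the touch slop: ViewConfiguration.get(context).getScaledTouchSlop() returns it in pixels for the current screen. The following sketch, written in plain Java so it runs off-device, shows the check you might perform on each ACTION_MOVE; the TouchSlop class and its method name are hypothetical.

```java
/**
 * Hypothetical helper: decides whether a pointer has moved far enough from its
 * ACTION_DOWN position to count as a movement-based gesture. On a device,
 * touchSlopPx would come from ViewConfiguration.get(context).getScaledTouchSlop().
 */
final class TouchSlop {
    private TouchSlop() {}

    static boolean exceedsTouchSlop(float downX, float downY, float x, float y, int touchSlopPx) {
        float dx = x - downX;
        float dy = y - downY;
        // Compare squared distances to avoid an unnecessary square root
        return dx * dx + dy * dy > (float) touchSlopPx * touchSlopPx;
    }
}
```

Until this returns true, you’d keep treating the interaction as a potential tap.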


When using movement-based gestures, you need to ensure that the user is in control of any onscreen movement that occurs. For example, if the user is dragging an object across the screen, then the speed that this object is travelling must match the speed of the user’s gesture.

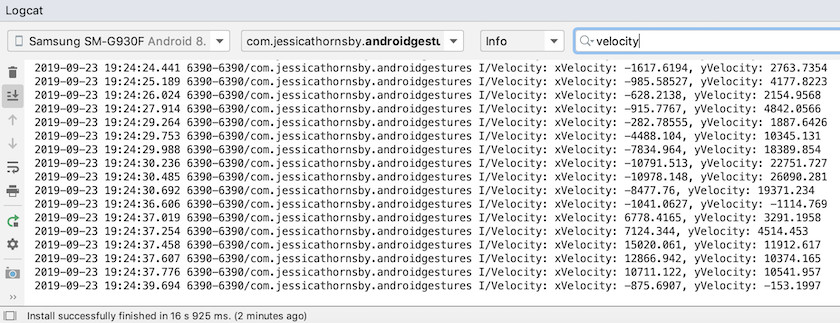

You can measure the velocity of a movement-based gesture, using Android’s VelocityTracker class. In the following Activity, I’m using VelocityTracker to retrieve the speed of a gesture, and then printing the velocity to Android Studio’s Logcat:

```java
import android.app.Activity;
import android.util.Log;
import android.view.MotionEvent;
import android.view.VelocityTracker;

public class MainActivity extends Activity {

    public static final String TAG = "Velocity";

    private VelocityTracker myVelocityTracker;

    @Override
    public boolean onTouchEvent(MotionEvent event) {
        obtainVelocityTracker(event);

        switch (event.getActionMasked()) {
            case MotionEvent.ACTION_UP: {
                // Determine the velocity in pixels per second (1000 ms)
                myVelocityTracker.computeCurrentVelocity(1000);

                // Retrieve the X and Y velocity
                float xVelocity = myVelocityTracker.getXVelocity();
                float yVelocity = myVelocityTracker.getYVelocity();

                // Log the velocity in pixels per second
                Log.i(TAG, "xVelocity: " + xVelocity + ", yVelocity: " + yVelocity);

                // Reset the velocity tracker to its initial state, ready to record the next gesture
                myVelocityTracker.clear();
                break;
            }
            case MotionEvent.ACTION_CANCEL:
                // The gesture was aborted, so discard the recorded movement
                myVelocityTracker.clear();
                break;
            default:
                break;
        }
        return true;
    }

    private void obtainVelocityTracker(MotionEvent event) {
        if (myVelocityTracker == null) {
            // Retrieve a new VelocityTracker object
            myVelocityTracker = VelocityTracker.obtain();
        }
        // Add this event's movement to the tracker
        myVelocityTracker.addMovement(event);
    }

    @Override
    protected void onDestroy() {
        super.onDestroy();
        // Return the tracker to the system once we're done with it
        if (myVelocityTracker != null) {
            myVelocityTracker.recycle();
            myVelocityTracker = null;
        }
    }
}
```

Install this application on your Android device and experiment by performing different movement-based gestures; the velocity of each gesture should be printed to the Logcat window.
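The 1000 passed to computeCurrentVelocity() is the unit of time in milliseconds, which is why the logged values are in pixels per second. The following plain-Java sketch illustrates that arithmetic with a simple two-sample estimate. This is an assumption made for clarity: VelocityTracker itself uses a more robust estimation strategy over several recent samples. The isFling() helper assumes you’d obtain the threshold from ViewConfiguration.get(context).getScaledMinimumFlingVelocity(); both method names are hypothetical.

```java
/**
 * Hypothetical helpers illustrating velocity units. This two-sample estimate is a
 * simplification; VelocityTracker uses a more robust strategy over many samples.
 */
final class VelocityMath {
    private VelocityMath() {}

    /** Velocity in pixels per `units` milliseconds, e.g. units = 1000 for px/s. */
    static float velocity(float startPos, long startTimeMs, float endPos, long endTimeMs, int units) {
        long dt = endTimeMs - startTimeMs;
        if (dt <= 0) {
            // No elapsed time, so there's no meaningful velocity
            return 0f;
        }
        return (endPos - startPos) / dt * units;
    }

    /** True if either axis is moving fast enough to count as a fling. */
    static boolean isFling(float xVelocity, float yVelocity, int minFlingVelocity) {
        return Math.abs(xVelocity) >= minFlingVelocity || Math.abs(yVelocity) >= minFlingVelocity;
    }
}
```

For example, a pointer that travels 300 pixels in 100 ms is moving at 3000 pixels per second.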

GestureDetector: Creating a pinch-to-zoom app with Android gestures

Assuming that you’re using common Android gestures, such as tap and long press, you can use Android’s GestureDetector class to detect gestures without having to process the individual touch events.

To detect a gesture, you’ll need to create an instance of GestureDetector, and then pass every MotionEvent that your View’s (or Activity’s) onTouchEvent(MotionEvent) method receives to the detector’s own onTouchEvent() method. You can then define how each gesture should be handled in the listener’s callbacks.
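In practice, you’d usually extend GestureDetector.SimpleOnGestureListener and override callbacks such as onDoubleTap() or onLongPress(), letting GestureDetector handle the timing for you. To show roughly what it’s checking, here’s a simplified plain-Java model of double-tap recognition: the second ACTION_DOWN must arrive within a timeout of the first ACTION_UP, and land close to the first tap. The DoubleTapCheck class is hypothetical, and GestureDetector applies additional checks that this sketch omits; on a device, the timeout and slop could come from ViewConfiguration.getDoubleTapTimeout() and ViewConfiguration.get(context).getScaledDoubleTapSlop().

```java
/**
 * Hypothetical, simplified model of double-tap recognition. GestureDetector
 * performs extra checks, so treat this as an illustration only.
 */
final class DoubleTapCheck {
    private DoubleTapCheck() {}

    static boolean isDoubleTap(float firstDownX, float firstDownY, long firstUpTimeMs,
                               float secondDownX, float secondDownY, long secondDownTimeMs,
                               long doubleTapTimeoutMs, int doubleTapSlopPx) {
        long gap = secondDownTimeMs - firstUpTimeMs;
        if (gap < 0 || gap > doubleTapTimeoutMs) {
            // Too slow (or out of order) to be a double tap
            return false;
        }
        float dx = secondDownX - firstDownX;
        float dy = secondDownY - firstDownY;
        // The second tap must land near the first one
        return dx * dx + dy * dy <= (float) doubleTapSlopPx * doubleTapSlopPx;
    }
}
```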


Let’s create an application where the user can zoom in and out of an ImageView, using gestures. To start, create a simple layout that contains an image:

```xml
<?xml version="1.0" encoding="utf-8"?>
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:orientation="horizontal">

    <!-- Drawable resource names may only contain lowercase letters, digits and underscores -->
    <ImageView android:id="@+id/imageView"
        android:layout_width="wrap_content"
        android:layout_height="match_parent"
        android:scaleType="matrix"
        android:src="@drawable/my_image"/>

</LinearLayout>
```

To create the zoom in/zoom out effect, I’m using ScaleGestureDetector, which is a convenience class that can listen for a subset of scaling events, plus the SimpleOnScaleGestureListener helper class.

In the following Activity, I’m creating an instance of ScaleGestureDetector for my ImageView, and then passing every MotionEvent that the Activity’s onTouchEvent(MotionEvent) method receives to the detector’s onTouchEvent() method. Finally, I’m defining how the application should handle this gesture.

```java
import android.graphics.Matrix;
import android.os.Bundle;
import android.view.MotionEvent;
import android.view.ScaleGestureDetector;
import android.widget.ImageView;

import androidx.appcompat.app.AppCompatActivity;

public class MainActivity extends AppCompatActivity {

    private final Matrix imageMatrix = new Matrix();
    private ImageView imageView;

    // Start at the image's original size, matching the identity matrix
    private float scale = 1f;
    private ScaleGestureDetector gestureDetector;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
        imageView = findViewById(R.id.imageView);

        // Instantiate the gesture detector
        gestureDetector = new ScaleGestureDetector(MainActivity.this, new ImageListener());
    }

    @Override
    public boolean onTouchEvent(MotionEvent event) {
        // Let gestureDetector inspect all events
        gestureDetector.onTouchEvent(event);
        return true;
    }

    // Implement the scale listener
    private class ImageListener extends ScaleGestureDetector.SimpleOnScaleGestureListener {

        // Respond to scaling events
        @Override
        public boolean onScale(ScaleGestureDetector detector) {
            // Multiply by the scaling factor since the previous scale event
            scale *= detector.getScaleFactor();

            // Set a minimum and maximum size for our image
            scale = Math.max(0.2f, Math.min(scale, 6.0f));

            imageMatrix.setScale(scale, scale);
            imageView.setImageMatrix(imageMatrix);
            return true;
        }
    }
}
```

Try installing this app on a physical Android smartphone or tablet, and you’ll be able to shrink and expand your chosen image using pinch-in and pinch-out gestures.
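If you’re curious about the numbers behind onScale(), the following plain-Java sketch reproduces the arithmetic. getScaleFactor() is documented as the current span divided by the previous span, and with two fingers the span is approximately the distance between them. The PinchMath class and its methods are hypothetical, and the 0.2 to 6.0 clamp matches the Activity above.

```java
/**
 * Hypothetical plain-Java sketch of pinch-to-zoom arithmetic for two pointers.
 */
final class PinchMath {
    private PinchMath() {}

    /** Distance between two pointers, an approximation of the detector's span. */
    static float span(float x0, float y0, float x1, float y1) {
        return (float) Math.hypot(x1 - x0, y1 - y0);
    }

    /** Applies one scale event (currentSpan / previousSpan) and clamps the result. */
    static float applyScale(float currentScale, float previousSpan, float currentSpan,
                            float minScale, float maxScale) {
        if (previousSpan <= 0f) {
            // Avoid dividing by zero if there's no previous span yet
            return currentScale;
        }
        float scaled = currentScale * (currentSpan / previousSpan);
        return Math.max(minScale, Math.min(scaled, maxScale));
    }
}
```

For example, if the user’s fingers move from 100 pixels apart to 200 pixels apart, the image doubles in size, unless that would push it past the maximum.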

Managing multi-touch gestures

Some gestures require you to use multiple pointers, such as the pinch gesture. Every time multiple pointers make contact with the screen, Android generates:

- An ACTION_DOWN event for the first pointer that touches the screen.

- An ACTION_POINTER_DOWN for all subsequent, non-primary pointers that make contact with the screen.

- An ACTION_POINTER_UP, whenever a non-primary pointer is removed from the screen.

- An ACTION_UP event when the last pointer breaks contact with the screen.
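ACTION_POINTER_DOWN and ACTION_POINTER_UP also need to tell you which pointer changed, so Android packs that pointer’s index into the upper bits of the action value. This is why getActionMasked() is the safer choice whenever multiple pointers are involved. The following plain-Java sketch decodes a raw action value the same way; its constants are assumed to mirror MotionEvent.ACTION_MASK (0xff), ACTION_POINTER_INDEX_MASK (0xff00), ACTION_POINTER_INDEX_SHIFT (8), ACTION_POINTER_DOWN (5) and ACTION_POINTER_UP (6), and the ActionDecoder class is hypothetical.

```java
/**
 * Hypothetical decoder for a raw MotionEvent action value. On a device,
 * getActionMasked() and getActionIndex() perform this decoding for you.
 */
final class ActionDecoder {
    static final int ACTION_MASK = 0xff;
    static final int ACTION_POINTER_INDEX_MASK = 0xff00;
    static final int ACTION_POINTER_INDEX_SHIFT = 8;
    static final int ACTION_POINTER_DOWN = 5;
    static final int ACTION_POINTER_UP = 6;

    private ActionDecoder() {}

    /** The action code with the pointer index bits removed. */
    static int actionMasked(int action) {
        return action & ACTION_MASK;
    }

    /** The index of the pointer that went down or up. */
    static int actionIndex(int action) {
        return (action & ACTION_POINTER_INDEX_MASK) >> ACTION_POINTER_INDEX_SHIFT;
    }
}
```

Bear in mind that a pointer’s index can change from one event to the next. If you need to follow a specific finger across events, store its ID from getPointerId(index) and look it up again with findPointerIndex(id).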

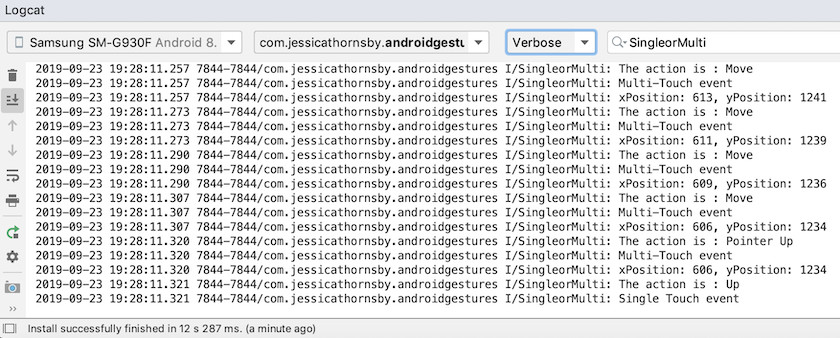

For example, in the following Activity I’m detecting whether a gesture is single-touch or multi-touch and then printing an appropriate message to Android Studio’s Logcat. I’m also printing the action code for each event, and the X and Y coordinates for each pointer, to provide more insight into how Android tracks individual pointers:

```java
import android.app.Activity;
import android.util.Log;
import android.view.MotionEvent;

public class MainActivity extends Activity {

    public static final String TAG = "SingleorMulti";

    @Override
    public boolean onTouchEvent(MotionEvent event) {
        int action = event.getActionMasked();
        String actionCode = "";

        switch (action) {
            case MotionEvent.ACTION_DOWN:
                actionCode = "Down";
                break;
            case MotionEvent.ACTION_POINTER_DOWN:
                actionCode = "Pointer Down";
                break;
            case MotionEvent.ACTION_MOVE:
                actionCode = "Move";
                break;
            case MotionEvent.ACTION_UP:
                actionCode = "Up";
                break;
            case MotionEvent.ACTION_POINTER_UP:
                actionCode = "Pointer Up";
                break;
            case MotionEvent.ACTION_OUTSIDE:
                actionCode = "Outside";
                break;
            case MotionEvent.ACTION_CANCEL:
                actionCode = "Cancel";
                break;
        }

        Log.i(TAG, "The action is : " + actionCode);

        if (event.getPointerCount() > 1) {
            Log.i(TAG, "Multi-Touch event");
        } else {
            Log.i(TAG, "Single Touch event");
        }

        // Log the position of every pointer that's currently on the screen
        for (int i = 0; i < event.getPointerCount(); i++) {
            int xPos = (int) event.getX(i);
            int yPos = (int) event.getY(i);
            Log.i(TAG, "Pointer " + event.getPointerId(i)
                    + " xPosition: " + xPos + ", yPosition: " + yPos);
        }

        return true;
    }
}
```

Managing gestures in ViewGroups

When handling touch events within a ViewGroup, the ViewGroup may have children that are targets for different touch events than the parent ViewGroup itself.

To ensure each child View receives the correct touch events, you’ll need to override the ViewGroup’s onInterceptTouchEvent() method. This method is called every time a touch event is detected on the surface of a ViewGroup, including the surfaces of its children, allowing you to intercept a touch event before it’s dispatched to the child Views.


If the onInterceptTouchEvent() method returns true, then the child View that was previously handling the touch event will receive an ACTION_CANCEL, and the remaining events in this gesture will be sent to the parent’s onTouchEvent() method instead.
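A common way to decide when a ViewGroup should start intercepting is to wait until the pointer has travelled further than the touch slop in the direction the ViewGroup scrolls. The following plain-Java sketch models that decision for a horizontally scrolling container. The HorizontalScrollPolicy class and its methods are hypothetical, and on a device touchSlopPx would come from ViewConfiguration.get(context).getScaledTouchSlop(). You’d call onDown() for ACTION_DOWN, onMove() for ACTION_MOVE (returning its result from onInterceptTouchEvent()), and onUpOrCancel() for ACTION_UP and ACTION_CANCEL.

```java
/**
 * Hypothetical scroll-detection policy for a horizontally scrolling ViewGroup:
 * intercept once the pointer has moved horizontally past the touch slop, and
 * further horizontally than vertically.
 */
final class HorizontalScrollPolicy {
    private final int touchSlopPx;
    private float downX;
    private float downY;
    private boolean isScrolling;

    HorizontalScrollPolicy(int touchSlopPx) {
        this.touchSlopPx = touchSlopPx;
    }

    /** ACTION_DOWN: remember where the gesture started. */
    void onDown(float x, float y) {
        downX = x;
        downY = y;
        isScrolling = false;
    }

    /** ACTION_MOVE: returns true once the ViewGroup should intercept. */
    boolean onMove(float x, float y) {
        if (!isScrolling) {
            float dx = Math.abs(x - downX);
            float dy = Math.abs(y - downY);
            isScrolling = dx > touchSlopPx && dx > dy;
        }
        return isScrolling;
    }

    /** ACTION_UP or ACTION_CANCEL: the gesture is over. */
    void onUpOrCancel() {
        isScrolling = false;
    }
}
```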

For example, in the following snippet we’re deciding whether to intercept a touch event, based on whether it’s a scrolling event:

```java
// These overrides belong inside your ViewGroup subclass, which is assumed
// to declare a field such as: private boolean mIsScrolling = false;

@Override
public boolean onInterceptTouchEvent(MotionEvent ev) {
    final int action = ev.getActionMasked();

    // The gesture is over, so release any scroll state
    if (action == MotionEvent.ACTION_CANCEL || action == MotionEvent.ACTION_UP) {
        mIsScrolling = false;
        // Do not intercept the touch event
        return false;
    }

    switch (action) {
        case MotionEvent.ACTION_MOVE: {
            if (mIsScrolling) {
                // Intercept the touch event
                return true;
            }
            break;
        }
        // ...
    }

    // By default, let the child Views handle the event
    return false;
}

@Override
public boolean onTouchEvent(MotionEvent ev) {
    // To do: Handle the touch event
    return true;
}
```

Make your app an easy target: Expanding touchable areas

You can make smaller UI elements easier to interact with, by expanding the size of the View’s touchable region, sometimes referred to as the hit rectangle. Alternatively, if your UI contains multiple interactive UI elements, then you can shrink their touchable targets, to help prevent users from triggering the “wrong” View.

You can tweak the size of a child View’s touchable area, using the TouchDelegate class.

Let’s create a button, and then see how we’d expand this button’s touchable region.

```xml
<RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context=".MainActivity">

    <Button
        android:id="@+id/button"
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:text="Button" />

</RelativeLayout>
```

To change the View’s touchable region, we need to complete the following steps:

1. Retrieve the parent View and post a Runnable on the UI thread

Before we call the getHitRect() method and retrieve the child’s touchable area, we need to ensure that the parent has laid out its child Views:

```java
parentView.post(new Runnable() {
    @Override
    public void run() {
        Rect delegateArea = new Rect();
        // Steps 2 to 4 go here
    }
});
```

2. Retrieve the bounds of the child’s touchable area

We can retrieve the button’s current touchable target, using the getHitRect() method:

```java
Rect delegateArea = new Rect();
Button myButton = (Button) findViewById(R.id.button);
// ...
myButton.getHitRect(delegateArea);
```

3. Extend the bounds of the touchable area

Here, we’re increasing the button’s touchable target on the bottom and right-hand sides:

```java
delegateArea.right += 400;
delegateArea.bottom += 400;
```

4. Instantiate a TouchDelegate

Finally, we need to pass the expanded touchable target to an instance of Android’s TouchDelegate class:

```java
TouchDelegate touchDelegate = new TouchDelegate(delegateArea, myButton);

if (View.class.isInstance(myButton.getParent())) {
    ((View) myButton.getParent()).setTouchDelegate(touchDelegate);
}
```

Here’s our completed MainActivity:

```java
import androidx.appcompat.app.AppCompatActivity;

import android.graphics.Rect;
import android.os.Bundle;
import android.view.TouchDelegate;
import android.view.View;
import android.widget.Button;
import android.widget.Toast;

public class MainActivity extends AppCompatActivity {

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);

        final Button myButton = findViewById(R.id.button);

        myButton.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View view) {
                Toast.makeText(MainActivity.this, "Button clicked", Toast.LENGTH_SHORT).show();
            }
        });

        // The TouchDelegate must be set on the button's parent, not the button itself
        final View parentView = (View) myButton.getParent();

        // Wait until the parent has laid out its children before reading the hit rect
        parentView.post(new Runnable() {
            @Override
            public void run() {
                Rect delegateArea = new Rect();
                myButton.getHitRect(delegateArea);

                // Expand the touchable area by 400 pixels to the right and bottom
                delegateArea.right += 400;
                delegateArea.bottom += 400;

                TouchDelegate touchDelegate = new TouchDelegate(delegateArea, myButton);
                parentView.setTouchDelegate(touchDelegate);
            }
        });
    }
}
```

Install this project on your Android device and try tapping around the right and bottom of the button. Since we expanded the touchable area by a significant amount, the toast should appear whenever you tap within the expanded region to the right of or below the button.

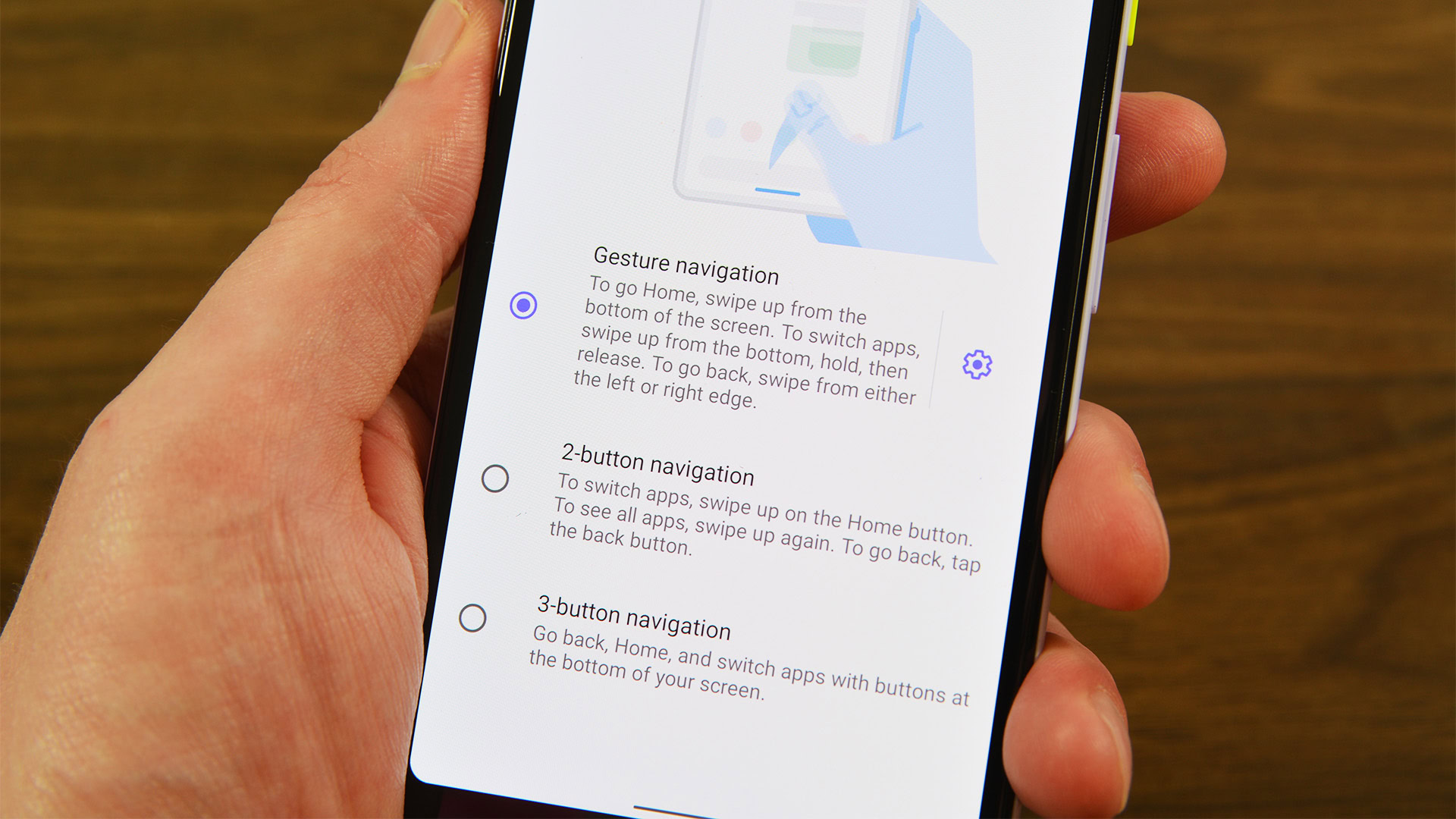

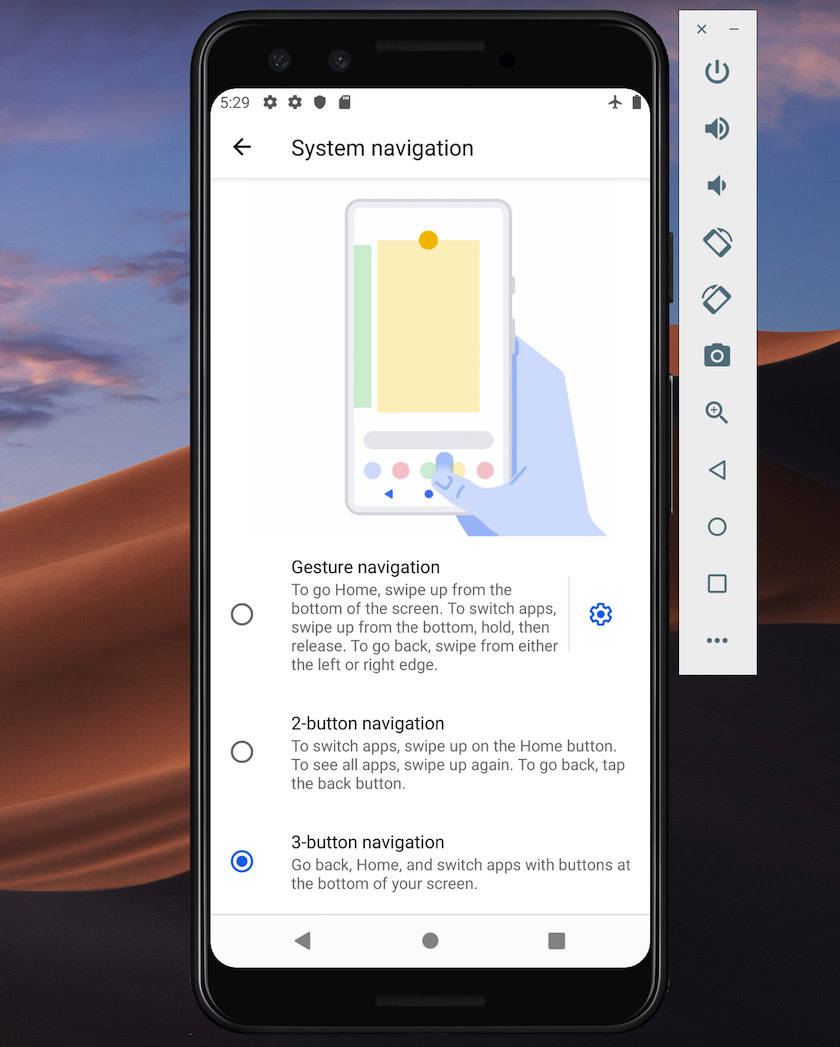
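Note that the 400 in this example is measured in pixels, so the expanded area covers a much smaller physical region on a high-density screen than on a low-density one. One option is to express the expansion in dp and convert it using the display density. The following plain-Java sketch shows that arithmetic; the HitRectMath class is hypothetical, it represents a Rect as a {left, top, right, bottom} array, and on a device the density would come from getResources().getDisplayMetrics().density.

```java
/**
 * Hypothetical plain-Java sketch of density-independent hit-rect expansion.
 * Rectangles are {left, top, right, bottom} arrays standing in for android.graphics.Rect.
 */
final class HitRectMath {
    private HitRectMath() {}

    /** Converts dp to pixels: px = dp * density, rounded to the nearest pixel. */
    static int dpToPx(float dp, float density) {
        return Math.round(dp * density);
    }

    /** Returns a new rectangle expanded to the right and bottom by extraDp. */
    static int[] expandRightBottom(int[] rect, float extraDp, float density) {
        int extraPx = dpToPx(extraDp, density);
        return new int[] {rect[0], rect[1], rect[2] + extraPx, rect[3] + extraPx};
    }

    /** Mirrors Rect.contains(x, y): left/top are inside, right/bottom are not. */
    static boolean contains(int[] rect, int x, int y) {
        return x >= rect[0] && x < rect[2] && y >= rect[1] && y < rect[3];
    }
}
```

For example, on a screen with a density of 2.0, expanding by 200dp gives the same 400-pixel expansion used above.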

Android 10’s new navigational model: Gesture-based navigation

Beginning with API level 29, Android supports full gesture-based navigation.

Users on Android 10 and higher can trigger the following actions using gestures alone:

- Back. Swipe inwards from the left or right edge of the screen.

- Home. Swipe up from the bottom of the screen.

- Launch Assistant. Swipe in from the bottom corner of the screen.

Android 10 continues to support traditional 3-button navigation, so users can switch back to button-based navigation if they prefer.

According to Google, gesture-based navigation will be the default for Android 10 and higher, so you need to ensure that your application provides a good user experience with Android’s new gesture-based model.

Take your UI edge-to-edge

Gesture-based navigation makes more of the screen available to your app, so you can deliver a more immersive experience by extending your app’s content edge-to-edge.

By default, apps are laid out below the status bar and above the navigation bar (collectively referred to as the system bars). In an edge-to-edge display, your application is laid out behind the navigation bar, and optionally behind the status bar if it makes sense for your particular application.

You can tell the system to lay out your app behind the navigation bar using the View.setSystemUiVisibility() method and the SYSTEM_UI_FLAG_LAYOUT_STABLE and SYSTEM_UI_FLAG_LAYOUT_HIDE_NAVIGATION flags. For example:

```java
view.setSystemUiVisibility(View.SYSTEM_UI_FLAG_LAYOUT_HIDE_NAVIGATION
        | View.SYSTEM_UI_FLAG_LAYOUT_STABLE);
```

Note that if you’re using a View class that automatically manages the status bar, such as CoordinatorLayout, then these flags may already be set.

Turning the system bar transparent

Once your app is displaying edge-to-edge, you need to ensure that the user can see your app’s content behind the system bars.

To make the system bars fully transparent, add the following to your theme:

```xml
<style name="AppTheme" parent="...">
    <item name="android:navigationBarColor">@android:color/transparent</item>
    <item name="android:statusBarColor">@android:color/transparent</item>
</style>
```

Android 10 automatically changes the color of the system bars’ contents (such as the navigation handle) based on the content behind them, in a process known as dynamic color adaptation, so you don’t need to make these adjustments manually.

Check for conflicting gestures

You’ll need to test that Android’s new gesture-based navigation system doesn’t conflict with your app’s existing gestures.

In particular, you should check that the Back gesture (swiping inwards from the left or right edge of the screen) doesn’t interfere with any of your app’s interactive elements. For example, if your application features a navigation drawer along the left side of the screen, then every time the user tries to drag this drawer open, they may trigger Android’s Back gesture instead, and could wind up exiting your app.

If testing does reveal gesture conflicts, then you can provide a list of areas within your app where the system shouldn’t interpret touch events as Back gestures.

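To provide this list of exclusion rects, pass a List of Rect objects to Android’s new View.setSystemGestureExclusionRects() method, for example:

```java
// These overrides belong inside a custom View subclass, and need the
// android.graphics.Canvas, android.graphics.Rect, java.util.ArrayList
// and java.util.List imports

// The Rects are in this View's own (local) coordinate space
private final List<Rect> exclusionRects = new ArrayList<>();

@Override
protected void onLayout(boolean changed, int left, int top, int right, int bottom) {
    super.onLayout(changed, left, top, right, bottom);
    // Update exclusionRects here to cover the area that needs precise gestures
    setSystemGestureExclusionRects(exclusionRects);
}

@Override
protected void onDraw(Canvas canvas) {
    super.onDraw(canvas);
    setSystemGestureExclusionRects(exclusionRects);
}
```

Note that you should only disable the Back gesture for Views that require a precision gesture within a small area, and not for broad regions or simple tap targets, such as Buttons.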

What about Android 10’s Home gesture?

At the time of writing, it isn’t possible to opt out of Android 10’s Home gesture (swiping up from the bottom of the screen). If you’re encountering problems with the Home gesture, then one potential workaround is to set touch recognition thresholds using WindowInsets.getMandatorySystemGestureInsets().
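As a rough sketch of that workaround, you could read the insets in a View.OnApplyWindowInsetsListener, and then ignore touches that start inside them. The plain-Java example below models that check. It assumes bottomInset comes from WindowInsets.getMandatorySystemGestureInsets().bottom, and leftInset and rightInset from WindowInsets.getSystemGestureInsets() (the edges used by the Back gesture); the GestureZones class and its method are hypothetical.

```java
/**
 * Hypothetical sketch: uses system gesture inset sizes (in pixels) as touch
 * recognition thresholds for a window of the given width and height.
 */
final class GestureZones {
    private GestureZones() {}

    /** True if a touch starting at (x, y) falls inside a system gesture area. */
    static boolean startsInSystemGestureArea(float x, float y, int width, int height,
                                             int leftInset, int rightInset, int bottomInset) {
        return x < leftInset || x >= width - rightInset || y >= height - bottomInset;
    }
}
```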

Gesture-based navigation for gaming apps

Some applications, such as mobile games, don’t have a View hierarchy but may still require the user to perform gestures in areas that trigger Android’s gesture-based navigation system.

If you’re encountering gesture conflicts in your gaming app, then you can use the Window.setSystemGestureExclusionRects() method to provide a list of areas where the system shouldn’t interpret touch events as Back gestures.

Alternatively, you can request that your application is laid out in immersive mode, which disables all system gestures.

You can enable immersive mode by calling setSystemUiVisibility() and passing the following flags:

- SYSTEM_UI_FLAG_FULLSCREEN. All non-critical system elements will be hidden, allowing your app’s content to take over the entire screen.

- SYSTEM_UI_FLAG_HIDE_NAVIGATION. Temporarily hide the system navigation.

- SYSTEM_UI_FLAG_IMMERSIVE. This View should remain interactive when the navigation bar is hidden. Note that for this flag to have any effect, it must be used in combination with SYSTEM_UI_FLAG_HIDE_NAVIGATION.

During immersive mode, the user can re-enable system gestures at any point, by swiping from the bottom of the screen.

Best practices: Using gestures effectively

Now we’ve seen how to implement various touch gestures, and the steps you can take to get your app ready for Android’s new gesture-based navigation system, let’s look at some best practices to ensure you’re using gestures effectively.

Don’t leave your users guessing: Highlight interactive components

If you’re using standard Views, then most of the time your users should automatically be able to identify your app’s interactive components, and understand how to interact with them. For example, if a user sees a button, then they’ll immediately know that they’re expected to tap that button. However, occasionally it may not be clear that a particular View is interactive, and in these instances you’ll need to provide them with some additional visual cues.

There are several ways that you can draw attention to your app’s interactive Views. Firstly, you could add a short animation such as a pulsing effect, or elevate the View, for example elevating a card that the user can drag on screen to expand.

Alternatively, you could be more explicit and use icons, such as an arrow pointing to the View that the user needs to interact with next.

For more complex interactions, you could design a short animation that demonstrates how the user should interact with the View, for example animating a card so that it slides partially across the screen and then back again.

Use animations for transformative gestures

When a user is performing a transformative gesture, all affected UI elements should animate in a way that indicates what will happen when this gesture is completed. For example, if the user is pinching to shrink an image, then the image should decrease in size while the user is performing that gesture, instead of “snapping” to the new size once the gesture is complete.

Providing visual cues for in-progress actions

For gestures that execute actions, you should communicate the action this gesture will perform once it’s complete. For example, when you start to drag an email in the Gmail application, it’ll reveal an Archive icon, indicating that this email will be archived if you continue with the dragging action.

By indicating the completed action while the user is performing the action gesture, you’re giving them the opportunity to abort the gesture, if the outcome isn’t what they expected.

Wrapping up this Android gesture tutorial

In this article, I showed you how to implement various gestures in your Android applications, and how to retrieve information about in-progress gestures, including the gesture’s velocity and whether there are multiple pointers involved. We also covered Android 10’s new gesture-based navigation system, and the steps you can take to ensure your application is ready for this huge overhaul in how users interact with their Android devices.

Do you have any more best practices for using Android gestures in your app? Let us know in the comments below!