Affiliate links on Android Authority may earn us a commission.

What is machine learning and how does it work?

From chatbots like ChatGPT and Google Bard to recommendations on websites like Amazon and YouTube, machine learning influences almost every aspect of our daily lives.

Machine learning is a subset of artificial intelligence that allows computers to learn from data, much like we learn from experience when picking up a new skill. When implemented correctly, the technology can perform some tasks better than any human, often within seconds.

With machine learning now so common, you may wonder how it works and what its limitations are. Here’s a simple primer on the technology. Don’t worry if you don’t have a background in computer science: this article is a high-level overview of what happens under the hood.

What is machine learning?

Even though many people use the terms machine learning (ML) and artificial intelligence (AI) interchangeably, there’s actually a difference between the two.

Early applications of AI, dating back several decades, were extremely basic by today’s standards. A chess game where you play against a computer-controlled opponent, for instance, was once considered revolutionary. It’s easy to see why: the ability to solve problems based on a set of rules can qualify as basic “intelligence”, after all. These days, however, we’d consider such a system rudimentary because it lacks experience, a key component of human intelligence. This is where machine learning comes in.


Machine learning adds a new dimension to artificial intelligence: it enables computers to learn or train themselves from massive amounts of existing data. In this context, “learning” means extracting patterns from a given set of data. Think about how our own intelligence works. When we come across something unfamiliar, we use our senses to study its features and then commit those to memory so that we can recognize it the next time.
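To make the idea of “extracting patterns” concrete, here is a minimal Python sketch contrasting a hand-written rule (like the rule-based systems described above) with a rule learned from examples. The spam-filtering task, the data, and the function names are hypothetical, and the “learning” here is simply choosing the cutoff that makes the fewest mistakes on labeled training data, which is far simpler than what real ML systems do.

```python
# Hypothetical training data: (number of suspicious words in a message, is it spam?)
training_data = [(0, False), (1, False), (2, False), (3, True), (5, True), (8, True)]


def hand_written_rule(count: int) -> bool:
    """Rule-based approach: a programmer picks the cutoff by hand."""
    return count > 6


def learn_threshold(data: list[tuple[int, bool]]) -> int:
    """Learning approach: pick the cutoff that makes the fewest mistakes on the data."""
    best_threshold, best_errors = 0, len(data) + 1
    for threshold in sorted({count for count, _ in data}):
        # Predict "spam" when the count is at or above the candidate threshold.
        errors = sum((count >= threshold) != label for count, label in data)
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


threshold = learn_threshold(training_data)
print(threshold)               # 3
print(hand_written_rule(4))    # False: the hand-picked rule misses this message
print(4 >= threshold)          # True: the learned rule flags it
```

The programmer never types the number 3; it comes from the data, so feeding in different examples would produce a different rule.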

How does machine learning work?

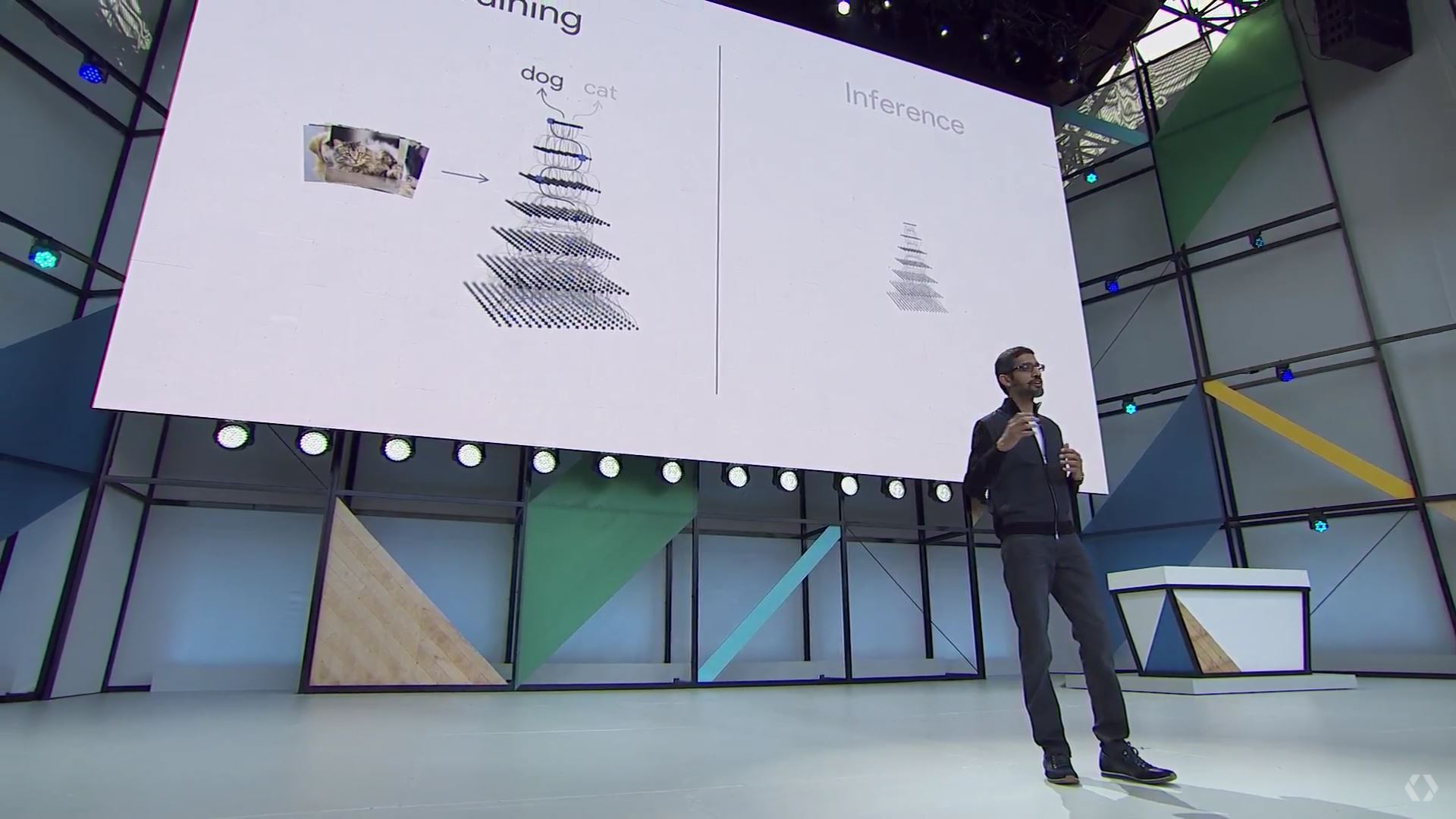

Machine learning involves two distinct phases: training and inference.

- Training: In the training stage, a computer algorithm analyzes a bunch of sample or training data to extract relevant features and patterns. The data can be anything — numbers, images, text, and even speech.

- Inference: The output of a machine learning algorithm is often referred to as a model. You can think of ML models as dictionaries or reference manuals as they’re used for future predictions. In other words, we use trained models to infer or predict results from new data that our program has never seen before.
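The two phases can be illustrated with a deliberately tiny model. The sketch below assumes a nearest-centroid classifier, a simple technique chosen only for illustration: training boils each label’s examples down to an average point, and inference compares a new input against those stored averages. The features and numbers are hypothetical.

```python
from statistics import mean

Point = tuple[float, float]


def train(samples: list[tuple[Point, str]]) -> dict[str, Point]:
    """Training: summarize labeled samples into one average point (centroid) per label."""
    by_label: dict[str, list[Point]] = {}
    for point, label in samples:
        by_label.setdefault(label, []).append(point)
    return {
        label: (mean(p[0] for p in points), mean(p[1] for p in points))
        for label, points in by_label.items()
    }


def predict(model: dict[str, Point], point: Point) -> str:
    """Inference: return the label whose centroid is closest to the new point."""
    def squared_distance(label: str) -> float:
        cx, cy = model[label]
        return (point[0] - cx) ** 2 + (point[1] - cy) ** 2
    return min(model, key=squared_distance)


# Hypothetical features: (weight in kg, ear length in cm).
samples = [((4.0, 6.0), "cat"), ((5.0, 7.0), "cat"), ((25.0, 10.0), "dog"), ((30.0, 12.0), "dog")]
model = train(samples)              # done once, ahead of time
print(predict(model, (6.0, 6.5)))   # cat
```

Note that `predict` never looks at the original samples; the small `model` dictionary is all it needs, which is the “reference manual” role described above.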

The success of a machine learning project depends on three factors: the algorithm itself, the amount of data you feed it, and the dataset’s quality. Every now and then, researchers propose new algorithms or techniques that improve accuracy and reduce errors, as we’ll see in a later section. But even without new algorithms, increasing the amount of data can help cover more edge cases and improve the accuracy of predictions.


The training process usually involves analyzing thousands or even millions of samples. As you’d expect, this is a fairly hardware-intensive process that needs to be completed ahead of time. Once the training process is complete and all of the relevant features have been analyzed, however, some resulting models can be small enough to fit on common devices like smartphones.

Consider a machine learning app that reads handwritten text, like Google Lens. As part of the training process, a developer first feeds sample images to an ML algorithm. This eventually produces an ML model that can be packaged and deployed within something like an Android application.

When users install the app and feed it with images, their devices don’t have to perform the hardware-intensive training. The app can simply reference the trained model to infer new results. In the real world, you won’t see any of this, of course — the app will simply convert handwritten words into digital text.
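The same split explains why training can happen ahead of time. Here is a minimal sketch, assuming a toy model stored as a JSON file; the file name, the single handwriting “score” feature, and both helper functions are hypothetical. Real Android apps often ship models in a dedicated format such as TensorFlow Lite instead.

```python
import json
import os
import tempfile


# --- On the developer's machine: the expensive part, done once. ---
def train(samples: list[tuple[float, str]]) -> dict[str, float]:
    """Hypothetical 'training': average a single feature score per label."""
    sums: dict[str, float] = {}
    counts: dict[str, int] = {}
    for value, label in samples:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


# --- On the user's device: only load the file and run inference. ---
def infer(model: dict[str, float], value: float) -> str:
    """Return the label whose stored average is closest to the new value."""
    return min(model, key=lambda label: abs(model[label] - value))


model_path = os.path.join(tempfile.gettempdir(), "model.json")  # hypothetical file name
training_samples = [(0.1, "printed"), (0.2, "printed"), (0.8, "handwritten"), (0.9, "handwritten")]
with open(model_path, "w") as f:
    json.dump(train(training_samples), f)

with open(model_path) as f:
    shipped_model = json.load(f)  # no training data or training code needed here
print(infer(shipped_model, 0.75))  # handwritten
```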

Training a machine learning model is a hardware-intensive task that may take several hours or even days.

Next, here’s a rundown of the main machine learning training techniques and how they differ from each other.

Types of machine learning: Supervised, unsupervised, reinforcement

When training a machine learning model, you can use two types of datasets: labeled and unlabeled.

Take a model that identifies images of dogs and cats, for example. If you feed the algorithm images labeled with the animal they show, that’s a labeled dataset. However, if you expect the algorithm to figure out the differentiating features all on its own (that is, without labels indicating whether the image contains a dog or cat), it’s an unlabeled dataset. Depending on your dataset, you can use different approaches to machine learning:

- Supervised learning: In supervised learning, we use a labeled dataset to help the training algorithm know what to look for.

- Unsupervised learning: If you’re dealing with an unlabeled dataset, you simply allow the algorithm to draw its own conclusions, for example by grouping similar data points together. No human needs to label the examples beforehand.

- Reinforcement learning: Reinforcement learning works well when there are many possible ways to reach a goal. It’s a system of trial and error: actions that lead to good outcomes are rewarded, while those that lead to bad outcomes are penalized. This means the model can evolve based on its own experiences over time.
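Trial-and-error learning can be sketched with one of the simplest reinforcement learning setups, an epsilon-greedy “multi-armed bandit”. This is not the method DeepMind used for Go; it only shows the core loop of acting, receiving a reward, and updating. The hidden success rates and parameters are hypothetical.

```python
import random


def epsilon_greedy(reward_probs: list[float], steps: int = 5000,
                   epsilon: float = 0.1, seed: int = 0) -> list[float]:
    """Learn the value of each action purely from rewards, by trial and error."""
    rng = random.Random(seed)
    estimates = [0.0] * len(reward_probs)  # learned value of each action
    counts = [0] * len(reward_probs)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(reward_probs))  # explore: try a random action
        else:
            action = max(range(len(estimates)), key=estimates.__getitem__)  # exploit the best so far
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0  # environment's feedback
        counts[action] += 1
        # Incremental average: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates


estimates = epsilon_greedy([0.2, 0.5, 0.8])  # hypothetical hidden success rates
best = max(range(len(estimates)), key=estimates.__getitem__)
print(best, [round(e, 2) for e in estimates])
```

Most of the time the agent exploits the action it currently rates highest, but a small fraction of random exploration (`epsilon`) lets it discover better options it would otherwise never try.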

A game of chess is a great fit for reinforcement learning because the algorithm can learn from its mistakes. In fact, Google’s DeepMind subsidiary built an ML program, AlphaGo, that used reinforcement learning to get better at the board game Go. Between 2016 and 2017, it defeated multiple Go world champions in competitive settings, a remarkable achievement to say the least.

As for unsupervised learning, say an e-commerce website like Amazon wants to create a targeted marketing campaign. It typically already knows a lot about its customers, including their age, purchasing history, browsing habits, location, and much more. A machine learning algorithm can find relationships between these variables on its own, for example helping marketers realize that customers from a particular area tend to purchase certain types of clothing. Either way, it’s a largely hands-off, number-crunching process.
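A common way to find such groupings in unlabeled data is k-means clustering. The sketch below is a minimal version, not a description of what Amazon actually runs; the customer features (age and monthly spend) are hypothetical, and starting from the first k points is a simplification of the smarter initialization real libraries use.

```python
import math


def kmeans(points: list[tuple[float, float]], k: int, iterations: int = 10) -> list[int]:
    """Group points into k clusters; returns a cluster index for each point."""
    centroids = points[:k]  # simple deterministic start: the first k points
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        assignments = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                new_centroids.append((sum(x for x, _ in members) / len(members),
                                      sum(y for _, y in members) / len(members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid in place
        centroids = new_centroids
    return assignments


# Hypothetical customers: (age, monthly spend in dollars). No labels are given.
customers = [(22, 30), (60, 5), (25, 35), (23, 28), (58, 8), (62, 6)]
print(kmeans(customers, k=2))  # [0, 1, 0, 0, 1, 1]
```

The algorithm was never told which customers belong together; it separated the younger, higher-spending group from the older, lower-spending one purely from the numbers.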

What is machine learning used for? Examples and advantages

Here are some ways in which machine learning influences our digital lives:

- Facial recognition: Even common smartphone features like face unlock rely on machine learning. Take the Google Photos app as another example. It not only detects faces in your photos but also uses machine learning to identify unique facial features for each individual. The pictures you upload help improve the system, allowing it to make more accurate predictions in the future. The app also sometimes prompts you to verify whether a certain match is accurate, which indicates that the system has low confidence in that particular prediction.

- Computational photography: For over half a decade now, smartphones have used machine learning to enhance images and videos beyond the hardware’s capabilities. From impressive HDR stacking to removing unwanted objects, computational photography has become a mainstay of modern smartphones.

- AI chatbots: If you’ve ever used ChatGPT or Bing Chat, you’ve experienced the power of machine learning through language models. These chatbots have been trained on billions of text samples, which allows them to understand and respond to user inquiries in real time. Their developers can also use past interactions to further train and refine the models, improving future responses over time.

- Content recommendations: Social media platforms like Instagram show you targeted advertisements based on the posts you interact with. If you like an image containing food, for example, you might get advertisements related to meal kits or nearby restaurants. Similarly, streaming services like YouTube and Netflix can infer new genres and topics you may be interested in, based on your watch history and duration.

- Upscaling photos and videos: NVIDIA’s DLSS is a big deal in the gaming industry where it helps improve image quality through machine learning. The way DLSS works is rather straightforward — an image is first generated at a lower resolution and then a pre-trained ML model helps upscale it. The results are impressive, to say the least — far better than traditional, non-ML upscaling technologies.
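The Google Photos behavior described above, asking you to confirm a match when the system is unsure, can be sketched with a common pattern: convert a model’s raw scores into probabilities with the softmax function, then ask the user whenever the top probability falls below a threshold. The names, scores, and the 0.8 threshold are hypothetical, and this is not a description of how Google Photos is actually implemented.

```python
import math


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Turn raw model scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {name: math.exp(s - top) for name, s in scores.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


def label_face(scores: dict[str, float], threshold: float = 0.8) -> str:
    """Tag automatically when confident, otherwise ask the user to verify."""
    probs = softmax(scores)
    best = max(probs, key=probs.get)
    if probs[best] >= threshold:
        return best
    return f"Is this {best}?"


print(label_face({"Alice": 4.0, "Bob": 1.0}))  # Alice
print(label_face({"Alice": 2.0, "Bob": 1.8}))  # Is this Alice?
```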

Disadvantages of machine learning

Machine learning is all about achieving reasonably high accuracy with the least amount of effort and time. It’s not always successful, of course.

In 2016, Microsoft unveiled a state-of-the-art chatbot named Tay. As a showcase of its human-like conversational abilities, the company allowed Tay to interact with the public through a Twitter account. However, the project was taken offline within 24 hours, after the bot began responding with derogatory remarks and other inappropriate dialogue. This highlights an important point: machine learning is only really useful if the training data is reasonably high quality and aligns with your end goal. Tay learned from live interactions on Twitter, which meant malicious users could easily manipulate what it learned.

Machine learning isn't a one-size-fits-all arrangement. It requires careful planning, a varied and clean dataset, and occasional supervision.

In that vein, bias is another potential disadvantage of machine learning. If the dataset used to train a model is limited in its scope, it may produce results that discriminate against certain sections of the population. For example, Harvard Business Review highlighted how a biased AI can be more likely to pick job candidates of a certain race or gender.
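One simple way to check a model for this kind of bias is to compare how often it selects candidates from each group. The sketch below assumes hypothetical screening decisions and group labels; real fairness audits look at several metrics, not just this one.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Fraction of candidates the model selected, broken down by group."""
    selected = defaultdict(int)
    total = defaultdict(int)
    for group, was_selected in decisions:
        total[group] += 1
        selected[group] += was_selected  # True counts as 1, False as 0
    return {group: selected[group] / total[group] for group in total}


# Hypothetical model outputs: (applicant group, selected for interview?)
decisions = [("A", True), ("A", True), ("A", False), ("A", True),
             ("B", False), ("B", True), ("B", False), ("B", False)]
print(selection_rates(decisions))  # {'A': 0.75, 'B': 0.25}
```

A large gap like this doesn’t prove discrimination on its own, but it is a signal that the training data and model deserve a closer look.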

Common machine learning terms: A glossary

If you’ve read any other resources on machine learning, chances are that you’ve come across a few confusing terms. So here’s a quick rundown of the most common ML-related words and what they mean:

- Classification: In supervised learning, classification refers to predicting which category (or class) a new data point belongs to, based on patterns learned from a labeled dataset. An example of classification would be separating spam emails from legitimate ones.

- Clustering: Clustering is a type of unsupervised learning, where the algorithm finds patterns without relying on a labeled dataset. It then groups similar data points into different buckets. Netflix, for example, uses clustering to predict whether you’re likely to enjoy a show.

- Overfitting: If a model learns its training data too closely, it might perform poorly when tested with new, unseen data points. This is known as overfitting. For example, if you only train a model on images of one specific banana variety, it may fail to recognize bananas of other varieties.

- Epoch: When a machine learning algorithm has analyzed its training dataset once, we call this a single epoch. So if it goes over the training data five times, we can say the model has been trained for five epochs.

- Regularization: A machine learning engineer might add a penalty to the training process so that the model doesn’t learn the training data too perfectly. This technique, known as regularization, helps prevent overfitting and lets the model make better predictions on new, unseen data.
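Epochs and regularization are easiest to see in a tiny training loop. This sketch fits a single weight `w` in the line y ≈ w·x using gradient descent. Each pass over the data is one epoch, and the optional `l2` term adds a penalty proportional to w² (a common form called L2 regularization) that pulls the weight toward zero. The data points, learning rate, and penalty strength are hypothetical, and applying the penalty on every sample update is a simplification.

```python
def train(data: list[tuple[float, float]], epochs: int,
          l2: float = 0.0, lr: float = 0.05) -> float:
    """Fit y ≈ w * x with gradient descent; returns the learned weight w."""
    w = 0.0
    for _ in range(epochs):  # one epoch = one full pass over the training data
        for x, y in data:
            error = w * x - y
            # Gradient of error**2 + l2 * w**2 with respect to w.
            grad = 2 * error * x + 2 * l2 * w
            w -= lr * grad
    return w


data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2)]  # hypothetical points, roughly y = 2x
print(round(train(data, epochs=5), 3))          # 2.062
print(round(train(data, epochs=5, l2=1.0), 3))  # 1.86
```

With the penalty, the learned weight settles lower than the unpenalized fit; in a real model, that restraint helps keep it from memorizing noise in the training data.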

Besides these terms, you may have also heard of neural networks and deep learning. These are a bit more involved, though, so let’s talk about them in more detail.

Machine learning vs neural networks vs deep learning

A neural network is a specific type of machine learning model inspired by the behavior of the human brain. Biological neurons carry signals throughout an animal’s body: sensory neurons take in information from our surroundings and transmit electrical signals to the brain, where billions of neurons communicate with each other, helping us see, feel, hear, and everything in between.


In that vein, artificial neurons in a neural network talk to each other as well. They’re organized into “layers”, with each layer handling one part of a complex problem. Each layer is made up of neurons (also called nodes) that perform a specific task and pass their results to nodes in the next layer. In a neural network trained to recognize objects, for example, one layer might contain neurons that detect edges, another might respond to changes in color, and so on.

Layers are linked to each other, so “activating” a particular chain of neurons gives you a certain predictable output. Because of this multi-layer approach, neural networks excel at solving complex problems. Consider autonomous or self-driving vehicles, for instance. They use a myriad of sensors and cameras to detect roads, signage, pedestrians, and obstacles. All of these variables have some complex relationship with each other, making it a perfect application for a multi-layered neural network.

Deep learning is a term that’s often used to describe a neural network with many layers. The term “deep” here simply refers to the layer depth.
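Here is a minimal sketch of how layers pass results to each other. Each neuron computes a weighted sum of its inputs plus a bias, applies a simple activation function (ReLU, which zeroes out negative values), and hands the result to the next layer. The weights are hand-picked for illustration rather than learned, and they make this two-layer network compute XOR, a function no single neuron of this kind can represent on its own. A “deep” network simply stacks many more such layers.

```python
def relu(values: list[float]) -> list[float]:
    """Activation function: keep positive values, replace negatives with zero."""
    return [max(0.0, v) for v in values]


def dense(inputs: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """One fully connected layer: each neuron takes a weighted sum of every input."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def network(x1: float, x2: float) -> float:
    # Hidden layer: two neurons with hand-picked (hypothetical, untrained) weights.
    hidden = relu(dense([x1, x2], weights=[[1, 1], [1, 1]], biases=[0, -1]))
    # Output layer: one neuron combining the hidden layer's results.
    return dense(hidden, weights=[[1, -2]], biases=[0])[0]


for a in (0, 1):
    for b in (0, 1):
        print(a, b, int(network(a, b)))  # third column follows XOR: 0, 1, 1, 0
```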

Machine learning hardware: How does training work?

Many of the aforementioned machine learning applications, including facial recognition and ML-based image upscaling, were once impossible to accomplish on consumer-grade hardware. In other words, you had to connect to a powerful server sitting in a data center to accomplish most ML-related tasks.

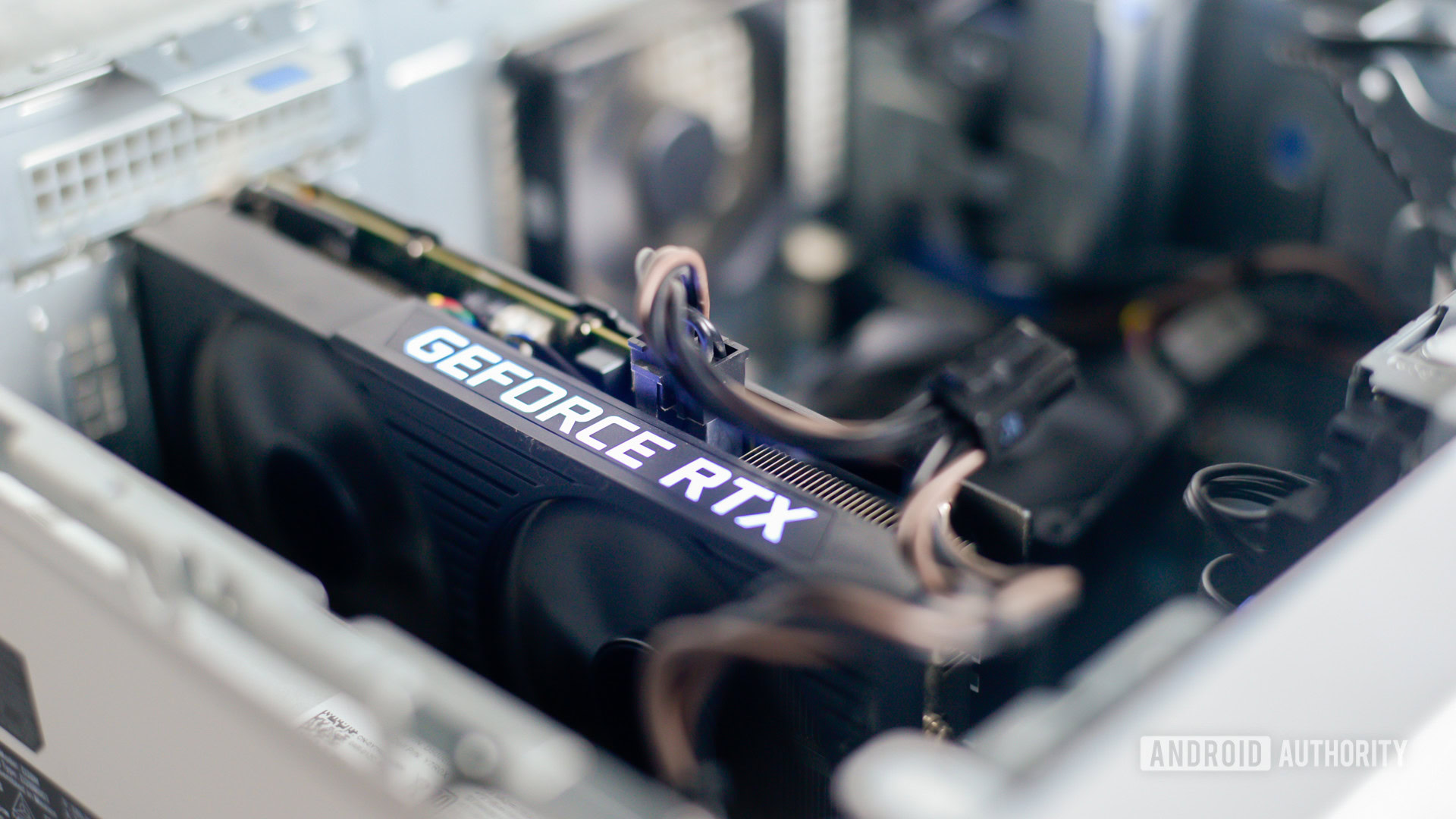

Even today, training an ML model is extremely hardware intensive and pretty much requires dedicated hardware for larger projects. Since training involves running the same small set of mathematical operations over and over, though, manufacturers often design custom chips to achieve better performance and efficiency. These are called application-specific integrated circuits, or ASICs. Large-scale ML projects typically use either ASICs or GPUs for training rather than general-purpose CPUs, as they offer higher performance and lower power consumption for these workloads.
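To see why a handful of operations dominates, consider matrix multiplication, the core computation in neural network layers. It consists of a large grid of identical multiply-accumulate (MAC) steps, which is exactly the kind of repetitive work GPUs and ASICs can run in parallel. The sketch below is a naive implementation that counts those steps; the layer sizes in the last line are hypothetical.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> tuple[list[list[float]], int]:
    """Naive matrix multiply that also counts multiply-accumulate (MAC) operations."""
    rows, inner, cols = len(a), len(b), len(b[0])
    out = [[0.0] * cols for _ in range(rows)]
    macs = 0
    for i in range(rows):
        for j in range(cols):
            for k in range(inner):
                out[i][j] += a[i][k] * b[k][j]  # one multiply-accumulate
                macs += 1
    return out, macs


result, macs = matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(result, macs)  # [[19.0, 22.0], [43.0, 50.0]] 8

# The count is rows * inner * cols, so one hypothetical layer with a batch of
# 64 inputs, 1,024 input features, and 1,024 neurons needs this many MACs per pass:
print(64 * 1024 * 1024)  # 67108864
```

Training repeats that work for every batch in every epoch, and the backward pass used to compute updates adds more work on top, which is why dedicated hardware pays off.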

Machine learning accelerators help improve inference efficiency, making it possible to deploy ML apps to more and more devices.

Things have started to change, however, at least on the inference side of things. On-device machine learning is starting to become more commonplace on devices like smartphones and laptops. This is thanks to the inclusion of dedicated, hardware-level ML accelerators within modern processors and SoCs.

Machine learning accelerators run ML workloads more efficiently than general-purpose processors. This is why the DLSS upscaling technology we spoke about earlier, for example, is only available on newer NVIDIA graphics cards with ML acceleration hardware. Going forward, we’re likely to see feature segmentation and exclusivity depending on each new hardware generation’s machine learning acceleration capabilities. In fact, we’re already seeing that happen in the smartphone industry.

Machine learning in smartphones

ML accelerators have been built into smartphone SoCs for a while now, and they’ve become a key focal point thanks to computational photography and voice recognition.

In 2021, Google announced its first semi-custom SoC, called Tensor, for the Pixel 6. One of Tensor’s key differentiators was its custom TPU, or Tensor Processing Unit. Google claims that its chip delivers significantly faster ML inference than the competition, especially in areas such as natural language processing. This, in turn, enabled new features like real-time language translation and faster speech-to-text functionality. Smartphone processors from MediaTek, Qualcomm, and Samsung have their own takes on dedicated ML hardware too.

On-device machine learning has enabled futuristic features like real-time translation and live captions.

That’s not to say that cloud-based inference isn’t still in use today; quite the opposite, in fact. While on-device machine learning has become increasingly common, it still has limits, especially for complex problems like voice recognition and image classification. Voice assistants like Amazon’s Alexa and Google Assistant are only as good as they are today because they rely on powerful cloud infrastructure for both inference and model retraining.

However, as with most new technologies, new solutions and techniques are constantly on the horizon. In 2017, Google’s HDRnet algorithm revolutionized smartphone imaging, while MobileNet brought down the size of ML models and made on-device inference feasible. More recently, the company highlighted how it uses a privacy-preserving technique called federated learning to train machine learning models with user-generated data.
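Federated learning can be sketched in a few lines. In federated averaging, a technique described in Google’s federated learning research, each device improves a shared model using only its own data, and the server combines the resulting models, weighted by how much data each device used, so the raw data never leaves the device. This toy version trains a single weight, the per-device datasets and parameters are hypothetical, and it is not a description of Google’s production system.

```python
def local_update(w: float, data: list[tuple[float, float]],
                 lr: float = 0.05, epochs: int = 5) -> float:
    """Runs on a user's device: a few passes of gradient descent on local data only."""
    for _ in range(epochs):
        for x, y in data:
            w -= lr * 2 * (w * x - y) * x  # gradient of (w*x - y)**2
    return w


def federated_round(global_w: float, clients: list[list[tuple[float, float]]]) -> float:
    """Runs on the server: averages client weights, weighted by each client's data size."""
    updates = [(local_update(global_w, data), len(data)) for data in clients]
    total = sum(n for _, n in updates)
    # Only the updated weights and sample counts are used here, never the raw data.
    return sum(w * n for w, n in updates) / total


# Hypothetical per-device datasets, all roughly following y = 2x.
clients = [[(1.0, 2.0), (2.0, 4.1)], [(3.0, 5.9)], [(1.5, 3.0), (2.5, 5.0), (0.5, 1.1)]]
w = 0.0
for _ in range(10):
    w = federated_round(w, clients)
print(round(w, 2))
```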

Apple, meanwhile, also integrates hardware ML accelerators into all of its consumer chips these days. The Apple M1 and M2 families of SoCs found in recent MacBooks, for instance, have enough machine learning grunt to perform training tasks on the device itself.

FAQs

Machine learning is the process of teaching a computer how to recognize and find patterns in large amounts of data. It can then use this knowledge to make predictions on future data.

Machine learning is used for facial recognition, natural language chatbots, self-driving cars, and even recommendations on YouTube and Netflix.