Can you tell what's real? Here's how to spot AI images so you don't get scammed

Last year, I moved across the world and had to start from scratch — I needed a rental apartment, a used car, and furniture. I began my search on Facebook Marketplace and was quickly overwhelmed by the number of photos that were digitally altered with generative AI, some almost to the point of misrepresentation. But since I began my search thousands of miles away, I had to do all of my due diligence based on these photos alone.

This is not a problem isolated to Facebook Marketplace either. AI-generated images and videos have quickly proliferated across all online platforms. Over the past month alone, I’ve seen several supposed leaked photos of upcoming movies like Avengers: Doomsday that were, in fact, AI-generated.

Tools like ChatGPT and Gemini have become so accessible, and so capable, that it’s only a matter of time before you run into someone using them for nefarious purposes. But luckily for us, even the best AI image generators today struggle to replicate real-world details. Once you know what to look for, you can easily spot telltale signs of AI’s involvement. Here’s everything you need to know about spotting a fake AI-generated image.

AI struggles with humans and animals the most

At face value, many AI-generated images of landscapes could pass for the real deal. However, the illusion doesn’t hold when you look at images with living beings. These AI-generated images can often look strange and evoke a sense of uncanniness. For example, less sophisticated AI models tend to trip up on human anatomy. In the most egregious cases, you’ll see extra fingers, disjointed body parts, strange proportions, or overlapping features.

The opposite is true as well — even when AI gets the shapes right, it tends to give human skin a smooth and airbrushed look with zero imperfections or flaws. This means you won’t see details like pores or wrinkles even though the rest of the image is pin sharp. The same principle extends to animals as well. I’ve seen AI generate extremely smooth fur on otherwise convincing-looking dogs and cats.

Look beyond the main subject

With the right prompts, one could perhaps achieve a realistic-looking subject as the centerpiece of an AI image. However, that level of quality and detail won’t be consistent for other characters and in the background of the image.

Take the above AI-generated image of Katy Perry, for example, which was posted by the singer herself on Instagram. The main subject looks convincing enough even with some scrutiny. However, the photographers in the background didn’t receive the same amount of attention — several faces overlap to the point of morphing into each other and many cameras have weird proportions. The floor also appears to be extremely smooth and lacks the texture of real carpet.

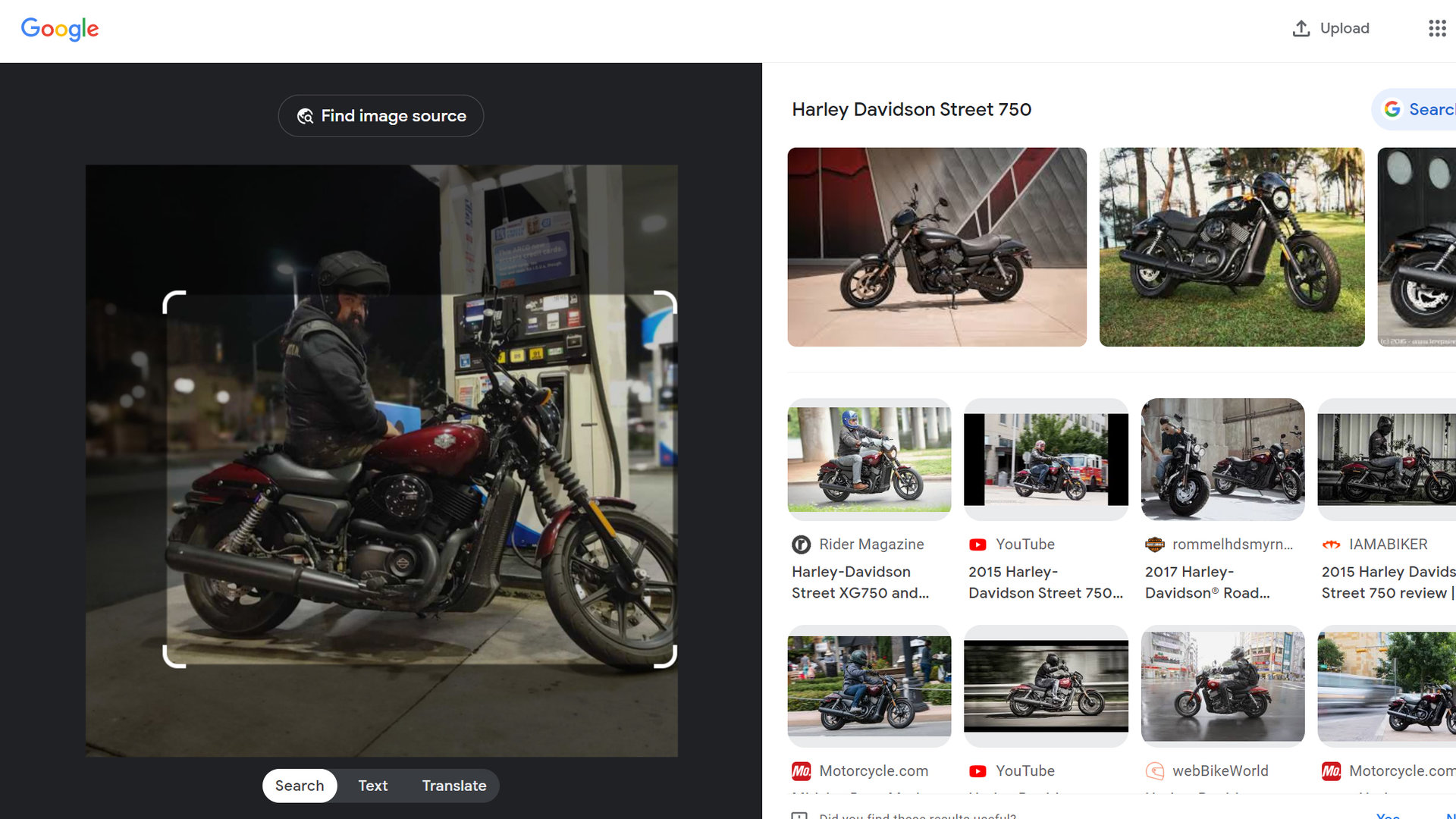

Reverse image search

If you stumble upon a suspicious image, right-click it and select Search with Google Lens. Google and OpenAI have started adding special metadata to their AI-generated images, essentially a watermark that warns future viewers of their origin. This metadata can surface in Google results too, so a reverse image search may quickly flag an AI-generated image with a warning banner.
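If you have the image file itself, you can also do a rough version of this metadata check on your own. The sketch below is only an illustration and not how Google Lens works: it scans a file's raw bytes for two strings that commonly appear in provenance metadata, the IPTC digital source type value `trainedAlgorithmicMedia` and the `c2pa` label used by Content Credentials manifests. The marker list and the function names are assumptions made for this example. A hit is only a hint, and a miss proves nothing, because screenshots and re-uploads usually strip metadata and invisible pixel-level watermarks such as SynthID cannot be found this way.

```python
from pathlib import Path

# Assumed, non-exhaustive list of byte strings that tend to show up in
# provenance metadata embedded in image files.
MARKERS = {
    b"trainedAlgorithmicMedia": "IPTC digital source type for AI-generated media",
    b"c2pa": "C2PA (Content Credentials) manifest label",
}


def find_provenance_markers(data: bytes) -> list[str]:
    """Return a description for each known marker found in the raw bytes."""
    return [desc for marker, desc in MARKERS.items() if marker in data]


def check_file(path: str) -> list[str]:
    """Read an image file from disk and scan it for provenance markers."""
    return find_provenance_markers(Path(path).read_bytes())
```

Calling `check_file("suspicious.jpg")` (a hypothetical file name) returns a list of matched descriptions, or an empty list if none of the markers are present. Treat the result as one clue among many rather than a verdict.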

Bad actors looking to spread misinformation will often intentionally use low-quality images, as they can mask revealing details. A reverse image search can help you find an image’s true origin as well as higher-quality versions. From there, it’s a matter of risk assessment: images originating from reputable sources like news websites are likely safe. But if your reverse image search only reveals results from Twitter, you should tread carefully.

Garbled text

Printed and handwritten text is one of the biggest telltale signs of AI-generated imagery. The first thing to check is whether the letters are clearly defined, since AI tends to generate garbled characters that only look convincing at a passing glance. Just take a closer look at the Boots logo in the above image.

Admittedly, newer AI models can generate text within images with decent accuracy, so the next step is to look for context clues. If an image purports to be from a foreign country but you see only English in the background, it might be worth investigating other aspects of the image to confirm its authenticity. Remember, AI can nail the atmosphere of a place so that it looks convincing, but not every detail.

Bonus: AI-generated videos

While modern generative AI tools quickly generate realistic pictures with very little tuning, that ease of use hasn’t carried over to moving images yet. A video can reveal many smaller imperfections over a longer timeframe, plus you can easily gauge how objects interact with each other as they move across the frame. Here are a couple of examples that clearly showcase where the AI falls short.

Now, not every AI-generated video will have such obvious telltale signs but chances are that you will notice at least one or two anomalies.

I’ve personally noticed that AI struggles to accurately depict friction in its videos. Vehicles often glide along unrealistically even though real-world roads are filled with undulations. It’s also worth looking out for shadows and reflections that don’t quite line up, especially if the camera pans around inconsistently.

AI-generated videos also don’t tend to feature much dialogue because it can be challenging to sync with the character’s lips. That said, newer models like OpenAI’s Sora 2 can add small bursts of dialogue, so if you notice a single character speaking a few sentences at a time with rapid video cuts, you should treat it as suspicious.

Finally, look at the length of the video. Each second of AI-generated video carries an immense computational cost that someone has to pay for. In other words, you’re much more likely to see short videos that range from ten seconds to around a minute. While it’s possible to merge multiple clips, generative AI struggles to maintain context so the results will never be perfect.

Ask the internet for help

Even if you religiously follow all of the tips in this article, chances are that you won’t detect every AI-generated image out there. Some of the best examples can look just realistic enough to fool us into thinking they’re real. And I’ve been in that situation too: after hours of searching for the right apartment, desperation finally caught up to me and I subconsciously ignored some of the above telltale signs. It wasn’t the end of the world since I caught the discrepancies when I showed up in person, but this highlights why you should always seek multiple perspectives.

If you don’t know anyone in your circle who can help identify AI-generated imagery, there’s a thriving community on Reddit you can turn to instead. Posts on the RealOrAI subreddit often garner hundreds of comments from altruistic users who comb through images and videos to verify their authenticity.

Finally, if all else fails, you can fight fire with fire. Google’s own Gemini chatbot can tell you whether an image is synthetic or not. Simply upload the image in question and ask “Is this image generated by AI?” The chatbot will look for any embedded watermarks like SynthID and use its own internal reasoning to discern AI-generated images from real ones. I can imagine that more convincing samples will make their way past the AI, though, so your best bet will be to scrutinize suspicious images with your own eyes whenever possible.
