Google VideoPoet AI video generator: What is it and how does it work?

When Google announced PaLM 2 and Gemini language models in mid-2023, the search giant emphasized that its AI was multimodal. This meant it could generate text, images, audio, and even video. Traditionally, language models like ChatGPT’s GPT-4 have only excelled at reproducing text. Google’s latest VideoPoet model challenges that notion, however, as it can convert text-based prompts into AI-generated videos.

With VideoPoet, Google joins a short list of tech giants that have announced an AI capable of generating videos. And unlike prior attempts, Google says it can also generate scenes with lots of motion rather than just subtle movements. So what’s the magic behind VideoPoet and what can it do? Here’s everything you need to know.

What is Google VideoPoet?

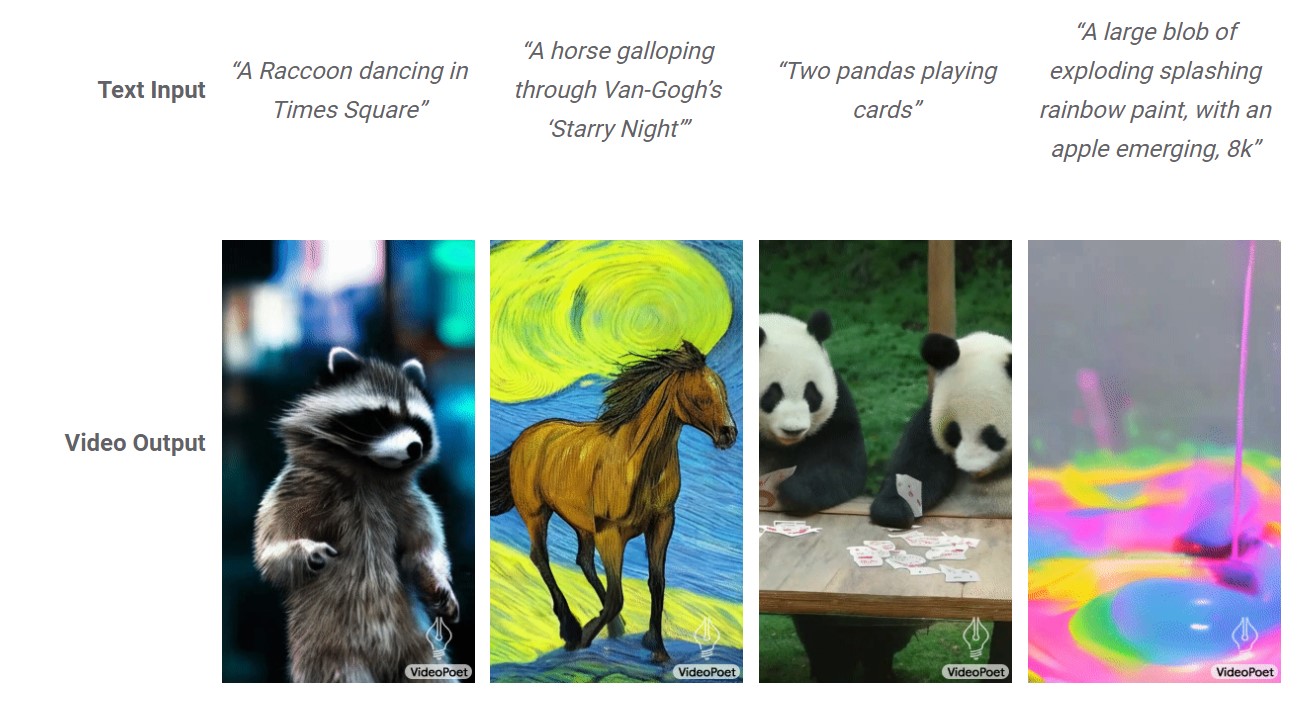

Google VideoPoet is an experimental large language model that can generate videos from a text-based prompt. You can describe a fictional scene, even one as ridiculous as “A robot cat eating spaghetti,” and have a video ready to watch within seconds. If you’ve ever used an AI image generator like Midjourney or DALL-E 3, you already know what to expect from VideoPoet.

Like AI image generators, VideoPoet can also edit existing video content. For example, you could mask out a portion of the video frame and ask the AI to fill in the gap with something from your imagination instead.

Google has invested in startups like Runway that are working on AI video generation, but VideoPoet comes courtesy of the company’s internal efforts. The VideoPoet technical paper lists 31 researchers from Google Research.

How does Google VideoPoet work?

In the aforementioned paper, Google’s researchers explained that VideoPoet differs from conventional text-to-image and text-to-video generators. Unlike Midjourney, for example, VideoPoet does not use a diffusion model to generate images from random noise. That approach works well for individual images but falls flat for videos where the model needs to account for motion and consistency over time.

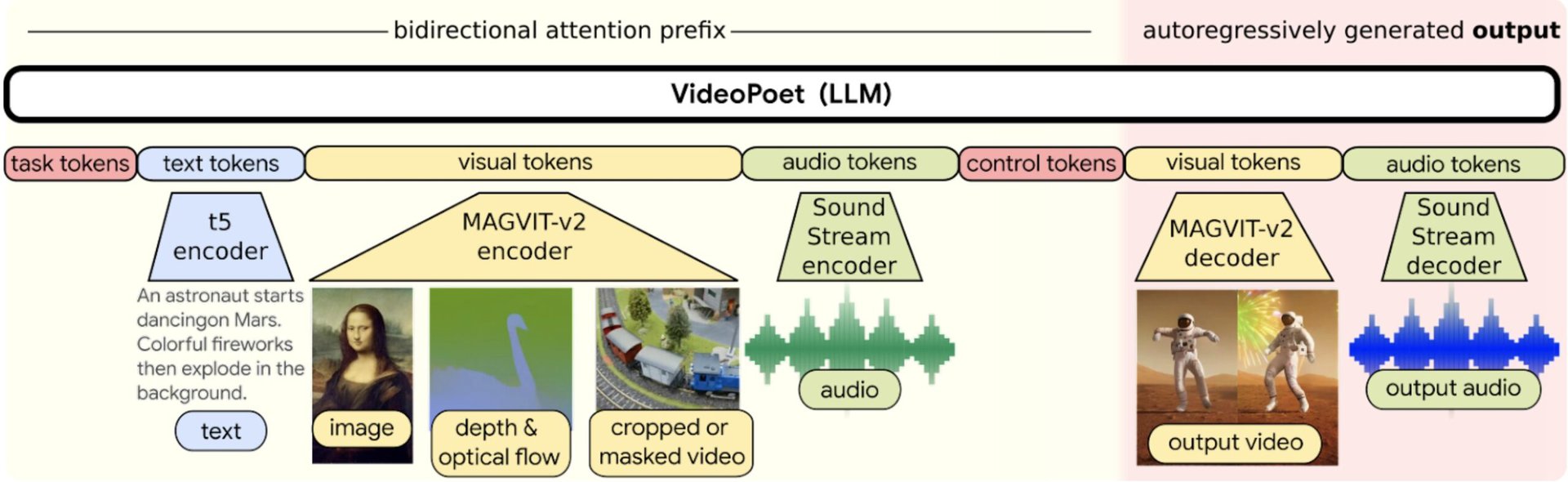

At its core, Google’s VideoPoet is a large language model. This means that it’s based on the same technology that powers ChatGPT and Google Bard, which predicts how words fit together to form sentences. VideoPoet takes that concept a step further, as it can predict chunks of video and audio and not just text.

VideoPoet is a large language model that generates videos instead of text.

VideoPoet required a specialized pre-training process that involved translating images, video frames, and audio clips into a common language of tokens. Put simply, the model learned how to interpret different modalities from the training data. Google says that it used one billion image-text pairs and 270 million public video samples to train VideoPoet. As a result, VideoPoet can predict video tokens just like a traditional LLM would predict text tokens.
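To make the idea of "predicting video tokens" a little more concrete, here is a toy Python sketch. It is not VideoPoet's code: the token ID ranges, the word-based tokenizer, and the `toy_next_video_token` function are all hypothetical stand-ins for a trained model. It only illustrates the general pattern of putting a text prompt and video tokens in one sequence and generating one token at a time.

```python
# Toy illustration only: the ID ranges and the "model" below are hypothetical.
VOCAB_PER_MODALITY = 1000
TEXT_OFFSET = 0                      # text tokens occupy IDs 0..999
VIDEO_OFFSET = VOCAB_PER_MODALITY    # video tokens occupy IDs 1000..1999


def tokenize_text(prompt: str) -> list[int]:
    """Map each word to a deterministic ID in the text range (toy tokenizer)."""
    return [TEXT_OFFSET + sum(map(ord, word)) % VOCAB_PER_MODALITY
            for word in prompt.split()]


def toy_next_video_token(context: list[int]) -> int:
    """Stand-in for a trained model: derive the next video token from the context."""
    return VIDEO_OFFSET + (31 * sum(context) + len(context)) % VOCAB_PER_MODALITY


def generate_video_tokens(prompt: str, num_tokens: int) -> list[int]:
    """Autoregressive loop: each new token joins the context for the next step."""
    context = tokenize_text(prompt)
    generated = []
    for _ in range(num_tokens):
        token = toy_next_video_token(context)
        context.append(token)
        generated.append(token)
    return generated


if __name__ == "__main__":
    print(generate_video_tokens("A robot cat eating spaghetti", 8))
```

In a real system, a separate decoder would turn the generated video tokens back into viewable frames. That step is left out here.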

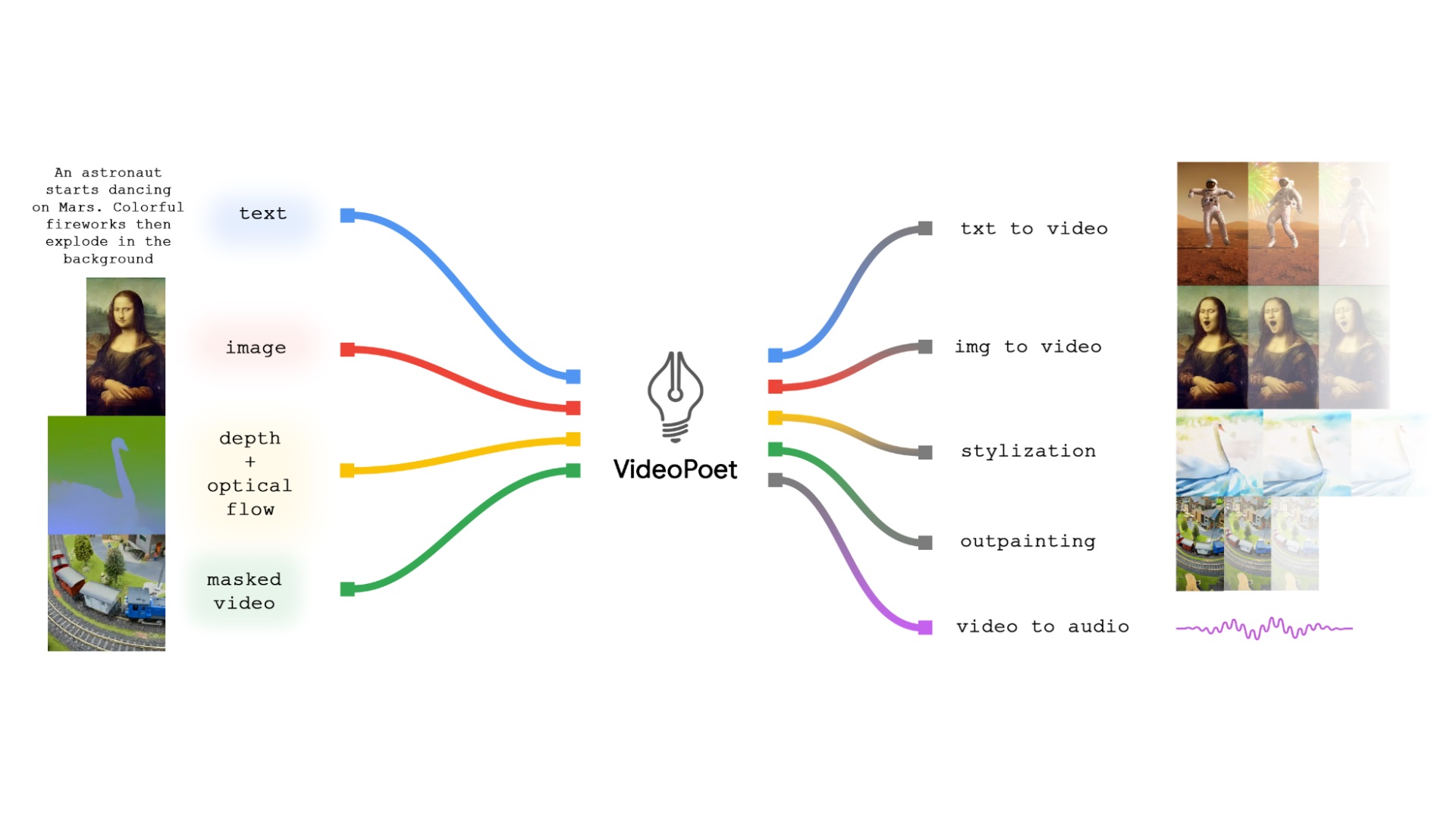

Thanks to its training, VideoPoet has a robust foundation that lets it perform tasks beyond text-to-video generation as well. For example, it can apply styles to existing videos, perform edits like adding background effects, change the look of an existing video with filters, and change the motion of a moving object in an existing video. Google demonstrated the latter with a raccoon dancing in various styles.

VideoPoet vs. rival AI video generators: What’s the difference?

Google’s VideoPoet differs from most of its rivals that rely on diffusion models to turn text into videos. However, it’s not exactly the first: a smaller number of Google Brain researchers presented Phenaki last year. Likewise, Meta’s Make-A-Video project made waves in the AI community for generating diverse videos without training on video-text pairs beforehand. Neither model was publicly released, though.

So given that we don’t have access to any of these video-generating models, we can only rely on the information Google has provided about VideoPoet. With that in mind, the paper’s authors assert that “In many cases, even the current leading models either generate small motion or, when producing larger motions, exhibit noticeable artifacts.” VideoPoet, on the other hand, can handle more motion.

VideoPoet can generate longer videos and handle motion more gracefully than the competition.

Google also says that VideoPoet can generate longer videos than the competition. While each initial generation is limited to a two-second clip, the model can maintain context across eight to ten seconds of video. That may not sound like much, but it’s impressive given how much a scene could change in that time period. Having said that, Google’s example videos only include a few dozen frames in total, which falls short of the 24 or 30 frames per second used for professional video or filmmaking.
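One plausible way to picture how a two-second clip grows into a ten-second one is a sliding window: the model looks at the tail end of what it has generated so far and predicts the next short segment. The Python sketch below only does the bookkeeping for that idea. The one-second context and one-second step sizes are assumptions for illustration, and `extension_windows` is a hypothetical helper, not part of any Google API.

```python
def extension_windows(initial_seconds: int = 2,
                      target_seconds: int = 10,
                      context_seconds: int = 1,
                      step_seconds: int = 1) -> list[tuple[int, int, int]]:
    """List the (context_start, context_end, new_end) windows, in seconds,
    needed to grow a clip from initial_seconds to target_seconds."""
    windows = []
    length = initial_seconds
    while length < target_seconds:
        # Condition on the most recent stretch of video...
        context_start = max(0, length - context_seconds)
        # ...and predict the next short segment, without overshooting the target.
        new_end = min(target_seconds, length + step_seconds)
        windows.append((context_start, length, new_end))
        length = new_end
    return windows


if __name__ == "__main__":
    for start, end, new_end in extension_windows():
        print(f"use seconds {start}-{end} as context, generate up to second {new_end}")
```

Under these assumed step sizes, reaching ten seconds from a two-second start takes eight extension steps, and each step only sees the most recent second, which is why keeping a scene consistent over that span is harder than it sounds.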

Google VideoPoet availability: Is it free?

While Google has published dozens of example videos to demonstrate the strengths of VideoPoet, it stopped short of announcing a public rollout. In other words, we don’t know when we’ll be able to use VideoPoet, if at all.

Google hasn't announced a product or release date for VideoPoet yet.

As for pricing, we may have to take the hint from AI image generators like Midjourney that are only available via a subscription. Indeed, generating images and videos with AI is computationally expensive, so opening up access to everyone may not be feasible, even for Google. We’ll have to wait for a disruptive release like OpenAI’s ChatGPT to force the search giant’s hand. Until then, we can only watch from the sidelines.