
Here's exactly how Google improved Portrait Mode on Pixel 4

The Google Pixel 4 is the first Pixel device with more than one rear camera, marking a long-overdue move for the company at a time when triple cameras are expected.

Google was able to work magic with the single-camera Pixel phones, particularly with Portrait Mode, which traditionally requires a second rear camera. Now, the company has taken to its AI blog to explain how the Pixel 4 Portrait Mode improves on that of earlier Pixels.

It turns out that the Pixel 4 still uses some of the same single-camera Portrait Mode technology as the previous Pixels. The older phones used dual pixel autofocus tech for Portrait Mode, dividing each pixel on the 12MP main camera in half. Each half of the camera pixel sees a slightly different viewpoint (particularly in the background). This allows for a rough estimation of depth by finding corresponding pixels between the two viewpoints.
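To make "finding corresponding pixels" a little more concrete, here is a minimal Python sketch of the general idea. It is not Google's method (the company describes using a neural network); it is a toy that slides a small patch from one view along the other and keeps the shift with the smallest difference. The function name `best_shift` and the brightness values are made up for illustration.

```python
def best_shift(view_a, view_b, start, size, max_shift):
    """Return the shift (in pixels) at which a patch of view_a best matches view_b."""
    patch = view_a[start:start + size]
    best, best_cost = 0, float("inf")
    for shift in range(-max_shift, max_shift + 1):
        lo = start + shift
        if lo < 0 or lo + size > len(view_b):
            continue  # candidate window would fall outside the row
        candidate = view_b[lo:lo + size]
        # Sum of absolute differences: lower means the patches look more alike.
        cost = sum(abs(p - q) for p, q in zip(patch, candidate))
        if cost < best_cost:
            best, best_cost = shift, cost
    return best


# Toy data (hypothetical): one row of brightness values seen from two viewpoints.
# The bright feature sits two pixels further along in the second view.
row_a = [10, 10, 10, 80, 200, 80, 10, 10, 10, 10, 10, 10]
row_b = [10, 10, 10, 10, 10, 80, 200, 80, 10, 10, 10, 10]

shift = best_shift(row_a, row_b, start=3, size=3, max_shift=3)
print(f"Best matching shift: {shift} px")
```

The size of that shift is the cue for depth: parts of the scene at different distances shift by different amounts between the two views.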

This solution has its limitations, though, Google notes on the blog, as the dual pixels are confined to a tiny, single camera. This means the difference between viewpoints is extremely small, and depth estimation works better when the viewpoints are farther apart.

Google discovers telephoto cameras

This is where the Pixel 4’s telephoto lens comes into play, as Google notes that the distance between the primary and telephoto cameras is 13mm. Google illustrates the difference between what dual pixels see versus what dual cameras see in the GIF below. Notice how there’s a slight vertical shift with dual pixels (left), as opposed to the very noticeable shift with dual cameras.
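Why the wider spacing matters can be sketched with the textbook pinhole stereo relation, where the shift between views equals focal length times baseline divided by distance. This is a simplification and not Google's pipeline: it treats both views as identical ideal cameras, whereas the primary and telephoto cameras differ. Only the 13mm figure comes from Google; the focal length in pixels and the 1mm dual pixel baseline below are placeholder assumptions for illustration.

```python
def disparity_px(focal_length_px, baseline_mm, depth_mm):
    """Pinhole stereo model: shift in pixels = focal length * baseline / depth."""
    return focal_length_px * baseline_mm / depth_mm


FOCAL_LENGTH_PX = 3000.0         # hypothetical focal length, expressed in pixels
DUAL_PIXEL_BASELINE_MM = 1.0     # assumed placeholder, not a figure from Google
DUAL_CAMERA_BASELINE_MM = 13.0   # primary-to-telephoto distance cited by Google

for depth_mm in (500.0, 1000.0, 3000.0):
    dual_pixel = disparity_px(FOCAL_LENGTH_PX, DUAL_PIXEL_BASELINE_MM, depth_mm)
    dual_camera = disparity_px(FOCAL_LENGTH_PX, DUAL_CAMERA_BASELINE_MM, depth_mm)
    print(f"{depth_mm / 1000:.1f} m: dual pixel {dual_pixel:.1f} px, "
          f"dual camera {dual_camera:.1f} px")
```

Under these assumptions, the shift grows in direct proportion to the baseline, so the same object moves 13 times further between the two cameras than between the two pixel halves. A bigger shift is easier to measure, which is the advantage Google is describing.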

Dual cameras do introduce one major challenge in this regard, as pixels in one view might not be found in the other view (owing to the wider viewpoints). And that’s why Google isn’t throwing away the legacy approach to Portrait Mode on the Pixel 4.

“For example, the background pixels immediately to the man’s right in the primary camera’s image have no corresponding pixel in the secondary camera’s image. Thus, it is not possible to measure the parallax to estimate the depth for these pixels when using only dual cameras,” reads an excerpt from the AI blog. “However, these pixels can still be seen by the dual pixel views (left), enabling a better estimate of depth in these regions.”

Nevertheless, dual cameras also help deal with the so-called aperture problem, which makes it hard to estimate the depth of vertical lines when the shift between viewpoints is also vertical, as it is with dual pixels. Furthermore, Google also trained its neural network to calculate depth well enough when only one method (dual pixel or dual cameras) is available. The company says that the phone uses both depth mapping methods when the camera is at least 20cm away from the subject (the minimum focus distance for the telephoto camera).
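The aperture problem is easier to see with a toy example. The Python sketch below is an illustration only, not Google's code: it builds a tiny made-up image containing one vertical line, then measures how different the image looks from a copy of itself shifted vertically or horizontally. The helper name `shift_cost` and the image size are assumptions.

```python
def shift_cost(image, dy, dx):
    """Mean absolute difference between an image and itself shifted by (dy, dx)."""
    height, width = len(image), len(image[0])
    total, count = 0, 0
    for y in range(height):
        for x in range(width):
            yy, xx = y + dy, x + dx
            if 0 <= yy < height and 0 <= xx < width:  # compare the overlap only
                total += abs(image[y][x] - image[yy][xx])
                count += 1
    return total / count


# Hypothetical 7x7 image: one bright vertical line on a dark background.
image = [[255 if x == 3 else 0 for x in range(7)] for _ in range(7)]

shifts = (-2, -1, 0, 1, 2)
vertical_costs = [shift_cost(image, dy, 0) for dy in shifts]
horizontal_costs = [shift_cost(image, 0, dx) for dx in shifts]

print("vertical shifts:  ", vertical_costs)
print("horizontal shifts:", horizontal_costs)
```

Every vertical shift matches perfectly, so there is no way to tell how far the line actually moved. Only the sideways shifts produce a clear best match at zero. That ambiguity is why a second pair of views that shifts in a different direction is useful.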

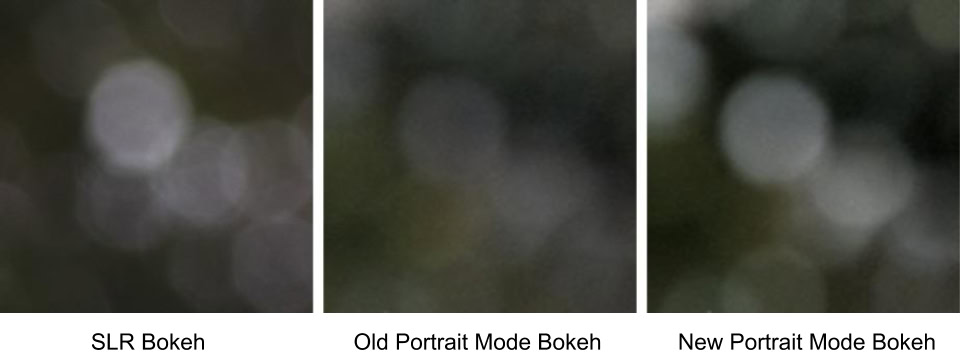

Finally, Google says it’s also worked to make the bokeh stand out more, so you can more clearly see the bokeh disks in the background of a shot. Check out the images above for a better idea of the finished product.

This isn’t the only new tech now on Google Pixel phones, as the company previously detailed how its astrophotography mode works. Either way, we hope Google keeps pushing the computational photography boundaries in 2020.