Affiliate links on Android Authority may earn us a commission.

Google explains how that crazy astrophotography mode works

Published on November 27, 2019

One of the most impressive Google Pixel 4 features has to be the astrophotography mode, allowing users to accurately capture pictures of the stars. Google has previously touched on how it works, but the company has now published a full explanation on its AI blog, and it makes for some interesting reading.

First of all, the company notes that the Pixel 4 astrophotography mode allows for exposures of up to four minutes. The feature is also available on the Pixel 3 and Pixel 3a series, but those phones are limited to exposures of up to one minute.

One of the biggest issues with long exposure times is that they can introduce significant blur, because the stars slowly move across the sky as the Earth rotates, Google explains.

“Viewers will tolerate motion-blurred clouds and tree branches in a photo that is otherwise sharp, but motion-blurred stars that look like short line segments look wrong,” the company explained on the AI blog. “To mitigate this, we split the exposure into frames with exposure times short enough to make the stars look like points of light.”

Google found that 16 seconds is the longest per-frame exposure that keeps stars looking like points of light when shooting the night sky, so the Pixel 4 combines 15 frames of 16 seconds each (15 × 16 = 240 seconds) to deliver a four-minute overall exposure. The end results, as seen in our own samples above, can be rather slick.
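To make the arithmetic concrete, here’s a minimal Python sketch of splitting one long exposure into short frames. The 16-second cap and four-minute total come from Google’s figures; the helper names (`plan_frames`, `star_drift_arcsec`, `merge_frames`), the even split, and the plain per-pixel average are our own simplifying assumptions, not Google’s actual merging pipeline.

```python
import math

SIDEREAL_DAY_S = 86_164.0905  # one full rotation of the Earth relative to the stars


def plan_frames(total_s: float, max_frame_s: float = 16.0) -> tuple[int, float]:
    """Split total_s into the fewest equal frames that are each at most max_frame_s long."""
    n = math.ceil(total_s / max_frame_s)
    return n, total_s / n


def star_drift_arcsec(frame_s: float) -> float:
    """Apparent drift of a star on the celestial equator during one frame, in arcseconds."""
    return frame_s * 360 * 3600 / SIDEREAL_DAY_S


def merge_frames(frames: list[list[list[float]]]) -> list[list[float]]:
    """Average the frames pixel by pixel (a simplification: no alignment, no outlier rejection)."""
    n = len(frames)
    # zip(*frames) pairs up matching rows; zip(*rows) pairs up matching pixels.
    return [[sum(px) / n for px in zip(*rows)] for rows in zip(*frames)]


n, frame_s = plan_frames(4 * 60)
print(n, frame_s)                          # 15 16.0
print(round(star_drift_arcsec(frame_s)))   # 241
```

A 16-second frame lets a star drift roughly four arcminutes; whether that shows up as a visible streak depends on the lens and pixel size, which this sketch doesn’t model. Averaging 15 frames with independent random noise also cuts that noise by roughly √15 compared with a single frame.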

Four more challenges

The company also identified four more hurdles in developing the Pixel 4 astrophotography mode, starting with the issue of warm and hot pixels.

Warm or hot pixels pop up during longer exposures, appearing as tiny bright dots in the image even though there are no such dots in the scene. Google says it identifies these bright dots by “comparing the value of neighboring pixels” within each frame and across all captured frames.

Once it spots a bright dot, Google is able to conceal it by replacing its value with the average of neighboring pixels. Check out an example of warm or hot pixels in the picture on the left, and Google’s solution on the right.

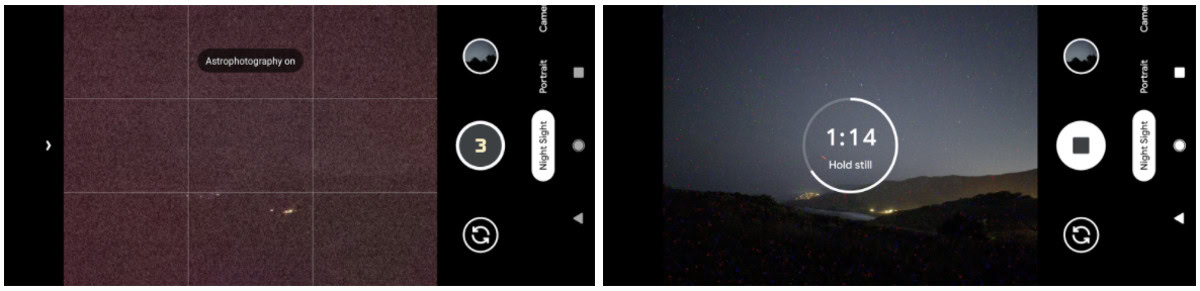
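The detect-then-conceal idea can be sketched in a few lines of Python. This is a hypothetical illustration, not Google’s code: the `threshold` value, the rule that a pixel must stand out in *every* frame, and the helper names are our assumptions. The reasoning behind the every-frame rule is that stars shift slightly between frames as the sky rotates, while a defective pixel stays at the same spot on the sensor.

```python
from statistics import mean

Frame = list[list[float]]


def neighbour_coords(h: int, w: int, y: int, x: int) -> list[tuple[int, int]]:
    """Coordinates of the up-to-8 pixels surrounding (y, x), clipped at the image edges."""
    return [(j, i)
            for j in range(max(0, y - 1), min(h, y + 2))
            for i in range(max(0, x - 1), min(w, x + 2))
            if (j, i) != (y, x)]


def find_hot_pixels(frames: list[Frame], threshold: float) -> set[tuple[int, int]]:
    """Flag pixels that outshine the mean of their neighbours by more than threshold in every frame."""
    h, w = len(frames[0]), len(frames[0][0])
    hot = set()
    for y in range(h):
        for x in range(w):
            coords = neighbour_coords(h, w, y, x)
            if all(f[y][x] - mean([f[j][i] for j, i in coords]) > threshold for f in frames):
                hot.add((y, x))
    return hot


def conceal(frame: Frame, hot: set[tuple[int, int]]) -> Frame:
    """Return a copy with each hot pixel replaced by the mean of its non-hot neighbours."""
    h, w = len(frame), len(frame[0])
    out = [row[:] for row in frame]
    for y, x in hot:
        good = [frame[j][i] for j, i in neighbour_coords(h, w, y, x) if (j, i) not in hot]
        if good:  # leave the pixel alone if every neighbour is also flagged
            out[y][x] = mean(good)
    return out


clean = [[10] * 3 for _ in range(3)]
f1 = [row[:] for row in clean]
f1[1][1] = 200          # hot pixel in frame 1
f2 = [row[:] for row in f1]
f2[0][0] = 150          # same hot pixel, plus a bright spot that only appears in frame 2
hot = find_hot_pixels([f1, f2], threshold=50)
print(hot)                       # {(1, 1)}
print(conceal(f1, hot)[1][1])    # 10
```

The transient bright spot at the corner isn’t flagged because it only stands out in one frame, which is the point of checking across the whole sequence rather than a single image.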

The second challenge Google had to solve is scene composition. A phone camera’s viewfinder usually updates 15 times per second, which limits each preview frame to an exposure of at most 1/15 of a second. At night, that simply isn’t enough light to show the scene.

“At light levels below the rough equivalent of a full moon or so, the viewfinder becomes mostly gray — maybe showing a few bright stars, but none of the landscape — and composing a shot becomes difficult,” Google explains. The company’s solution is to display the latest captured frame in astrophotography mode (check out the image on the right).

“The composition can then be adjusted by moving the phone while the exposure continues. Once the composition is correct, the initial shot can be stopped, and a second shot can be captured where all frames have the desired composition.”
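The gap between a normal preview frame and an astrophotography frame is easy to quantify, and the “show the latest frame” approach is simple to model. In this Python sketch, the 15 fps and 16-second figures come from the article, while `LatestFrameViewfinder` and its `on_frame_captured` callback are hypothetical names used purely for illustration.

```python
from fractions import Fraction

PREVIEW_FPS = 15     # viewfinder refresh rate quoted in the article
ASTRO_FRAME_S = 16   # per-frame exposure used by astrophotography mode


def max_preview_exposure_s(fps: int) -> Fraction:
    """A preview frame can't be exposed for longer than the gap between refreshes."""
    return Fraction(1, fps)


class LatestFrameViewfinder:
    """Hypothetical viewfinder that shows the most recently completed long-exposure frame."""

    def __init__(self) -> None:
        self.displayed = None  # nothing to show until the first frame finishes

    def on_frame_captured(self, frame) -> None:
        # Replace the gray live preview with the newest full-length frame.
        self.displayed = frame


gain = ASTRO_FRAME_S / max_preview_exposure_s(PREVIEW_FPS)
print(gain)  # 240

vf = LatestFrameViewfinder()
for frame in ["frame 1", "frame 2", "frame 3"]:  # stand-ins for 16-second captures
    vf.on_frame_captured(frame)
print(vf.displayed)  # frame 3
```

Each displayed frame gathers about 240 times more light than a single preview frame, which is why the landscape becomes visible. The trade-off is latency: the image only refreshes every 16 seconds, hence Google’s advice to stop and reshoot once the framing is right.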

Autofocus is another issue when it comes to the Pixel 4 astrophotography mode, as the extreme low light often means the camera can’t find anything to focus on. Google’s solution is a so-called “post-shutter autofocus” technique. After you press the shutter button, the phone captures two autofocus frames with exposures of up to one second each and uses them to detect any details worth focusing on (these frames aren’t used in the final image). If post-shutter autofocus still can’t find anything, the focus is set to infinity. Users can also focus manually on a subject instead.
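The decision flow described above (manual focus, otherwise the two post-shutter frames, otherwise infinity) can be sketched as follows. Everything beyond that flow is an assumption: `detail_score` is a simple contrast metric we chose for illustration, `min_detail` is an arbitrary threshold, and `estimate_distance` is a hypothetical callback standing in for the phone’s regular autofocus algorithm.

```python
import math
from typing import Callable, Optional, Sequence

Frame = Sequence[Sequence[float]]


def detail_score(frame: Frame) -> float:
    """Hypothetical detail metric: mean squared difference between horizontal neighbours."""
    diffs = [(row[i + 1] - row[i]) ** 2 for row in frame for i in range(len(row) - 1)]
    return sum(diffs) / len(diffs) if diffs else 0.0


def choose_focus(af_frames: Sequence[Frame],
                 estimate_distance: Callable[[Frame], float],
                 manual_distance: Optional[float] = None,
                 min_detail: float = 1.0) -> float:
    """Pick a focus distance (metres) following the article's post-shutter flow."""
    if manual_distance is not None:   # the user focused manually, so respect that
        return manual_distance
    usable = [f for f in af_frames[:2] if detail_score(f) >= min_detail]  # the two AF frames
    if not usable:                    # nothing worth focusing on
        return math.inf               # fall back to focusing at infinity
    return estimate_distance(max(usable, key=detail_score))


def regular_autofocus(frame: Frame) -> float:
    return 3.0  # stand-in result for illustration only


flat = [[5, 5, 5], [5, 5, 5]]
textured = [[0, 9, 0], [9, 0, 9]]
print(choose_focus([flat, flat], regular_autofocus))                       # inf
print(choose_focus([flat, textured], regular_autofocus))                   # 3.0
print(choose_focus([flat, flat], regular_autofocus, manual_distance=1.5))  # 1.5
```

Falling back to infinity is a sensible default for astrophotography, since the stars themselves are effectively infinitely far away.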

The final hurdle for Google is getting the light levels in the sky just right: “At night we expect the sky to be dark. If a picture taken at night shows a bright sky, then we see it as a daytime scene, perhaps with slightly unusual lighting.”

The search giant’s solution is to use machine learning to darken the sky in low-light situations. An on-device neural network, trained on over 10,000 images, identifies which parts of the picture are night sky so that those areas can be darkened. The ability to detect the sky is also used to reduce noise in the sky and to increase contrast for specific features (e.g. clouds or the Milky Way). Check out the initial result on the left, and the darkened result on the right.
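Google doesn’t spell out exactly how the darkening is applied, so the following Python sketch is only one plausible approach. It assumes the network outputs a per-pixel sky probability between 0 and 1; the linear scaling and the `strength` parameter are our own assumptions.

```python
Image = list[list[float]]


def darken_sky(image: Image, sky_prob: Image, strength: float = 0.5) -> Image:
    """Scale each pixel by (1 - strength * p), where p is that pixel's sky probability.

    Pixels the (hypothetical) segmentation network is sure are sky (p = 1) are
    dimmed by `strength`; foreground pixels (p = 0) are left untouched, and
    in-between probabilities give a gradual transition near the horizon.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return [[v * (1.0 - strength * p) for v, p in zip(row, prow)]
            for row, prow in zip(image, sky_prob)]


photo = [[120.0, 120.0], [40.0, 40.0]]   # bright sky above a dark foreground
mask = [[1.0, 0.5], [0.0, 0.0]]          # stand-in for the network's output
print(darken_sky(photo, mask))           # [[60.0, 90.0], [40.0, 40.0]]
```

Using a soft probability rather than a hard sky/not-sky cut avoids a visible seam where the sky meets trees or buildings; the same mask could also restrict noise reduction to sky regions.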

In our own testing, this all comes together to deliver some rather fantastic results.

“For Pixel 4 we have been using the brightest part of the Milky Way, near the constellation Sagittarius, as a benchmark for the quality of images of a moonless sky. By that standard Night Sight is doing very well,” the company concluded. “Milky Way photos exhibit some residual noise, they are pleasing to look at, showing more stars and more detail than a person can see looking at the real night sky.”

But Google notes that it’s so far unable to adequately capture low-light scenes with an extremely wide brightness range (such as a moonlit landscape and the moon itself). Back at the Pixel 4 launch though, the firm hinted at a solution for this issue, so this seems like the next step for Google.

Have you tried out astrophotography mode on a Pixel 4 or older Pixel? Give us your thoughts below.