Google is finally tweaking camera algorithms for people of color

- While Google’s camera algorithms can do some incredible stuff, they aren’t so incredible for people of color.

- The algorithms simply aren’t optimized for darker skin tones and certain hair types.

- At Google I/O 2021, Google committed to addressing this inequity and discussed its progress so far.

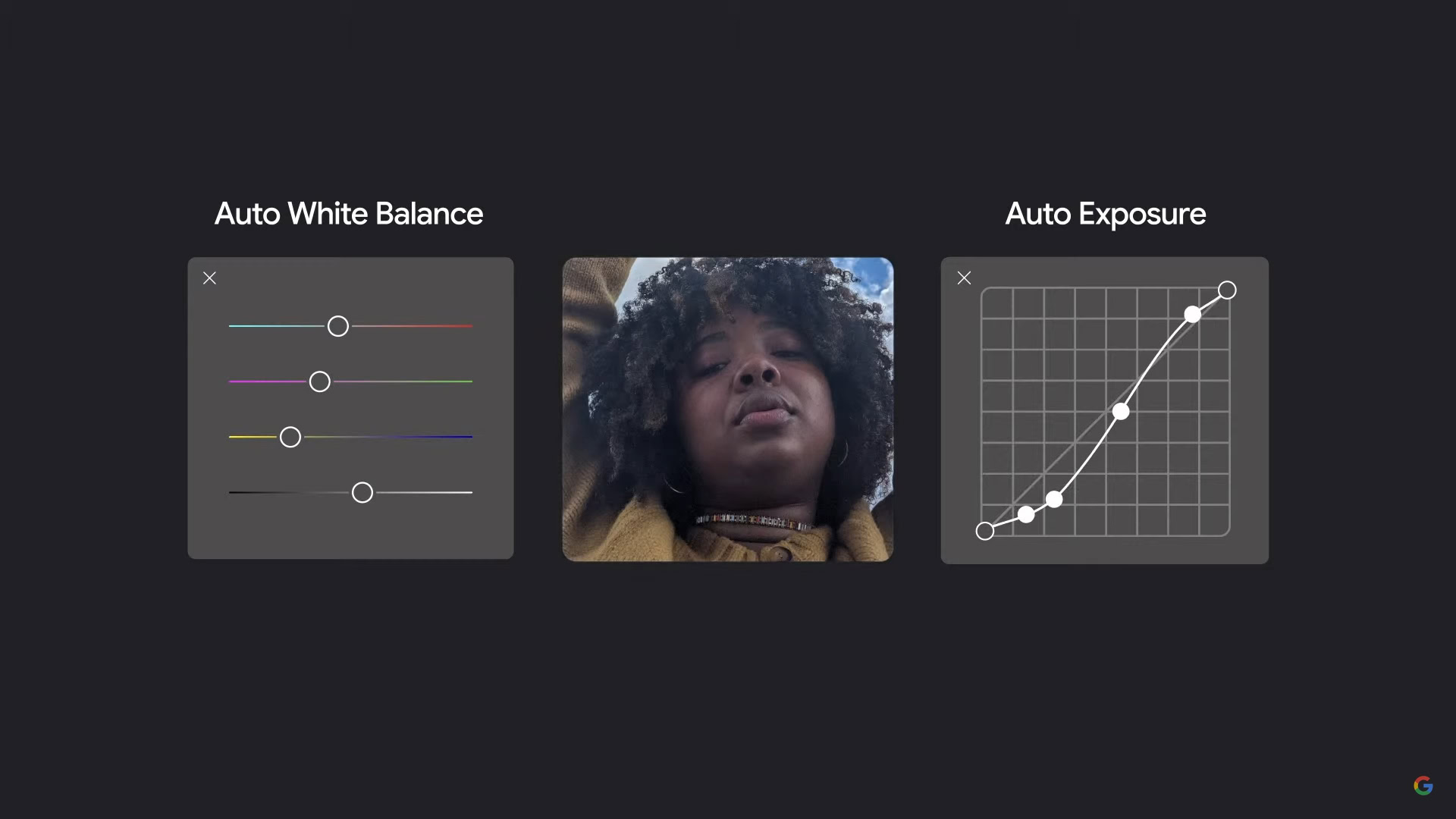

Smartphone cameras can do some incredible things. Using software smarts, they can blur out a background while keeping the focus on a subject. They can alter the coloring, fix exposure, and even add motion to static images.

However, one thing camera algorithms haven’t been so good at is working well for people of color (PoC). Due to inherent racial biases, these algorithms are trained on image datasets made up overwhelmingly of white people. This can make PoC feel excluded even from their own photos.

Thankfully, Google is finally admitting to this fact. What’s more, it has committed to tweaking its camera algorithms to be more inclusive of PoC. During the keynote event of Google I/O 2021, the company showed its plans and current progress in addressing this disparity.

Google camera algorithms: A move to more equity

When you use the artificial bokeh effect on your phone (aka portrait mode), you might notice that your hair gets blurry at the edges. This is because the camera algorithm has a hard time distinguishing the very tiny strands of hair from the background. To fix this, Google and other companies are constantly tweaking their algorithms using machine learning.

However, PoC often get much worse results here because their hair differs from the hair in the images used to train that machine learning. A similar problem occurs when darker skin tones appear in an image.

To combat this, Google is working with over a dozen photographers and other image experts from around the world who represent diverse skin tones, hair types, and cultural backgrounds. These experts are capturing thousands of images that will then go to Google to help diversify the pool of images that feeds its machine learning algorithms.

It might take a while for Google camera algorithms to work the same for all different types of people, but at least Google is admitting there’s a problem and trying to fix it.