Affiliate links on Android Authority may earn us a commission.

3 things you should know about the AV1 codec

The AOMedia Video 1 codec, or AV1, has been making its way into consumers’ hands. In early 2020, Netflix made headlines when it said it had started streaming AV1 to some Android viewers. Later, Google brought the AV1 codec to its Duo video chat app, and MediaTek enabled AV1 YouTube video streams on its Dimensity 1000 5G SoC.

What is all the fuss about? What is the AV1 codec, and why is it important? Here is a quick look at AV1 and what it means for video streaming over the next five years.

AV1 is royalty-free and open source

Inventing technology, designing components, and doing research is expensive. Engineers, materials, and buildings cost money. For a “traditional” company, the return on investment comes from sales. If you design a new gadget and it sells in the millions, then you get back the money that was initially spent. That is true of physical products, like smartphones, but it is also true of software development.

A game company spends money developing a game, paying the engineers and the artists along the way, and then it sells the game. The game might not even exist physically on a DVD or ROM cartridge; it might be a digital download. Either way, the sales pay for its development.

What happens if you design a new algorithm or technique for doing something, say for compressing video? You can’t sell an algorithm to consumers as a digital download. Instead, it will be bought by product makers who want to include the algorithm in smartphones, tablets, laptops, TVs, and so on.

If an algorithm’s inventor is able to sell the technique to third parties, then one business option is to charge a small fee, a royalty, for every device that ships with the algorithm. This all seems fair and equitable. However, the system is open to abuse. From unfriendly renegotiations about the fees, to patent trolls, to million-dollar lawsuits, the history of royalty-based businesses is long and full of unexpected wins and losses, for both the “bad guys” and the “good guys.”

Once a technology becomes pervasive, an odd thing happens: products can’t be built without it, but they can’t be built with it either unless the fees are negotiated. Before a product even gets past the initial concept, it is already burdened with the prospect of royalty fees. It is like charging a product maker for building a gadget that uses electricity: not for the amount of electricity used, but just for the fact that it uses electricity.

The reaction against this is to look for, and develop, tech which is free from royalty payments and free from the shackles of patents. This is the aim of the AV1 codec.

Many of the current leading and ubiquitous video streaming technologies are not royalty-free. MPEG-2 Video (used in DVDs, satellite TV, digital broadcast TV, and more), H.264/AVC (used in Blu-ray Discs and many internet streaming services), and H.265/HEVC (the recommended codec for 8K TV) are all laden with royalty claims and patents. Sometimes the fees are waived; sometimes they are not. For example, Panasonic has over 1,000 patents related to H.264, and Samsung has over 4,000 patents related to H.265!

The AV1 codec is designed to be royalty-free. It has lots of big names supporting it, which means that a legal challenge against the combined patent pools and financial muscle of Google, Adobe, Microsoft, Facebook, Netflix, Amazon, and Cisco would be futile. However, that hasn’t stopped some patent trolls, like Sisvel, from rattling their chains.

AV1 codec is 30% better than H.265

Besides being royalty-free and open-source friendly, AV1 needs to offer real advantages over established technologies. AOMedia (the guardians of the AV1 codec) claims it offers 30% better compression than H.265. That means it uses less data while offering the same quality for 4K UHD video.

There are two important metrics for any video codec: the bitrate (i.e. the size) and the quality. The higher the bitrate, the larger the encoded files, and the larger the encoded files, the more data needs to be streamed. As the bitrate changes, so does the quality. In simple terms, with less data the fidelity to the original source material decreases; with more data there is a better chance of representing the original accurately.

Video codecs like AV1 (and H.264/H.265) use lossy compression. That means that the encoded version is not the same (pixel by pixel) as the original. The trick is to encode the video in such a way as to make the losses imperceptible to the human eye. There are lots of techniques to do this, and it is a complex subject. Three of the principal techniques are incremental frame changes, quantization, and motion vectors.

The first is a simple win in terms of compression. Rather than send a full frame of video 30 times a second (for a 30fps video), why not just send the changes from one frame to the next? If the scene is two people throwing a ball around, then the changes will be the ball and the people. The rest of the scene will remain relatively static, so the video encoder need only worry about the difference, a much smaller data set. Whenever the scene changes, or at forced regular intervals, a full frame (a keyframe) needs to be included, and the differences are then tracked from that last full frame.
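
The keyframe-plus-differences idea can be sketched in a few lines of Python. This is a toy under stated assumptions, not how any real codec stores data: a frame is a flat list of pixel values, a “delta” lists only the pixels that changed, and the keyframe interval (30 frames) and the scene-change threshold (more than half the pixels changed) are arbitrary choices for illustration. Real encoders work on blocks and compress the differences further.

```python
from typing import List, Tuple, Union

Frame = List[int]                  # hypothetical: a frame as a flat list of pixel values
Delta = List[Tuple[int, int]]      # (pixel index, new value) pairs
Packet = Tuple[str, Union[Frame, Delta]]


def encode(frames: List[Frame], keyframe_interval: int = 30,
           scene_change_ratio: float = 0.5) -> List[Packet]:
    """Send a full keyframe at regular intervals or on a scene change, otherwise only the changed pixels."""
    packets: List[Packet] = []
    previous: Frame = []
    for i, frame in enumerate(frames):
        if i % keyframe_interval == 0:
            packets.append(("key", list(frame)))
        else:
            delta = [(j, new) for j, (old, new) in enumerate(zip(previous, frame)) if old != new]
            if len(delta) > scene_change_ratio * len(frame):
                # So much has changed that a full frame is cheaper: treat it as a scene change.
                packets.append(("key", list(frame)))
            else:
                packets.append(("delta", delta))
        previous = frame
    return packets


def decode(packets: List[Packet]) -> List[Frame]:
    """Rebuild every frame from the last keyframe plus the accumulated differences."""
    frames: List[Frame] = []
    current: Frame = []
    for kind, payload in packets:
        if kind == "key":
            current = list(payload)
        else:
            current = list(current)
            for index, value in payload:
                current[index] = value
        frames.append(current)
    return frames


if __name__ == "__main__":
    # A 16-pixel "scene" where a single bright "ball" pixel moves one step per frame.
    frames = []
    for t in range(4):
        frame = [0] * 16
        frame[t] = 255
        frames.append(frame)
    packets = encode(frames)
    assert decode(packets) == frames
    for kind, payload in packets:
        print(kind, len(payload), "values")
```

After the first keyframe, each frame in this example needs only two (index, value) pairs instead of all 16 pixels.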

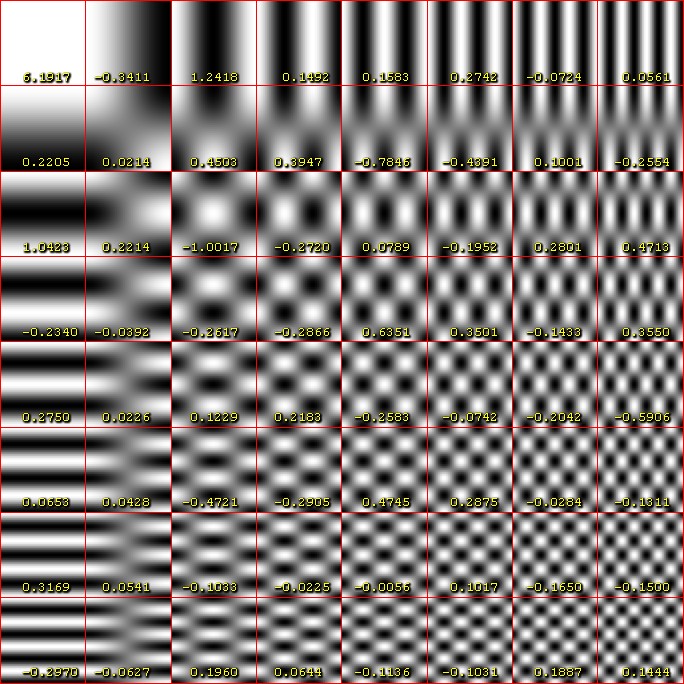

When you take a photo on your smartphone, chances are that it is saved in JPEG format (a .jpg file). JPEG is a lossy image compression format that relies on a technique called quantization. The basic idea is this: a given segment of a photo (an 8×8 pixel block) can be represented by a fixed set of shaded patterns layered on top of each other (this is done separately for each color channel). These patterns are the building blocks of the Discrete Cosine Transform (DCT). Using 64 of these patterns, an 8×8 block can be represented by deciding how much of each pattern is needed to approximate the original block. It turns out that maybe only 20% of the patterns are needed to get a convincing imitation of the original block. This means that rather than storing 64 numbers (one per pixel), the lossy-compressed image may need only about 12. Going from 64 numbers down to 12, per color channel, is quite a saving.
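
To make the idea concrete, here is a small, unoptimized Python sketch. It computes the 64 DCT weights for an 8×8 block, keeps only the 12 largest (about 20%), and rebuilds the block from them. This is a simplification: real JPEG does not literally keep the k largest weights; it divides them by a quantization table and rounds, which usually zeroes most of the high-frequency ones. The sample block is made up.

```python
import math
from typing import List

N = 8                      # JPEG works on 8x8 blocks
Block = List[List[float]]


def scale(k: int) -> float:
    """Normalization factor that makes the DCT orthonormal."""
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


def basis(u: int, x: int) -> float:
    """1D cosine pattern number u, evaluated at pixel position x."""
    return scale(u) * math.cos((2 * x + 1) * u * math.pi / (2 * N))


def dct2(block: Block) -> Block:
    """2D DCT-II: how much of each of the 64 shaded patterns the block contains."""
    return [[sum(block[x][y] * basis(u, x) * basis(v, y)
                 for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coeffs: Block) -> Block:
    """Inverse DCT: rebuild pixels by layering the weighted patterns on top of each other."""
    return [[sum(coeffs[u][v] * basis(u, x) * basis(v, y)
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


def keep_largest(coeffs: Block, k: int) -> Block:
    """Keep only the k coefficients with the largest magnitude and zero the rest."""
    ranked = sorted(((abs(coeffs[u][v]), u, v) for u in range(N) for v in range(N)), reverse=True)
    keep = {(u, v) for _, u, v in ranked[:k]}
    return [[coeffs[u][v] if (u, v) in keep else 0.0 for v in range(N)] for u in range(N)]


def squared_error(a: Block, b: Block) -> float:
    return sum((a[x][y] - b[x][y]) ** 2 for x in range(N) for y in range(N))


if __name__ == "__main__":
    # A hypothetical smooth 8x8 block with a little texture added.
    block = [[128 + 40 * math.cos(x / 3) + 20 * math.sin(y / 2) + (x * 7 + y * 13) % 5
              for y in range(N)] for x in range(N)]
    coeffs = dct2(block)
    for k in (4, 12, 64):
        approx = idct2(keep_largest(coeffs, k))
        print(f"{k:2d} of 64 coefficients -> squared error {squared_error(block, approx):.2f}")
```

Because this DCT is orthonormal, the squared error of the rebuilt block equals the sum of the squares of the weights that were thrown away, which is why discarding the smallest ones costs the least.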

The number of shaded patterns, the transforms needed to generate them, the weighting given to each pattern, and the amount of rounding that is done are all variable, and they alter the quality and the size of the image. JPEG has one set of rules, H.264 another, AV1 another, and so on, but the basic idea is the same. The result is that each frame in the video is, in fact, a lossy representation of the original frame: compressed and smaller than the original.
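
The “amount of rounding” is typically controlled by a step size: each weight is divided by the step and rounded to a whole number, and the decoder multiplies it back up. The Python sketch below uses a single step size and made-up coefficient values, both hypothetical; JPEG, for instance, uses a table with a different step for each of the 64 patterns. A bigger step leaves fewer non-zero numbers to store but produces a larger error, which is the quality-versus-size dial described above.

```python
from typing import List, Tuple


def quantize(coeffs: List[float], step: float) -> List[int]:
    """Divide each coefficient by the step size and round: this rounding is where data is lost."""
    return [round(c / step) for c in coeffs]


def dequantize(levels: List[int], step: float) -> List[float]:
    """Decoder side: scale the stored integers back up."""
    return [level * step for level in levels]


def tradeoff(coeffs: List[float], step: float) -> Tuple[int, float]:
    """Return (non-zero values left to store, squared reconstruction error) for a given step size."""
    levels = quantize(coeffs, step)
    nonzero = sum(1 for level in levels if level != 0)
    error = sum((c - r) ** 2 for c, r in zip(coeffs, dequantize(levels, step)))
    return nonzero, error


if __name__ == "__main__":
    # Hypothetical transform coefficients, largest (low-frequency) first.
    coeffs = [812.0, -45.3, 20.1, -9.7, 6.2, -3.1, 1.8, -0.9, 0.5, -0.2]
    for step in (1, 10, 50):
        nonzero, error = tradeoff(coeffs, step)
        print(f"step {step:>2}: {nonzero} non-zero values, squared error {error:.1f}")
```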

Third, there is motion tracking. Going back to our scene of two people throwing a ball around, the ball travels across the scene. For some of its travels it will look exactly the same, so rather than send the same data about the ball again and again, it is better to just note that the block containing the ball has now moved a bit. Motion vectors can be complex, and finding those vectors and plotting the tracks can be time-consuming during encoding, but not during decoding.
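
Finding a motion vector can be sketched as block matching: for a block in the current frame, try every nearby offset in the previous frame and keep the one with the smallest sum of absolute differences (SAD). This exhaustive search is one textbook approach, used here as an assumption; real encoders use much smarter search strategies. The frame size, the 4×4 block, the ±3 pixel search window, and the convention that the vector points back to where the block came from are all illustrative choices. The sketch also shows the asymmetry mentioned above: the encoder evaluates every candidate offset, while the decoder simply copies one block.

```python
from typing import List, Tuple

Frame = List[List[int]]   # a grayscale frame as rows of pixel values


def sad(ref: Frame, cur: Frame, by: int, bx: int, dy: int, dx: int, size: int) -> int:
    """Sum of absolute differences between the block at (by, bx) in cur and the block shifted by (dy, dx) in ref."""
    return sum(abs(cur[by + i][bx + j] - ref[by + dy + i][bx + dx + j])
               for i in range(size) for j in range(size))


def find_motion_vector(ref: Frame, cur: Frame, by: int, bx: int,
                       size: int = 4, search: int = 3) -> Tuple[int, int]:
    """Encoder side: try every offset within +/-search pixels and keep the best match (slow)."""
    height, width = len(ref), len(ref[0])
    best, best_cost = (0, 0), sad(ref, cur, by, bx, 0, 0, size)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = by + dy, bx + dx
            if 0 <= y <= height - size and 0 <= x <= width - size:
                cost = sad(ref, cur, by, bx, dy, dx, size)
                if cost < best_cost:
                    best, best_cost = (dy, dx), cost
    return best


def apply_motion_vector(ref: Frame, by: int, bx: int, mv: Tuple[int, int], size: int = 4) -> Frame:
    """Decoder side: just copy the block from where the vector points (fast)."""
    dy, dx = mv
    return [row[bx + dx: bx + dx + size] for row in ref[by + dy: by + dy + size]]


def frame_with_ball(top: int, left: int, dim: int = 12) -> Frame:
    """Hypothetical test frame: a textured 4x4 'ball' on a black background."""
    frame = [[0] * dim for _ in range(dim)]
    for i in range(4):
        for j in range(4):
            frame[top + i][left + j] = 100 + 10 * i + j
    return frame


if __name__ == "__main__":
    previous = frame_with_ball(2, 2)
    current = frame_with_ball(3, 5)          # the ball moved 1 down and 3 right
    mv = find_motion_vector(previous, current, 3, 5)
    print("motion vector:", mv)              # (-1, -3): points back to where the block came from
    assert apply_motion_vector(previous, 3, 5, mv) == [row[5:9] for row in current[3:7]]
```

With a ±3 window the encoder checks up to 49 positions of 16 pixels each for a single block, while the decoder does one copy.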

It is all about the bits

The supreme battle for a video encoder is to keep the bitrate low and the quality high. As video encoding has progressed over the years, the aim of each successive generation has been to decrease the bitrate while maintaining the same level of quality. At the same time, the display resolutions available to consumers have increased. DVD (NTSC) was 480p, Blu-ray was 1080p, and today we have 4K video streaming services and are edging slowly toward 8K. A higher screen resolution means more pixels to represent, which means more data is needed for each frame.
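
To put numbers on “more pixels, more data,” the Python sketch below counts the pixels per frame for the standard frame size of each format and estimates the uncompressed data rate. The 30 frames per second and the 8-bit 4:2:0 color sampling (12 bits per pixel on average) are assumptions chosen for illustration.

```python
RESOLUTIONS = {
    "DVD (NTSC) 480p": (720, 480),
    "Blu-ray 1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
    "8K UHD": (7680, 4320),
}


def raw_bitrate_mbps(width: int, height: int, fps: float = 30, bits_per_pixel: float = 12) -> float:
    """Uncompressed data rate in megabits per second.

    Assumes 8-bit 4:2:0 video: 8 bits of brightness per pixel plus two color planes
    at a quarter of the resolution, i.e. 12 bits per pixel on average.
    """
    return width * height * bits_per_pixel * fps / 1e6


if __name__ == "__main__":
    dvd_pixels = 720 * 480
    for name, (w, h) in RESOLUTIONS.items():
        print(f"{name:<16} {w * h:>11,} pixels/frame "
              f"({w * h / dvd_pixels:4.1f}x DVD), ~{raw_bitrate_mbps(w, h):,.0f} Mbps uncompressed")
```

Under these assumptions, a 4K frame has exactly 24 times as many pixels as a 720×480 DVD frame, and uncompressed 4K video would need roughly 3,000Mbps, which is why heavy compression is unavoidable.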

The “bitrate” is the number of bits (1s and 0s) used per second by the video codec. As a rule of thumb, the higher the bitrate, the better the quality. What bitrate you “need” for good quality depends on the codec, but if the bitrate is too low, the picture quality can disintegrate quickly.

When the files are stored (on a DVD, a Blu-ray disc, or a hard drive), the bitrate determines the file size. To keep things simple, we will ignore any audio tracks and any other information embedded in a video stream. If a DVD holds roughly 4.7GB and you want to store a two-hour (120 minutes or 7,200 seconds) movie, then the maximum bitrate possible is about 5,200 kilobits per second, or 5.2Mbps.
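
The DVD calculation, spelled out as a small Python sketch. It uses decimal units (1GB = 10^9 bytes, 1 kilobit = 1,000 bits) and, as in the text, ignores audio and other embedded data.

```python
def max_bitrate_kbps(capacity_gb: float, duration_s: float) -> float:
    """Highest average bitrate that fits on the disc (decimal units: 1 GB = 10**9 bytes, 1 kbit = 1,000 bits)."""
    total_bits = capacity_gb * 1e9 * 8        # bytes -> bits
    return total_bits / duration_s / 1000     # bits per second -> kilobits per second


def file_size_gb(bitrate_kbps: float, duration_s: float) -> float:
    """The reverse: how big a video of a given bitrate and length will be."""
    return bitrate_kbps * 1000 * duration_s / 8 / 1e9


if __name__ == "__main__":
    two_hours = 2 * 60 * 60                   # 7,200 seconds
    print(f"{max_bitrate_kbps(4.7, two_hours):,.0f} kbps")   # 5,222 kbps, i.e. about 5.2 Mbps
```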

Megabits vs megabytes: bitrates are quoted in megabits per second (Mb/s), not megabytes per second (MB/s). There are eight bits in a byte.

In comparison, a 4K video clip straight out of my Android smartphone (in H.264) used 42Mbps, around 8x higher, but it was recorded at a resolution with around 25x as many pixels per frame. Just looking at those very rough numbers, we can see that H.264 offers roughly 3x better compression than MPEG-2 Video. The same file encoded in H.265 or AV1 would use roughly 20Mbps, meaning that both H.265 and the AV1 codec offer about twice as much compression as H.264.
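
One crude way to compare these rough numbers is bits per pixel: the bitrate divided by the number of pixels delivered each second. The Python sketch below assumes that both the DVD and the smartphone clip run at about 30 frames per second (the clip's frame rate is not stated), and it ignores differences in content and quality, so treat the ratios as ballpark figures only.

```python
def bits_per_pixel(bitrate_mbps: float, width: int, height: int, fps: float) -> float:
    """Average number of bits spent on each pixel of each frame."""
    return bitrate_mbps * 1e6 / (width * height * fps)


if __name__ == "__main__":
    # Assumption: both sources run at about 30 frames per second.
    mpeg2_dvd = bits_per_pixel(5.2, 720, 480, 30)       # DVD at its maximum average bitrate
    h264_phone = bits_per_pixel(42, 3840, 2160, 30)     # 4K smartphone clip in H.264
    h265_or_av1 = bits_per_pixel(20, 3840, 2160, 30)    # the same clip re-encoded, roughly
    print(f"MPEG-2 vs H.264: {mpeg2_dvd / h264_phone:.1f}x more bits per pixel")
    print(f"H.264 vs H.265/AV1: {h264_phone / h265_or_av1:.1f}x more bits per pixel")
```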

These are very rough estimates of the compression ratios available, because the numbers I have given imply a constant bitrate. However, some codecs allow videos to be encoded at a variable bitrate governed by a quality setting. This means that the bitrate changes moment by moment, with a predefined maximum bitrate used when the scenes are complex and lower bitrates when things are less cluttered. It is this quality setting that then determines the overall bitrate.
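
The difference between constant and quality-driven variable bitrate can be illustrated with a toy model in Python. The assumption here is deliberately simple: the bits needed each second scale with a made-up “complexity” score times a quality factor, capped at a maximum. Real quality-driven modes, such as the constant rate factor (CRF) setting in x264, are far more sophisticated.

```python
from typing import List


def constant_bitrate(complexity: List[float], bitrate_mbps: float) -> List[float]:
    """CBR: every second gets the same budget, however busy the scene is."""
    return [bitrate_mbps for _ in complexity]


def quality_based_vbr(complexity: List[float], quality: float, max_mbps: float) -> List[float]:
    """Toy VBR: the bits for each second scale with how complex the scene is, times a
    quality factor, but never exceed the predefined maximum."""
    return [min(max_mbps, c * quality) for c in complexity]


if __name__ == "__main__":
    # Hypothetical per-second scene complexity: a calm opening, a busy action burst, calm again.
    complexity = [2.0, 2.5, 8.0, 12.0, 3.0, 1.5]
    for quality in (1.5, 2.0):
        rates = quality_based_vbr(complexity, quality, max_mbps=15)
        average = sum(rates) / len(rates)
        print(f"quality {quality}: {rates} -> average {average:.2f} Mbps")
```

Raising the quality setting raises the bitrate of every second that is below the cap, and therefore the overall average, which is the sense in which the quality setting determines the overall bitrate.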

There are various ways to measure quality. You can look at the peak signal-to-noise ratio (PSNR) as well as other statistics. You can also look at perceptual quality: if 20 people watch the same video clips from different encoders, which ones will they rank higher for quality?
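
PSNR is computed from the mean squared error (MSE) between the original and encoded pixels: PSNR = 10·log10(MAX²/MSE), where MAX is 255 for 8-bit pixels. A minimal Python sketch, using made-up pixel values; note that it measures pixel differences only and says nothing directly about what viewers perceive, which is why subjective rankings are also used.

```python
import math
from typing import Sequence


def psnr(original: Sequence[int], encoded: Sequence[int], max_value: int = 255) -> float:
    """Peak signal-to-noise ratio in decibels; higher means closer to the original."""
    if len(original) != len(encoded) or not original:
        raise ValueError("need two non-empty frames of the same size")
    mse = sum((a - b) ** 2 for a, b in zip(original, encoded)) / len(original)
    if mse == 0:
        return math.inf            # identical frames: no noise at all
    return 10 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    original = [52, 55, 61, 66, 70, 61, 64, 73]     # hypothetical 8-bit pixel values
    slightly_off = [53, 55, 60, 66, 71, 61, 63, 73]
    very_off = [60, 50, 70, 60, 80, 55, 70, 65]
    print(f"{psnr(original, slightly_off):.1f} dB vs {psnr(original, very_off):.1f} dB")
```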

This is where the claims of 30% better compression come from. According to various studies, a video stream encoded in AV1 can use a 30% lower bitrate while achieving the same level of quality. From a personal, subjective point of view, that is hard to verify and equally hard to dispute.
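
What a 30% bitrate saving means in practice is simple arithmetic. The 10Mbps H.265 bitrate and the two-hour running time below are hypothetical values chosen for illustration, not measurements.

```python
def size_gb(bitrate_mbps: float, duration_s: float) -> float:
    """File size in gigabytes (decimal units) for a given average bitrate and duration."""
    return bitrate_mbps * 1e6 * duration_s / 8 / 1e9


if __name__ == "__main__":
    hevc_mbps = 10.0                        # hypothetical H.265 bitrate for a 4K stream
    av1_mbps = hevc_mbps * (1 - 0.30)       # the claimed 30% saving at equal quality
    two_hours = 2 * 60 * 60
    print(f"H.265: {size_gb(hevc_mbps, two_hours):.1f} GB, AV1: {size_gb(av1_mbps, two_hours):.1f} GB")
```

Under these assumptions the AV1 file is 6.3GB instead of 9GB, and a streaming service moves 30% less data for every viewer.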

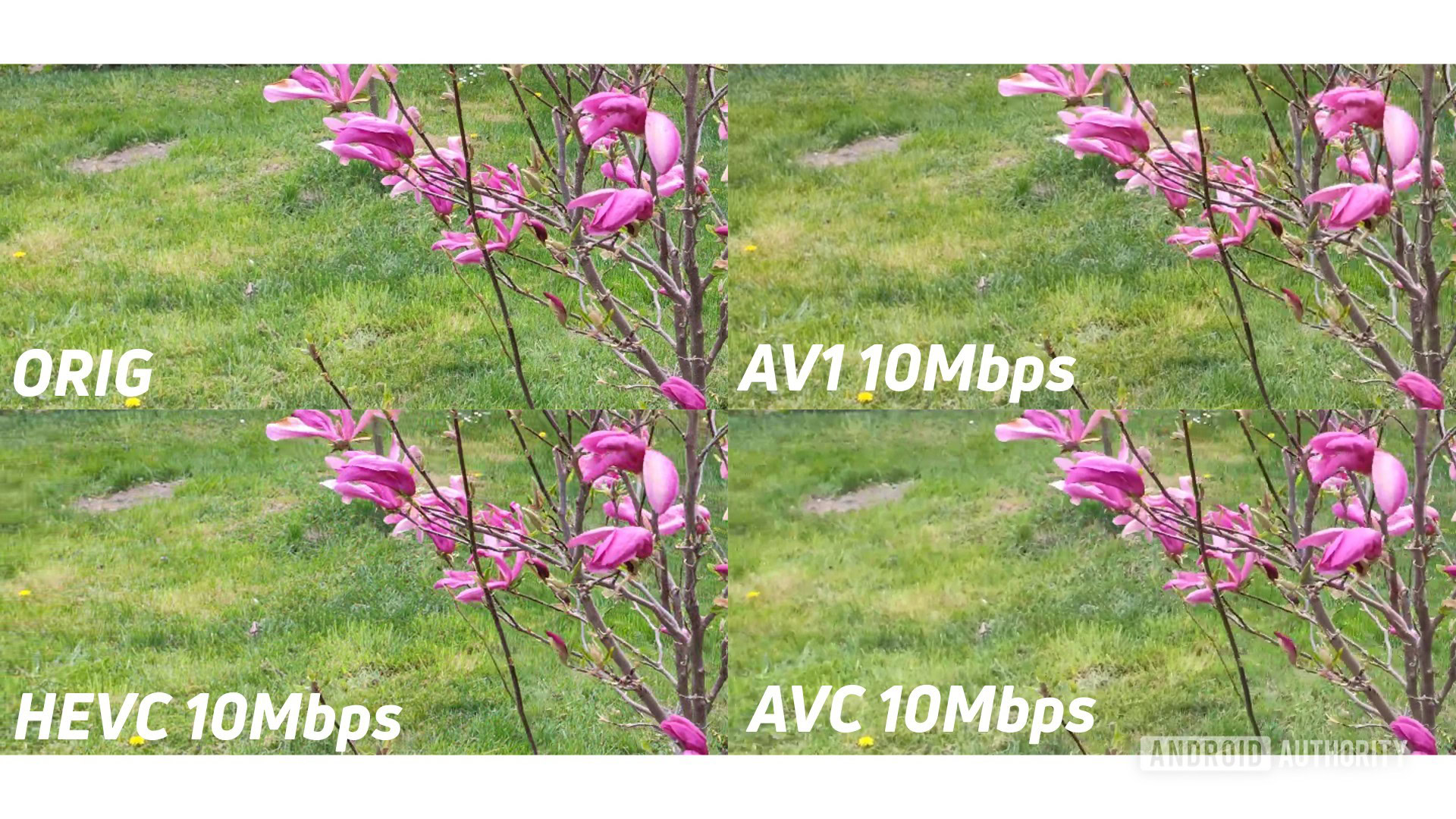

Above is a montage of a single frame from the same video, encoded in three different ways. The top left is the original video. To its right is the AV1 version, with H.264 below it and H.265 below the original source. The original source was 4K. This is a less-than-perfect way to visualize the differences, but it should help illustrate the point.

Due to the reduction in overall resolution (this is a 1,920 x 1,080 image), I find it hard to spot much difference between the four images, especially without pixel peeping. Here is the same type of montage but with the image zoomed in, so we can pixel peep a little.

Here I can see that the original source video probably has the best quality, and H.264 the worst relative to the original. I would struggle to declare a winner between H.265 and AV1. If forced, I would say the AV1 codec does a better job of reproducing the colors on the petals.

One of the claims that Google made about its use of AV1 in its Duo app was that it would “improve video call quality and reliability, even on very low bandwidth connections.” Back to our montage: this time each encoder has been forced to 10Mbps. This is completely unfair on H.264, as it doesn’t claim to offer the same quality at the same bitrates as H.265/AV1, but it helps show the differences. The original is unchanged.

H.264 at 10Mbps is clearly the worst of the three. A quick glance at H.265 and AV1 leaves me feeling that they are very similar. If I go pixel peeping, I can see that AV1 is doing a better job with the grass in the top left-hand corner of the frame. So AV1 is the champ, but only on points; it certainly wasn’t a knockout.

AV1 codec isn’t ready for the masses (yet)

Royalty-free and 30% better. Where do I sign up? But there is a problem, actually a huge problem: encoding AV1 files is slow. My original 4K clip from my smartphone is 15 seconds long. Encoding it into H.264 on my PC, using software only, takes around 1 minute, four times longer than the clip itself. If I use the hardware acceleration available in my NVIDIA video card, it takes 20 seconds, just a little longer than the original clip.

For H.265 things are a little slower. Software-only encoding takes about 5 minutes, quite a bit longer than the original. Fortunately, encoding into H.265 via hardware also takes just 20 seconds, so hardware-accelerated encoding of H.264 and H.265 is similar on my setup.

Before all the video geeks start screaming: yes, I know there are a billion different settings that can alter encoding times. I did my best to make sure I was encoding like-for-like.

My hardware doesn’t support AV1 encoding, so my only option is software. The same 15-second clip, which took five minutes for H.265 in software, takes 10 minutes for AV1. But that wasn’t like-for-like; the settings were tweaked to get the best performance. I tested several variations of the quality settings and presets, and 10 minutes was the best time. One variation I ran took 44 minutes. 44 minutes for 15 seconds of video! This is using the SVT-AV1 encoder that Netflix is keen on. There are alternatives out there, but they are much slower, taking hours and hours.

| Codec (4K, 15-second clip) | Software or hardware | Encoding time |
|---|---|---|
| H.264 | Software | 1 min |
| H.264 | Hardware | 20 secs |
| H.265 | Software | 5 mins |
| H.265 | Hardware | 20 secs |
| AV1 | Software | 10 mins |

This means that if I have edited a one-hour movie of my vacation somewhere exotic, converting it to H.265 using hardware acceleration on my PC will take 80 minutes. The same file using the current software AV1 encoders will take 40 hours!
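
The 80-minute and 40-hour figures come from scaling the 15-second measurements linearly. The Python sketch below does the same for every row of the encoding-time table, assuming that encoding time grows in proportion to video length, which is a simplification.

```python
CLIP_SECONDS = 15

# Encoding times (in seconds) for the 15-second 4K clip, taken from the table above.
MEASURED = {
    ("H.264", "software"): 60,
    ("H.264", "hardware"): 20,
    ("H.265", "software"): 5 * 60,
    ("H.265", "hardware"): 20,
    ("AV1", "software"): 10 * 60,
}


def extrapolate_hours(encode_s: float, clip_s: float, target_s: float) -> float:
    """Assumes encoding time grows linearly with video length (a simplification)."""
    return encode_s / clip_s * target_s / 3600


if __name__ == "__main__":
    one_hour = 60 * 60
    for (codec, mode), seconds in MEASURED.items():
        factor = seconds / CLIP_SECONDS
        hours = extrapolate_hours(seconds, CLIP_SECONDS, one_hour)
        print(f"{codec} ({mode}): {factor:.1f}x the clip length -> about {hours:.1f} hours per hour of video")
```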

That is why it isn’t ready for the masses (yet). Improvements will come to the encoders: the software will get better, and hardware support will start to appear. The decoders are already becoming lean and efficient; that is how Netflix is able to stream some content in AV1 to Android devices. But as a ubiquitous replacement for H.264? No, not yet.