What is Apple's Photonic Engine all about?

Apple’s iPhone 14 series announcement came full of surprises. With so many odd Apple terms thrown around, we can understand how things can get a bit confusing. One feature you might not fully understand is Apple’s new Photonic Engine. Let’s talk about it!

QUICK ANSWER

Apple's Photonic Engine is an evolution of the company's earlier Deep Fusion technology. Deep Fusion is a technique in which the iPhone camera takes multiple shots using different settings. It then uses machine learning and the Neural Engine to merge these images, keeping the best parts of each shot.

With the Photonic Engine on iPhone 14 and newer models, this merging starts earlier in the image-processing pipeline, while the images are still uncompressed. Apple says this preserves more detail and texture, improves exposure, and makes colors more vibrant in lower-light environments.

First, let’s talk about Deep Fusion

Since Apple’s Photonic Engine is an evolution of Deep Fusion, we must first talk about its predecessor. Apple introduced Deep Fusion with the iPhone 11 series. It’s Apple’s way of handling image processing in darker environments. It retains textures, makes images sharper, improves lighting, and improves color reproduction.

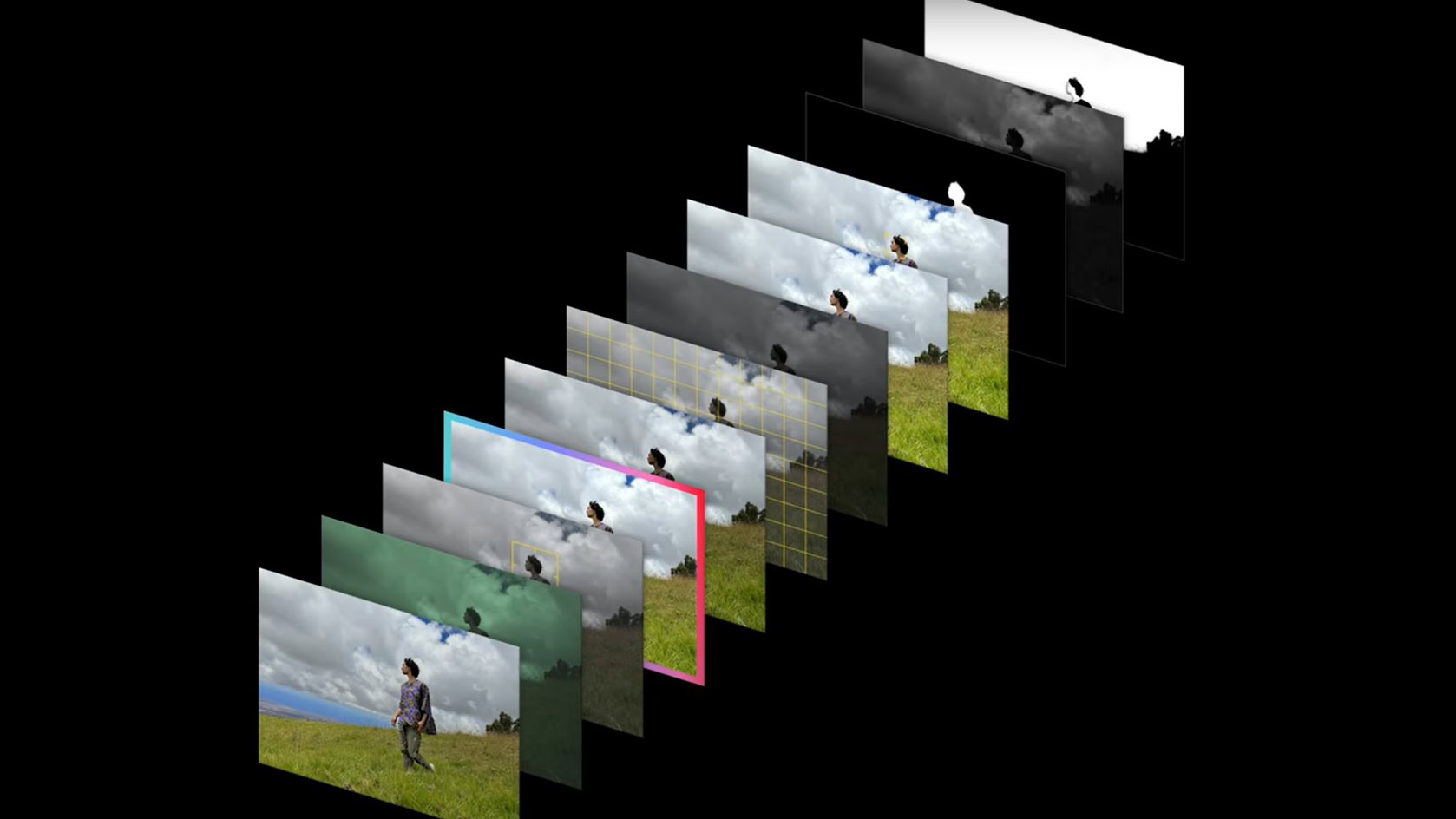

Apple’s Deep Fusion does this by shooting nine images in total. According to Apple, the phone has already captured four short-exposure frames and four secondary frames by the time you press the shutter button. Pressing the shutter then adds one longer exposure. All nine images are merged into a single photo that keeps the best parts of each. The Neural Engine works pixel by pixel to get the most out of every frame.
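To make the pixel-by-pixel idea more concrete, here is a minimal Python sketch. It is not Apple's algorithm, which relies on machine learning and has not been published. It assumes each frame is a simple row of brightness values and uses a hypothetical `local_contrast` score to decide which frame looks sharpest at each pixel.

```python
def local_contrast(frame, i):
    """Toy detail score: how far a pixel sits from the mean of its two neighbours."""
    left = frame[max(i - 1, 0)]
    right = frame[min(i + 1, len(frame) - 1)]
    return abs(frame[i] - (left + right) / 2)


def fuse_frames(frames, eps=1e-6):
    """Merge same-sized 1-D frames pixel by pixel, favouring the sharpest frame."""
    fused = []
    for i in range(len(frames[0])):
        # eps keeps the weights non-zero in flat areas where every score is 0
        weights = [local_contrast(frame, i) + eps for frame in frames]
        total = sum(weights)
        fused.append(sum(w * frame[i] for w, frame in zip(weights, frames)) / total)
    return fused


# The same edge captured twice: once smeared by motion, once crisp
blurry = [10, 10, 40, 70, 100, 100]
sharp = [10, 10, 10, 100, 100, 100]
print(fuse_frames([blurry, sharp]))
```

In this toy case the fused row follows the crisp frame around the edge, because that is where the crisp frame scores highest.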

How is Photonic Engine different?

Apple’s “new” technology seems to be the same concept as Deep Fusion, but extended. The main difference is that the merging now happens, in Apple’s words, “much earlier in the process, on uncompressed images.” Apple claims this will improve detail, color, and exposure in low-light situations.
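Why would merging earlier matter? Apple hasn't published the details, so treat the following Python snippet as a toy illustration only. It assumes lossy compression can be modeled as coarse rounding (the hypothetical `quantize` function) and compares merging four readings of one dim pixel before and after that rounding.

```python
def quantize(value, step):
    """Hypothetical stand-in for lossy compression: snap to the nearest multiple of step."""
    return round(value / step) * step


def average(values):
    return sum(values) / len(values)


# One dim pixel seen in four frames, on a 0..255 brightness scale (made-up values)
frames = [2.6, 3.1, 2.9, 3.4]
step = 8

# Compress every frame first, then merge: each faint reading rounds down to zero
merged_late = average([quantize(v, step) for v in frames])

# Merge the uncompressed readings first: the faint signal survives the merge
merged_early = average(frames)

print(merged_late, merged_early)
```

The point of the sketch is only that faint shadow detail can vanish if each frame is degraded before the frames are combined.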

Apple claims this technology improves low-light photos by up to two or three times, depending on the camera and the iPhone model.

So, it’s pretty much like HDR photography?

Photography enthusiasts and techies will probably realize this sounds much like HDR (High Dynamic Range). In HDR photography, a photographer will shoot the same frame at multiple exposure levels. These photos can then be merged using specialized software, and the result will be an image with more details in the shadows and highlights. You can learn more about this in our HDR guide.
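For the curious, here is a rough Python sketch of how merging bracketed exposures can work. It uses a simple exposure-fusion approach, where each pixel is weighted by how close it is to mid-gray, rather than the full radiance-map method dedicated HDR software may use. The `well_exposedness` function, the `sigma` value, and the sample pixel values are all assumptions made for illustration.

```python
import math


def well_exposedness(value, sigma=0.2):
    """Weight peaks at mid-gray (0.5) and drops toward clipped black or white."""
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))


def merge_exposures(exposures):
    """Blend bracketed exposures (pixel values in 0..1) pixel by pixel."""
    merged = []
    for pixels in zip(*exposures):
        weights = [well_exposedness(p) for p in pixels]
        total = sum(weights)
        merged.append(sum(w * p for w, p in zip(weights, pixels)) / total)
    return merged


# Three pixels: deep shadow, midtone, bright highlight
under = [0.02, 0.05, 0.45]  # shadows crushed, highlight well exposed
over = [0.40, 0.55, 1.00]   # shadows well exposed, highlight clipped
print(merge_exposures([under, over]))
```

The merged shadow pixel leans on the brighter shot, while the merged highlight leans on the darker one, which is the basic trade HDR makes.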

While Apple’s approach resembles HDR in that it merges multiple frames, the goal is not quite the same. HDR focuses mainly on improving exposure and contrast. Apple’s Photonic Engine does a bit more, looking into things like sharpness, detail, color, motion blur, and more.

If you’re lost about some of these terms, we also have a list of the most essential photography terms to learn.

Can I turn Photonic Engine on or off?

You can’t turn Photonic Engine on or off manually. The camera software will decide to use it during low-light and mid-light situations, when it deems it necessary. The same was the case with Deep Fusion, and it was a decision made by Apple to avoid people having to pick and choose how to take better images. Apple wants the iPhone to do all the deciding so that all you have to do is press the shutter button. It’s part of the whole “it just works” philosophy.

Does it replace Night Mode?

Photonic Engine is a technology that improves general images. It will likely be activated on most photo shots, especially if they include at least some darker areas. Night Mode focuses on making very dark photos brighter. Some of its processes and features might overlap, but we can say Night Mode is for much darker scenes needing more help. The process is slower, but it can usually pull up more exposure. As such, Photonic Engine and Night Mode will live together in the iPhone photo experience, at least for now.

Does Android have its version of Photonic Engine?

While Apple makes the feature sound new and exciting, it’s a technique that’s been around in the Android ecosystem for a while. One of the most obvious examples is Google’s AI camera, found on its Pixel devices, including the Pixel 7 series. Google’s AI camera has a technique it calls “optical flow.” Optical flow can shoot over 12 images in rapid succession and then combine them into a single, improved photo.

Additionally, all Android smartphone manufacturers use a certain level of computational photography, and most have features like HDR and Night Mode.

FAQs

Apple’s Photonic Engine is an improved version of Deep Fusion. They both take multiple photos and merge them into a single one. The main difference is that Photonic Engine starts merging earlier in the process, while the images are still uncompressed.

Apple’s Photonic Engine technology can’t be turned on or off at will. Supported iPhones will decide when to use it to improve low-light photos.

On Android, Google uses a very similar technique on its Pixel phones, which it calls optical flow.

While it uses a very similar method for improving photos, Photonic Engine isn’t the same as HDR. Apple’s Photonic Engine focuses on improving more than exposure and contrast. It also works with textures, colors, and more.

Photonic Engine is available only for iPhone 14 series and newer. So far, Apple has given us no signs it will update older devices with the new technology.