
What is the Kirin 970's NPU? - Gary explains

Neural Networks (NN) and Machine Learning (ML) were two of the year’s biggest buzzwords in mobile processing. HUAWEI’s HiSilicon Kirin 970, the image processing unit (IPU) inside the Google Pixel 2, and Apple’s A11 Bionic all feature dedicated hardware solutions for NN/ML.

Since HUAWEI, Google, and Apple are all touting hardware-based neural processors or engines, you might think that machine learning requires a dedicated piece of hardware. It doesn’t. Neural networks can be run on just about any type of processor, from microcontrollers to CPUs, GPUs, and DSPs. Any processor that can perform matrix multiplications can probably run a neural network of some kind. The question isn’t whether the processor can run NN and ML workloads, but rather how fast and how efficiently it can.

Let me take you back to a time when the humble desktop PC didn’t include a Floating Point Unit (FPU). PCs built around the Intel 386 and 486 processors came in two flavors, ones with an FPU and ones without. By floating point I basically mean “real numbers,” including integers (7, -2 or 42), fractions (1/2, 4/3 or 3/5), and all the irrational numbers (pi or the square root of two). Many types of calculations require real numbers. Calculating percentages, plotting a circle, currency conversions, and 3D graphics all require floating point numbers. Back in the day, if you owned a PC without an FPU, the relevant calculations were performed in software, which was much slower than performing them in a hardware FPU.


Fast forward 30 years and all general purpose CPUs contain hardware floating point units, as do even some microcontrollers (like some Cortex-M4 and M7 cores). We are now in a similar situation with NPUs. You don’t need an NPU to use neural networks, or even to use them effectively. But companies like HUAWEI are making a compelling case for NPUs when it comes to real-time processing.

Difference between training and inference

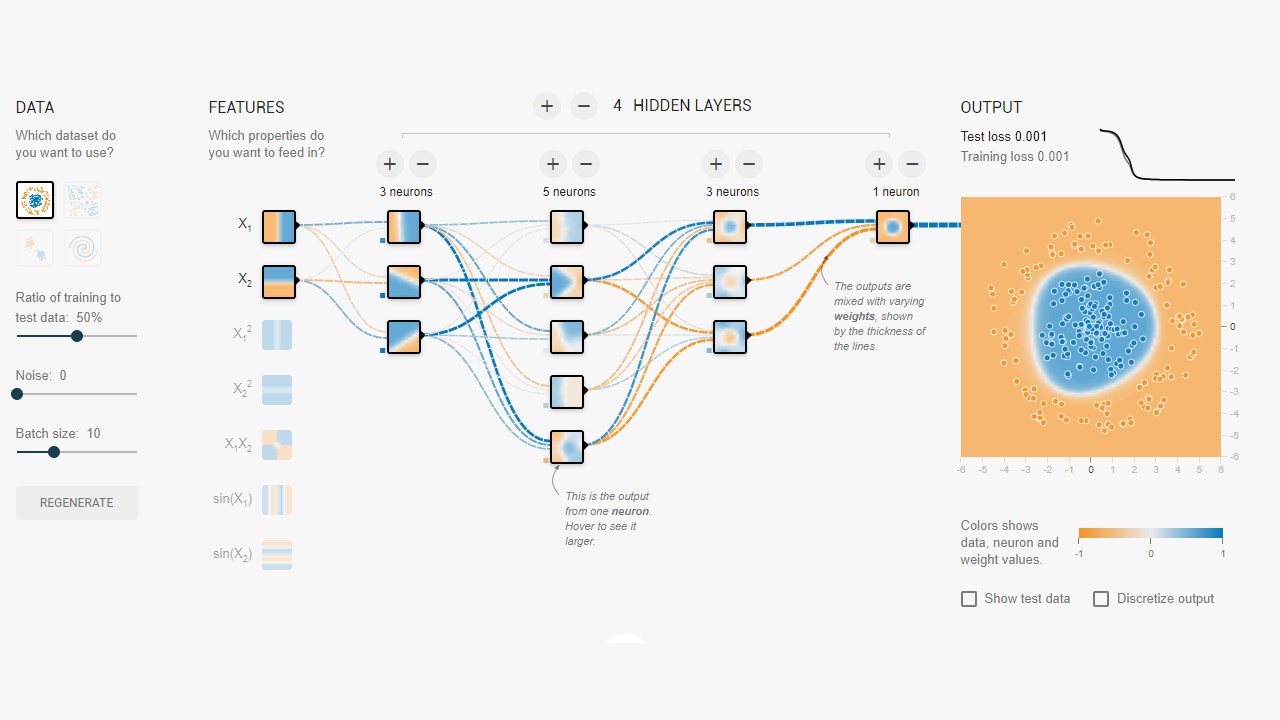

Neural Networks are one of several different techniques in Machine Learning to “teach” a computer to distinguish between things. The “thing” might be a photo, a spoken word, an animal noise, whatever. A Neural Network is a set of “neurons” (nodes) which receive input signals and then propagate a signal further across the network depending on the strength of the input and its threshold.

A simple example would be an NN that detects if one of several lights is switched on. The status of each light is sent to the network and the result is either zero (if all the lights are off), or one (if one or more of the lights are on). Of course, this is possible without Neural Networking, but it illustrates a very simple use case. The question here is how does the NN “know” when to output zero and when to output one? There are no rules or programming which tell the NN the logical outcome we are trying to achieve.
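To make the lights example concrete, here is a minimal sketch of a single “neuron” in Python. The function name `neuron` and the hand-picked `WEIGHTS` and `THRESHOLD` values are assumptions for illustration only. They are chosen by hand here, which is exactly the step that training is meant to replace.

```python
def neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of the inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

# Hypothetical hand-picked values: each light counts equally,
# and a single lit light is enough to cross the threshold.
WEIGHTS = [1.0, 1.0, 1.0]
THRESHOLD = 0.5

print(neuron([0, 0, 0], WEIGHTS, THRESHOLD))  # 0: all lights off
print(neuron([0, 1, 0], WEIGHTS, THRESHOLD))  # 1: one light on
print(neuron([1, 1, 1], WEIGHTS, THRESHOLD))  # 1: all lights on
```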

The way to get the NN to behave correctly is to train it. A set of inputs is fed into the network, along with the expected result. The various thresholds are then adjusted slightly to make the desired result more likely. This step is repeated for all inputs in the “training data.” Once trained, the network should yield the appropriate output even when the inputs have not been previously seen. It sounds simple, but it can be very complicated, especially with complex inputs like speech or images.
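As a rough sketch of that loop, the snippet below trains a single neuron on the lights problem using the classic perceptron learning rule. This is a deliberately simple stand-in, not how production networks are trained (those typically use backpropagation across many layers), and it adjusts per-input weights plus a bias rather than literal “thresholds.” The names `predict` and `train` and the learning rate are assumptions for illustration.

```python
from itertools import product

def predict(inputs, weights, bias):
    """Output 1 when the weighted sum plus bias is positive, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0

def train(samples, epochs=20, learning_rate=0.1):
    """Nudge the weights and bias a little after every wrong answer."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, expected in samples:
            error = expected - predict(inputs, weights, bias)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Every combination of three lights, labeled 1 if any light is on.
all_cases = [(list(bits), int(any(bits))) for bits in product([0, 1], repeat=3)]

# Train only on the cases with at most one light on...
training_data = [(bits, label) for bits, label in all_cases if sum(bits) <= 1]
weights, bias = train(training_data)

# ...then check the cases the network never saw during training.
unseen = [(bits, label) for bits, label in all_cases if sum(bits) > 1]
for bits, label in unseen:
    print(bits, "expected", label, "got", predict(bits, weights, bias))
```

The network is never told the rule “output one if any light is on.” It only sees examples, and the small corrections add up to values that also give the right answer for combinations it was not trained on.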

Once a network is trained, it is basically a set of nodes, connections, and the thresholds for those nodes. While the network is being trained, its state is dynamic. Once training is complete, it becomes a static model, which can then be implemented across millions of devices and used for inference (i.e. for classification and recognition of previously unseen inputs).
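One way to picture the “static model” is as a small file of numbers that ships to every device. The sketch below assumes a toy single-neuron model and uses JSON purely for illustration. The function names and the example weights are hypothetical, and real frameworks use their own model formats.

```python
import json

def export_model(weights, bias):
    """Freeze the trained values into a string that can be shipped to devices."""
    return json.dumps({"weights": weights, "bias": bias})

def load_model(blob):
    """Read the frozen values back; nothing is adjusted from here on."""
    data = json.loads(blob)
    return data["weights"], data["bias"]

def classify(model, inputs):
    """Inference only: apply the fixed weights to a new input."""
    weights, bias = model
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0

# Hypothetical values standing in for the result of a training run.
blob = export_model([0.1, 0.1, 0.1], 0.0)
model = load_model(blob)

print(classify(model, [0, 1, 0]))  # 1
print(classify(model, [0, 0, 0]))  # 0
```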

The inference stage is easier than the training stage and this is where the NPU is used.

Fast and efficient inference

Once you have a trained neural network, using it for classification and recognition is just a case of running inputs through the network and using the output. The “running” part is all about matrix multiplications and dot product operations. Since these are really just math, they could be run on a CPU, a GPU, or a DSP. However, what HUAWEI has done is design an engine which can load the static neural network model and run it against the inputs. Since the NPU is dedicated hardware, it can do that quickly and in a power-efficient manner. In fact, the NPU can process “live” video from a smartphone’s camera in real time, anywhere from 17 to 33 frames per second depending on the task.
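To show why inference boils down to matrix math, here is a minimal pure-Python sketch of a forward pass through a tiny two-layer network. The weights in `MODEL` are made up, the ReLU activation is an assumed choice, and none of this reflects how the Kirin 970’s NPU is actually programmed. The `frame_budget_ms` helper simply converts the frame rates quoted above into the time available per frame.

```python
def matvec(matrix, vector):
    """Matrix-vector product: one dot product per row of the matrix."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def relu(vector):
    """A common activation function: negative values become zero."""
    return [max(0.0, v) for v in vector]

def infer(model, inputs):
    """Run the inputs through each layer of fixed weights and biases."""
    activations = inputs
    for weights, biases in model:
        summed = [s + b for s, b in zip(matvec(weights, activations), biases)]
        activations = relu(summed)
    return activations

def frame_budget_ms(frames_per_second):
    """Time available to process one frame at a given frame rate."""
    return 1000.0 / frames_per_second

# Hypothetical static model: a list of (weight matrix, bias vector) layers.
MODEL = [
    ([[1.0, 1.0, 1.0], [0.5, -0.5, 0.0]], [0.0, 0.0]),  # 3 inputs -> 2 nodes
    ([[1.0, 2.0]], [-0.5]),                             # 2 nodes -> 1 output
]

print(infer(MODEL, [1.0, 0.0, 1.0]))  # [2.5]
print(infer(MODEL, [0.0, 0.0, 0.0]))  # [0.0]
print(round(frame_budget_ms(33), 1), round(frame_budget_ms(17), 1))  # 30.3 58.8
```

Every step is a multiply followed by an add, repeated many times over. That regularity is what makes the work a good fit for dedicated circuits, and at 17 to 33 frames per second the whole pass has to finish in roughly 30 to 59 milliseconds.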


The NPU

The Kirin 970 is a powerhouse. It has 8 CPU cores and 12 GPU cores, plus all the other normal bells and whistles for media processing and connectivity. In total the Kirin 970 has 5.5 billion transistors. The Neural Processing Unit, including its own SRAM, is hidden among those. But how big is it? According to HUAWEI, the NPU takes up roughly 150 million transistors. That is less than 3 percent of the whole chip.
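The arithmetic behind that “less than 3 percent” figure is easy to check. The snippet below uses only the two transistor counts quoted above; the variable names are just for illustration.

```python
TOTAL_TRANSISTORS = 5_500_000_000  # whole Kirin 970, as quoted above
NPU_TRANSISTORS = 150_000_000      # NPU including its SRAM, per HUAWEI

npu_share_percent = NPU_TRANSISTORS / TOTAL_TRANSISTORS * 100
print(f"{npu_share_percent:.1f}%")  # 2.7%
```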

Its size is important for two reasons. First, it doesn’t increase the overall size (and cost) of the Kirin SoC dramatically. Obviously it has a cost associated with it, but not on the level of CPU or GPU. That means adding an NPU to SoCs is possible not only for those in flagships, but also mid-range phones. It could have a profound impact on SoC design over the next 5 years.

Second, it is power efficient. This isn’t some huge power hungry processing core that will kill battery life. Rather it is a neat hardware solution that will save power by moving the inference processing away from the CPU and into dedicated circuits.

One of the reasons the NPU is small is that it only does the inference part, not the training. According to HUAWEI, when training up a new NN, you need to use the GPU.

Wrap-up

If HUAWEI can get third-party app developers on board to use its NPU, the possibilities are endless. Imagine apps using image, sound, and voice recognition, all processed locally (without an internet connection or “the cloud”) to enhance and augment our apps. Think of a tourist feature that points out local landmarks directly from within your camera app, or apps that recognize your food and give you information about the calorie count or warn you of allergies.

What do you think? Will NPUs eventually become standard in SoCs, just like Floating Point Units became standard in CPUs? Let me know in the comments below.