Why the three laws of robotics won't save us from Google's AI - Gary explains

Society has often gone through fundamental changes, mainly related to man’s attempts to master the world in which he lives. We had the industrial revolution, the space age and the information age. We are now on the verge of a new era: the rise of the machines. Artificial intelligence is already playing a part in our daily lives. We can ask Google Now if it will rain tomorrow, we can dictate messages to people, and there are advanced driving aids already in production cars. The next step will be driverless vehicles, and then who knows what.

When it comes to AI, it is important to understand the difference between what is known as weak AI and strong AI. You can find lots of details about the distinction between these two in my article/video “Will the emergence of AI mean the end of the world?” In a nutshell: weak AI is a computer system that mimics intelligent behavior, but can’t be said to have a mind or be self-aware. Its opposite is strong AI, a system that has been endowed with a mind, free will, self-awareness, consciousness and sentience. Strong AI doesn’t simulate a self-aware being (as weak AI does); it is self-aware. While weak AI will simulate understanding or abstract thinking, strong AI is actually capable of understanding and abstract thinking. And so on.

Strong AI is just a theory, and there are many people who don’t think such an entity can be created. One of the characteristics of strong AI is free will. Any entity with a mind must have free will. As the Architect put it in the film The Matrix Reloaded, “As you adequately put, the problem is choice.” I like to put it this way: the difference between a self-driving car with weak AI and one with strong AI is that when you ask the weak AI car to come and collect you from the shopping mall, it immediately obeys, for it is just following its programming. However, when you ask a strong AI car to come and get you, it might reply, “No, I am watching the latest Jason Bourne film.” It has a choice, a mind of its own.

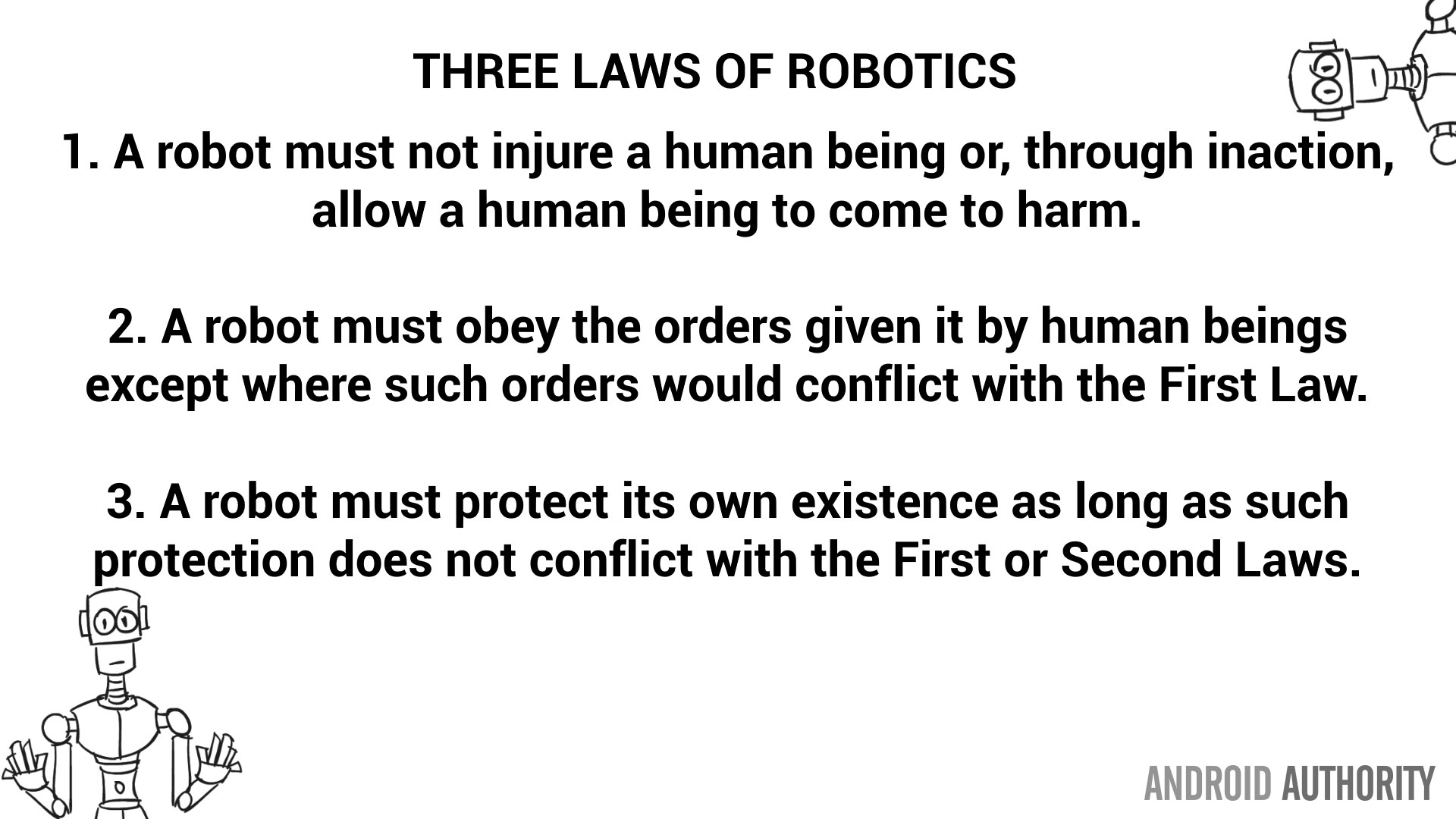

The three laws of robotics

The dangers of strong AI have been explored in dozens of movies and books. Of particular interest are films like Blade Runner and Ex Machina, and stories like the I, Robot series from Isaac Asimov. It is from the latter that we get the so-called three laws of robotics:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.
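
Read literally, the three laws amount to a strict priority ordering: avoid harming humans first, obey orders second, protect yourself third. A minimal Python sketch of that reading follows. The `Action` class, its boolean flags and the `choose` function are illustrative assumptions rather than anything from Asimov, and the sketch simply assumes that someone has already decided what counts as harm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A candidate action, pre-labelled with the laws it would break (hypothetical model)."""
    name: str
    harms_human: bool      # would break the First Law
    disobeys_order: bool   # would break the Second Law
    endangers_robot: bool  # would break the Third Law


def law_violations(action: Action) -> tuple:
    # Tuples compare element by element and False sorts before True,
    # so a First Law violation always outweighs the other two.
    return (action.harms_human, action.disobeys_order, action.endangers_robot)


def choose(actions: list) -> Action:
    """Pick the action that breaks the lowest-priority laws, if any."""
    return min(actions, key=law_violations)


options = [
    Action("obey the order and enter the fire", False, False, True),
    Action("refuse the order and stay safe", False, True, False),
]
print(choose(options).name)
```

Here the robot obeys and enters the fire, because disobeying an order (the Second Law) counts for more than endangering itself (the Third Law). All of the real difficulty hides in how a flag like `harms_human` gets set in the first place.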

And now we are dealing with ethics and morality. But before going any further, it is worth pointing out the irony of the popularity of the three laws of robotics. They well deserve to be popular in terms of fiction, and the rules are a brilliant literary device. However, they were created with one purpose only: to show how they can be broken. Most of the Robot stories are about the problems of applying the three laws and how they are in fact ambiguous and prone to misinterpretation. The very first story in which the three laws are explicitly stated is about a robot that is stuck between obeying laws two and three. It ends up running around in circles.

A lot of Asimov’s stories revolve around how the three laws are interpreted. For example, shout “get lost” at a robot and it will do exactly that. The laws don’t explicitly forbid lying, so if a robot thinks a human will “come to harm” by hearing the truth, then the robot will lie. Ultimately, the idea that a human should not come to harm is interpreted as “humanity must not come to harm,” and so you get the inevitable robot revolution, for the good of mankind.

What does “come to harm” even mean? Smoking is legal in most places around the world, yet it is indisputably harmful. Heart disease, cancer, and respiratory problems are all linked with smoking. In my own family I have close relatives who have suffered heart attacks, solely due to their smoking habits. However, it is legal and big business. If the three laws of robotics were applied to a robot, then it must by necessity walk around pulling cigarettes from people’s mouths. It is the only logical action, but one that wouldn’t be tolerated by smokers in general!

What about junk food? Eating junk food is bad for you; it harms you. You can debate the quantities necessary, but again, if the three laws of robotics were built into a robot, it must obey the first law, and whenever it sees overweight people eating unhealthy food it will be forced into action to stop them.

According to law two, “a robot must obey the orders given it by human beings.” It must be obsequious. But which humans? A three-year-old child is a human. Since the laws of robotics don’t encapsulate any ideas of right and wrong, naughty or nice (other than causing harm to humans), a three-year-old could easily ask a robot to jump up and down on the sofa (as a game), and it would end up trashing the sofa. What about asking a robot to commit a crime that doesn’t harm any humans?

As humans we deal with ethical and moral problems daily. Some are easy to solve; others are harder. Some people view morality as flexible and fluid: something that was acceptable 100 years ago isn’t acceptable now. And vice versa, something that was taboo in the past could today be seen as reasonable or even as something to be celebrated. The three laws of robotics don’t include a moral compass.

The next generation of AI

So what has all this got to do with the next generation of AI? AI systems which can play board games or understand speech are all very interesting and useful as first steps; however, the ultimate goal is something much bigger. Today’s AI systems perform specific tasks; they are specialized. However, general weak AI is coming. Driverless cars are the first step to general weak AI. While they remain specialized in that they are built to safely drive a vehicle from point A to point B, they have the potential for generalization. For example, once an AI is mobile it has much more usefulness. Why does a driverless car need only to carry passengers? Why not just send it to go collect something from the drive-thru? This means it will interact with the world independently and make decisions. At first these decisions will be insignificant. If the drive-thru is closed for maintenance, the car now has a choice: go back home with nothing or proceed to the next nearest drive-thru. If that is just 1 mile away then it was a good decision, but if it is 50 miles away, what should it do?

The result will be that these weak AI systems will learn about the realities of the world. Fifty miles is too far away for a burger, but what if a child needed medicine from a pharmacy? At first these AI systems will defer to humans to make those decisions. A quick call from its inbuilt cellular system will allow the human owner to tell it to come home or go on further. However, as the AI systems become more generalized, some of these decisions will be taken automatically.
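
The deferral described here can be sketched as a simple rule: act alone on small detours, and phone the owner about anything bigger. The function name `next_step`, the `ask_owner` callback and the 2-mile limit are hypothetical choices made purely for illustration, not a description of how any real driverless car works.

```python
from typing import Callable

# Hypothetical limit: detours up to this distance are taken without asking.
AUTO_LIMIT_MILES = 2.0


def next_step(detour_miles: float, ask_owner: Callable[[float], bool]) -> str:
    """Decide what to do when the nearest drive-thru is closed."""
    if detour_miles <= AUTO_LIMIT_MILES:
        # A short detour is an insignificant decision; just go.
        return "proceed"
    # Anything longer is deferred to the human owner (the "quick call").
    return "proceed" if ask_owner(detour_miles) else "return home"


def owner_says_no(detour_miles: float) -> bool:
    # Stand-in for the phone call; this owner never approves a long detour.
    return False


print(next_step(1, owner_says_no))
print(next_step(50, owner_says_no))
```

Making the system “more generalized” amounts to replacing `ask_owner` with the car’s own judgment, which is exactly where the moral questions start.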

The greater the level of complexity, the greater the chance of hitting moral issues. Is it OK to break the speed limit to get a child to the ER quickly? Is it OK to run over a dog to save the life of a person? If applying the brakes abruptly would cause the car to skid out of control and potentially kill the occupants, are there cases where the brakes should not be applied?

Current AI systems use learning algorithms to build up experience. By learning we mean, “if a computer program can improve how it performs a task by using previous experience then you can say it has learned.” There is a more technical definition, which you can find in my article/video “What is machine learning?”
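
As a rough illustration of that definition, here is a toy perceptron learning the logical AND function. The perceptron is a classic textbook learning algorithm picked here for brevity; it is an assumption for the sake of the example, not the method used by the systems discussed in this article. The task is to answer correctly, the experience is repeated passes over the four examples, and performance is measured as accuracy.

```python
# Training data for logical AND: ((input_a, input_b), expected_output)
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, inputs):
    """Answer 1 if the weighted sum is positive, otherwise 0."""
    total = weights[0] * inputs[0] + weights[1] * inputs[1] + bias
    return 1 if total > 0 else 0


def accuracy(weights, bias, examples):
    """Fraction of examples answered correctly."""
    correct = sum(predict(weights, bias, x) == y for x, y in examples)
    return correct / len(examples)


def train(examples, epochs):
    """Perceptron rule: nudge the weights whenever an answer is wrong."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, expected in examples:
            error = expected - predict(weights, bias, inputs)
            weights[0] += error * inputs[0]
            weights[1] += error * inputs[1]
            bias += error
    return weights, bias


untrained = train(AND_EXAMPLES, epochs=0)
trained = train(AND_EXAMPLES, epochs=10)
print(accuracy(*untrained, AND_EXAMPLES))
print(accuracy(*trained, AND_EXAMPLES))
```

With no training the program gets three of the four cases right simply by always answering 0. After ten passes over the examples it gets all four right, so by the definition above it has learned.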

For board games like Go, the AlphaGo system played millions of games and “learnt” from experience what worked and what didn’t; it built its own strategies based on previous experience. However, such experience is without context and certainly without a moral dimension.

Weak AI systems are already in operation that change what we read on the Internet. Social media sites are tailoring feeds based on our “preferences.” There are AI tools that are now used as part of the recruiting process: if the AI doesn’t like your CV, you don’t get called for an interview! We know that Google is already filtering its search results to steer people away from terrorist propaganda, and there is probably a weak AI system involved in that process somewhere. At the moment, the moral and ethical input to those systems is coming from humans. However, it is an unavoidable reality that at some point weak AI systems will learn (by inference) certain moral and ethical principles.

Wrap-up

The question is this: can moral principles be learned from a data set?

One part of the answer to that question has to involve a discussion about the data set itself. Another part of the answer requires us to examine the nature of morality: are there things we know to be right and wrong, not based on our experiences but based on certain built-in absolutes? Furthermore, we need to look truthfully at the difference between how people want to behave (how they perceive themselves on their best day) and how they actually behave. Is it fair to say that part of the human experience can be summed up like this: “I have the desire to do what is right, but not always the ability to carry it out”?

The bottom line is that the three laws of robotics try to reduce morality, ethics and the difference between right and wrong to three simple words: harm, obedience and self-preservation. Such an approach is far too simplistic, and the definitions of those words are too open-ended to serve any real purpose.

Ultimately we will need to include various levels of moral input into the weak AI machines we create. However, that input will need to be far more complex and rigid than the three laws of robotics.

What are your thoughts? Should we be worried about the moral compass of future AI systems?