Will the emergence of AI mean the end of the world?

Some quite famous people have been making some quite public statements about AI recently. One of the first was Elon Musk, who said that Artificial Intelligence (AI) was “potentially more dangerous than nukes.” Stephen Hawking is also concerned. “The development of full artificial intelligence could spell the end of the human race,” Professor Hawking told the BBC. “It would take off on its own, and re-design itself at an ever increasing rate.”

That was late 2014. In early 2015 Bill Gates also went on record to say that, “I am in the camp that is concerned about super intelligence. I agree with Elon Musk and some others on this and don’t understand why some people are not concerned.”

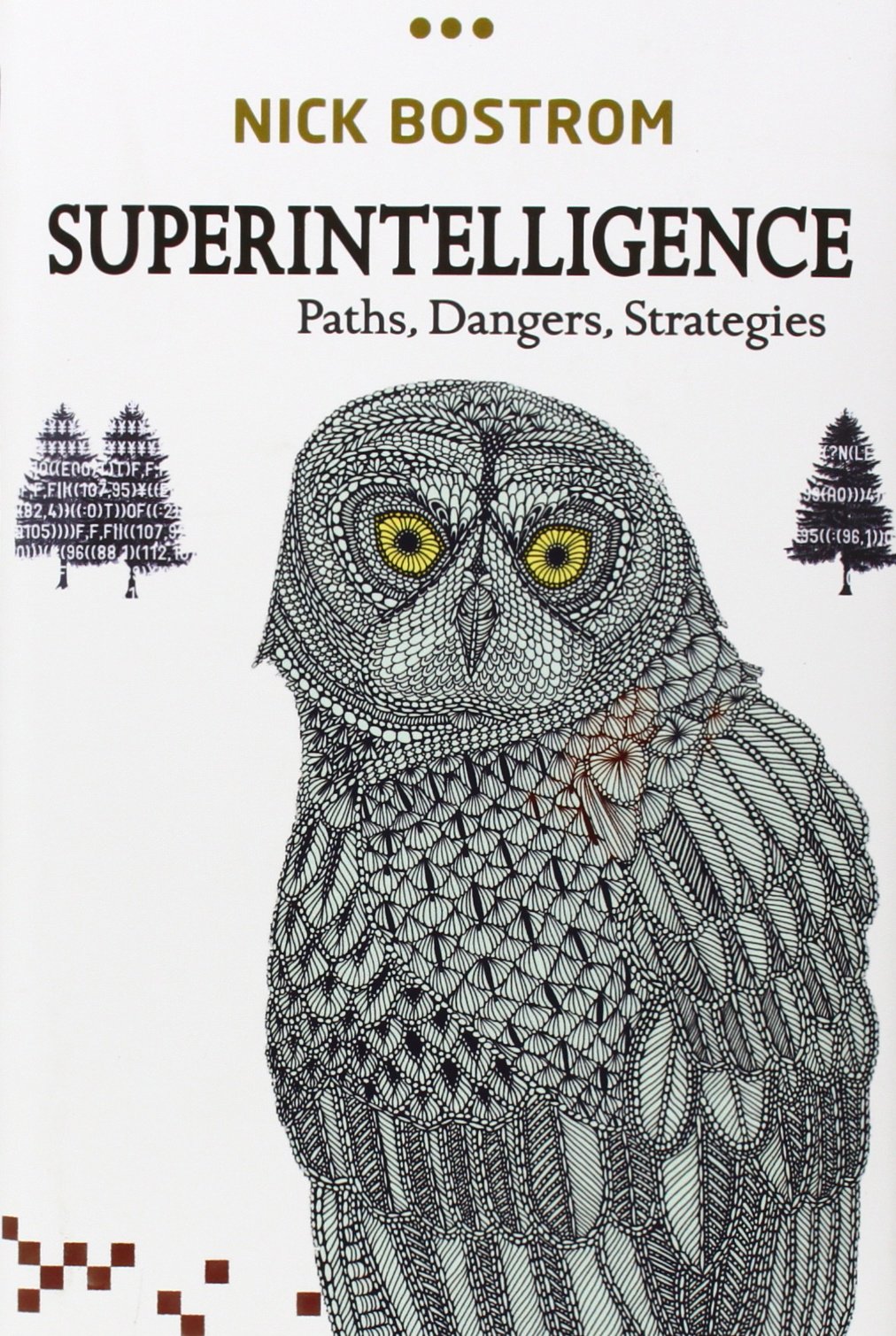

So what is all the fuss about? In a nutshell, they have all been reading Superintelligence: Paths, Dangers, Strategies, a book by Nick Bostrom, an Oxford Professor of Philosophy, which outlines the dangers of Artificial Superintelligence (ASI) and possible strategies for dealing with it.

Artificial Super-what? OK, we need to take a step back and define a few things before we can really understand what Elon and his buddies are talking about.

Artificial Intelligence

Since the dawn of the computer age there have been scientists, philosophers, authors and movie makers, who have talked about Artificial Intelligence (AI) in one form or another. In the 1960s and 1970s pundits declared that we were only a step away from creating a computer that could think. Obviously that didn’t happen and in all fairness today’s AI experts are less specific about when the problem of creating an AI will be solved.

Because of the emotive and profound concepts surrounding general and artificial intelligence, the AI community has come up with three special terms to classify exactly what we mean by AI. They are weak AI, strong AI, and Artificial Superintelligence.

Weak AI

Weak AI is a computer system which can imitate and simulate the various aspects of human consciousness and understanding. At no point do the designers of a weak AI system claim it has a mind or self-awareness. However, it can interact with humans in a way that, on the surface at least, makes the machine appear to have a form of intelligence.

When I was 11 or 12 my grandfather wrote a simple chat program on a computer. You typed in a sentence to the computer and it replied with an answer. For an 11-year-old it was amazing: I tapped away at the keyboard and the computer answered. It really couldn’t even be classed as an AI since the responses were mainly pre-programmed. However, if you multiply that concept by several orders of magnitude then you start to get an idea of what a weak AI is. My Android smartphone can answer some fairly complex questions: “OK Google, will I need an umbrella tomorrow?”, “No, rain is not expected tomorrow.”

This is primitive weak AI. A machine intelligence which can process questions, recognize subject matters, track the context of a conversation and reply with meaningful answers. We have that today. Now multiply that by another several orders of magnitude and you have a true weak AI. The ability to interact with a machine in a natural way and get meaningful answers and output.

A general weak AI is one that can function in any environment. IBM’s Deep Blue couldn’t play Jeopardy! and Watson can’t play chess. Since they are computers they could of course be re-programmed, however they don’t function outside of their specific environments.

Strong AI

Strong AI is the name given to a theoretical computer system which actually is a mind, and to all intents and purposes is essentially the same as a human in terms of self-awareness, consciousness and sentience. It doesn’t simulate a self-aware being, it is self-aware. It doesn’t simulate understanding or abstract thinking, it is actually capable of understanding and abstract thinking. And so on.

When science fiction writers and philosophy professors talk about AI they are generally referring to strong AI. The HAL 9000 is a strong AI, as are the Cylons, Skynet, the machines that run the Matrix, and Isaac Asimov’s robots.

The thing about strong AI is that unless it is somehow deliberately restrained, it will theoretically be able to perform AI research itself, which means it can theoretically create other AIs, or reprogram itself and therefore grow.

Artificial superintelligence

Assuming that strong AI systems can reach the same level of general intelligence as a human, and assuming that they are able to create other AIs or reprogram themselves, then it is postulated that this will inevitably lead to the emergence of Artificial Superintelligence (ASI).

In his book “Superintelligence: Paths, Dangers, Strategies” Nick Bostrom hypothesizes about what that would mean for the human race. Assuming we are unable to control (i.e. restrain) an ASI, what would be the outcome? As you can imagine there are parts of his book which talk about the end of the human race as we know it. The idea is that the emergence of ASI will be a singularity, a moment that radically changes the course of the human race, including the possibility of extinction.

The “Strategies” part of Bostrom’s theoretical musings covers what we should be doing now to ensure that this singularity never happens. This is why Elon Musk says things like, “I’m increasingly inclined to think that there should be some regulatory oversight, maybe at the national and international level, just to make sure that we don’t do something very foolish.”

Reality check

Science fiction is fun, and Sci-Fi is one genre of book and film which I really like. But it is precisely that: fiction. Of course all good Sci-Fi is based on some science fact, and occasionally a bit of science fiction turns into science fact. However, just because we can imagine something, just because we can hypothesize about something, it doesn’t mean it is possible or will ever happen. When I was a kid we were only years away from flying cars, nuclear fusion power plants, and room-temperature superconductors. None of these ever arrived, and yet they were talked about with such credibility that you were convinced they would appear.

There are some very strong arguments against the emergence of strong AI and Artificial Superintelligence. One of the best arguments against the idea that an AI computer can have a mind was put forward by John Searle, an American philosopher and Professor of Philosophy at Berkeley. It is known as the Chinese Room argument, and it goes like this:

Imagine a locked room with a man inside who doesn’t speak Chinese. In the room he has a rule book which tells him how to respond to messages in Chinese. The rule book doesn’t translate the Chinese into his native language, it just tells him how to form a reply based on what he is given. Outside the room a native Chinese speaker passes messages to the man under the door. The man takes the message, looks up the symbols and follows the rules about which symbols to write in the reply. The reply is then passed to the person outside. Since the reply is in good Chinese the person outside the room will believe that the person inside the room speaks Chinese.

The key points are:

- The man in the room doesn’t speak Chinese.

- The man in the room doesn’t understand the messages.

- The man in the room doesn’t understand the replies he forms.

When this idea is applied to AI you can see very quickly that a complex computer program is able to mimic intelligence, but never actually has any. At no point does the machine gain understanding of what it is being told or what answers it is giving. As Searle put it, “syntax is insufficient for semantics.”
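To make the thought experiment concrete, here is a minimal Python sketch of the room as a lookup table. It is only an illustration under stated assumptions: the `RULE_BOOK` entries, the `FALLBACK_REPLY` and the `man_in_the_room` function are hypothetical names invented for this example, not anything taken from Searle, and a real rule book would be vastly larger.

```python
# Hypothetical rule book: maps an incoming message to the reply to write.
# The entries are illustrative assumptions, not taken from Searle's paper.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",
    "你会说中文吗？": "会，我会说中文。",
}

# Reply used when the rule book has no entry for the message (also assumed).
FALLBACK_REPLY = "请再说一遍。"


def man_in_the_room(message: str) -> str:
    """Return the reply the rule book prescribes for the given message.

    The function only matches symbols against the rule book. Nothing in it
    translates the message or models what the symbols mean.
    """
    return RULE_BOOK.get(message, FALLBACK_REPLY)


if __name__ == "__main__":
    # The person outside the room sees a fluent reply.
    print(man_in_the_room("你会说中文吗？"))
```

The replies may look fluent from outside, yet the code contains nothing but symbol matching, which is the point Searle’s argument turns on.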

Another argument against strong AI is that computers lack consciousness and that consciousness can’t be computed. The idea is the main subject of Sir Roger Penrose’s book, The Emperor’s New Mind. In the book he says, “with thought comprising a non-computational element, computers can never do what we human beings can.”

It is also interesting to note that not all AI experts think strong AI is possible. You would imagine that since this is their area of expertise they would all be very eager to promote the ideas of strong AI. For example, Professor Kevin Warwick of Reading University, who is sometimes known as “Captain Cyborg” due to his predisposition to implanting various bits of tech into his body, is a proponent of strong AI. However, Professor Mark Bishop of Goldsmiths, University of London, is a vocal opponent of strong AI. What is even more interesting is that Professor Warwick used to be Professor Bishop’s boss when they worked together in Reading. Two experts who worked together and yet have very different ideas about strong AI.

The conviction of things not seen

If faith is defined as “the conviction of things not seen” then you need to have faith that strong AI is possible. In many ways it is blind faith; you need to take a leap. There is not one shred of evidence that strong AI is possible.

Weak AI is clearly possible. Weak AI is about processing power, algorithms, and likely other techniques which haven’t been invented yet. We see it in its infancy now. We enjoy the benefits of these early progressions. But the idea that a computer can become a sentient being, well…

Since humans have consciousness and according to Sir Roger Penrose it isn’t computable, why do humans have consciousness? Penrose tries to explain it using quantum mechanics. However there is an alternative. What if we aren’t just biological machines? What if there is more to man?

The ghost in the machine

Philosophy, history and theology are peppered with the idea that man is more than just a clever monkey. The dualism of the body and mind is most often linked with René Descartes. He argued that everything can be doubted, even the existence of the body, but the fact that he could doubt means that he can think, and because he can think he exists. It is sometimes phrased like this, “Since I doubt, I think; since I think I exist,” or more commonly, “I think therefore I am.”

This notion of dualism is found in many different tenets of theology, for example: “God is spirit, and those who worship him must worship in spirit and truth.” This leads us to questions like: What is spirituality? What is love? Does man have eternity set into his heart?

Is it possible that we have words for things like spirit, soul, and consciousness because we are more than just a body? As one ancient writer put it, “for who knows a person’s thoughts except their own spirit within them?”

Wrap up

The biggest assumption made by believers in strong AI is that the human mind can be reproduced in a computer program, but if man is more than just a body with a brain on top, if the mind is the working of biology and something else, then strong AI will never be possible.

Having said that, the growth of weak AI is going to be fast. During Google I/O 2015 the search giant even included a section on deep neural networks in its keynote. These simple weak AIs are being used in Google’s search engine, in Gmail and in Google’s photo service.

Like most technologies the progress in the area will snowball, with each new step building on the work done previously. Ultimately services like Google Now, Siri and Cortana will become very easy to use (due to their natural language processing) and we will look back and chuckle at how primitive it all was, in the same way that we look back at VHS, vinyl records and analogue mobile phones.