Affiliate links on Android Authority may earn us a commission.

AlphaGo's victory: how it was achieved and why it matters

Out of sight and out of mind, machine learning is becoming a part of our everyday life, in applications ranging from face detection features in airport security cameras, to speech recognition and automatic translation software such as Google Translate, to virtual assistants like Google Now. Our very own Gary Sims had a nice introduction to machine learning which is available to watch here.

In scientific applications, machine learning is becoming an essential tool for analyzing what is called “Big Data”: information from hundreds of millions of observations, with hidden structures that would be practically impossible for us to understand without the computational power of supercomputers.

Very recently, DeepMind, Google’s AI-focused subsidiary, used its resources to master an ancient Chinese board game: Go.

What is special about Go is that, unlike chess, where the king is the most precious piece and must be defended, all the stones in Go have the same value. This means that, ideally, a player should pay the same level of attention to every part of the board to overcome the opponent. This feature makes Go computationally far more complex than chess, as the number of possible sequences of moves is astronomically larger. If you are not convinced, try dividing 250^150 (roughly the number of possible move sequences in a game of Go) by 35^80 (the equivalent for chess). The answer is not infinite, even though a calculator may report “infinity” because the numbers overflow its floating-point range, but it is around 10^236.
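The comparison above is easy to check exactly. A short sketch, reading both expressions in the usual way as b^d, where b is the typical number of legal moves per turn (the branching factor) and d is the typical length of a game in moves. Python’s integers have arbitrary precision, so the exact division never overflows:

```python
import math

# Rough game-tree sizes of the form b**d: b is the typical number of legal
# moves per turn (branching factor), d the typical length of a game in moves.
GO_B, GO_D = 250, 150
CHESS_B, CHESS_D = 35, 80

go_tree = GO_B ** GO_D            # exact: Python integers never overflow
chess_tree = CHESS_B ** CHESS_D

ratio = go_tree // chess_tree     # exact integer quotient: huge but finite
log10_ratio = GO_D * math.log10(GO_B) - CHESS_D * math.log10(CHESS_B)

# In floating point, 250.0 ** 150 exceeds the largest double (~1.8e308),
# which is why ordinary calculator arithmetic gives up on this comparison.
try:
    float_go = 250.0 ** 150
except OverflowError:
    float_go = math.inf

print(f"digits in the ratio: {len(str(ratio))}")
print(f"ratio is roughly 10^{log10_ratio:.1f}")
print(f"float result for 250.0 ** 150: {float_go}")
```

The ratio is a number with well over two hundred digits: unimaginably large, but finite.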

Because calculating every possibility is out of the question, expert Go players need to rely on their intuition about which move to make to overcome their opponents. Earlier forecasts held that it would take more than another decade of continuous work before machines could play Go at a level comparable to human expert players.

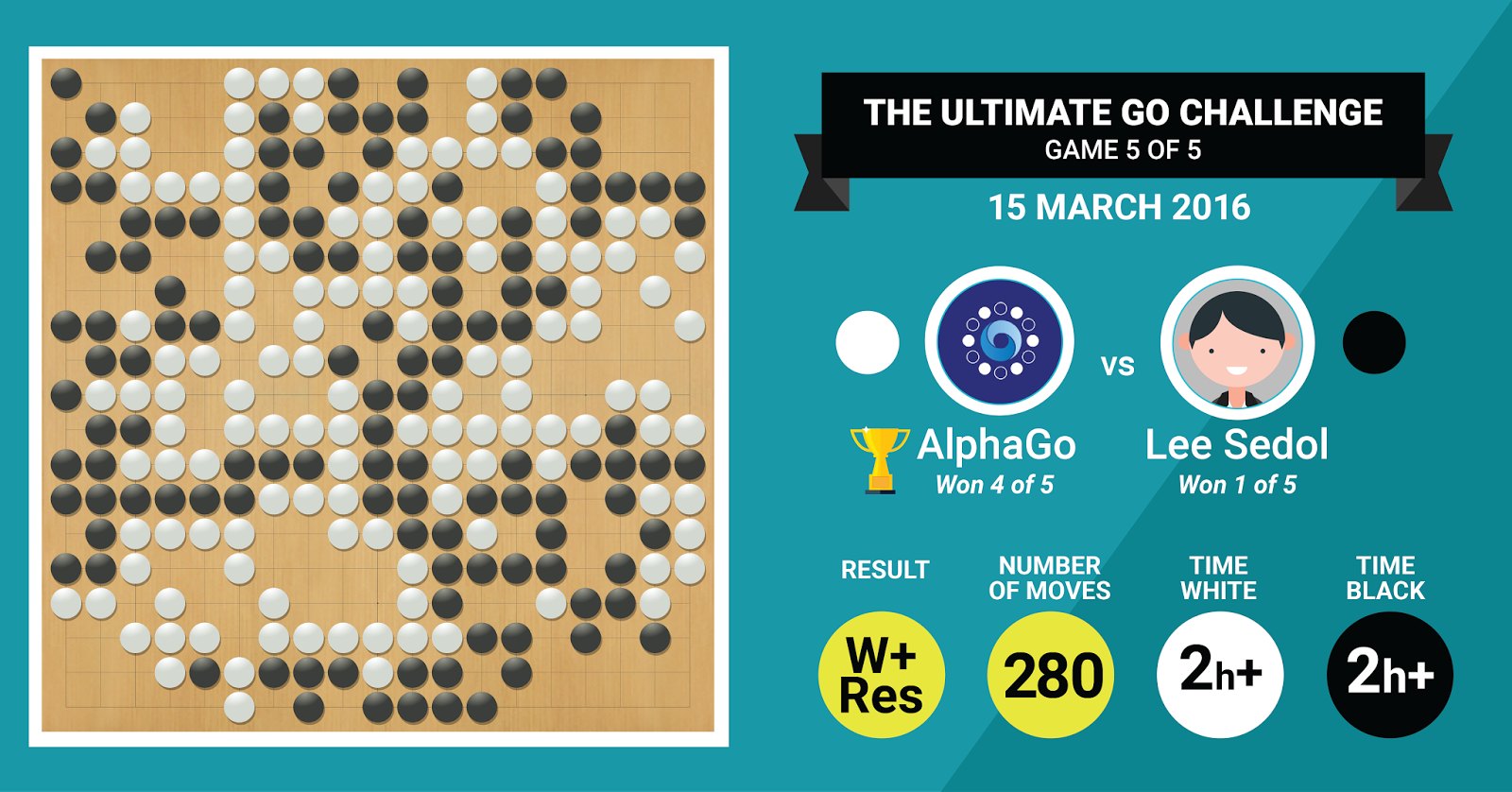

Yet this is exactly what DeepMind’s AlphaGo program has just achieved, beating legendary Go master Lee Sedol 4-1 in a five-game match.

Let us first hear what the masters of the art have to say about their work, and then move on to explaining how they did it.

The Hardware

Let’s start with the hardware behind the scenes and the training AlphaGo went through before taking on the European and the World Champions.

While making its decisions, AlphaGo ran a multi-threaded search (40 search threads), simulating the potential outcomes of each candidate move on 48 CPUs and 8 GPUs in its single-machine setting, or on a whopping 1,202 CPUs and 176 GPUs in its distributed form (the distributed version is the one that played the European Champion, Fan Hui).

Here, the computational power of GPUs is particularly important for accelerating decisions, as a GPU contains a much higher number of cores for parallel computing. Some of our more informed readers may know that NVIDIA is consistently investing to push this technology further (for example, its Titan Z graphics card has 5,760 CUDA cores).

Compare this computational power with, for example, our own research on human decision making, in which we typically use 6- or 12-core Xeon workstations with professional-grade GPUs, which sometimes need to work in tandem for six days straight in order to make estimates about human decisions.

Why does AlphaGo need this massive computational power to achieve expert-level decision accuracy? The simple answer is the vast number of possible outcomes that can branch out from the current state of the board in a game of Go.

The vast amount of information to be learned

AlphaGo started its training by analyzing still snapshots of boards with stones in various positions, drawn from a database containing 30 million positions from 160,000 different games played by expert human players, and learning to predict the move that was played next in each position. This is very similar to the way object recognition algorithms, or what is called machine vision, work; the simplest example is face detection in camera apps. This first stage took three weeks to complete.
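A minimal sketch of what such a “still picture” looks like to a network, assuming a text board where 'B', 'W' and '.' mark black stones, white stones and empty points: each position is split into binary planes, much like the color channels of an image. The three planes here (the stones of the player to move, the opponent’s stones, and empty points) are only an illustration; AlphaGo’s published input used many more hand-designed feature planes.

```python
from typing import List

Plane = List[List[int]]


def encode_position(rows: List[str], to_move: str) -> List[Plane]:
    """Split a board into three 0/1 planes: stones of the player to move,
    the opponent's stones, and empty points. Cells use 'B', 'W' or '.'."""
    if to_move not in ("B", "W"):
        raise ValueError("to_move must be 'B' or 'W'")
    opponent = "W" if to_move == "B" else "B"

    def plane(symbol: str) -> Plane:
        # 1 where the cell holds `symbol`, 0 everywhere else
        return [[1 if cell == symbol else 0 for cell in row] for row in rows]

    return [plane(to_move), plane(opponent), plane(".")]


# A small 5x5 example board (real Go boards are 19x19).
board = [
    ".....",
    ".BW..",
    "..B..",
    ".....",
    ".....",
]
own, opp, empty = encode_position(board, to_move="W")
for row in own:
    print(row)
```

Because every point is exactly one of “own stone”, “opponent stone” or “empty”, the three planes always add up to 1 at each point.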

Of course, studying the moves of expert players alone is not enough: imitating experts is not the same as playing to win against a world-class opponent. This is the second level of training, in which AlphaGo used reinforcement learning, playing 1.3 million simulated games against itself to learn how to win; this took one day to complete on 50 GPUs.
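The self-play stage rests on a policy-gradient idea: after each game, make the moves that were played a little more likely if the game was won, and a little less likely if it was lost. A minimal sketch of that update (known as REINFORCE) on a softmax policy, using a hypothetical three-move toy “game” in place of real self-play games of Go:

```python
import math
import random
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1."""
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_step(logits: List[float], action: int, outcome: float,
                   lr: float = 0.1) -> List[float]:
    """One REINFORCE update on a softmax policy.

    The gradient of log pi(action) w.r.t. the logits is onehot(action) - probs;
    scaling it by the outcome (+1 win, -1 loss) makes winning moves more likely
    and losing moves less likely."""
    probs = softmax(logits)
    return [
        x + lr * outcome * ((1.0 if i == action else 0.0) - p)
        for i, (x, p) in enumerate(zip(logits, probs))
    ]


# Hypothetical toy "game": three candidate moves, and only move 2 wins.
rng = random.Random(0)
logits = [0.0, 0.0, 0.0]
for _ in range(500):
    action = rng.choices(range(3), weights=softmax(logits))[0]
    outcome = 1.0 if action == 2 else -1.0
    logits = reinforce_step(logits, action, outcome)

print([round(p, 3) for p in softmax(logits)])
```

The toy policy gradually concentrates its probability on the winning move. AlphaGo applied the same kind of update to the weights of a deep network, with the outcome of each self-play game as the reward.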

Finally, AlphaGo was trained to assign a value to any given position of stones on the board, that is, to predict whether a particular move, and the position it leads to, would eventually result in a win or a loss at the end of the game. In this final stage, it analysed and learned from 1.5 billion (!) positions using 50 GPUs, and this stage took another week to complete.
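This value stage is a regression problem: given a position, predict the final outcome z (+1 for a win, -1 for a loss) and minimize the squared error between prediction and outcome. A minimal sketch with a linear model trained by stochastic gradient descent; the single “score lead” feature and the rule that the leader always wins are hypothetical simplifications standing in for a deep network and real game records:

```python
import random
from typing import List


def predict(weights: List[float], features: List[float]) -> float:
    """Linear value estimate v(s) = w . x (AlphaGo used a deep network)."""
    return sum(w * x for w, x in zip(weights, features))


def sgd_step(weights: List[float], features: List[float], outcome: float,
             lr: float = 0.05) -> List[float]:
    """One gradient step on the squared error 0.5 * (v(s) - z) ** 2."""
    error = predict(weights, features) - outcome
    return [w - lr * error * x for w, x in zip(weights, features)]


rng = random.Random(1)
weights = [0.0, 0.0]  # [bias, weight for the hypothetical score-lead feature]
for _ in range(5000):
    lead = rng.uniform(-1.0, 1.0)          # hypothetical feature in [-1, 1]
    outcome = 1.0 if lead > 0 else -1.0    # toy rule: the leader goes on to win
    weights = sgd_step(weights, [1.0, lead], outcome)

print(round(predict(weights, [1.0, 0.8]), 2), round(predict(weights, [1.0, -0.8]), 2))
```

After training, positions with a clear lead get a clearly positive value and positions with a clear deficit a clearly negative one, which is the kind of judgement the value network supplies during a game.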

Convolutional Neural Networks

The way AlphaGo mastered these learning sessions falls into the domain of what are known as Convolutional Neural Networks, a technique loosely based on the way neurons in the human brain communicate with each other. In our brain, different kinds of neurons are specialized to process different features of external stimuli (for example, the color or shape of an object). These different neural processes are then combined to form our complete perception of that object, for example, recognizing it as a green Android figurine.

Similarly, AlphaGo convolves information (related to its decisions) coming from different layers, and combines it into a single score for each possible move, which guides the decision about whether or not to make that move.
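To make “convolution” concrete: a small filter slides across the board and, at every point, computes a weighted sum of the neighbourhood around it. A minimal single-filter sketch with a hand-picked 3×3 filter that counts orthogonal neighbours; in AlphaGo’s networks the filter weights are learned, and many filters are stacked in many layers.

```python
from typing import List

Grid = List[List[float]]


def conv2d_same(plane: Grid, kernel: Grid) -> Grid:
    """Slide a k x k kernel (k odd) over the plane with zero padding, so the
    output has the same size as the board. As in most deep-learning layers,
    the kernel is not flipped (strictly, a cross-correlation)."""
    rows, cols = len(plane), len(plane[0])
    k = len(kernel)
    pad = k // 2
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total = 0.0
            for i in range(k):
                for j in range(k):
                    rr, cc = r + i - pad, c + j - pad
                    if 0 <= rr < rows and 0 <= cc < cols:  # outside = zero padding
                        total += kernel[i][j] * plane[rr][cc]
            out[r][c] = total
    return out


# Hand-picked filter: counts stones on the four orthogonally adjacent points.
neighbour_kernel = [
    [0, 1, 0],
    [1, 0, 1],
    [0, 1, 0],
]
stones = [[0] * 5 for _ in range(5)]
stones[2][2] = 1  # a single stone in the centre of a 5x5 board
for row in conv2d_same(stones, neighbour_kernel):
    print(row)
```

The output lights up on the four points touching the stone, a simple local pattern; deeper layers combine such patterns into larger ones.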

So, in brief, convolutional neural networks supply AlphaGo with the information it needs to reduce big, multi-dimensional data to a simple final output: how promising a given move is.

The way decisions are made

So far, we have briefly explained how AlphaGo learned from previous games played by human Go experts and refined its learning to guide its decisions towards winning. But we have not explained how AlphaGo orchestrated all of these processes during the game, in which it had to make decisions fairly quickly, around five seconds per move.

Considering that the potential number of combinations is intractable, AlphaGo needs to focus its attention on specific parts of the board, which it considers to be more important to the outcome of the game based on previous learning. Let us call these the “high-value” regions where the competition is more fierce and/or which are more likely to determine who wins in the end.

Remember, AlphaGo identifies these high-value regions based on what it learned from expert players. In the next step, AlphaGo constructs “decision trees” in these high-value regions, which branch out from the current state of the board. In this way, the initial quasi-infinite search space (if you take the whole board into account) is reduced to a much smaller search space which, although still huge, becomes computationally manageable.
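In the published description of AlphaGo, this focusing is soft: the policy network’s probabilities act as priors that steer the tree search toward promising moves, while a bonus term keeps some exploration alive. A minimal sketch of such a selection rule (a PUCT-style formula); the move labels, prior values and the exploration constant c_puct = 5.0 are illustrative assumptions:

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class EdgeStats:
    prior: float              # P(s, a): policy-network probability for the move
    visits: int = 0           # N(s, a): how often the search has tried the move
    total_value: float = 0.0  # W(s, a): sum of evaluations backed up through it

    @property
    def mean_value(self) -> float:
        """Q(s, a): average evaluation so far (0 for an untried move)."""
        return self.total_value / self.visits if self.visits else 0.0


def select_move(edges: Dict[str, EdgeStats], c_puct: float = 5.0) -> str:
    """Pick the move maximising Q + U, where the exploration bonus U is large
    for moves with a high prior and few visits so far."""
    # +1 inside the square root lets the priors matter before any visits
    # (a common tweak, assumed here).
    sqrt_total = math.sqrt(sum(e.visits for e in edges.values()) + 1)

    def score(edge: EdgeStats) -> float:
        bonus = c_puct * edge.prior * sqrt_total / (1 + edge.visits)
        return edge.mean_value + bonus

    return max(edges, key=lambda move: score(edges[move]))


edges = {
    "D4": EdgeStats(prior=0.7),
    "Q16": EdgeStats(prior=0.2),
    "K10": EdgeStats(prior=0.1),
}
print(select_move(edges))  # no visits yet: the highest prior wins
edges["D4"].visits, edges["D4"].total_value = 10, 0.0
print(select_move(edges))  # D4's bonus has shrunk, so Q16 gets explored
```

Moves with tiny priors are rarely expanded, which is how the search effectively concentrates on a small part of the full tree without ever ruling moves out completely.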

Within this relatively constrained search space, AlphaGo uses parallel processes to make its final decision. First, it uses the power of CPUs to run quick simulations, at around 1,000 simulations per second per CPU thread. The number of game trajectories it can simulate in the roughly five seconds it has per move therefore scales with the number of threads: about 5,000 per thread, so eight million trajectories would correspond to some 1,600 threads running in parallel.
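A simulation here means playing a game out quickly to the end and recording who won; averaging many such playouts gives a rough value for each candidate move. A minimal sketch of that Monte Carlo idea on a hypothetical toy game (a pile of stones, each player removes 1 to 3, whoever takes the last stone wins), using uniformly random moves where AlphaGo used a fast, learned rollout policy:

```python
import random
from typing import Dict, List


def legal_moves(pile: int) -> List[int]:
    """In the toy game a player removes 1, 2 or 3 stones (never more than remain)."""
    return [take for take in (1, 2, 3) if take <= pile]


def random_rollout(pile: int, rng: random.Random) -> bool:
    """Play uniformly random moves until the pile is empty (pile must be > 0).
    Returns True if the player to move at the start takes the last stone."""
    starter_to_move = True
    while True:
        pile -= rng.choice(legal_moves(pile))
        if pile == 0:
            return starter_to_move
        starter_to_move = not starter_to_move


def estimate_move_values(pile: int, n_rollouts: int,
                         rng: random.Random) -> Dict[int, float]:
    """Monte Carlo estimate of the win rate of each candidate move."""
    values: Dict[int, float] = {}
    for move in legal_moves(pile):
        remaining = pile - move
        if remaining == 0:
            values[move] = 1.0  # we took the last stone: an immediate win
            continue
        # After our move the opponent starts the rollout, so we win when they lose.
        wins = sum(not random_rollout(remaining, rng) for _ in range(n_rollouts))
        values[move] = wins / n_rollouts
    return values


values = estimate_move_values(pile=3, n_rollouts=2000, rng=random.Random(0))
print(values)
print("best move:", max(values, key=values.__getitem__))
```

Each rollout is cheap and individually unreliable, but thousands of them together give a useful signal; AlphaGo’s speed comes from running very many of them in parallel.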

In parallel, the GPUs convolve information using two different neural networks (trained models for processing information, with moves that are illegal under the rules of the game excluded from consideration). One network, called the policy network, reduces the multi-dimensional board data to a probability for each possible move, indicating how likely it is to be the better move to make. The second network, called the value network, predicts from a given board position, such as the one reached after a candidate move, whether the game is likely to end in a win or a loss.
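The policy network’s output can be pictured as one raw score per board point, turned into probabilities with a softmax. A minimal sketch, assuming illegal moves are handled by masking them out before the softmax (one common way to enforce the rules; the move names and scores are made up):

```python
import math
from typing import Dict, Set


def move_probabilities(scores: Dict[str, float], legal: Set[str]) -> Dict[str, float]:
    """Softmax over the legal moves only; illegal moves get probability 0."""
    legal_scores = {m: s for m, s in scores.items() if m in legal}
    if not legal_scores:
        raise ValueError("no legal moves")
    top = max(legal_scores.values())  # subtract the max for numerical stability
    exps = {m: math.exp(s - top) for m, s in legal_scores.items()}
    total = sum(exps.values())
    return {m: exps.get(m, 0.0) / total for m in scores}


# Made-up raw scores; K10 is assumed to be occupied, hence illegal.
scores = {"D4": 2.0, "Q16": 1.0, "K10": 3.0}
probs = move_probabilities(scores, legal={"D4", "Q16"})
print({m: round(p, 3) for m, p in probs.items()})
```

Even though K10 has the highest raw score, it receives zero probability, and the remaining probability is shared among the legal moves in proportion to their exponentiated scores.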

AlphaGo then weighs the suggestions of these parallel processes and, when they conflict, resolves this by selecting the most frequently suggested move. Additionally, while the opponent is thinking about a response, AlphaGo uses the time to feed the information it has acquired back into its own repository, in case it proves informative later in the game.
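In the published description of AlphaGo, the two sources of evidence are blended at each position the search reaches: the value network’s estimate and the outcome of a fast rollout are averaged with a mixing weight (0.5 in the published system). Once the search time is up, the move played is the one the search visited most often, the closest counterpart to “the most frequently suggested move”. A minimal sketch of both steps; the visit counts in the example are made up:

```python
from typing import Dict


def evaluate_leaf(value_estimate: float, rollout_outcome: float,
                  mixing: float = 0.5) -> float:
    """Blend the value network's estimate (in [-1, 1]) with a rollout result
    (+1 win, -1 loss); mixing=0.5 weights the two equally."""
    return (1.0 - mixing) * value_estimate + mixing * rollout_outcome


def choose_move(visit_counts: Dict[str, int]) -> str:
    """After the search, play the most-visited move at the root."""
    return max(visit_counts, key=visit_counts.__getitem__)


print(evaluate_leaf(0.2, 1.0))
print(choose_move({"D4": 120, "Q16": 340, "C3": 40}))
```

Choosing by visit count rather than by the single best-looking evaluation is a robust design: a move only accumulates many visits if it keeps looking good as the search digs deeper.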

In summary, the intuitive explanation for AlphaGo’s success is that it starts its decision making from the potentially high-value regions of the board, just like a human expert player, but from there on it can carry out far more computation than a human to forecast how the game could take shape. Additionally, it makes its decisions with an extremely small margin of error, which can never be achieved by a human, simply because we have emotions: we feel pressure under stress and we feel fatigue, all of which can affect our decision making negatively. In fact, the European Go Champion, Fan Hui (a 2 dan professional), who lost 5-0 to AlphaGo, admitted after one game that, on one occasion, he would ideally have preferred to make a move that AlphaGo had predicted.

At the time I was writing this commentary, AlphaGo was competing against Lee Sedol, a 9 dan professional who is also the most frequent winner of world championships over the last decade, with a $1 million prize at stake. The final result of the match was in AlphaGo’s favor: the algorithm won four games out of five.

Why I am excited

I personally find the recent developments in machine learning and AI simply fascinating, and their implications staggering. This line of research will help us conquer key public health challenges, such as mental health disorders and cancer. It will help us understand the hidden structures of information in the vast amount of data that we are collecting from outer space. And that’s just the tip of the iceberg.

I find the way AlphaGo makes its decisions closely related to previous accounts of how the human mind works, which showed that we make our decisions by reducing the search space in our minds, cutting off certain branches of a decision tree (like pruning a Bonsai tree). Similarly, a recent study of expert Shogi (Japanese chess) players showed that their brain signals during the game resemble the values predicted by a Shogi-playing computer algorithm for each move.

This means that machine learning and recent developments in AI will also help us to have a unified understanding of how the human mind works, which is regarded as another frontier, just like outer space.

Why I am worried

You might remember the recent comments by Bill Gates and Stephen Hawking that advancements in AI may turn out to be dangerous for human existence in the long term. I share these worries to an extent and, in a sci-fi, apocalyptic fashion, invite you to consider a scenario in which two countries are at war. What happens if satellite images of the war zone are fed into a powerful AI (replacing Go’s board and stones)? Does this eventually lead to SkyNet from the Terminator movies?

Please comment down below and share your thoughts!