What is VSync and why should you use it (or not)

We need PC games to be perfect. After all, we’re dumping loads of cash into the hardware so we can get the most immersive experience possible. But there’s always some type of hiccup, whether it’s a fault in the game itself, issues stemming from hardware, and so on. One glaring problem could be screen tearing, a graphic anomaly that seemingly stitches the screen together using ripped strips of a photograph. You’ve seen a game setting called VSync that supposedly fixes this issue. What is VSync and should you use it? We offer a simplified explanation.

If you’re rather new to PC gaming, we’ll first look at two important terms you need to know to understand why you may need VSync. First, we’ll cover your monitor’s refresh rate, followed by the output of your PC. Both have everything to do with the screen-tearing anomaly. Some of this will be slightly technical so you’ll understand why the anomaly happens in the first place.

Input: Understanding refresh rate

The first half of the equation is your display’s refresh rate. Believe it or not, it’s currently updating what you see multiple times per second, though you likely can’t see it. If your display didn’t update (or refresh), then all you’d see is a static image.

You need to see movement even at the most basic level. If you’re not watching video or playing games, the display still needs to update so you can see where the mouse cursor moves, what you’re typing, and so on.

Refresh rates are defined in hertz, a unit of frequency.

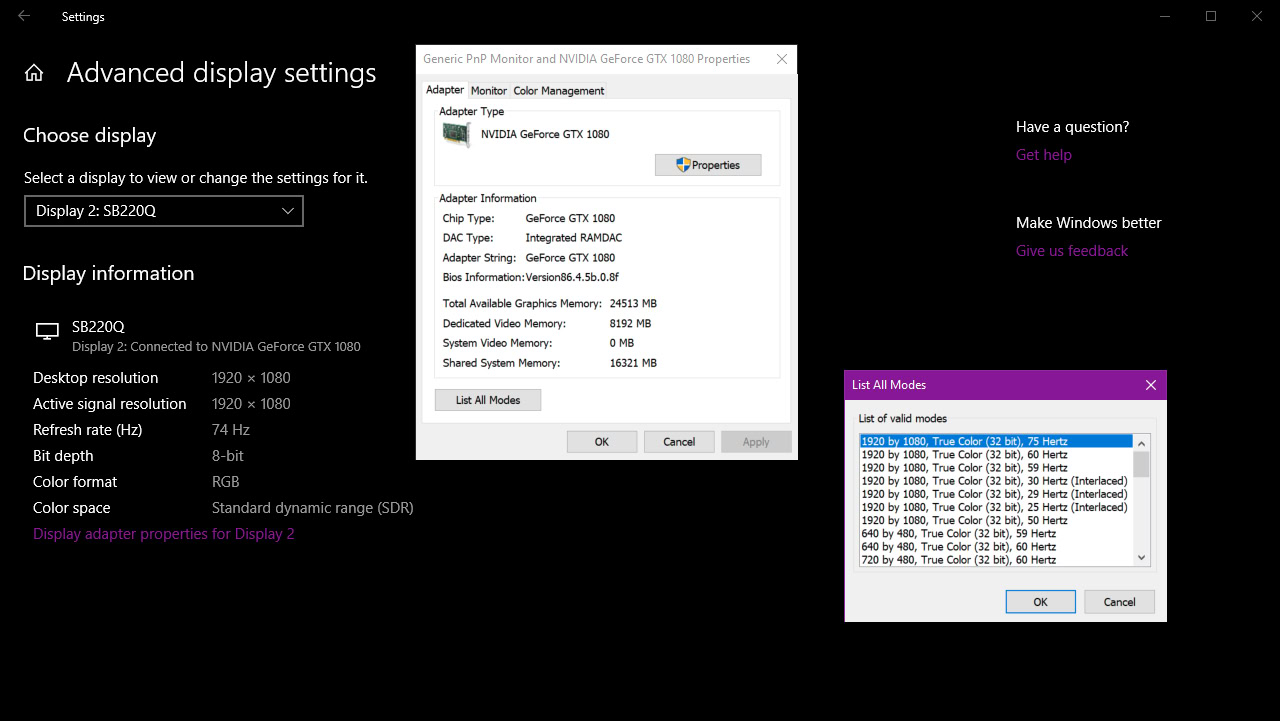

If you have a display with a 60Hz refresh rate, then it refreshes each pixel 60 times per second. If your panel does 120Hz, then it can refresh each pixel 120 times per second. Thus, the more updates each second, the smoother the experience.

The goal of a high refresh rate is to reduce the common motion blurring problem associated with LCD and OLED panels. Actually, you are part of the problem: Your brain predicts the path of motion faster than the display can render the next image. Increasing the refresh rate helps but typically other technologies are required to minimize the blur.

Modern mainstream desktop displays typically have a resolution of 1,920 x 1,080 at 60Hz. However, that’s pretty much the baseline now. For example, the laptop we’re using right now is running at 3,200 x 2,000 at 90Hz, while our secondary machine is running at 2,560 x 1,440 at 165Hz.

Now let’s follow your HDMI, DisplayPort, DVI, or VGA cable back to the source: Your gaming PC.

Output Part 1: Understanding framerate

This is the other half of the equation. Movies, TV shows, and games are nothing more than a sequence of images. There’s no actual movement involved. Instead, these images trick your brain into perceiving movement based on the contents of each image, or frame.

Movies and many TV shows in North America typically run at 24 frames per second (24fps), often written as 24Hz. We’ve grown accustomed to the low framerate even though our eyes can pick up on far faster changes. Movies and TV shows are designed to be an escape from reality, and the low 24Hz rate helps preserve that dream-like state.

Higher framerates, as seen with The Hobbit trilogy shot at 48Hz, move jarringly close to real-world motion. In fact, live video jumps up to 30Hz or 60Hz, depending on the broadcast. James Cameron initially targeted 60Hz with Avatar 2 but dropped the rate down to 48Hz.

Gaming is different. You don’t want that dream-like state. You want immersive, fluid, real-world-like action because, in your mind, you’re participating in another reality. A game running at 30 frames per second is tolerable, but it’s just not liquid-smooth. You’re fully aware that everything you do and see is based on moving images, killing the immersion.

Jump up to 60 frames per second and you’ll feel more connected with the virtual world. Movements are fluid, like watching live video. The illusion gets even better if your gaming machine and display can handle 120Hz and 240Hz. That’s eye candy right there, folks.

Output Part 2: The GPU, and the computing pipeline

Your PC’s graphics processing unit, or GPU, handles the rendering load. Since reaching system memory over the PCIe bus is comparatively slow, a discrete GPU has its own memory to temporarily store graphics-related assets, like textures, models, and frames.

Meanwhile, your CPU handles most of the math: Game logic, artificial intelligence (NPCs, etc), input commands, calculations, online multiplayer, and so on. System memory temporarily holds everything the CPU needs to run the game (scratch pad) while the hard drive or SSD stores everything digitally (file cabinet).

All four factors – GPU, CPU, memory, and storage – play a part in your game’s overall output. The goal is for the GPU to render as many frames as possible per second. A common target is 60, and the higher the frame count, the better the visual experience.

That said, output largely depends on the hardware and software environment. For instance, while your CPU handles everything needed to run the game, it’s also dealing with external processes required to run your computer. Temporarily shutting down some of these processes helps, but generally, you want a super-fast CPU, so Windows isn’t interfering with gameplay.

Other elements affect output: A slow or fragmented drive, slow system memory, or a GPU that just can’t handle all the action at a specific resolution. If your game runs at 10 frames per second because you insist on playing at 4K, your GPU is most likely the bottleneck. But even if you have more than you need to run a game, the on-screen action handled by both the GPU and CPU may be momentarily overwhelming, dropping the framerate. Heat is another framerate killer.

The bottom line is that framerates fluctuate. This fluctuation stems from the rendering load, the underlying hardware, and the operating system. Even if you toggle an in-game setting that caps the framerate, you may still see fluctuations.
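To make that fluctuation concrete, here is a minimal Python sketch that turns a list of per-frame render times into an average, lowest, and highest framerate. The function name `fps_summary` and the sample frame times are hypothetical, chosen only for illustration.

```python
def fps_summary(frame_times_ms):
    """Return (average, lowest, highest) framerate for a list of frame times in ms."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # Average fps is the number of frames divided by the total elapsed time.
    total_seconds = sum(frame_times_ms) / 1000.0
    average = len(frame_times_ms) / total_seconds
    # The slowest frame gives the lowest instantaneous fps, and vice versa.
    lowest = 1000.0 / max(frame_times_ms)
    highest = 1000.0 / min(frame_times_ms)
    return average, lowest, highest


# Hypothetical frame times in milliseconds, not measured data.
sample = [10.0, 20.0, 10.0, 40.0, 20.0]
avg, low, high = fps_summary(sample)
print(f"average {avg:.0f}fps, lowest {low:.0f}fps, highest {high:.0f}fps")
```

Even in this tiny sample, a game that averages 50 frames per second swings between 25 and 100 from one frame to the next.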

Why do you need VSync? Screen tearing explained

So let’s breathe for a moment and recap. Your display – the input – draws an image multiple times per second. This number typically does not fluctuate. Meanwhile, your PC’s graphics chip – the output – renders an image multiple times per second. This number does fluctuate.

The problem with this scenario is an ugly graphics anomaly called screen tearing. Let’s get a little technical to understand why.

The GPU has a special spot in its dedicated memory (VRAM) for frames called the frame buffer. This buffer splits into Primary (front) and Secondary (back) buffers. The current completed frame resides in the Primary buffer and is delivered to the display during the refresh. The Secondary (back) buffer is where the GPU renders the next frame.

Once the GPU completes a frame, these two buffers swap roles: The Secondary buffer becomes the Primary and the former Primary now becomes the Secondary. The game then has the GPU render a new frame in the new Secondary buffer.
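The swap described above can be sketched in a few lines of Python. This is a toy model under our own assumptions, not how any real driver is written: the `DoubleBuffer` class and its method names are hypothetical, and frames are represented by plain strings.

```python
class DoubleBuffer:
    """Toy model of a frame buffer with a front (Primary) and back (Secondary) half."""

    def __init__(self):
        self.front = None  # completed frame the display reads from
        self.back = None   # frame the GPU is currently drawing

    def render(self, frame):
        # The GPU only ever draws into the back buffer.
        self.back = frame

    def swap(self):
        # The two buffers trade roles; no pixel data is copied.
        self.front, self.back = self.back, self.front


buffers = DoubleBuffer()
buffers.render("frame 1")
buffers.swap()             # frame 1 is now what the display sees
buffers.render("frame 2")  # the GPU draws frame 2 behind the scenes
```

The key point is that a swap is just a change of roles, which is why it can happen almost instantly at any moment.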

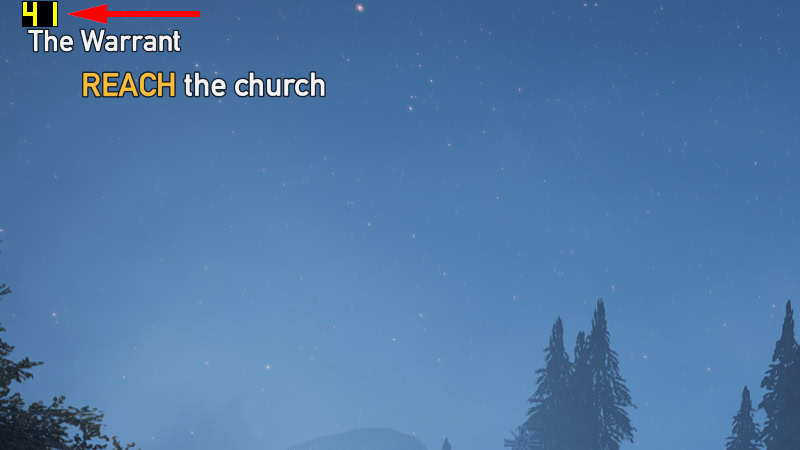

Here’s the problem. Buffer swaps can happen at any time. When the display signals that it’s ready for a refresh and the GPU sends a frame over the wire (HDMI, DisplayPort, VGA, DVI), a buffer swap may be underway. After all, the GPU is rendering faster than the display can refresh. As a result, the display draws part of the first completed frame stored in the old Primary, and part of the second completed frame in the new Primary.

So if your view changed between two frames, the on-screen result will show a fractured scene: The top showing one angle and the bottom showing another angle. You may even see three strips stitched together as seen in NVIDIA’s sample screenshot shown above.

This screen tearing is mostly noticeable when the camera moves horizontally. The virtual world seemingly separates horizontally like invisible scissors cutting up a photograph. It’s annoying and pulls you out of the immersion. However, because the display draws each image line by line from top to bottom, the tear lines always run across the screen; you won’t see tears running up and down it.
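A small simulation shows how a badly timed swap produces a torn picture. It is a simplified sketch that assumes the display reads the front buffer one row at a time from top to bottom; the function `scan_out` and its parameters are hypothetical names used for illustration.

```python
def scan_out(rows, swap_at_row, old_frame, new_frame):
    """Return which frame each displayed row came from when a swap lands mid-refresh."""
    displayed = []
    front = old_frame
    for row in range(rows):
        if row == swap_at_row:
            # The buffer swap happens while the display is still reading rows.
            front = new_frame
        displayed.append(front)
    return displayed


# Hypothetical 6-row display; the swap lands just before row 4 is read.
picture = scan_out(rows=6, swap_at_row=4, old_frame="A", new_frame="B")
print(picture)
```

The top four rows come from frame A and the bottom two from frame B, with the boundary between them forming the horizontal tear.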

What is VSync and what does it do?

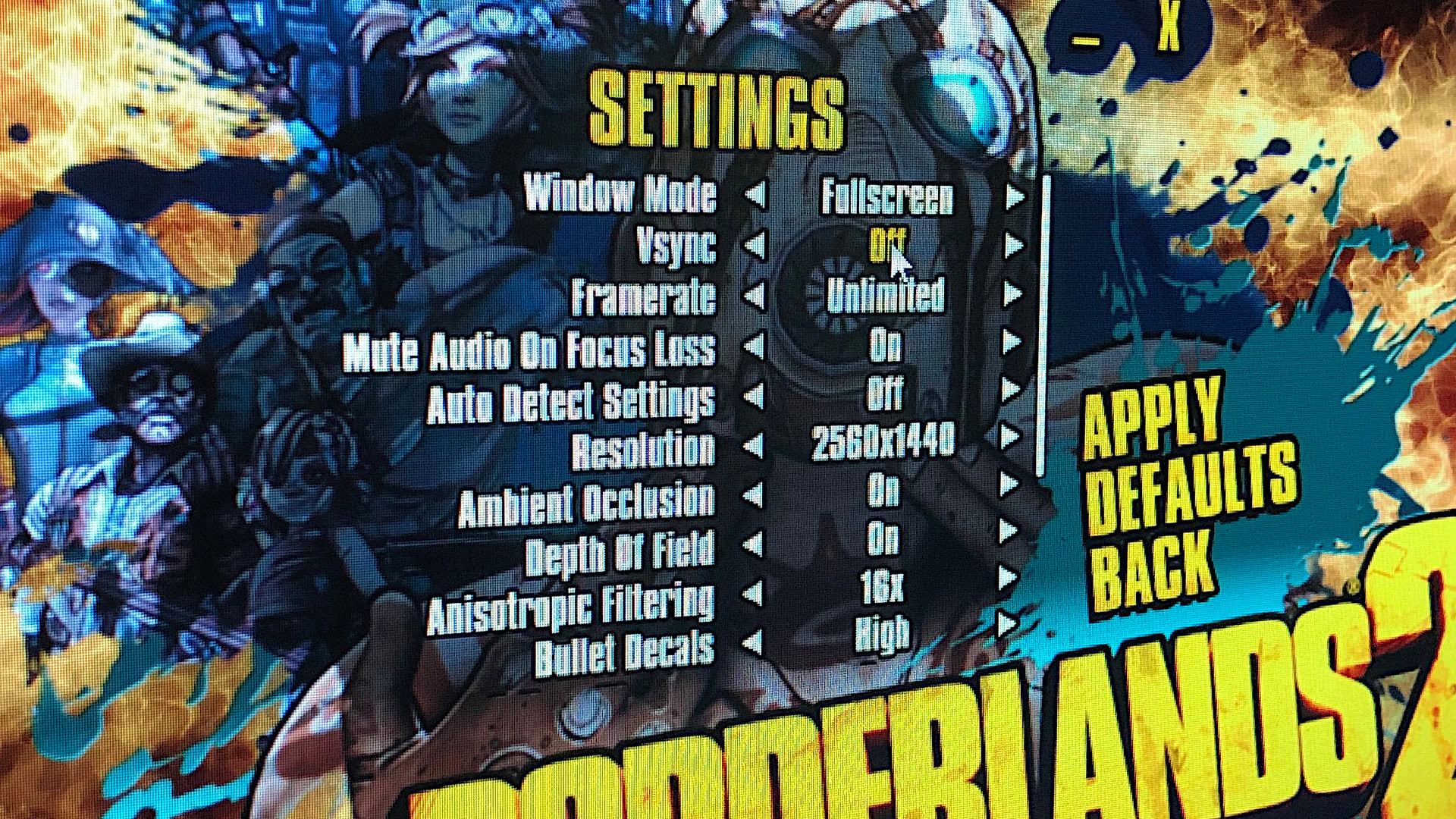

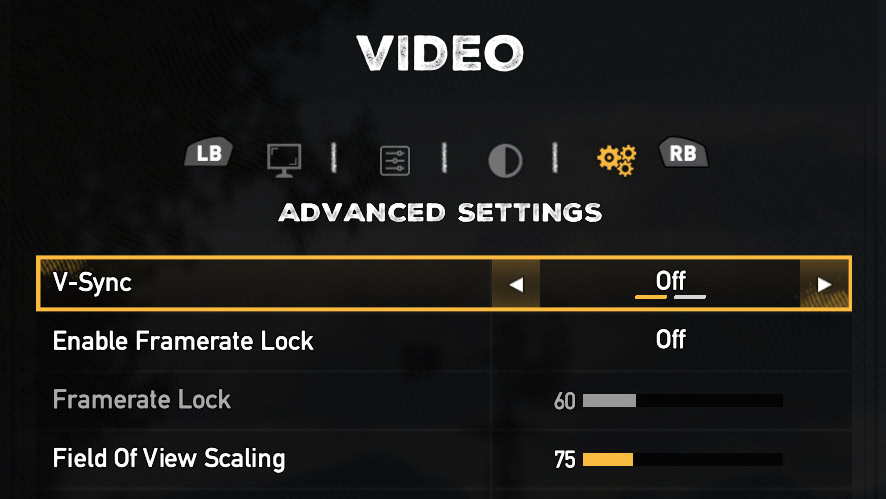

You can reduce screen tearing with a software solution called Vertical Synchronization (VSync, V-Sync), which most games provide as a toggle. It prevents the GPU from swapping buffers until the display finishes a refresh. The GPU sits idle until it’s given the green light to swap buffers and render a new image.

In other words, the game’s framerate won’t go higher than the display’s refresh rate. For instance, if the display can only do 60Hz at 1,920 x 1,080, VSync will lock the framerate at 60 frames per second. No more screen tearing.

But there’s a side effect. If your PC’s GPU can’t keep a stable framerate that matches the display’s refresh rate, you’ll experience visual “stuttering.” That means the GPU is taking longer to render a frame than it takes the monitor to refresh. For example, the display may refresh twice using the same frame while it waits for the GPU to send over a new frame. Rinse and repeat.

As a result, VSync will drop the game’s framerate to 50 percent of the refresh rate. This creates another problem: lag. There’s nothing wrong with your mouse, keyboard, or game controller. It’s not an issue on the input side. Instead, you’re simply experiencing visual latency.
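The cap and the halving effect can both be estimated with a little arithmetic. The sketch below is a simplified model: it assumes double buffering and a GPU that takes the same time to render every frame, which real games do not do. The function name `vsync_framerate` is hypothetical.

```python
import math


def vsync_framerate(refresh_hz, gpu_fps):
    """Estimate the displayed framerate with double-buffered VSync turned on."""
    if refresh_hz <= 0 or gpu_fps <= 0:
        raise ValueError("rates must be positive")
    # Each frame has to wait for a whole number of refreshes before it is shown.
    refreshes_per_frame = math.ceil(refresh_hz / gpu_fps)
    return refresh_hz / refreshes_per_frame


for gpu_fps in (100, 60, 50, 25):
    print(f"GPU at {gpu_fps}fps on a 60Hz display: {vsync_framerate(60, gpu_fps):.0f}fps")
```

Under these assumptions, a GPU that can render 100 frames per second is capped at 60, while one that manages only 50 falls all the way to 30 because every frame misses one refresh.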

Why? Because the game acknowledges your input, but the GPU is forced to delay frames. That translates to a longer period between your input (movement, fire, etc) and when that input appears on the screen.

The amount of input lag depends on the game engine. Some may produce large amounts while others have minimal lag. It also depends on the display’s maximum refresh rate. For instance, if your screen does 60Hz, then you may see lag up to 16 milliseconds. On a 120Hz display, you could see up to 8 milliseconds. That’s not ideal in competitive games like Overwatch, Fortnite, and Quake Champions.
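Those figures come from the length of a single refresh, since a finished frame may have to wait up to one full refresh interval before the display shows it. Here is a quick sketch of the arithmetic; the helper name `refresh_interval_ms` is our own.

```python
def refresh_interval_ms(refresh_hz):
    """Return the time between two display refreshes in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


for hz in (60, 120, 240):
    print(f"{hz}Hz: up to {refresh_interval_ms(hz):.1f} ms")
# 60Hz: up to 16.7 ms
# 120Hz: up to 8.3 ms
# 240Hz: up to 4.2 ms
```

The 16 and 8 millisecond numbers quoted above are these values rounded down.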

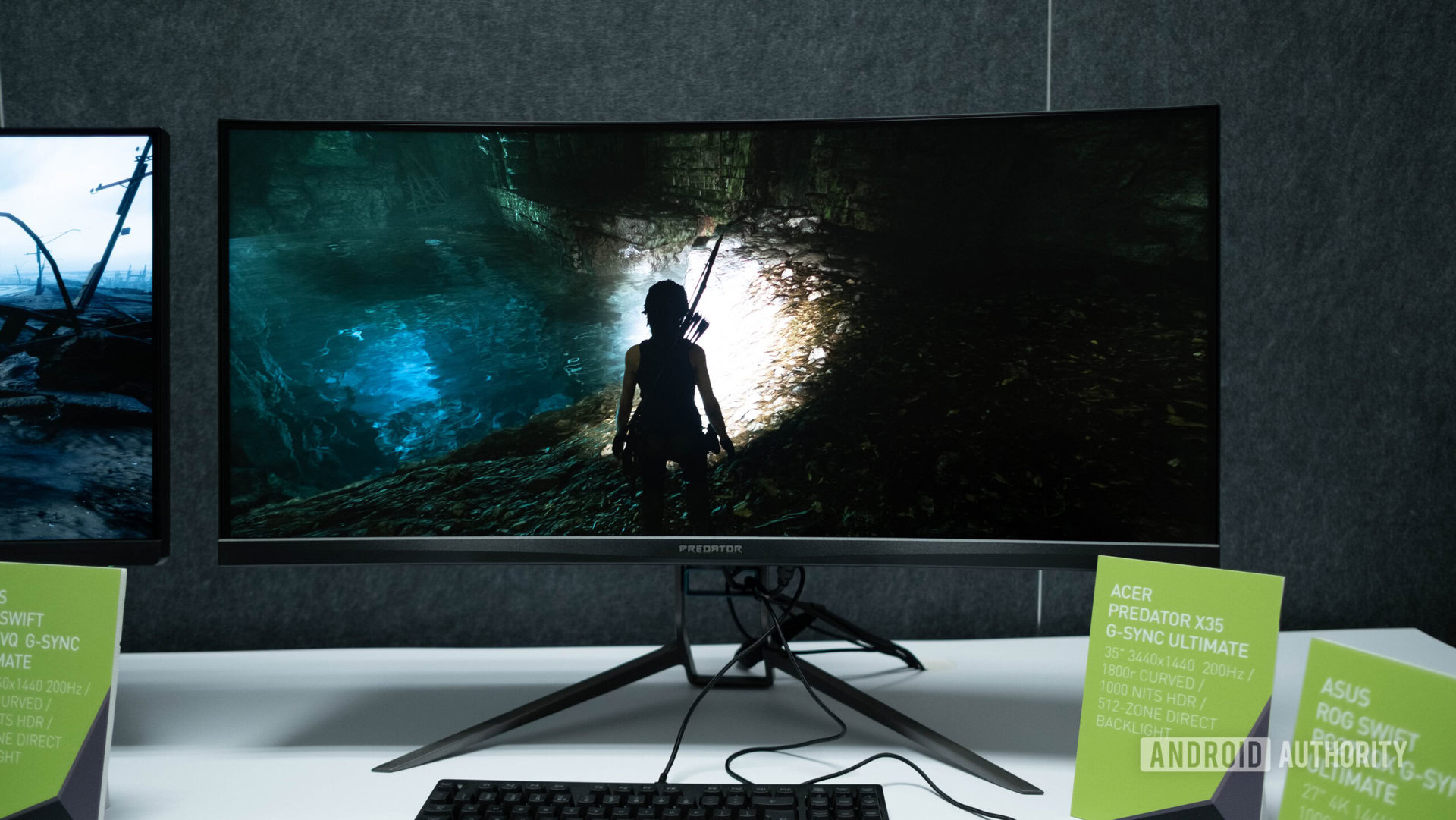

Triple buffering: The best VSync setting?

You may find a triple buffering toggle in your game’s settings. In this scenario, the GPU uses three buffers instead of two: One Primary and two Secondary. Here the software and GPU draw in both Secondary buffers. When the display is ready for a new image, the Secondary buffer containing the latest completed frame changes to the Primary buffer. Rinse and repeat at every display refresh.

Thanks to that second Secondary buffer, you won’t see screen tearing given the Primary and Secondary buffers aren’t swapping while the GPU delivers a new image. There’s also no artificial delay as seen with double-buffering and VSync turned on. Triple buffering is essentially the best of both worlds: The tear-free visuals of a PC with VSync turned on, and the high framerate and input performance of a PC with VSync turned off.
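Here is a toy Python model of that behavior. It is a sketch under our own assumptions rather than a real implementation: the `TripleBuffer` class and its method names are hypothetical, it tracks only the newest completed frame rather than all three buffers individually, and frames are plain strings.

```python
class TripleBuffer:
    """Toy model: one front (Primary) buffer fed by two back (Secondary) buffers."""

    def __init__(self):
        self.front = None       # frame currently shown by the display
        self.completed = None   # newest finished frame waiting in a back buffer
        self.frames_dropped = 0

    def gpu_finished(self, frame):
        # The GPU never waits: a newer frame replaces an older one that was never shown.
        if self.completed is not None:
            self.frames_dropped += 1
        self.completed = frame

    def display_refresh(self):
        # At each refresh, the latest completed frame becomes the front buffer.
        if self.completed is not None:
            self.front = self.completed
            self.completed = None
        return self.front


tb = TripleBuffer()
tb.gpu_finished("frame 1")
tb.gpu_finished("frame 2")    # finished before the display refreshed
shown = tb.display_refresh()  # the display picks the newest frame
```

In this model the display always shows a whole frame, so there is no tearing, and the GPU keeps rendering instead of sitting idle. The trade-off is that some frames, like frame 1 here, are rendered but never displayed.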

Which devices support VSync?

The beauty of VSync is that it’s a software implementation that’s pretty much platform-agnostic, so it can run on more or less any gaming PC. It’s an old technology, especially compared to the hardware-driven display synchronization technologies we have today, but that age also gives it wider support. If you have a gaming PC, chances are the games on it will have a VSync toggle.

Advantages of VSync

- Gets rid of screen tearing

- Wide software and hardware support

- Doesn’t need dedicated hardware to work

- Maintains compatibility with older hardware and software

- Reduces strain on GPU

Disadvantages of VSync

- Adds input lag, which can be significant in some games

- Can cause frame drops and stutters

- Not great for high-performance or competitive gaming

Should you use VSync?

Yes and no. The overall problem boils down to preference. Screen tearing can be annoying, yes, but is it tolerable? Does it ruin the experience? If you only see a little tearing and it doesn’t bother you, then don’t bother with VSync. If your display has a super-high refresh rate that your GPU can’t match, chances are you won’t see much tearing anyway.

But if you need VSync, just remember the drawbacks. It will cap the framerate either at the display’s refresh rate, or half that rate if the GPU can’t maintain the higher cap. However, the latter halved number will produce visual “lag” that could hinder gameplay.

Other technologies like NVIDIA G-Sync and AMD FreeSync have pulled ahead, but they have much more limited support. As such, despite being old, VSync still has a solid place in the display sync space. You may not need to use it for every single game, but there are bound to be times when it will come in handy.