Affiliate links on Android Authority may earn us a commission.

Why are smartphone chips suddenly including an AI processor?

If virtual assistants have been the breakthrough technology in this year’s smartphone software, then the AI processor is surely the equivalent on the hardware side.

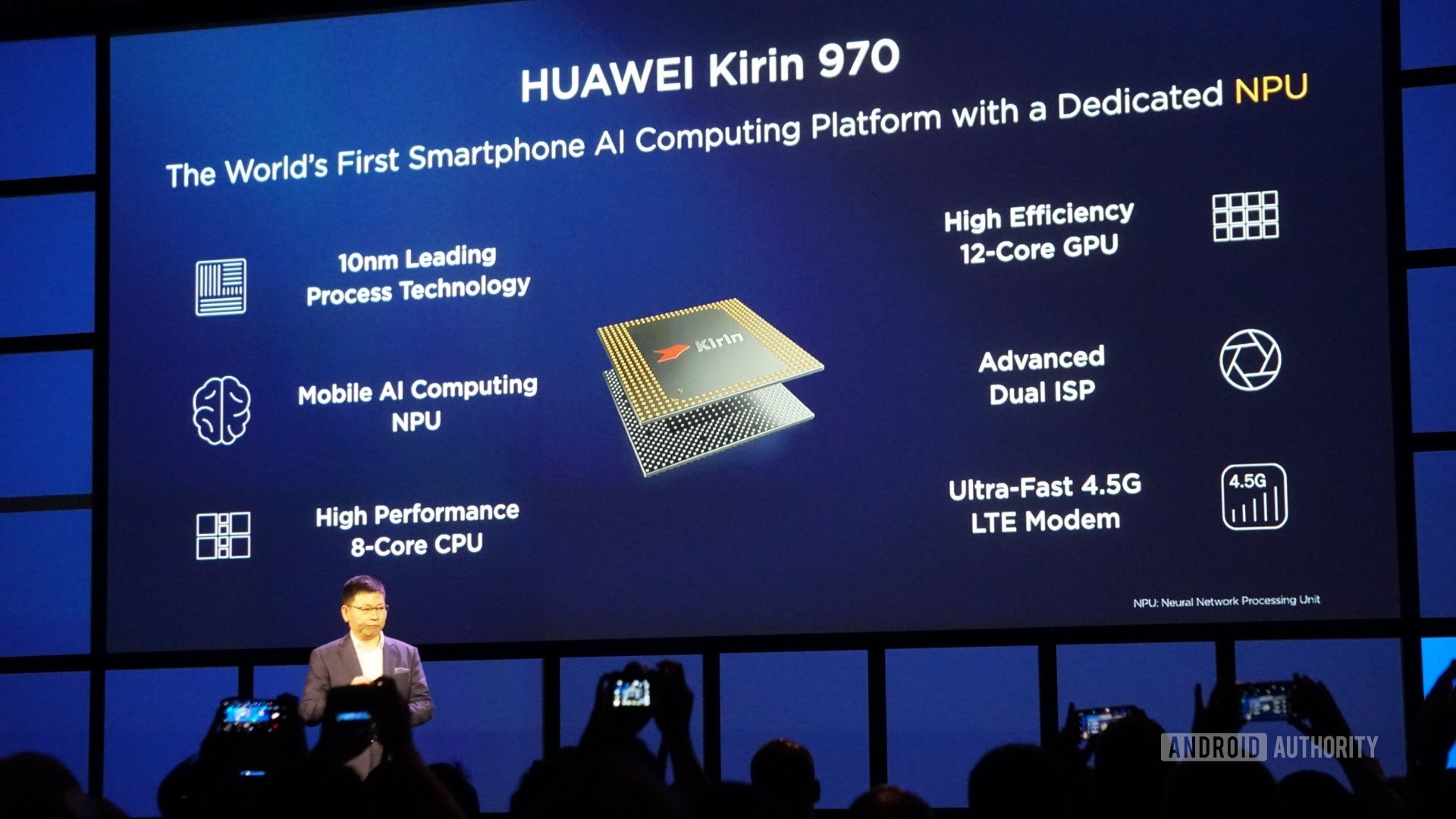

Apple has taken to calling its latest SoC the A11 Bionic on account of its new AI “Neural Engine”. HUAWEI’s latest Kirin 970 boasts a dedicated Neural Processing Unit (NPU), and the company is billing its upcoming Mate 10 as a “real AI phone”. Samsung’s next Exynos SoC is rumored to feature a dedicated AI chip too.

Qualcomm has actually been ahead of the curve since opening up the Hexagon DSP (digital signal processor) inside its Snapdragon flagships to heterogeneous compute and neural networking SDKs a couple of generations ago. Intel, NVIDIA, and others are all working on their own artificial intelligence processing products too. The race is well and truly on.

There are some good reasons for including these additional processors inside today’s smartphone SoCs. Demand for real-time voice processing and image recognition is growing fast. However, as usual, there’s a lot of marketing nonsense being thrown around, which we’ll have to decipher.

AI brain chips, really?

Companies would love us to believe that they’ve developed a chip smart enough to think on its own, or one that can imitate the human brain, but even today’s cutting-edge lab projects aren’t close to that. In a commercial smartphone, the idea is simply fanciful. The reality is a little more boring: these new processor designs are simply making software tasks such as machine learning more efficient.

There’s an important distinction between artificial intelligence and machine learning. AI is a very broad concept used to describe machines that can “think like humans” or that have some form of artificial brain with capabilities that closely resemble our own.

Machine learning is related, but much narrower. It covers computer programs that are designed to process data, make decisions based on the results, and even learn from those results to inform future decisions.

Neural networks are computer systems designed to help machine learning applications sort through data, enabling computers to classify data in ways similar to humans. This includes processes like picking out landmarks in a picture or identifying the make and color of a car. Neural networks and machine learning are smart, but they’re definitely not sentient intelligence.
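To make “learning from results” a little more concrete, the sketch below shows the simplest possible building block: a single artificial neuron trained with the classic perceptron rule. It is a toy written in Python for illustration only. The function names `predict` and `train` and the four two-feature data points are made up for this example, and real neural networks stack many such units and are usually trained with gradient descent rather than this rule.

```python
# Toy single-neuron classifier (perceptron), for illustration only.
# The function names and the data points below are hypothetical.

def predict(weights, bias, features):
    # Weighted sum of the inputs, then a hard threshold: the neuron "fires" (1) or not (0).
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total > 0 else 0


def train(samples, labels, epochs=20, lr=0.1):
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            # Learn from the result: the weights only move when the guess was wrong.
            error = label - predict(weights, bias, features)
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias


# Made-up, linearly separable points: two features each, labelled 1 or 0.
samples = [(0.9, 0.8), (0.8, 0.9), (0.1, 0.2), (0.2, 0.1)]
labels = [1, 1, 0, 0]

weights, bias = train(samples, labels)
print([predict(weights, bias, s) for s in samples])  # → [1, 1, 0, 0]
```

Because these points can be separated by a straight line, the perceptron rule is guaranteed to settle on weights that classify them correctly. An on-device image classifier performs the same kind of weighted-sum arithmetic, just vastly more times per image.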

When it comes to talk of AI, marketing departments are attaching a familiar buzzword to a new area of technology, which ultimately makes that technology harder to explain. It’s just as much an effort to differentiate themselves from their competitors. Either way, what all of these companies have in common is that they’re simply adding a new component to their SoCs that improves the performance and efficiency of tasks we now associate with smart or AI assistants. These improvements mainly concern voice and image recognition, but there are other use cases, too.

New types of computing

Perhaps the biggest question yet to be answered is: why are companies suddenly including these components? What do they make easier? Why now?

You may have noticed a recent increase in chatter about neural networks, machine learning, and heterogeneous computing. These are all tied to emerging use cases, both for smartphone users and across a broader range of fields. For users, these technologies are helping to enable new experiences with enhanced audio, image, and voice processing, human activity prediction, language processing, faster database search results, and enhanced data encryption, among others.

One open question is whether these results are best computed in the cloud or on the device. Whatever one OEM or another claims, the answer will likely depend on the exact task. Either way, these use cases call for some new and complicated approaches to computing, which most of today’s general-purpose 64-bit CPUs aren’t particularly well suited to. Low-precision 8- and 16-bit math, pattern matching, database/key lookup, bit-field manipulation, and highly parallel processing are just some examples of work that dedicated hardware can do faster than a general-purpose CPU.
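The point about 8- and 16-bit math is easiest to see with an example. The Python sketch below approximates a floating point dot product (the core operation inside a neural network layer) using small integers, in the spirit of the 8-bit quantization commonly used for on-device inference. It assumes a simple symmetric scheme with one scale factor per vector; that scheme, and the helper names `quantize` and `int8_dot`, are illustrative assumptions rather than how any specific NPU or SDK works.

```python
# Sketch: approximating a float dot product with 8-bit integer arithmetic.
# Assumption: symmetric per-vector quantization, scale = max(|x|) / 127.
# Python ints stand in for int8 values and for the wider hardware accumulator.

def quantize(values):
    # Map floats onto the signed 8-bit range [-127, 127] using one shared scale.
    scale = max(abs(v) for v in values) / 127 or 1.0  # avoid dividing by zero for all-zero input
    return [round(v / scale) for v in values], scale


def int8_dot(a, b):
    qa, scale_a = quantize(a)
    qb, scale_b = quantize(b)
    # The bulk of the work: small-integer multiply-accumulates, cheap in silicon
    # and easy to run side by side. int8 x int8 products fit in 16 bits, and the
    # running sum is usually kept in a 32-bit accumulator.
    acc = sum(x * y for x, y in zip(qa, qb))
    # A single rescale at the end maps the integer result back to real units.
    return acc * scale_a * scale_b


weights = [0.5, -1.2, 0.3, 0.8]
inputs = [1.0, 0.4, -0.7, 2.0]
exact = sum(w * x for w, x in zip(weights, inputs))
print(f"float: {exact:.4f}  int8 approx: {int8_dot(weights, inputs):.4f}")
```

For these made-up values the integer version lands within about 2% of the floating point answer. The trade is a little accuracy in exchange for multipliers that are far smaller and cheaper than full floating point units.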

To accommodate the growth of these new use cases, it makes more sense to design a custom processor that’s better at these types of tasks than to run them poorly on traditional hardware. There’s definitely an element of future-proofing in these chips too. Adding an AI processor early gives developers a baseline to target with new software.

Efficiency is the key

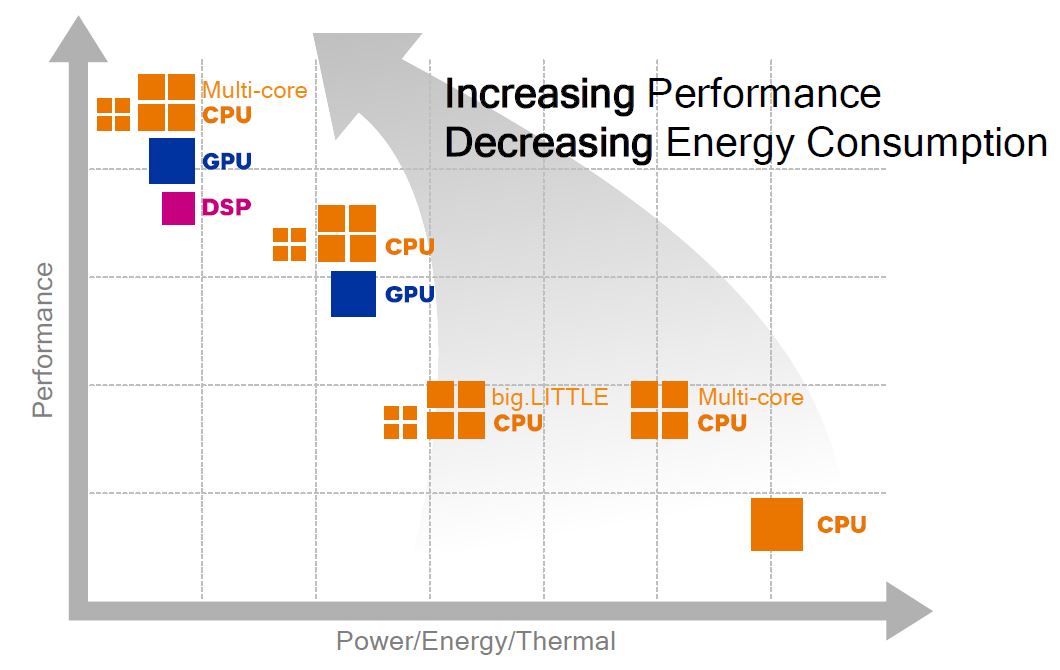

It’s worth noting that these new chips aren’t just about providing more computational power. They’re also being built to increase efficiency in three main areas: size, computation, and energy.

Today’s high-end SoCs pack in a ton of components, ranging from display drivers to modems. These parts have to fit into a small package and a limited power budget without breaking the bank (see Moore’s Law for more information). SoC designers have to stick to these rules when introducing new neural net processing capabilities too.

A dedicated AI processor in a smartphone SoC is designed around area, computational, and power efficiency for a certain subset of mathematical tasks.

Smartphone chip designers could build larger, more powerful CPU cores to better handle machine learning tasks. However, that would significantly bulk up the cores, taking up considerable die area given today’s octa-core setups, and make them much more expensive to produce. It would also greatly increase their power requirements, something there simply isn’t a budget for in sub-5W TDP smartphones.

Instead, it’s much more astute to design a dedicated component that can handle a specific set of tasks very efficiently. We have seen this many times over the course of processor development, from the optional floating point units in early CPUs to the Hexagon DSPs inside Qualcomm’s higher-end SoCs. DSPs have fallen in and out of use across audio, automotive, and other markets over the years, due to the ebb and flow of computational power versus cost and power efficiency. The low-power, heavy data-crunching requirements of machine learning in the mobile space are now helping to revive demand.
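Much of that “specific set of tasks” boils down to one operation repeated enormously often: the multiply-accumulate (MAC), which DSPs have traditionally been built to execute quickly. The Python sketch below writes a fully connected neural network layer as explicit MACs and counts them. The function name `dense_layer` and the tiny example values are hypothetical, and a real chip would spread these operations across many hardware units rather than loop over them one at a time.

```python
# Sketch: a fully connected (dense) layer written as explicit multiply-accumulates.
# outputs[j] = biases[j] + sum_i inputs[i] * weights[j][i]
# Names and example values are hypothetical, chosen only for illustration.

def dense_layer(inputs, weights, biases):
    outputs = []
    macs = 0
    for row, bias in zip(weights, biases):
        acc = bias
        for x, w in zip(inputs, row):
            acc += x * w  # one multiply-accumulate (MAC)
            macs += 1
        # Each output depends only on the inputs and its own weight row,
        # so every output could be computed in parallel by separate hardware.
        outputs.append(acc)
    return outputs, macs


outputs, macs = dense_layer([1.0, 2.0, 3.0], [[1, 0, 0], [0, 1, 0]], [0.0, 1.0])
print(outputs, macs)  # → [1.0, 3.0] 6
```

The count is simply inputs times outputs, so a single layer with 1,024 inputs and 1,000 outputs already needs 1,024,000 MACs per pass, and image models chain many such layers. That volume of identical, independent arithmetic is why a dedicated block can be more area- and power-efficient than a bigger CPU core.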

Wrap Up

It’s not cynical to question whether companies are being entirely accurate in their portrayal of neural networking and AI processors. However, the addition of an extra processor dedicated to complex math and data-sorting algorithms is only going to help smartphones and other pieces of technology crunch numbers better, enabling a variety of useful new technologies, from automatic image enhancement to faster video library searches.

As much as companies may tout virtual assistants and the inclusion of an AI processor as making your phone smarter, we’re nowhere near seeing true intelligence inside our smartphones. That said, these new technologies, combined with emerging machine learning tools, are going to make our phones more useful than ever, so definitely watch this space.