Affiliate links on Android Authority may earn us a commission.

Project Soli showcased on a smartwatch at Google I/O

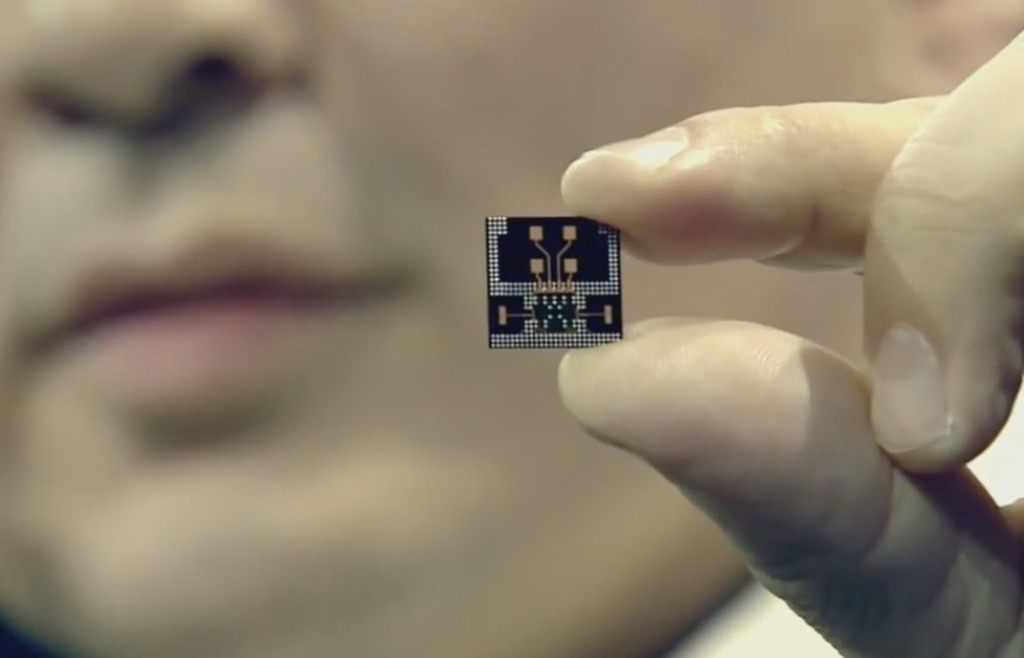

We haven’t heard much from Project Soli in a while, but those with their fingertips on the pulse of the tech world will recall Google demonstrating its hands-off approach to device interaction last year. The technology uses radar to track the position of your hands in space, so natural movements can serve as input. Unfortunately, the power demands of this tiny radar device proved restrictive, and developers who received the early-access dev kit last year had to power it from a laptop. Since this technology is being developed specifically for wearables, you can see how this was something of a roadblock.

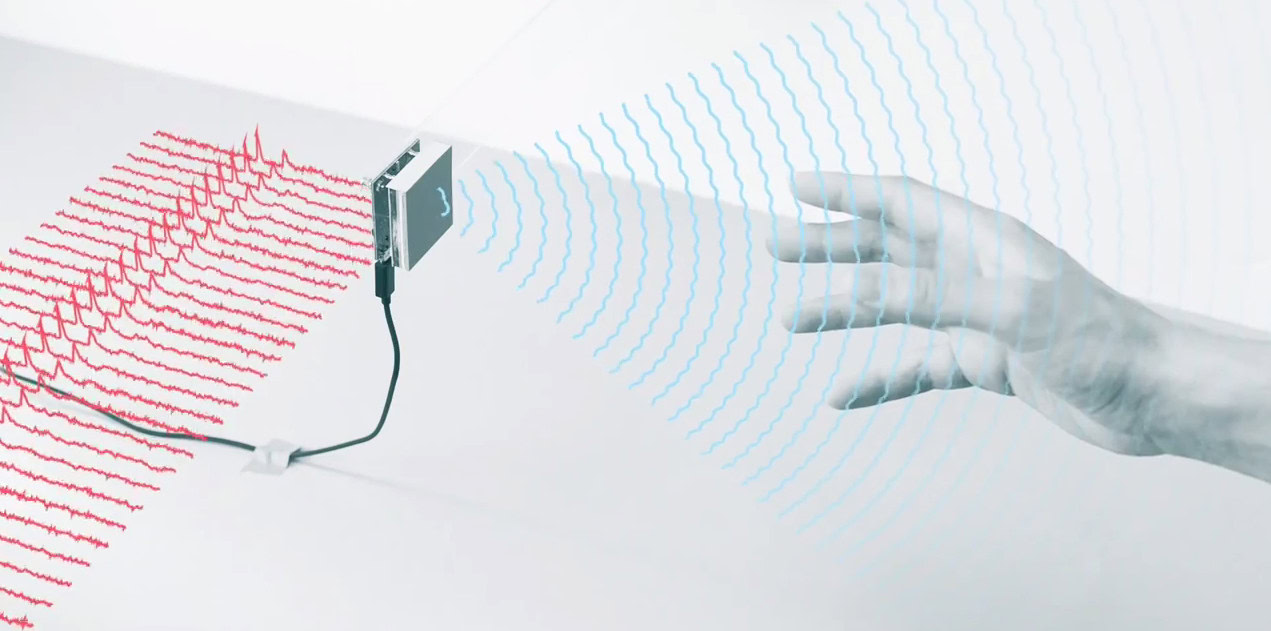

Interacting with this wearable makes you look like a freaking magician, since simple commands can be relayed to the device without ever touching it. One problem that has long plagued smartwatches is their small screen, which limits how much information they can display. UI designers have to account for the fact that a finger on such a small touch display covers a substantial portion of the screen. Being able to interact with the device from a distance alleviates this problem.

The team also showed that the technology isn’t limited to wearables. They built a speaker system that can be controlled with hand gestures read from as far as 15 meters away. In the smart home of the future, we may control every aspect of our environment simply by standing in the living room and motioning like a maestro before an orchestra.

It will likely be some time before we see this technology in consumer products. However, Google plans to release a new, improved developer kit in 2017, which will be a beta release. What are your thoughts on Project Soli? Is this the future of device interaction, or will things take a more verbal, conversational route? Let us know your theories and opinions in the comments!