
How does Alexa work? The tech behind Amazon's virtual assistant, explained

We’ve got quite a few guides for using Amazon Alexa on Android Authority, but you may be curious about the voice assistant’s underlying technology. Here’s a brief explanation of how Alexa works, from its overall structure to how it hears and responds to voice commands.

How Alexa works: An overview

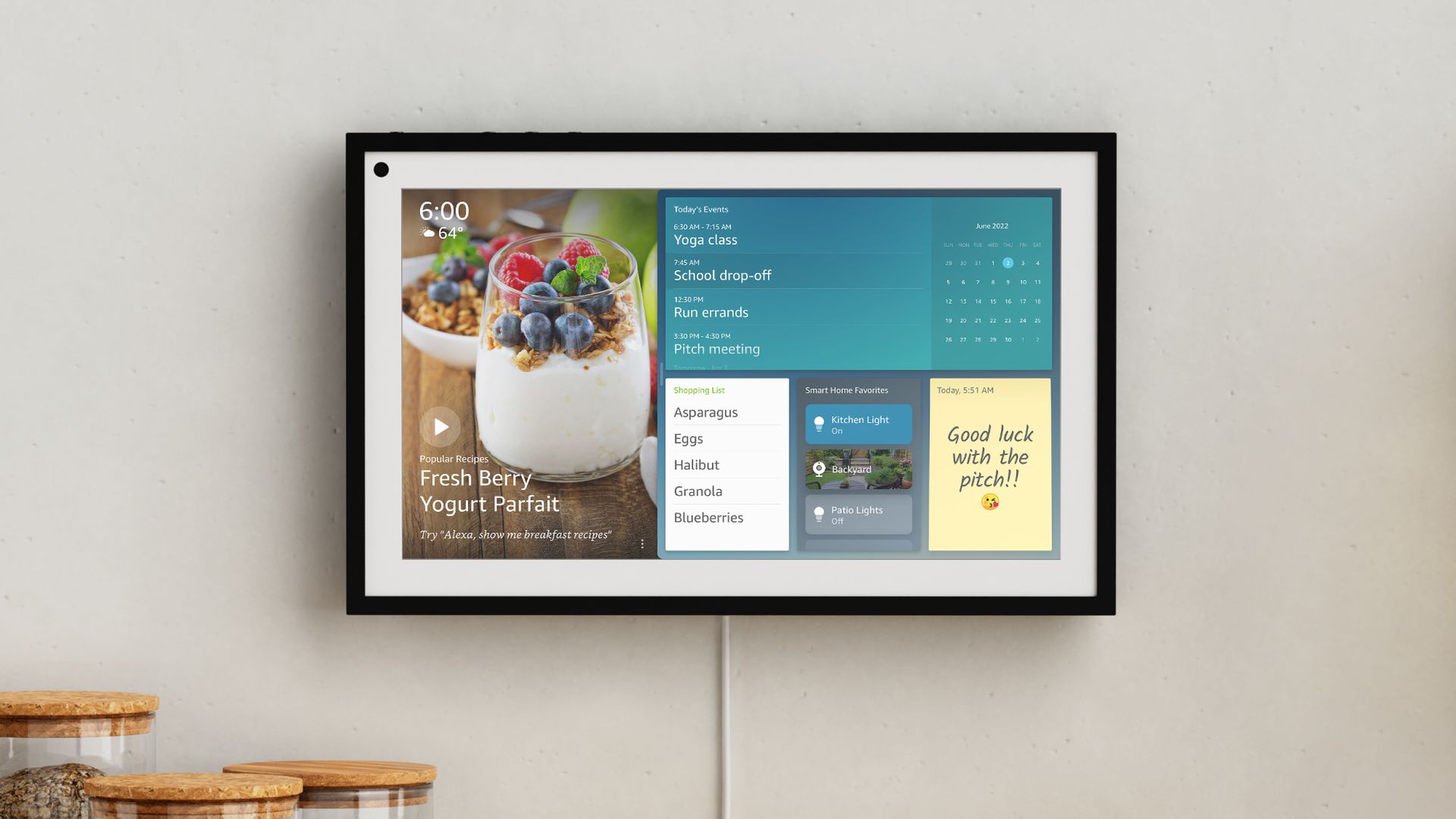

The base components of Alexa, from a user perspective, are an Amazon account and an internet-connected, Alexa-enabled device, usually a smart speaker or display. The account lets you build a profile, save software and hardware settings, and link compatible devices, services, and accessories. Alexa devices listen for voice commands, upload them to Amazon’s servers for interpretation, then deliver results in the form of audio, video, or device/accessory triggers. Some models also serve as Matter controllers, Thread border routers, and/or Zigbee hubs for compatible smart home products.

All voice commands begin with a wake word that tells a device to listen. The default, of course, is “Alexa,” but using the assistant’s app for Android or iPhone/iPad, you can change this to “Amazon,” “Computer,” “Echo,” or “Ziggy.” In fact, the app is effectively a third base component, since it’s (normally) needed for device setup and for linking things to your Amazon account. Amazon has all but completely phased out web-based setup.

There are many possible Alexa commands, so we won’t delve too far here, but they’re all natural-language voice requests covering everything from knowledge questions to media playback and smart home control. For instance:

- “Alexa, what’s the weather outside?”

- “Alexa, shuffle The Best Ambient Playlist You’ll Find on Spotify.”

- “Alexa, set the Living Room thermostat to 72 degrees.”

- “Alexa, how close is the nearest star?”

Some functions require enabling “skills,” whether through Amazon’s website or the Alexa app. Using the commands above as examples, the music one wouldn’t work without a skill linking your Spotify account, and thermostat control would require an appropriate brand skill such as Ecobee or Google Nest.

Most skills are free to enable since they’re really just supporting existing products and services. Paid skills are rare, but they do exist, such as extended high-quality loops for sleep sounds.

The Alexa app also enables routines, Amazon’s term for automations. These are user-created and trigger actions based on voice commands or other conditions, such as location, accessory status, or the time of day. A “Good Morning” routine, for example, might turn on your lights, play NPR news, and warm up your coffee maker via a smart plug when you say “Alexa, start my day.”
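The following minimal Python sketch models a routine to show the trigger-and-action idea. It is purely illustrative. The `Routine` class, the `run_routines()` helper, and action strings like `"lights.on"` are hypothetical names. They do not come from the Alexa app or any Amazon API.

```python
from dataclasses import dataclass, field
from datetime import time
from typing import List, Optional


@dataclass
class Routine:
    """Hypothetical model of a routine: one or more triggers plus an ordered list of actions."""
    name: str
    voice_phrase: Optional[str] = None   # voice trigger, e.g. "Alexa, start my day"
    at_time: Optional[time] = None       # time-of-day trigger, e.g. time(7, 0)
    actions: List[str] = field(default_factory=list)  # hypothetical action labels

    def is_triggered(self, utterance: Optional[str] = None, now: Optional[time] = None) -> bool:
        """Return True if the utterance or the current time satisfies one of the triggers."""
        if utterance is not None and self.voice_phrase is not None:
            if utterance.strip().lower() == self.voice_phrase.lower():
                return True
        if now is not None and self.at_time is not None:
            # Compare only hours and minutes, ignoring seconds.
            return (now.hour, now.minute) == (self.at_time.hour, self.at_time.minute)
        return False


def run_routines(routines: List[Routine], utterance: Optional[str] = None,
                 now: Optional[time] = None) -> List[str]:
    """Collect, in order, the actions of every routine whose trigger fires."""
    fired: List[str] = []
    for routine in routines:
        if routine.is_triggered(utterance=utterance, now=now):
            fired.extend(routine.actions)
    return fired


good_morning = Routine(
    name="Good Morning",
    voice_phrase="Alexa, start my day",
    actions=["lights.on", "play:NPR news", "smart_plug.coffee_maker.on"],
)

print(run_routines([good_morning], utterance="alexa, start my day"))
```

The triggers are stored separately from the actions. This lets one routine fire from either a voice phrase or a schedule without duplicating its action list.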

To be controlled by Alexa, smart home accessories must specifically support the platform or the universal Matter standard. That said, just about every type of accessory is available. Aside from plugs, thermostats, and smart bulbs, you can get everything from air purifiers to robot vacuums. These are paired using the Alexa app, regardless of whether they connect via skills, Wi-Fi, Thread, and/or Zigbee.

How does Alexa hear?

While every Alexa-equipped device has at least one microphone, smart speakers and displays often have two or more. This makes it easier to isolate voices from ambient noise. Sound reaches each microphone at slightly different times, and signal-processing algorithms such as beamforming can compare and filter that directional data. There are limits, of course. You can’t stand next to a loud TV or dishwasher and expect an Echo speaker to understand you.
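Delay-and-sum beamforming is a textbook technique that shows the core idea behind multi-microphone voice isolation. It works in three steps:

1. Estimate how much later a voice reaches one mic than another.
2. Shift the signals into alignment.
3. Average them.

Speech adds up coherently, while uncorrelated noise partially cancels. Amazon hasn’t detailed exactly what its Echo devices run, so treat this as a simplified illustration. The functions and sample values below are hypothetical.

```python
import random
from typing import List, Sequence


def shift(signal: Sequence[float], lag: int) -> List[float]:
    """Advance a signal by `lag` samples: drop the first `lag` and pad the end with zeros."""
    return list(signal[lag:]) + [0.0] * lag


def estimate_delay(ref: Sequence[float], other: Sequence[float], max_lag: int) -> int:
    """Return the lag (in samples) at which `other` best lines up with `ref`, via cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        score = sum(a * b for a, b in zip(ref, shift(other, lag)))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def delay_and_sum(channels: Sequence[Sequence[float]], lags: Sequence[int]) -> List[float]:
    """Align each microphone channel by its lag, then average the channels sample by sample."""
    aligned = [shift(ch, lag) for ch, lag in zip(channels, lags)]
    return [sum(samples) / len(aligned) for samples in zip(*aligned)]


def power(signal: Sequence[float]) -> float:
    """Mean squared amplitude of a signal."""
    return sum(x * x for x in signal) / len(signal)


# A short "voice" burst, and the same burst reaching a second mic 2 samples later.
voice = [0, 0, 1, 3, 1, 0, 0, 0, 0, 0]
mic_b = [0, 0, 0, 0, 1, 3, 1, 0, 0, 0]
lag = estimate_delay(voice, mic_b, max_lag=4)
print(lag)                                      # 2
print(delay_and_sum([voice, mic_b], [0, lag]))  # the original burst, realigned

# Independent random noise on two mics: averaging roughly halves its power.
rng = random.Random(0)
noise_a = [rng.gauss(0, 1) for _ in range(2000)]
noise_b = [rng.gauss(0, 1) for _ in range(2000)]
print(power(noise_a), power(delay_and_sum([noise_a, noise_b], [0, 0])))
```

Adding more microphones improves the averaging further. This is one reason multi-mic speakers cope better with background noise, though, as noted above, only up to a point.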

Contrary to what you may have been told, Alexa isn’t continually recording everything you say. It is constantly listening for its wake word, and the audio that follows (ending after you stop talking) is usually sent to Amazon for interpretation. We say “usually” because Amazon has experimented with offline processing on devices like the 4th-gen Echo or Echo Show 10, which have one of the company’s AZ Neural Edge processors. It seems to have drifted away from the idea since, though, for reasons unknown.
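The following Python sketch models wake-word gating. It is a simplified model, not Amazon’s code:

- Each “frame” is a word standing in for a short chunk of audio.
- An empty string stands in for silence.
- `capture_requests()` and its parameters are hypothetical.

Only the words after the wake word, up to a stretch of silence, are passed on for upload. Everything heard before the wake word is simply dropped.

```python
from typing import Iterable, Iterator, List


def capture_requests(frames: Iterable[str], wake_word: str = "alexa",
                     silence_frames: int = 2) -> Iterator[str]:
    """Yield only the speech that follows a wake word, ending after a run of silence.

    Frames heard before the wake word are discarded, never stored or yielded.
    """
    recording = False
    captured: List[str] = []
    quiet = 0
    for frame in frames:
        if not recording:
            # Idle: the only check is whether this frame is the wake word.
            if frame.lower() == wake_word.lower():
                recording, captured, quiet = True, [], 0
            continue
        if frame == "":
            quiet += 1
            if quiet >= silence_frames:
                # The user has stopped talking: this is what would be sent off.
                if captured:
                    yield " ".join(captured)
                recording = False
            continue
        quiet = 0
        captured.append(frame)
    if recording and captured:
        # The stream ended mid-request; hand over what was heard.
        yield " ".join(captured)


stream = ["we", "should", "order", "pizza", "alexa", "what's", "the", "weather", "", "", "nice", "day"]
print(list(capture_requests(stream)))  # ["what's the weather"]
```

A single short pause doesn’t end the request. Only `silence_frames` consecutive silent frames do, which loosely mirrors Alexa waiting until it thinks you’ve stopped talking.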

Amazon says it encrypts uploaded audio recordings, but saves them by default and analyzes “an extremely small sample” of anonymized clips to improve Alexa’s performance. Recordings have been used in criminal cases, and some sounds or phrases can be misinterpreted as wake words — so if you’re concerned about privacy, you’ll want to opt out of saving, or regularly delete your voice history.

How does Alexa respond?

Alexa has been utterly dependent on the cloud until lately because of the demands of natural language processing. Each command has to be broken down into individual units of speech called phonemes, which are then compared against a database to find the closest word matches. On top of that, the software has to identify sentence structure, as well as terms relevant to different subsystems. If you say “set the thermostat to cool,” for instance, Alexa knows to forward that to a smart home API (application programming interface).
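The following toy Python example illustrates two steps:

1. Matching heard phonemes to the closest words in a pronunciation dictionary.
2. Routing the resulting text to a subsystem.

It is a deliberate simplification. Three pieces are stand-ins chosen for clarity:

- The tiny `LEXICON`, which uses ARPAbet-style phoneme symbols.
- Levenshtein edit distance as the matching metric.
- The keyword-based `ROUTES` table.

Real speech recognizers use trained statistical models rather than a lookup like this.

```python
from typing import Dict, List, Sequence

# Hypothetical toy pronunciation lexicon (ARPAbet-style phoneme symbols).
LEXICON: Dict[str, List[str]] = {
    "set": ["S", "EH", "T"],
    "sit": ["S", "IH", "T"],
    "the": ["DH", "AH"],
    "thermostat": ["TH", "ER", "M", "AH", "S", "T", "AE", "T"],
    "to": ["T", "UW"],
    "cool": ["K", "UW", "L"],
    "school": ["S", "K", "UW", "L"],
    "weather": ["W", "EH", "DH", "ER"],
}

# Hypothetical keyword table mapping words to the subsystem that should handle them.
ROUTES: Dict[str, set] = {
    "smart_home": {"thermostat", "lights", "plug"},
    "weather": {"weather", "forecast"},
}


def edit_distance(a: Sequence[str], b: Sequence[str]) -> int:
    """Levenshtein distance between two phoneme sequences."""
    prev = list(range(len(b) + 1))
    for i, pa in enumerate(a, start=1):
        cur = [i]
        for j, pb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (pa != pb)))  # substitution (free if equal)
        prev = cur
    return prev[-1]


def closest_word(phonemes: Sequence[str]) -> str:
    """Return the lexicon word whose pronunciation is nearest to the heard phonemes."""
    return min(LEXICON, key=lambda word: edit_distance(phonemes, LEXICON[word]))


def route(words: Sequence[str]) -> str:
    """Send the request to the first subsystem whose keywords appear in it."""
    for subsystem, keywords in ROUTES.items():
        if keywords & set(words):
            return subsystem
    return "general_knowledge"


# "set the thermostat to cool", with the final T of "thermostat" not heard clearly.
heard = [["S", "EH", "T"], ["DH", "AH"], ["TH", "ER", "M", "AH", "S", "T", "AE"],
         ["T", "UW"], ["K", "UW", "L"]]
words = [closest_word(p) for p in heard]
print(words)         # ['set', 'the', 'thermostat', 'to', 'cool']
print(route(words))  # smart_home
```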

Alexa can distinguish different accents and dialects, but it uses a separate database for each language Amazon supports, including regional variations. Users need to select these in the Alexa app if their device doesn’t ship with them preloaded. An American Echo speaker can’t understand German out of the box, for example, as anyone who’s asked for songs by Nachtmahr or Grausame Töchter can attest.

Machine learning plays a critical role, since context and history give Alexa a better shot at guessing your intentions. That’s why Amazon is so invested in analyzing recordings from real-world customers. Humans use context and history to gauge meaning in conversation. Using strict computer logic, Alexa might instead interpret something like “play music by CHVRCHES” (the Scottish synthpop band) as a request to hear music by church choirs. Alexa can and does make mistakes, but the sea of data Amazon has access to means the assistant evolves over time.
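Rescoring competing interpretations is one simple way to picture how history helps. Each interpretation’s score combines how well it matches the audio with how likely it is given what the user usually plays. The sketch below is a hypothetical noisy-channel-style rescoring, not Amazon’s method. The `rescore()` function, the probabilities, and the listening history are made-up values for illustration.

```python
import math
from collections import Counter
from typing import Dict, List


def rescore(candidates: Dict[str, float], history: List[str], weight: float = 1.0) -> str:
    """Pick the interpretation that best combines acoustic confidence with listening history.

    candidates: interpretation -> acoustic probability (how well it matches the audio)
    history:    artists the user has played before
    weight:     how strongly history counts relative to the audio
    """
    plays = Counter(history)
    total = sum(plays.values())

    def score(text: str) -> float:
        # Add-one smoothing: never-played candidates get a small prior, not zero.
        prior = (plays[text] + 1) / (total + len(candidates))
        return math.log(candidates[text]) + weight * math.log(prior)

    return max(candidates, key=score)


heard = {"church choirs": 0.55, "CHVRCHES": 0.45}
print(rescore(heard, history=[]))                                 # church choirs
print(rescore(heard, history=["CHVRCHES", "CHVRCHES", "Robyn"]))  # CHVRCHES
```

Setting `weight` to 0 ignores history entirely. That is roughly the “strict computer logic” failure mode described above.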

Responses use synthesized speech based on recorded voice samples. Privately, Amazon has also been experimenting with audio mimicry, including the voices of people who have died. The company should start rolling out generative AI conversations with more complex responses and emotional inflection in due course. The first signs of Alexa’s AI future have already emerged on Fire TV, for users searching for movies and other content.

FAQs

Does Alexa need an internet connection?

Effectively, yes. Some devices may allow offline voice control of volume and hub-linked smart home accessories, or checking and canceling things like timers and reminders. Just about everything else requires communicating with Amazon’s servers and/or linked third-party services. Even devices that can process audio locally still upload transcripts of voice commands.

Is Alexa always listening?

It’s always listening for its wake word, assuming you haven’t muted a device’s microphones.

Crucially, though, it isn’t recording everything. Recording is only triggered after a wake word is detected, and ends once you stop talking (or once Alexa thinks you have, anyway). If you’re worried about privacy, you’ll need to opt out of these recordings being saved, or regularly delete your voice history.

Is Alexa AI?

According to some definitions, yes. It’s capable of limited learning and problem solving, for instance interpreting voice commands it hasn’t been specifically programmed for.

That said, it relies on what’s called “weak” (or narrow) AI. It doesn’t display the same adaptability as a human or animal mind. You can’t have a genuine conversation with it, and its learning happens incrementally rather than on the fly. It’s certainly nowhere near sentient, however difficult sentience might be to define.

Amazon is planning to roll out generative AI conversations in the near future, but it’s worth remembering that generative tech only simulates natural conversations — there’s no brain or personality behind a chatbot.