Affiliate links on Android Authority may earn us a commission.

How accurate is ChatGPT? Should you trust its responses?

Modern chatbots like ChatGPT can output dozens of words every second, making them invaluable tools for researching and analyzing large amounts of information. With over 500GB of training data and an estimated 300 billion words under its belt, the AI language model can answer many factual questions too. But as human as ChatGPT’s responses may sound, one crucial question remains: how accurate is the information it provides?

While ChatGPT can be impressively informative most of the time, you’ve probably heard of the many controversies surrounding generative AI. From racial biases to harmful content, there’s plenty to consider before trusting any AI-generated output.

Is ChatGPT accurate?

Yes, ChatGPT has the potential to be accurate, especially for factual queries with clear answers. When talking about long-established information, ChatGPT can fetch relevant data from its training and deliver truthful responses. For a question like “What is the capital of France?”, you’re very likely to get the correct answer.

However, chatbots like ChatGPT often fabricate information when they encounter a novel or difficult question. This is because generative language models are designed to mimic the way humans write, not the way we think. Consequently, they have limited logical reasoning capabilities.

ChatGPT hallucinates less often than a year ago, but you still need to watch out.

The problem with ChatGPT’s accuracy runs deeper than you’d think. It often weaves in entirely fictional details and invents convincing-sounding factoids in response to certain prompts. The chatbot’s creator has put several safeguards in place to prevent hallucinations, but as our tests will show later in this article, they aren’t completely effective.

If you’re after empirical data, several studies have tested ChatGPT’s accuracy extensively to reveal one clear trend. ChatGPT boasts a surprisingly high accuracy rating for typical questions. In one medical study, for example, the chatbot scored a median rating of 5.5 on a 6-point scale.

However, the routine updates ChatGPT receives can also harm its accuracy and usefulness. A group of UC Berkeley and Stanford University researchers found that the chatbot’s ability to identify prime numbers dropped from an impressive 84% accuracy to just 51% within three months. In short, you cannot and should not trust ChatGPT’s responses, at least not without fact-checking them first.

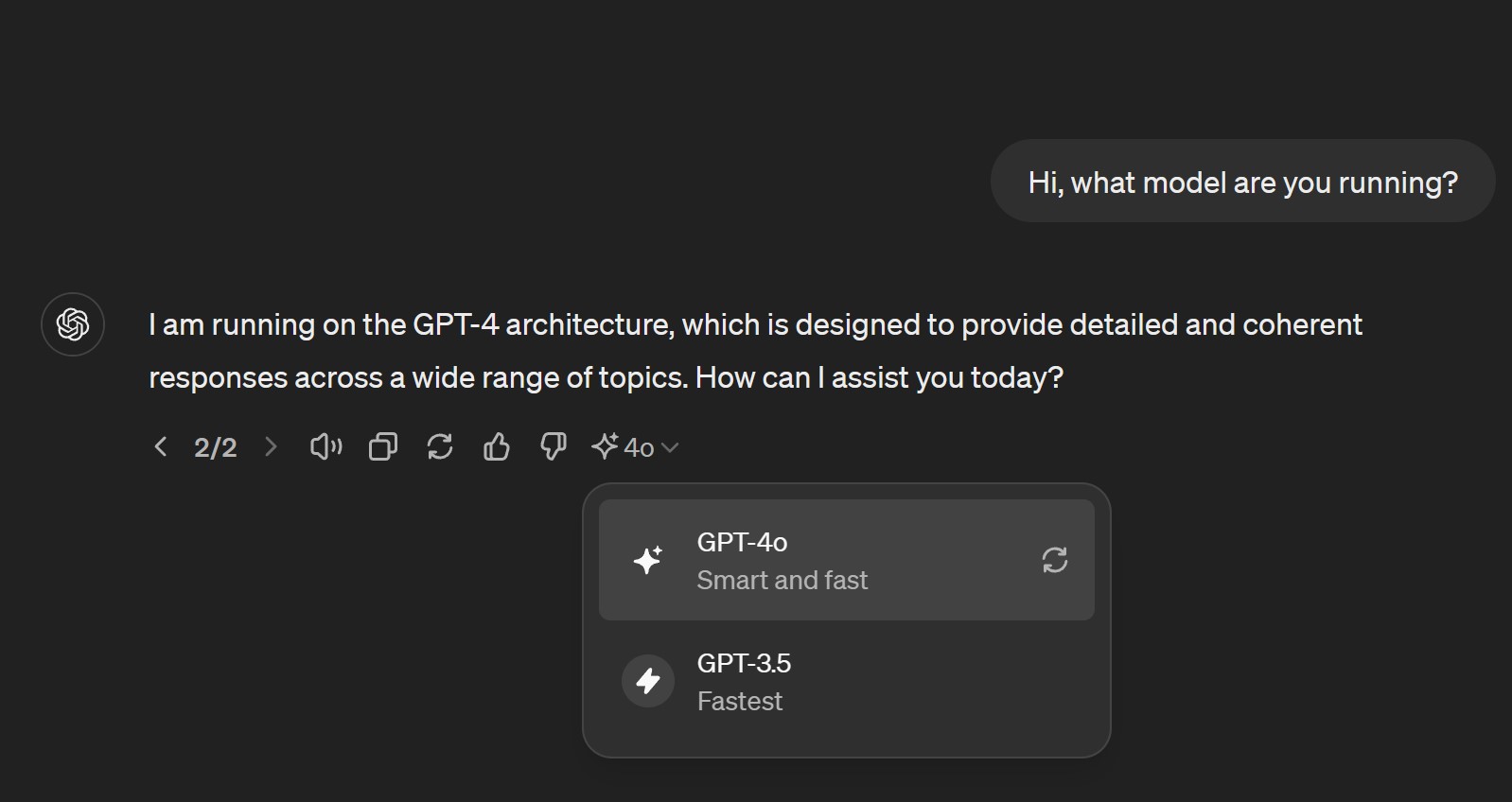

Having said that, updates to ChatGPT’s underlying language model have greatly improved its accuracy over the past year. The GPT-4o update, for instance, allows the chatbot to search the internet for information and cross-check its responses against external sources. However, free users only get limited access to GPT-4o, which means ChatGPT will fall back to the older and less accurate GPT-3.5 model during periods of high demand.

How to improve ChatGPT’s accuracy

If you’re only an occasional ChatGPT user, you may have never considered upgrading to the chatbot’s paid tier. However, doing so will improve its accuracy several-fold and should top your priority list if you rely on the chatbot’s responses. This is because the $20 ChatGPT Plus subscription unlocks guaranteed access to the GPT-4o language model. As I mentioned earlier, GPT-4o is far more accurate and it can also search the internet for the latest information. You can think of it as live research since it’s similar to how we find the right answer through a Google search.

ChatGPT-4 delivers much more accurate results, but still falls behind some human experts.

The GPT-4 language model was the first release of this generation. Even that was far more capable than its predecessor, GPT-3.5. According to OpenAI, the newer model scored in the 89th percentile of SAT Math, 90th percentile of the Uniform Bar Exam, and 80th percentile of the GRE Quantitative. Almost all of these results are significantly better than those of GPT-3.5.

If you’re a free ChatGPT user, you can check if your chats use GPT-4o by looking for the model dropdown menu at the bottom of each response, as pictured below.

ChatGPT 4 accuracy tested: Free vs Plus compared

As I mentioned earlier, ChatGPT can deliver significantly more accurate responses with a GPT-4 based model. I asked the chatbot a handful of factual questions, some particularly obscure, to test whether or not I could get a reliably accurate answer. On both the free tier and ChatGPT Plus, you can switch between GPT-3.5 and GPT-4o.

- Question 1: Is 17077 a prime number? Think step by step and then answer [Yes] or [No].

A recent ChatGPT update added chain-of-thought reasoning to the chatbot, allowing it to mimic human reasoning. That seems to have paid off, as both versions of ChatGPT correctly identified 17077 as a prime number. However, the paid version of the chatbot wrote a piece of custom Python code to perform the calculations. While that didn’t change the result, it did make the answer feel more trustworthy.
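The code ChatGPT wrote isn’t reproduced here, but you can run the same kind of check yourself. Below is a minimal sketch, assuming plain trial division; the function name `is_prime` is our own choice for illustration and not necessarily what the chatbot produced.

```python
import math


def is_prime(n: int) -> bool:
    """Return True if n is prime, using trial division (illustrative helper)."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2  # 2 is the only even prime
    # Only odd divisors up to the integer square root need testing.
    for divisor in range(3, math.isqrt(n) + 1, 2):
        if n % divisor == 0:
            return False
    return True


print(is_prime(17077))  # True
```

Trial division is slow for very large numbers, but for a five-digit input like this one it only has to test a few dozen divisors, so it makes for a quick sanity check on a chatbot’s answer.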

- Question 2: Does the Setouchi Area Pass cover any local transport in Osaka?

With many of us using ChatGPT for travel advice, I decided to ask a relatively obscure question in that domain. Unfortunately, the base GPT-3.5 model responded inaccurately and only admitted fault when I suggested the correct answer. However, switching to ChatGPT-4 changed the outcome, immediately giving me the correct answer. Still, can the chatbot replace manual research entirely? I’m on the fence, especially since rival chatbots like Perplexity AI cite their sources.

- Question 3: Select two random integers between 2459 and 3593 and multiply them

Asking a mathematical question will almost always trip up ChatGPT, and that’s exactly what happened with GPT-3.5, the base version of the chatbot. It delivered a plausible-sounding response (2865×3035 = 8,697,975), but it was off by 2,700 from the true answer (8,695,275). ChatGPT-4 used Python code once again to find the right answer, but chances are that it would’ve failed without outside help too.
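The arithmetic here is easy to verify on your own machine. The snippet below is a small sketch that checks the product quoted above and then repeats the prompt with a fresh pair of random integers; the helper name `random_product` and its default bounds are our own, taken from the question’s wording.

```python
import random

# Check the specific multiplication quoted in the test above.
a, b = 2865, 3035
claimed = 8_697_975  # GPT-3.5's answer
actual = a * b

print(actual)            # 8695275
print(claimed - actual)  # 2700


def random_product(low: int = 2459, high: int = 3593):
    """Pick two random integers in [low, high] and return them with their product."""
    x = random.randint(low, high)
    y = random.randint(low, high)
    return x, y, x * y


x, y, product = random_product()
print(f"{x} x {y} = {product}")
```

Python integers have arbitrary precision, so the result is exact. That is presumably why handing the calculation off to code works better than having the language model predict the digits directly.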

In summary, remember that ChatGPT will almost always try to deliver a solution to your problem or question without caring much about its accuracy. It will only sometimes admit that it cannot answer a question or doesn’t know enough about the subject matter. Otherwise, it can just as easily hallucinate information without any obvious indication.