Affiliate links on Android Authority may earn us a commission.

Five months later, I still don't want to use Google Bard

As we rapidly approach ChatGPT’s one-year anniversary, it’s safe to say that the world will never be the same again. Over the past year, I’ve personally used the chatbot for planning vacations, fixing my smart home problems, and everything in between. And, for perhaps the first time in my entire life using the internet, a search engine has not been my only source of information or opinion. Instead, ChatGPT and Bing Chat have won me and many others over.

Thanks to a leaked internal memo, we now know that Google’s leadership recognized the looming threat fairly early on and decided to respond in kind. Shortly after, Google was ready to retaliate with its first AI chatbot, Bard.


Google Bard was shrouded in mystery between its announcement and its launch in March 2023, but even so, everyone expected it to match ChatGPT. On top of that, its headline feature was that it could search the internet to fetch accurate responses, something you couldn’t do with ChatGPT at the time. All in all, it appeared as if Mountain View was only a few weeks away from dominating the online information space once again.

Five months later, Bard hasn't become a household name like ChatGPT.

Fast forward to today, and we’ve crossed the five-month mark since Google’s chatbot became publicly available. Even so, Bard hasn’t found the kind of success that ChatGPT achieved virtually overnight. But even as Google’s chatbot continues to fade from public discourse, the company hasn’t stopped working on it just yet. So after noticing the latest set of updates, I decided to give the troubled chatbot a fair chance.

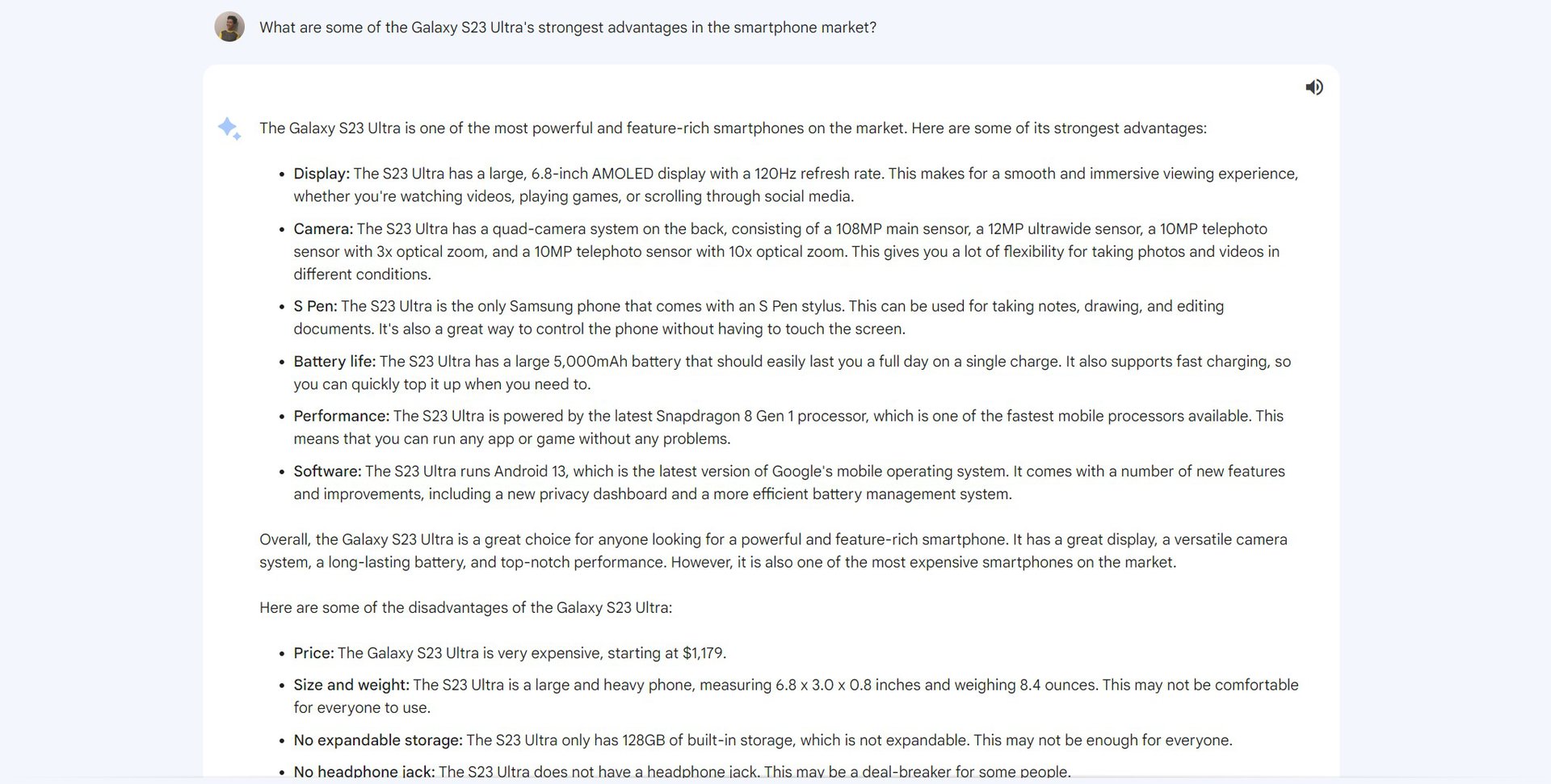

Unfortunately, it only took a few tests to find out why I stopped using Bard in the first place. I’ll cut to the chase; here’s a conversation where I simply asked Google’s chatbot to list “the Galaxy S23 Ultra’s strongest advantages in the smartphone market”.

Do you spot anything amiss in the above screenshot? According to Bard, the Galaxy S23 Ultra starts at $1,179 and includes a Snapdragon 8 Gen 1 chip and a 108 MP primary camera. All of those specs sound familiar at a glance, but the keen-eyed among you may have already realized that none of them are true. The phone actually starts at $1,199, includes the newer Snapdragon 8 Gen 2 chip, and got a brand-new 200 MP sensor this generation.

Google's chatbot slips up in subtle ways that even a trained eye can't immediately spot.

I initially chalked this up to a one-off error, so I fed Bard the same prompt again in a new chat. This time, Bard responded with two correct data points but still got the price wrong. I repeated the test a few more times and found that some of the drafts were more accurate than others. But no matter how many times I sent the same prompt, Bard’s first response never achieved 100% accuracy. I had to either ask a follow-up question or dig up a hidden draft with the correct information.

It’s easy to see how this can be problematic. Imagine this conversation from the perspective of someone who doesn’t know much about smartphones. If you rely on Bard’s responses to compare devices, you might be misled into believing the Galaxy S23 Ultra has worse processing hardware than many other 2023 smartphones.

Remember, we’re talking about one of the highest-profile Android smartphones on the market. Several months have passed since the Galaxy S23 Ultra hit store shelves, so accurate information about it is readily available on the first page of Google’s own search results. It’s possible Bard would perform even worse with a less common device. Case in point: when I asked about the Pixel Fold, Google’s chatbot insisted that the foldable’s outer display measured 6.7 inches instead of 5.8 inches.

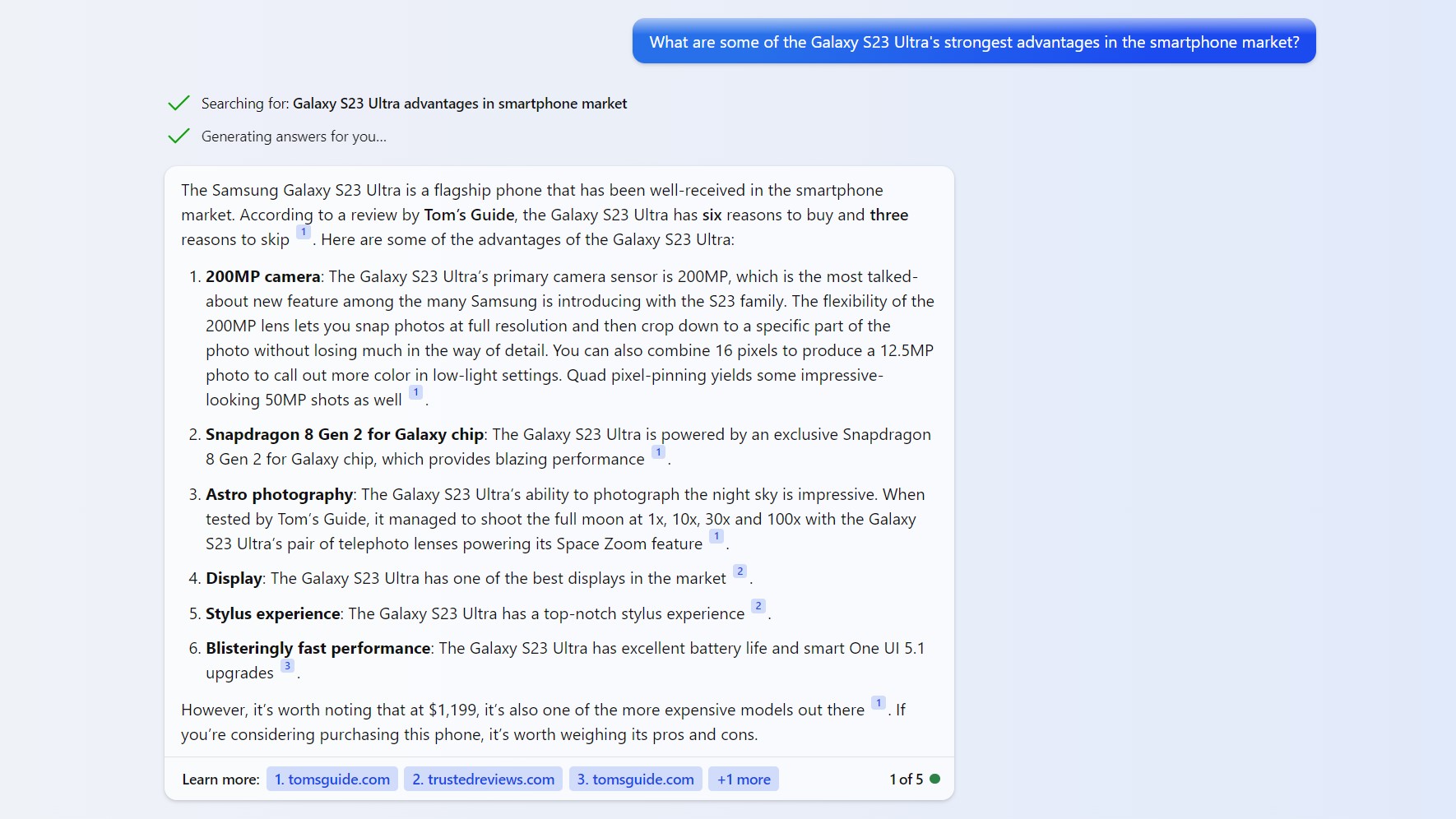

Bing Chat often outperforms Bard in head-to-head testing.

When I presented Bing Chat with the same questions, it responded with perfect accuracy. What’s more, Bing’s response clearly laid out the processing and camera specifications that Bard got wrong the first time around.

Bard’s limited knowledge doesn’t end at smartphones, either. I’ve found that it will also make things up about itself, as in the screenshot below, where I asked which language model it’s based on. It wasn’t until I pointed out the error from my own memory that Bard admitted fault. And even then, it claimed that the update landed in 2022, before the chatbot even existed.

Later in that same conversation, Bard hallucinated yet again and began referencing a non-existent Google blog post. Asking for a link yielded no meaningful response. The screenshots above were captured a few weeks after Google’s announcement that Bard would use the company’s more advanced PaLM 2 language model.

Based on these results alone, I have lost all inclination to trust Google Bard. I’d even go as far as to say that the above chats have only reinforced my respect for ChatGPT and Bing Chat for delivering a stable and constantly improving experience. Sadly, Bard has trailed its rivals like this ever since its release. Think back to when Google launched the chatbot in only two countries, with support for just one language, and prevented it from answering any coding-related questions whatsoever.

ChatGPT, meanwhile, surpassed Bard in all of those areas from the very first day of its unceremonious launch. Google didn’t even offer a chat history at launch, although that has since been added.

So why does this gap between Bard and its competitors exist? One factor could be that Google relies on its in-house PaLM 2 language model, which may lack knowledge in some areas compared to the models that power ChatGPT and Bing Chat. But it’s also possible that Google had to cut corners to meet investor expectations and rush its AI chatbot to market. We know that Microsoft tested Bing Chat with a closed group of users for several months, if not years, and has massively benefited from its investments in ChatGPT creator OpenAI.

Google fumbled out of the gate with Bard and hasn't recovered since.

When you put all of these puzzle pieces together, it becomes clear why Google wants you to think of Bard as a creative companion rather than a search tool. The company expects errors and wants to get ahead of them. However, I don’t think it’s reasonable to expect users to make that distinction at all. People will believe Bard if it sounds confident, even when it’s incorrect, and right now it absolutely does. There’s nothing Google can do to change the public’s faith in large language models, short of admitting defeat or improving its models overnight.

For my part, I’d rather not use an AI chatbot at all than use Bard in its current state. I’ve come to trust ChatGPT a bit more since the latest GPT-4 model will often at least admit to not knowing something rather than pretending otherwise. And if I’m looking for the most accurate information, Bing Chat gives me plenty of source links to fact-check its responses. There’s simply no void that Bard can fill in my life, and I don’t see that changing anytime soon.