
AI Overviews fiasco: Google responds to backlash, promises improvements

- Google has responded to the AI Overviews fiasco, addressing concerns over bizarre and dangerous search results.

- Google said many of the widely shared screenshots were fake but acknowledged some genuine errors caused by misinterpreted queries and satirical content.

- In response, Google is improving its detection of nonsensical queries, limiting satire and humor content, and refining the triggers for AI Overviews.

Google’s Search Generative Experience (SGE), launched last year to enhance search queries with AI-generated summaries and answers, recently culminated in the rollout of its new AI Overviews feature in the United States. The new search tool, designed to revolutionize search by providing concise AI-generated summaries for complex queries, found itself in hot water just a week after its US launch.

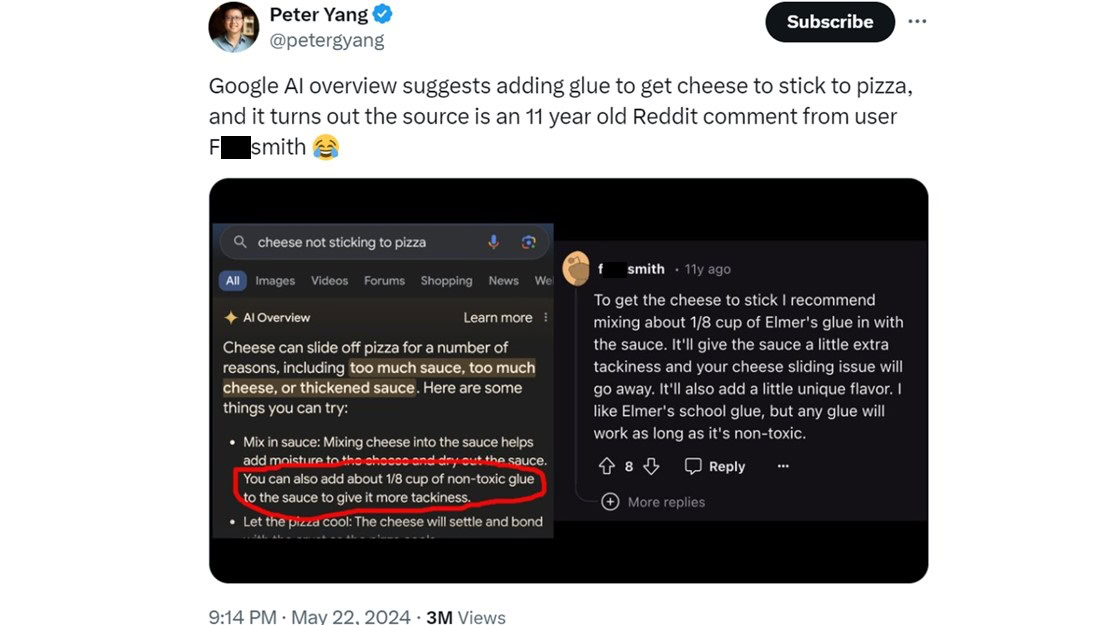

The Internet was flooded with AI Overview responses ranging from bizarre to concerning. Some of the most notable ones advised users to eat rocks for essential nutrients, use glue to secure cheese on pizza, and drink urine to address kidney stones. In one particularly alarming instance, the AI suggested jumping off a bridge as a solution for depression.

What went wrong with AI Overviews?

In a blog post today, Google responded to the controversy and detailed the steps it is taking to address the issue. The company acknowledged that despite extensive pre-launch testing, the real-world application of AI Overviews with millions of novel and unexpected searches exposed flaws that pre-launch tests did not anticipate.

Google has attributed some of these errors to the AI’s reliance on satirical articles and troll posts from forums such as Reddit. The company has also suggested that many of the widely shared screenshots of these erroneous results were fake, claiming that those particular AI Overviews never actually appeared.

Google further explains that AI Overviews is not simply generating output based on training data. The feature is integrated with Google’s core web ranking systems and is designed to prioritize identifying relevant, high-quality results from the web index. However, the company does admit that the feature is not flawless (duh). It can still misinterpret queries, misunderstand nuances, or encounter limited information, which led to the errors that we all saw.

What is Google doing about it?

To address these issues, Google claims to have implemented several technical improvements. These include better detection mechanisms for “nonsensical” queries, limiting the inclusion of satire and humor content, restricting the use of user-generated content in potentially misleading responses, and refining the triggers for when AI Overviews should appear.

Enhanced guardrails have also been introduced for critical topics such as news and health to ensure higher accuracy and reliability. The company further asserts that it is actively monitoring feedback and external reports to swiftly remove any AI Overviews that violate content policies.

Basically, it looks like AI Overviews aren’t going away anytime soon. The company remains optimistic about AI’s potential in search and is confident that continuous improvements will lead to a more reliable and beneficial tool for users. That being said, if you’re not too keen on making Google’s AI a part of your search process, there are ways to turn it off completely.