A closer look at Arm's machine learning hardware

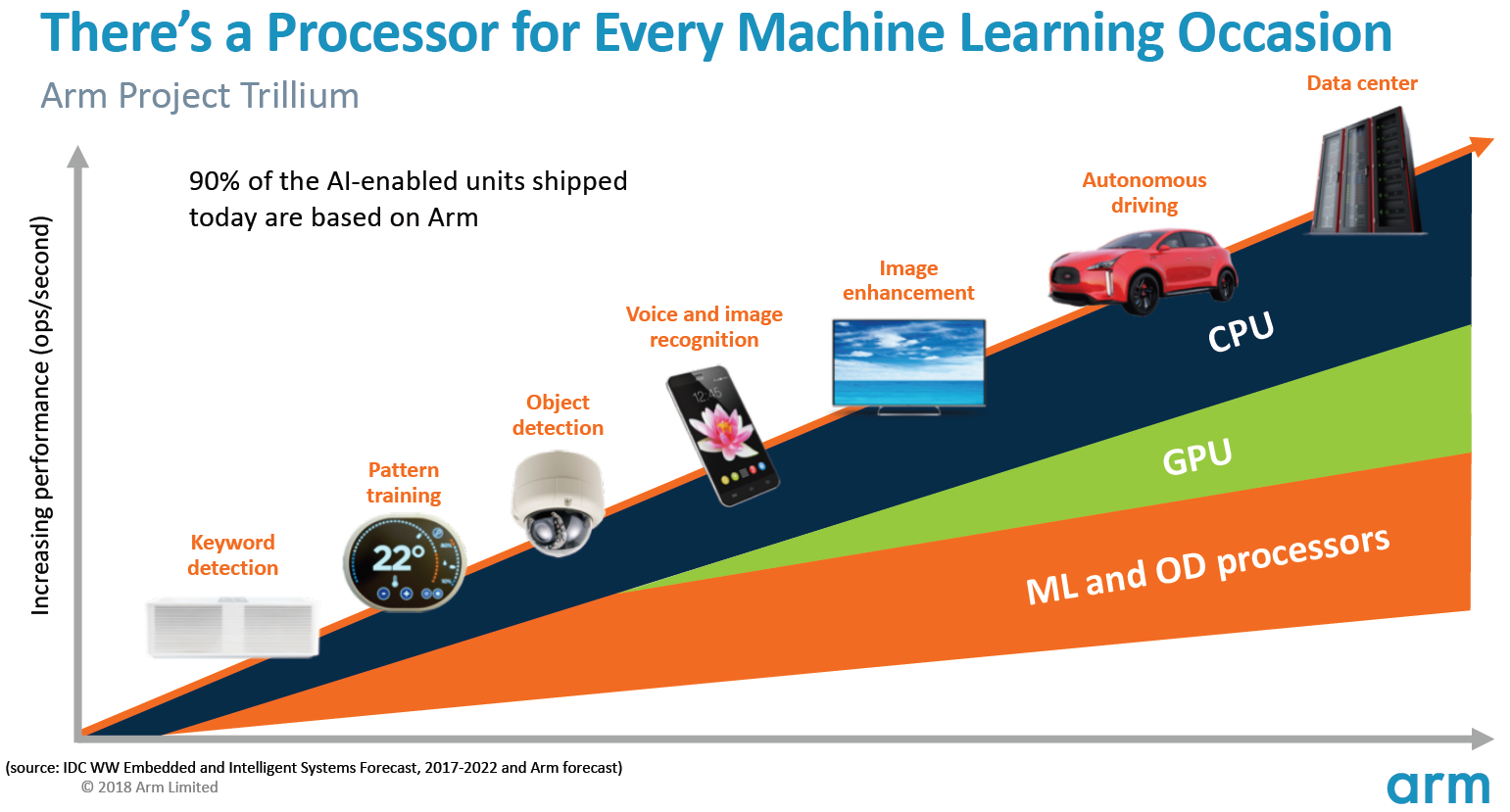

Back at the start of 2018, Arm announced its first batch of dedicated machine learning (ML) hardware. Under the name Project Trillium, the company unveiled a dedicated ML processor for products like smartphones, along with a second chip designed specifically to accelerate object detection (OD) use cases. Let’s delve deeper into Project Trillium and the company’s broader plans for the growing machine learning hardware market.

It’s important to note that Arm’s announcement relates entirely to low-power inference hardware. Its ML and OD processors are designed to efficiently run trained machine learning models on consumer-level hardware, rather than to train algorithms on huge datasets as Google’s Cloud TPUs are designed to do. To start, Arm is focusing on what it sees as the two biggest markets for ML inference hardware: smartphones and internet protocol/surveillance cameras.

New machine learning processor

Despite the new dedicated machine learning hardware announced with Project Trillium, Arm remains dedicated to supporting these types of tasks on its CPUs and GPUs too, with optimized dot product functions inside its latest CPU and GPU cores. Trillium augments these capabilities with more heavily optimized hardware, enabling machine learning tasks to run with higher performance and much lower power draw. But Arm’s ML processor is not just an accelerator; it’s a processor in its own right.

The processor boasts a peak throughput of 4.6 TOPs in a power envelope of 1.5 W, making it suitable for smartphones and even lower-power products. Based on a 7 nm implementation, this gives the chip a power efficiency of roughly 3 TOPs/W, a big draw for the energy-conscious product developer. For comparison, a typical mobile device might only be able to offer around 0.5 TOPs of mathematical grunt.
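The efficiency figure follows directly from the two quoted numbers. As a quick check, the sketch below divides throughput by power and also converts the result into energy per operation. The function names are illustrative, and the only inputs are the peak figures quoted above, so the result describes peak conditions rather than real workloads.

```python
# Figures quoted in the article for a 7 nm implementation.
PEAK_TOPS = 4.6    # peak throughput, tera-operations per second
POWER_WATTS = 1.5  # power envelope in watts


def tops_per_watt(tops: float, watts: float) -> float:
    """Throughput delivered per watt of power."""
    return tops / watts


def picojoules_per_op(tops: float, watts: float) -> float:
    """Energy per operation in picojoules (a watt is one joule per second)."""
    ops_per_second = tops * 1e12
    return watts / ops_per_second * 1e12


efficiency = tops_per_watt(PEAK_TOPS, POWER_WATTS)
energy = picojoules_per_op(PEAK_TOPS, POWER_WATTS)
print(f"{efficiency:.2f} TOPs/W, {energy:.3f} pJ per operation")
```

This works out to about 3.07 TOPs/W, which the quoted 3 TOPs/W rounds down, or roughly a third of a picojoule per operation at peak.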

Interestingly, Arm’s ML processor takes a different approach from some smartphone chip manufacturers, which repurposed digital signal processors (DSPs) to help run machine learning tasks on their high-end processors. During a chat at MWC, Jem Davies, Arm VP, fellow, and GM of the Machine Learning Group, mentioned that buying a DSP company was one option for getting into this hardware market, but that the company ultimately decided on a ground-up solution specifically optimized for the most common operations.

Arm's ML processor boasts a 4-6x performance boost over typical smartphones, along with reduced power consumption.

Arm’s ML processor is designed exclusively for 8-bit integer operations and convolutional neural networks (CNNs). It specializes in mass multiplication of small, byte-sized data, which should make it faster and more efficient than a general-purpose DSP at these types of tasks. CNNs are widely used for image recognition, probably the most common ML task at the moment. If you’re wondering why 8-bit, Arm sees 8-bit data as the sweet spot for accuracy versus performance with CNNs, and the development tools are the most mature. In addition, the Android NN framework only supports INT8 and FP32, the latter of which can already be run on CPUs and GPUs if you need it.
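To make the accuracy-versus-performance trade-off concrete, here is a minimal sketch of symmetric 8-bit quantization applied to a small dot product. This is a generic textbook scheme, not Arm’s documented method; the helper names and sample values are made up for illustration.

```python
def scale_for(values):
    """Pick a scale so the largest magnitude maps to 127 (symmetric scheme)."""
    return max(abs(v) for v in values) / 127.0


def quantize(values, scale):
    """Map floats to signed 8-bit integers, clamped to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


# Hypothetical weights and inputs, chosen only for illustration.
weights = [0.50, -0.25, 0.75, -1.00]
inputs = [1.00, 2.00, -1.50, 0.50]

w_scale = scale_for(weights)
x_scale = scale_for(inputs)
qw = quantize(weights, w_scale)
qx = quantize(inputs, x_scale)

# All multiplies and adds happen on small integers.
int_acc = sum(a * b for a, b in zip(qw, qx))

# A single floating-point rescale at the end recovers an approximate result.
approx = int_acc * w_scale * x_scale
exact = sum(w * x for w, x in zip(weights, inputs))
print(f"exact={exact:.4f} approx={approx:.4f}")
```

The integer result lands close to the floating-point one while each operand needs only one byte of storage and bandwidth, which is the trade-off the 8-bit design bets on.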

The biggest performance and energy bottleneck, particularly in mobile products, is memory bandwidth, and mass matrix multiplication requires lots of reading and writing. To address this issue, Arm included a chunk of internal memory to speed up execution. The size of this memory pool is variable, and Arm expects to offer a selection of optimized designs for its partners, depending on the use case. We’re looking at tens of kilobytes of memory for each execution engine, capping out at around 1 MB in the largest designs. The chip also uses lossless compression on the ML weights and metadata to save up to 3x in bandwidth.

Arm’s ML processor is designed for 8-bit integer operations and convolutional neural networks.

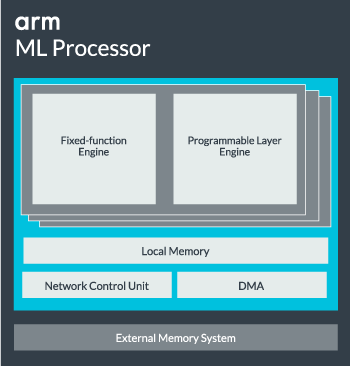

The ML processor can be configured with anywhere from one to 16 execution engines for increased performance. Each comprises an optimized fixed-function engine as well as a programmable layer engine. The fixed-function engine handles convolution calculations with a 128-wide multiply-accumulate (MAC) unit, while the programmable layer engine, a derivative of Arm’s microcontroller technology, handles the memory and optimizes the data path for the machine learning algorithm being run. The name may be a bit misleading, as this isn’t a unit exposed to the programmer directly for coding; instead, it is configured at the compiler stage to optimize the MAC unit.
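As a rough mental model of what a 128-wide MAC unit does, the sketch below computes one convolution output by feeding the multiply-accumulate work through in chunks of up to 128 pairs. How Arm actually maps convolutions onto its hardware is not described here, so the chunking scheme, the function name, and the example layer shape are all assumptions.

```python
MAC_WIDTH = 128  # lanes in the MAC unit, as quoted in the article


def mac_dot(weights, activations, width=MAC_WIDTH):
    """Accumulate a dot product in chunks of at most `width` pairs.

    Returns the accumulated sum and the number of passes through the unit.
    """
    acc = 0
    passes = 0
    for start in range(0, len(weights), width):
        w_chunk = weights[start:start + width]
        a_chunk = activations[start:start + width]
        acc += sum(w * a for w, a in zip(w_chunk, a_chunk))
        passes += 1
    return acc, passes


# Hypothetical layer: one output of a 3x3 kernel over 64 input channels
# needs 3 * 3 * 64 = 576 multiply-accumulates.
kernel_weights = [1] * 576
input_patch = [2] * 576
total, passes = mac_dot(kernel_weights, input_patch)
print(total, passes)
```

In this model a 576-element output takes five passes, the last one only half full, which hints at why layer shapes that are multiples of the unit width use the hardware most efficiently.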

Finally, the processor contains a Direct Memory Access (DMA) unit, to ensure fast direct access to memory in other parts of the system. The ML processor can function as its own standalone IP block with an ACE-Lite interface for incorporation into a SoC, or operate as a fixed block outside of a SoC. Most likely, we will see the ML core sitting off the memory interconnect inside a SoC, just like a GPU or display processor. From here, designers can closely align the ML core with CPUs in a DynamIQ cluster and share access to cache memory via cache snooping, but that’s a very bespoke solution that probably won’t see use in general workload devices like mobile phone chips.

Fitting everything together

Last year Arm unveiled its Cortex-A75 and A55 CPUs, and high-end Mali-G72 GPU, but it didn’t unveil dedicated machine learning hardware until almost a year later. However, Arm did place a fair bit of focus on accelerating common machine learning operations inside its latest hardware and this continues to be part of the company’s strategy going forward.

Its latest Mali-G52 graphics processor for mainstream devices improves the performance of machine learning tasks by 3.6 times, thanks to the introduction of dot product (Int8) support and four multiply-accumulate operations per cycle per lane. Dot product support also appears in the A75, A55, and G72.
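For readers unfamiliar with Int8 dot product support, the idea is that four 8-bit values are packed into one 32-bit word, and a single operation multiplies four pairs and adds them into a wider accumulator. The sketch below models that behavior in plain Python; it is not the actual Mali or Cortex instruction, and `pack4` and `dot4` are names invented for this example.

```python
import struct


def pack4(values):
    """Pack four signed 8-bit integers into one unsigned 32-bit word."""
    return struct.unpack("<I", struct.pack("<4b", *values))[0]


def dot4(word_a, word_b, acc=0):
    """Multiply four packed int8 pairs and add the products to acc."""
    a = struct.unpack("<4b", struct.pack("<I", word_a))
    b = struct.unpack("<4b", struct.pack("<I", word_b))
    return acc + sum(x * y for x, y in zip(a, b))


a = pack4([1, -2, 3, -4])
b = pack4([5, 6, -7, 8])
result = dot4(a, b, acc=100)
print(result)  # 100 + (5 - 12 - 21 - 32) = 40
```

Doing four multiply-accumulates per operation, rather than one, is where the bulk of the quoted speedup for 8-bit workloads would come from.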

Arm will continue to optimize ML workloads across its CPUs and GPUs too.

Even with the new OD and ML processors, Arm is continuing to support accelerated machine learning tasks across its latest CPUs and GPUs. Its upcoming dedicated machine learning hardware exists to make these tasks more efficient where appropriate, but it’s all part of a broad portfolio of solutions designed to cater to its wide range of product partners.

In addition to offering flexibility across various performance and energy points to its partners – one of Arm’s key goals – this heterogeneous approach is important even in future devices equipped with an ML processor to optimize power efficiency. For example, it may not be worth powering up the ML core to quickly perform a task when the CPU is already running, so it’s best to optimize workloads on the CPU too. In phones, the ML chip is likely to only come into play for longer running, more demanding neural network loads.
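The wake-up trade-off can be expressed as a simple energy comparison. The sketch below is a toy model with entirely hypothetical numbers: it assumes a fixed energy cost to power up the ML core and a per-operation energy cost for each unit, then picks whichever total is lower. Real schedulers weigh latency, thermals, and much more.

```python
def choose_unit(ops, cpu_joules_per_op, ml_joules_per_op, ml_wake_joules):
    """Return 'cpu' or 'ml', whichever spends less energy on this task."""
    cpu_energy = ops * cpu_joules_per_op
    ml_energy = ml_wake_joules + ops * ml_joules_per_op
    return "cpu" if cpu_energy <= ml_energy else "ml"


# Hypothetical costs, for illustration only.
CPU_J_PER_OP = 10e-12  # 10 pJ per operation on an already-running CPU
ML_J_PER_OP = 1e-12    # 1 pJ per operation on the ML core
ML_WAKE_J = 1e-3       # 1 mJ to power the ML core up

short_task = choose_unit(1e6, CPU_J_PER_OP, ML_J_PER_OP, ML_WAKE_J)
long_task = choose_unit(1e10, CPU_J_PER_OP, ML_J_PER_OP, ML_WAKE_J)
print(short_task, long_task)
```

With these made-up figures, a short burst of a million operations stays on the CPU, while a sustained ten-billion-operation workload is worth waking the ML core for.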

From single to multi-core CPUs and GPUs, through to optional ML processors which can scale all the way up to 16 cores (available inside and outside a SoC core cluster), Arm can support products ranging from simple smart speakers to autonomous vehicles and data centers, which require much more powerful hardware. Naturally, the company is also supplying software to handle this scalability.

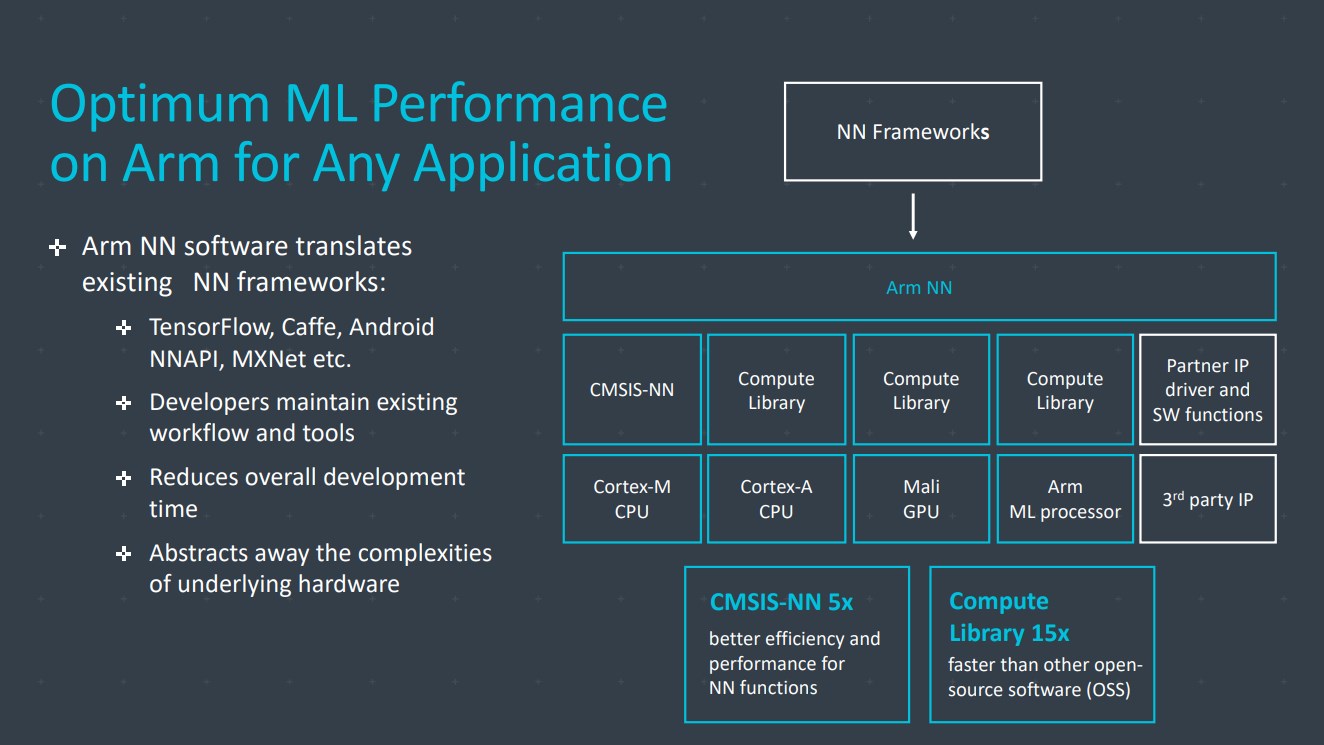

The company’s Compute Library is still the tool for handling machine learning tasks across the company’s CPU, GPU, and now ML hardware components. The library offers low-level software functions for image processing, computer vision, speech recognition, and the like, all of which run on the most applicable piece of hardware. Arm is even supporting embedded applications with its CMSIS-NN kernels for Cortex-M microcontrollers. CMSIS-NN offers up to 5.4 times more throughput and potentially 5.2 times the energy efficiency over baseline functions.

Arm's work on libraries, compilers, and drivers ensures that application developers don't have to worry about the range of underlying hardware.

Such broad possibilities for hardware and software implementation require a flexible software library too, which is where Arm’s Neural Network software comes in. The company isn’t looking to replace popular frameworks like TensorFlow or Caffe; instead, its software translates models from these frameworks into library calls suited to the hardware of any particular product. So if your phone doesn’t have an Arm ML processor, the library will still work by running the task on your CPU or GPU. The aim here is to simplify development by hiding the configuration behind the scenes.
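The fallback behavior described here amounts to walking a preference list and taking the first backend the device actually has. The sketch below illustrates that idea only; the backend names, the preference order, and `pick_backend` are hypothetical and do not reflect Arm’s actual software interface.

```python
# Hypothetical preference order: most specialized hardware first.
PREFERENCE = ["ml_processor", "gpu", "cpu"]


def pick_backend(available, preference=PREFERENCE):
    """Return the first preferred backend that is present on the device."""
    for backend in preference:
        if backend in available:
            return backend
    raise RuntimeError("no supported backend available")


flagship = pick_backend({"cpu", "gpu", "ml_processor"})
midrange = pick_backend({"cpu", "gpu"})
budget = pick_backend({"cpu"})
print(flagship, midrange, budget)
```

The application code calling such a layer stays the same on all three devices; only the backend chosen underneath changes.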

Machine Learning today and tomorrow

At the moment, Arm is squarely focused on powering the inference end of the machine learning spectrum, allowing consumers to run the complex algorithms efficiently on their devices (although the company hasn’t ruled out the possibility of getting involved in hardware for machine learning training at some point in the future). With high-speed 5G internet still years away and increasing concerns about privacy and security, Arm’s decision to power ML computing at the edge rather than focusing primarily on the cloud like Google seems like the correct move for now.

Most importantly, Arm’s machine learning capabilities aren’t being reserved just for flagship products. With support across a range of hardware types and scalability options, smartphones up and down the price ladder can benefit. In the longer term, the company is eying performance targets all the way from tiny IoT up to server class processors too. But even before Arm’s dedicated ML hardware hits the market, modern SoCs utilizing its dot product-enhanced CPUs and GPUs will receive performance- and energy-efficiency improvements over older hardware.

Arm says that Project Trillium machine learning hardware, which remains unnamed, will be landing in RTL form sometime mid-2018. To expedite development, Arm POP IP will offer physical designs for SRAM and the MAC unit optimized for cost-effective 16nm and cutting-edge 7nm processes. We likely won’t see Arm’s dedicated ML and object detection processors in any smartphones this year. Instead, we will have to wait until 2019 to get our hands on some of the first handsets benefiting from Project Trillium and its associated hardware.