Instructions Per Cycle - Gary explains

There was a time when the clock speed of a CPU was the only thing people talked about. Back at the turn of the century, Intel and AMD locked horns in a race to release the first 1GHz desktop CPU. This was mainly a marketing exercise, but it reinforced a false idea about the way a modern CPU core works: that the clock frequency is the most important thing. Well, it isn’t. Many factors determine the overall performance of a CPU core, including the number of instructions it executes per clock cycle. Instructions Per Cycle (IPC) is a key aspect of a CPU’s design, but what is it? How does it affect performance? Let me explain.

Gary also explains:

- What is Bluetooth 5?

- Does bloatware drain your battery?

- Does a 3000 mAh portable power bank charge a 3000 mAh phone?

- What is a VPN?

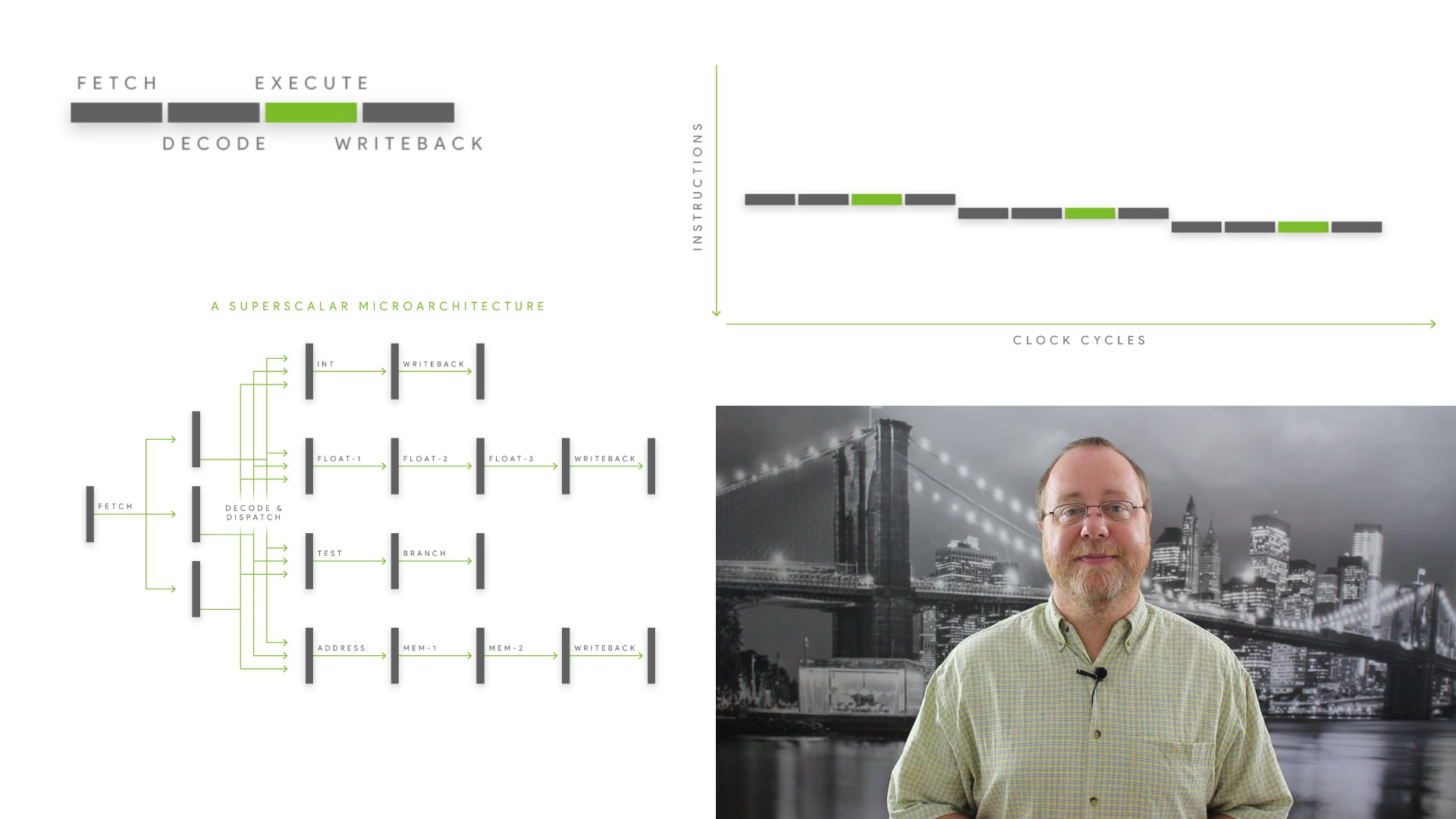

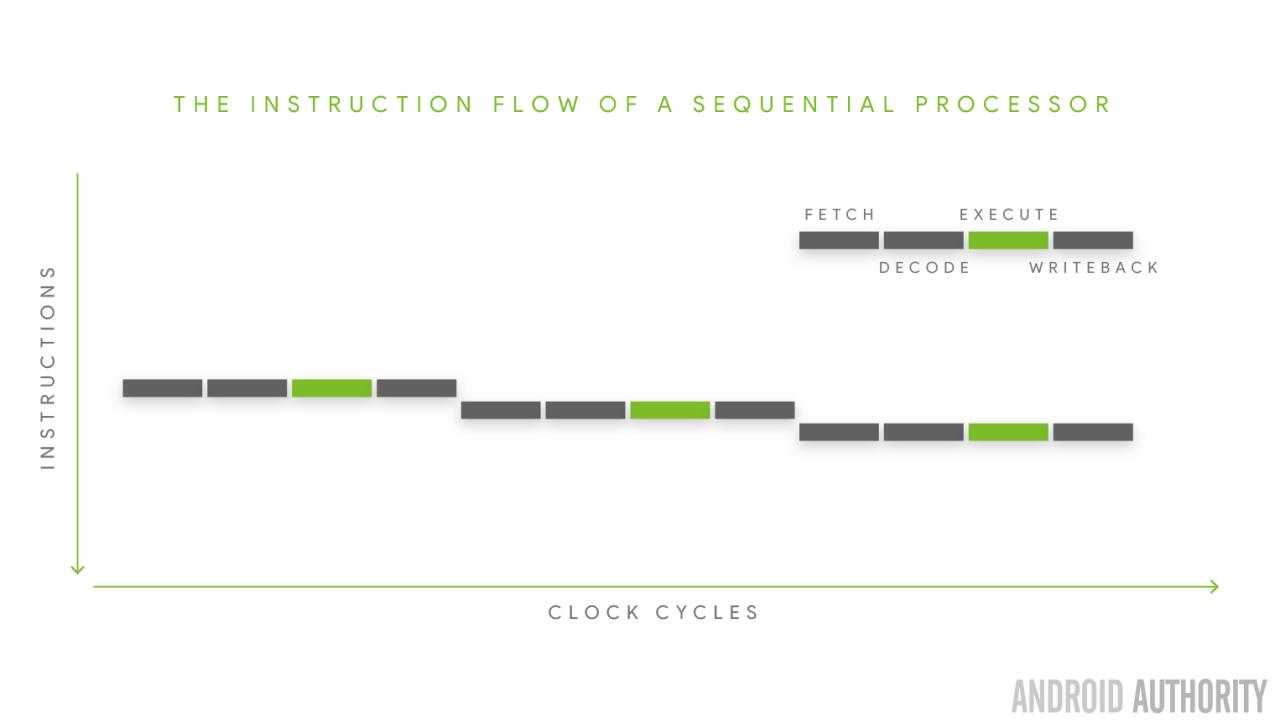

First a bit of history. Back in the day of 8-bit processors, and probably a bit after as well, all CPU instructions were executed sequentially. When one instruction was completed, the next instruction was executed and so on. The steps needed to execute an instruction can be broadly defined as: fetch, decode, execute, and write-back.

So first the instruction needs to be fetched from memory. Then it needs to be decoded to find out what type of instruction it is. When the CPU knows what it needs to do it goes forward and executes the instruction. The resulting changes to the registers and status flags etc. are then written back, ready for the next instruction to be executed.

Some processors of that era would start the fetch of the next instruction while the decode, execute and write-back stages were occurring, but essentially everything was sequential. If each step takes one clock cycle, it takes 4 clock cycles to execute an instruction, which is 0.25 instructions per clock. To get more performance you needed to up the clock speed. For these “simple” designs, the clock speed was the main factor influencing performance.
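The arithmetic behind that 0.25 figure can be sketched in a few lines of Python. This is only a toy model: it assumes every step takes exactly one clock cycle, and the function names and clock speeds are hypothetical, chosen for illustration.

```python
# Toy model: throughput = clock frequency x instructions per cycle (IPC).
# The four one-cycle steps are a simplification, not a description of a real CPU.

STEPS = ("fetch", "decode", "execute", "write-back")


def sequential_ipc(cycles_per_step: int = 1) -> float:
    """IPC when every step of one instruction finishes before the next begins."""
    return 1 / (len(STEPS) * cycles_per_step)


def instructions_per_second(clock_hz: float, ipc: float) -> float:
    """Instructions completed each second at a given clock speed and IPC."""
    return clock_hz * ipc


if __name__ == "__main__":
    ipc = sequential_ipc()  # 1 instruction / 4 cycles = 0.25
    for clock_mhz in (1, 2, 4):  # hypothetical clock speeds
        rate = instructions_per_second(clock_mhz * 1_000_000, ipc)
        print(f"{clock_mhz} MHz -> {rate:,.0f} instructions per second")
```

With the IPC fixed at 0.25, doubling the clock is the only way to double the throughput in this model.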

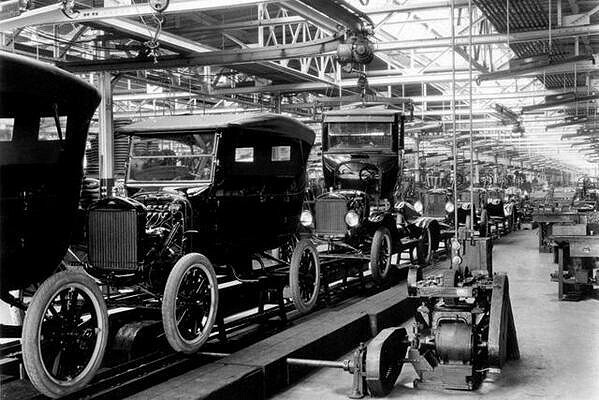

Henry Ford

One of the things Henry Ford is famous for is the use of the assembly line for the mass production of cars. Rather than start one car and work on it through to completion, Ford introduced the idea of working on many cars simultaneously and passing the uncompleted car down the line to the next station, until it was completed. The same idea can be applied to executing CPU instructions.

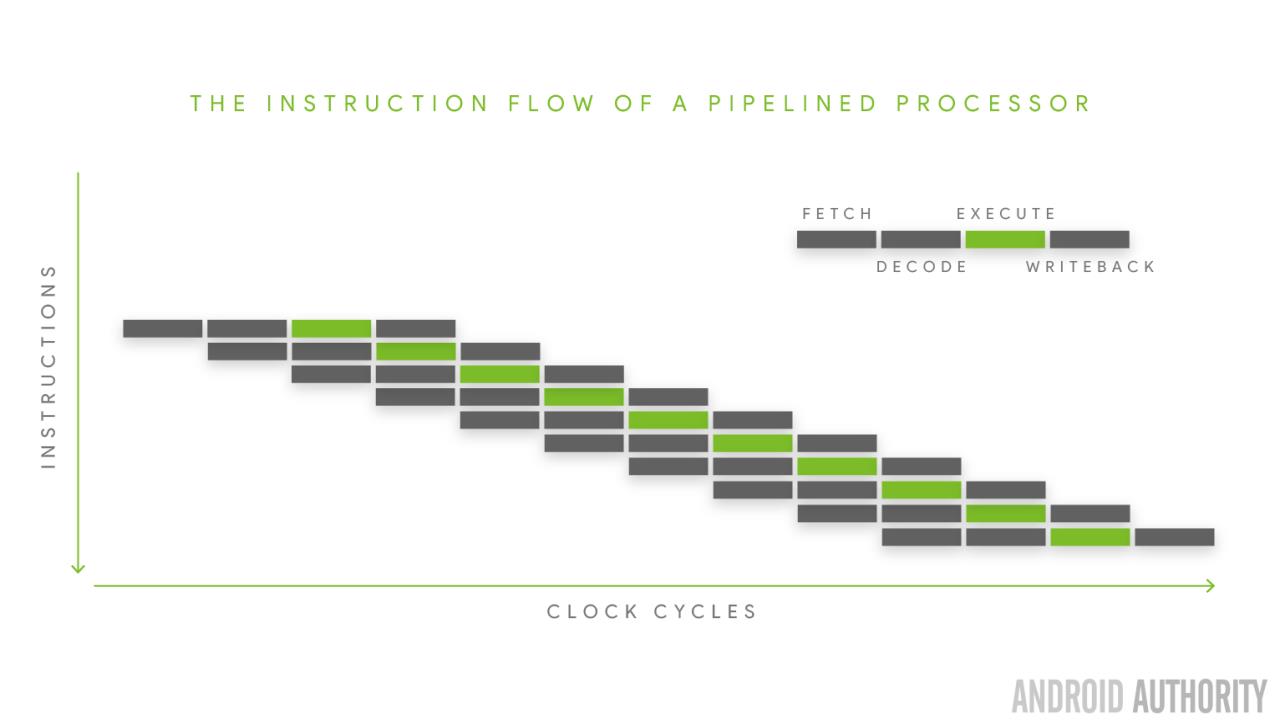

I already mentioned that some 8-bit CPUs would start to fetch the next instruction while the current instruction was being decoded and executed. Now if the processing of instructions can be split into different stages, into a pipeline, then four instructions can be on the assembly line (in the pipeline) simultaneously. Once the pipeline is full there is an instruction in the fetch stage, one in the decode stage, one in the execute stage, and one in the write-back stage.

To execute some instructions the execute stage will need to know the result of the previous execute, which is now in the write-back stage. However, since the result from that instruction is already available, the next instruction is able to use it immediately, rather than waiting for the write-back to occur.

The result of the pipeline approach is that now one instruction can be completed per clock cycle, bumping the IPC from 0.25 to 1.
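To see why the IPC approaches 1 rather than jumping there instantly, here is a minimal sketch that counts cycles for an idealized four-stage pipeline. It assumes one cycle per stage and no stalls; the function names are my own.

```python
def sequential_cycles(n_instructions: int, stages: int = 4) -> int:
    """Each instruction runs through every stage before the next one starts."""
    return n_instructions * stages


def pipelined_cycles(n_instructions: int, stages: int = 4) -> int:
    """The first instruction needs `stages` cycles to fill the pipeline,
    after which one instruction completes on every cycle."""
    if n_instructions == 0:
        return 0
    return stages + (n_instructions - 1)


def ipc(n_instructions: int, cycles: int) -> float:
    return n_instructions / cycles


if __name__ == "__main__":
    for n in (1, 4, 100, 1000):
        seq = ipc(n, sequential_cycles(n))
        pipe = ipc(n, pipelined_cycles(n))
        print(f"{n:>5} instructions: sequential IPC {seq:.2f}, pipelined IPC {pipe:.3f}")
```

For a single instruction the pipeline offers no gain (4 cycles either way), but over 1,000 instructions the fill time of three extra cycles becomes negligible and the IPC is very close to 1.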

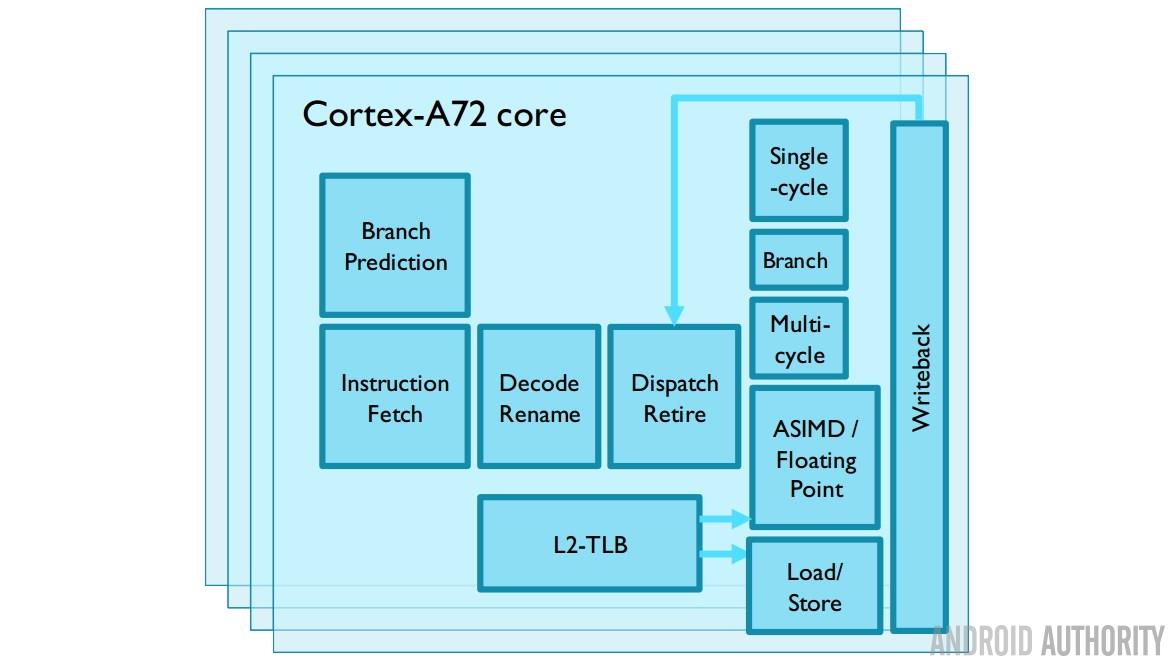

The pipeline idea can be extended further to break down the instruction handling into more than just four stages. These superpipelines have the advantage that complex stages can be broken down into smaller bits. With shorter pipelines each stage does more work, and the slowest stage dictates the speed of the whole pipeline, making it a bottleneck. Any bottlenecks can be alleviated by turning one complex stage into several simpler, but faster, ones. For example, the ARM Cortex-A72 uses a 15-stage pipeline, while the Cortex-A73 uses an 11-stage pipeline.
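The bottleneck effect is easy to put into numbers. In the sketch below the stage latencies are invented purely for illustration, and the model ignores the small overhead that real hardware pays for every extra stage boundary.

```python
def max_clock_ghz(stage_latencies_ps: list[float]) -> float:
    """The clock period can be no shorter than the slowest stage.
    1000 ps = 1 ns, so frequency in GHz = 1000 / period in ps."""
    return 1000.0 / max(stage_latencies_ps)


# Hypothetical four-stage pipeline with one slow 900 ps stage.
short_pipeline = [200, 300, 900, 250]

# Same work, but the 900 ps stage is split into three 300 ps stages.
deeper_pipeline = [200, 300, 300, 300, 300, 250]

if __name__ == "__main__":
    print(f"4 stages: {max_clock_ghz(short_pipeline):.2f} GHz")
    print(f"6 stages: {max_clock_ghz(deeper_pipeline):.2f} GHz")
```

Under these assumed numbers, splitting the one slow stage lifts the achievable clock from roughly 1.1GHz to roughly 3.3GHz without changing what the pipeline does.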

Not all instructions are the same

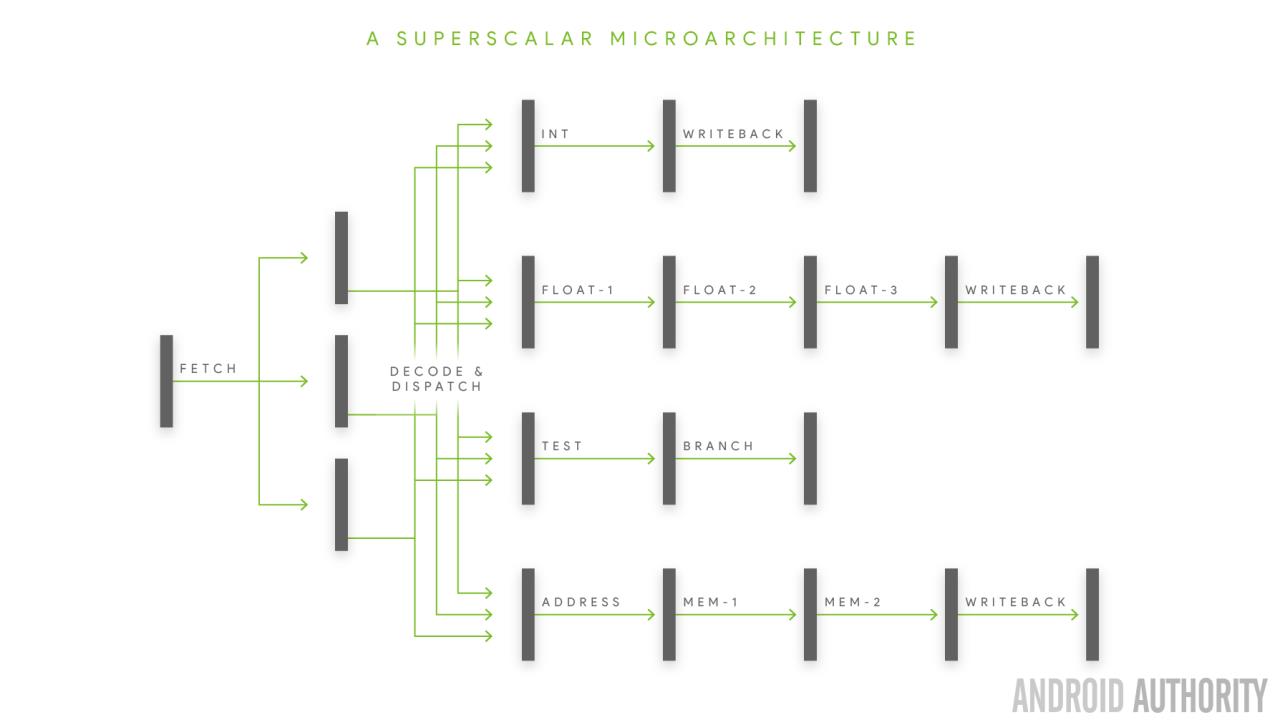

Any CPU instruction set has different classes of instructions. For example reading a value from memory is a different class of instruction than adding two integer numbers, which is in turn different to multiplying two floating point numbers, which is again different to testing if a condition is true, and so on.

Multiplying two floating point numbers is slower than adding two integers, or loading a value into a register. So the next step to improving performance is to split the execution engine into separate units which can run in parallel. This means that while a slow floating point multiply is occurring, a quick integer operation can also be dispatched and completed. Since there are now two instructions in the execute engine at once, this is known as instruction-level parallelism (ILP).

When applied across the pipeline it means that these superscalar processors can have multiple decode units as well as multiple execution units. What is interesting is that the parallelism doesn’t need to be confined to different classes of instructions, but there can also be two load/store units, or two floating point engines and so on. The more execution units the greater the ILP. The greater the ILP the higher the performance.

The length of the pipeline is sometimes referred to as the processor’s depth, while its ILP capabilities are known as its width. Deep and wide seems to offer a lot of performance benefits. A wide CPU can complete more than one instruction per cycle. So we started at an IPC of 0.25. That went up to 1 and now it can be anywhere from 2 to 8 depending on the CPU.

All that glitters is not gold

At first glance it would seem that creating the deepest and widest processor possible would yield the highest performance gains. However there are some limitations. Instruction-level parallelism is only possible if one instruction doesn’t depend on the result of a previous one that hasn’t finished yet. Here is a simplified example:

x = y * 3.14;

z = x + 2;

When compiled into machine code the instructions for the multiplication have to occur first and then the add. While the compiler could make some optimizations, if they are presented to the CPU in the order written by the programmer then the add instructions can’t occur until after the multiply. That means that even with multiple execution units the integer unit can’t be used until the floating point unit has done its job.

This creates what is called a bubble, a hiccup in the pipeline, and the IPC drops. In fact it is very rare (if not impossible) for a CPU to run at its full theoretical IPC. This means that ILP also has practical limitations, often referred to as the ILP Wall.
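A toy model makes the bubble visible. The sketch below imagines a two-wide, in-order CPU in which every instruction takes one cycle and a result can only be used in the cycle after it is produced. The `Instr` type and the register names are hypothetical, and real hardware is considerably more subtle.

```python
from typing import NamedTuple


class Instr(NamedTuple):
    dest: str               # register written by the instruction
    srcs: tuple[str, ...]   # registers read by the instruction


def in_order_cycles(program: list[Instr], width: int = 2) -> int:
    """Issue up to `width` instructions per cycle, strictly in program order.
    An instruction that reads a value written earlier in the same cycle
    must wait, which leaves an issue slot empty (a bubble)."""
    cycles = 0
    i = 0
    while i < len(program):
        written_this_cycle: set[str] = set()
        issued = 0
        while i < len(program) and issued < width:
            instr = program[i]
            if any(src in written_this_cycle for src in instr.srcs):
                break  # dependency: stop issuing for this cycle
            written_this_cycle.add(instr.dest)
            issued += 1
            i += 1
        cycles += 1
    return cycles


# x = y * 3.14; z = x + 2   (the second instruction needs x)
dependent = [Instr("x", ("y",)), Instr("z", ("x",))]
# x = y * 3.14; w = v + 2   (no shared registers)
independent = [Instr("x", ("y",)), Instr("w", ("v",))]

if __name__ == "__main__":
    for name, prog in (("dependent", dependent), ("independent", independent)):
        cycles = in_order_cycles(prog)
        print(f"{name}: {cycles} cycle(s), IPC {len(prog) / cycles:.1f}")
```

The independent pair fills both issue slots in one cycle (IPC 2), while the dependent pair takes two cycles (IPC 1), even though the hardware width is the same.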

There is also another problem with ILP. Computer programs aren’t linear. In fact they jump about all over the place. As you tap on the user interface in an app the program needs to jump to one place or another to execute the relevant code. Also things like loops cause the CPU to jump, backwards to repeat the same section of code, again and again, and then to jump out of the loop when it completes.

The problem with branching is that the pipeline is being filled preemptively with the next set of instructions. When the CPU branches then all the instructions in the pipeline could be the wrong ones! This means that the pipeline needs to be emptied and re-filled. To minimize this the CPU needs to predict what will happen at the next branch. This is called branch prediction. The better the branch prediction the better the performance.

Out-of-order

You have got to love the English language: “out of order” can mean that something is broken, but it can also mean that something was done in a different sequence than originally specified. Since there can be bubbles in the pipeline, it would be good if the CPU could scan ahead and see if there are any instructions it could use to fill those gaps. Of course this means that the instructions aren’t being executed in the order specified by the programmer (and the compiler).

This is OK, as long as the CPU can guarantee that executing the instructions in a different order won’t alter the functionality of the program. To do this the CPU needs to run dependency analysis on potential instructions that will be executed out-of-order. If one of these instructions loads a new value into a register that is still being used by a previous group of instructions then the CPU needs to create a copy of the registers and work on both sets separately. This is known as register renaming.
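The idea behind register renaming can be sketched as a simple mapping from the registers named in the program to a larger pool of physical registers. This is a simplified illustration with hypothetical register names; real CPUs also have to recycle physical registers, which the sketch ignores.

```python
from itertools import count

# Each instruction is (destination register, source registers).
Program = list[tuple[str, tuple[str, ...]]]


def rename(program: Program) -> Program:
    """Give every write a fresh physical register (p0, p1, ...).
    Reads use whichever physical register currently holds the value."""
    next_physical = count()
    mapping: dict[str, str] = {}
    renamed: Program = []
    for dest, srcs in program:
        physical_srcs = []
        for src in srcs:
            if src not in mapping:  # value that existed before the program
                mapping[src] = f"p{next(next_physical)}"
            physical_srcs.append(mapping[src])
        mapping[dest] = f"p{next(next_physical)}"  # fresh register per write
        renamed.append((mapping[dest], tuple(physical_srcs)))
    return renamed


program: Program = [
    ("r1", ("r2",)),  # r1 = f(r2)
    ("r3", ("r1",)),  # r3 = g(r1), still needs the old r1
    ("r1", ("r4",)),  # r1 = h(r4), overwrites r1
]

if __name__ == "__main__":
    for before, after in zip(program, rename(program)):
        print(f"{before} -> {after}")
```

After renaming, the third instruction writes `p4` rather than the `p1` that the second instruction is still reading, so it can safely run early without clobbering the old value.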

The problem with out-of-order execution, dependency analysis and register renaming is that it is complex. It takes a lot of silicon space and, because it is used for every instruction, all the time, it can be a power hog. As a result not all CPUs have out-of-order capabilities. For example the ARM Cortex-A53 and the Cortex-A35 are “in order” processors. This means that they use less power than their bigger siblings, like the Cortex-A57 or Cortex-A73, but they also have lower performance levels. It is the old trade-off between power consumption and performance.

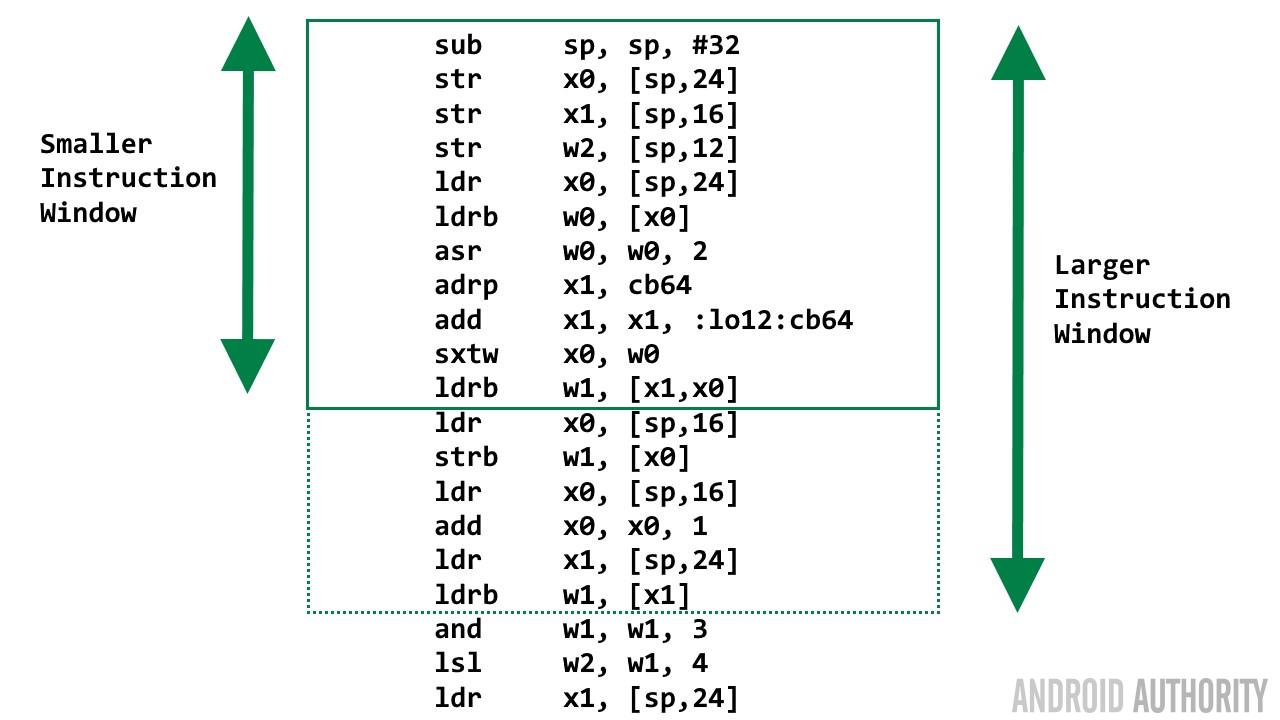

Instruction window

When looking for instructions to execute out of order, the CPU needs to scan ahead in the pipeline. How far ahead it scans is known as the instruction window. A larger instruction window gives a higher performance per clock cycle, a greater IPC. However it also means a greater silicon area and higher power consumption. It also presents problems for CPU designers: the larger the instruction window, the harder it is to get the internal timings right, which means the peak clock frequency will be lower. CPUs with larger instruction windows need more internal resources. There need to be more register renaming resources, the issue queues need to be longer and the various internal buffers need to be increased.
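To get a feel for how the window size affects IPC, here is a rough simulation. It assumes single-cycle instructions, a two-wide CPU, a program in which each destination name is written only once, and sources that refer only to earlier results or to external inputs. The function and the sample program are my own illustration, not a model of any real core.

```python
Program = list[tuple[str, tuple[str, ...]]]  # (destination, sources)


def ooo_cycles(program: Program, window: int, width: int = 2) -> int:
    """Each cycle, look at the oldest `window` waiting instructions and issue
    up to `width` of them whose inputs are already available."""
    produced_here = {dest for dest, _ in program}
    done: set[str] = set()
    pending = list(range(len(program)))
    cycles = 0
    while pending:
        issued = []
        for idx in pending[:window]:
            _, srcs = program[idx]
            ready = all(s in done or s not in produced_here for s in srcs)
            if ready and len(issued) < width:
                issued.append(idx)
        for idx in issued:  # results become visible in the next cycle
            done.add(program[idx][0])
            pending.remove(idx)
        cycles += 1
    return cycles


# A chain of four dependent operations followed by four independent ones.
program: Program = [
    ("a1", ("in",)), ("a2", ("a1",)), ("a3", ("a2",)), ("a4", ("a3",)),
    ("b1", ("in",)), ("b2", ("in",)), ("b3", ("in",)), ("b4", ("in",)),
]

if __name__ == "__main__":
    for window in (1, 2, 8):
        cycles = ooo_cycles(program, window)
        print(f"window {window}: {cycles} cycles, IPC {len(program) / cycles:.2f}")
```

With a window of 2 the CPU cannot see past the dependent chain and needs 6 cycles; with a window of 8 it can pair each chain step with an independent instruction and finishes in 4, which is the full IPC of 2 for this width.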

This means that CPU designers have a choice: work with a smaller instruction window and aim for high clock frequencies, less silicon, and less power consumption; or work with a larger instruction window, with lower clock frequencies, more silicon and greater power consumption.

Since larger instruction windows mean lower clock frequencies, more silicon (which means higher costs), and greater power consumption, you might think that the choice would be easy. However it isn’t, because although the clock frequency is lower, the IPC is higher. And although the power consumption is higher, the CPU has a greater chance of going to idle quicker, which saves power in the long run.

ARM, Apple, Qualcomm, Samsung etc

ARM licenses its CPU designs (i.e. Intellectual Property or IP) to its customers, who then in turn build their own chips. So a processor like the Qualcomm Snapdragon 652 contains four ARM Cortex-A72 cores and four ARM Cortex-A53 cores, in a big.LITTLE arrangement. However ARM also grants some OEMs (via another license) the right to design their own ARM architecture cores, with the condition that the designs are fully compatible with the ARM instruction set. These are known as “architectural licenses”. Such licenses are held by Qualcomm, Apple, Samsung, NVIDIA and HUAWEI.

In general when ARM designs an out-of-order CPU core it opts for a smaller instruction window and higher clock speeds. The Cortex-A72 is capable of running at 2.5GHz, while the Cortex-A73 is able to reach 2.8GHz. The same is probably true of the Samsung Mongoose core, it can peak at 2.6GHz. However it looks like Qualcomm and Apple are going with the larger instruction window approach.

Qualcomm and Apple aren’t very forthcoming about the internal details of their CPUs, unlike ARM. But looking at the clock frequencies we see that the Kryo core in the Snapdragon 820 has a peak clock frequency of 2.15GHz. Now that isn’t particularly low, but it is lower than the 2.5GHz-2.8GHz of the ARM and Samsung cores. However the performance of the Kryo core is at least equal to, if not better than, that of the ARM and Samsung cores. This means that the Kryo has a higher IPC, greater ILP and probably a larger instruction window.
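How much higher would the IPC need to be? Treating performance as simply clock frequency multiplied by IPC, a lower-clocked core matches a higher-clocked one when its IPC is larger by the ratio of the two clock speeds. The sketch below applies that to the peak frequencies quoted above; it is a back-of-the-envelope estimate that assumes both cores run at peak clocks and ignores caches, memory and everything else that affects real benchmarks.

```python
def required_ipc_ratio(slower_clock_ghz: float, faster_clock_ghz: float) -> float:
    """How many times higher the slower-clocked core's IPC must be
    to complete the same number of instructions per second."""
    return faster_clock_ghz / slower_clock_ghz


KRYO_GHZ = 2.15  # peak clock quoted for the Kryo core

if __name__ == "__main__":
    for rival, clock_ghz in (("Cortex-A72", 2.5), ("Mongoose", 2.6), ("Cortex-A73", 2.8)):
        ratio = required_ipc_ratio(KRYO_GHZ, clock_ghz)
        print(f"To match a {clock_ghz}GHz {rival}, Kryo needs about {ratio:.2f}x the IPC")
```

On these assumptions the Kryo would need somewhere between roughly 1.16 and 1.30 times the IPC of the higher-clocked cores just to draw level.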

| CPU Core | Clock speed | Pipeline | Instruction window & width |
|---|---|---|---|
| ARM Cortex-A72 | 2.5GHz | 15 | Smaller instruction window, narrower dispatch, higher clock speed |
| ARM Cortex-A73 | 2.8GHz | 11 | Smaller instruction window, narrower dispatch, higher clock speed |
| Samsung Mongoose | 2.6GHz | ? | Smaller instruction window, narrower dispatch, higher clock speed |
| Qualcomm Kryo | 2.15GHz | ? | Larger instruction window, wider dispatch, lower clock speed |
| Apple A8 | 1.5GHz | 16 | Larger instruction window, wider dispatch, lower clock speed |
| Apple A9 | 1.85GHz | 16 | Larger instruction window, wider dispatch, lower clock speed |

When it comes to Apple’s core designs, it seems that Cupertino has heavily invested in the larger instruction window idea. The Apple A9 (as found in the iPhone 6S) is clocked at only 1.85GHz. More than that, it is a dual-core design, compared to the quad-core and octa-core designs from Qualcomm and Samsung. However the performance of the A9 is clearly on par with or better than the current high-end Snapdragon and Exynos processors. The same can be said for its predecessor, the Apple A8, which was clocked at just 1.5GHz.

The future and wrap-up

Clearly both approaches, the higher clock speed with lower IPC and the lower clock speed with higher IPC, have their merits. Some mobile SoC makers (i.e. ARM and Samsung) have opted for the former, and it seems that others (like Apple and Qualcomm) have opted for the latter. Of course, the overall power and performance levels of a System-on-a-Chip are about more than just IPC, ILP and the instruction window. Other factors include the cache memory system, the interconnects between the various components, the GPU, the speed of the external memory and so on.

It will be interesting to see in which direction CPU design will head over the next few years. Since there are limits to clock frequency and to ILP, which solution will be the best in the long run? Both? Neither? Also, the process nodes that are used to make CPUs are getting harder and harder to develop. We are already at 14/16nm, next comes 10nm and then 7nm, but after that it isn’t clear if 5nm will be viable. This means that CPU designers will need to find new and interesting ways to boost performance while keeping the power consumption low.

I would be interested to hear your thoughts on instructions per cycle. Do you think that one approach is better than the other? Please use the comments below to let me know what you think.