Virtual Memory explained: How Android keeps your apps running smoothly

At the heart of your Android smartphone sits the Linux kernel, a modern multitasking operating system. Its job is to manage the computing resources on your phone, including the CPU, the GPU, the display, the storage, the networking, and so on. It is also responsible for the Random Access Memory (RAM). The apps, the background services, and even Android itself all need access to the RAM. How Linux partitions that memory and allocates it is vital to your smartphone running smoothly. This is where virtual memory comes in.

What is Virtual Memory?

As a quick refresher, programs (apps) consist of code and data. The code is loaded into memory when you launch an app. The code starts at a given point and progresses one instruction at a time. The data is then read from storage, retrieved over the network, generated by the app, or some combination of the three. Each location in memory that stores code or data is known by its address. Just like a postal address that uniquely identifies a building, a memory address uniquely identifies a place in the RAM.

Virtual memory maps app data to a space in your phone's physical RAM.

The problem is that apps don’t know where they are going to be loaded into RAM. So if the program is expecting address 12048, for example, to be used as a counter, then it has to be that exact address. But the app could be loaded somewhere else in memory, and address 12048 may be used by another app.

The solution is to give all apps virtual addresses that start at 0 and go up to 4GB (or more in some cases). Then every app can use any address it needs, including 12048. Each app has its own unique virtual address space, and it never needs to worry about what other apps are doing. These virtual addresses are mapped to actual physical addresses somewhere in the RAM. It’s the job of the Linux kernel to manage all the mapping of the virtual addresses to physical addresses.

Why is Virtual Memory useful?

Virtual Memory is an abstraction of the physical memory, implemented so that each app has its own private address space. This means that apps can be managed and run independently of each other, as each app sees only its own memory.

This is the fundamental building block of all multitasking operating systems, including Android. Since the apps run in their own address space, Android can start running an app, pause it, switch to another app, run it, and so on. Without virtual memory, we’d be stuck running just one app at a time.

It also enables Android to use swap space or zRAM and therefore increase the number of apps that can stay in memory before being killed off to make room for a new app. You can read more about how zRAM affects smartphone multitasking at the link below.

Read more: How much RAM does your Android phone really need?

That’s the basics of virtual memory covered, so let’s dig into exactly how it all works under the hood.

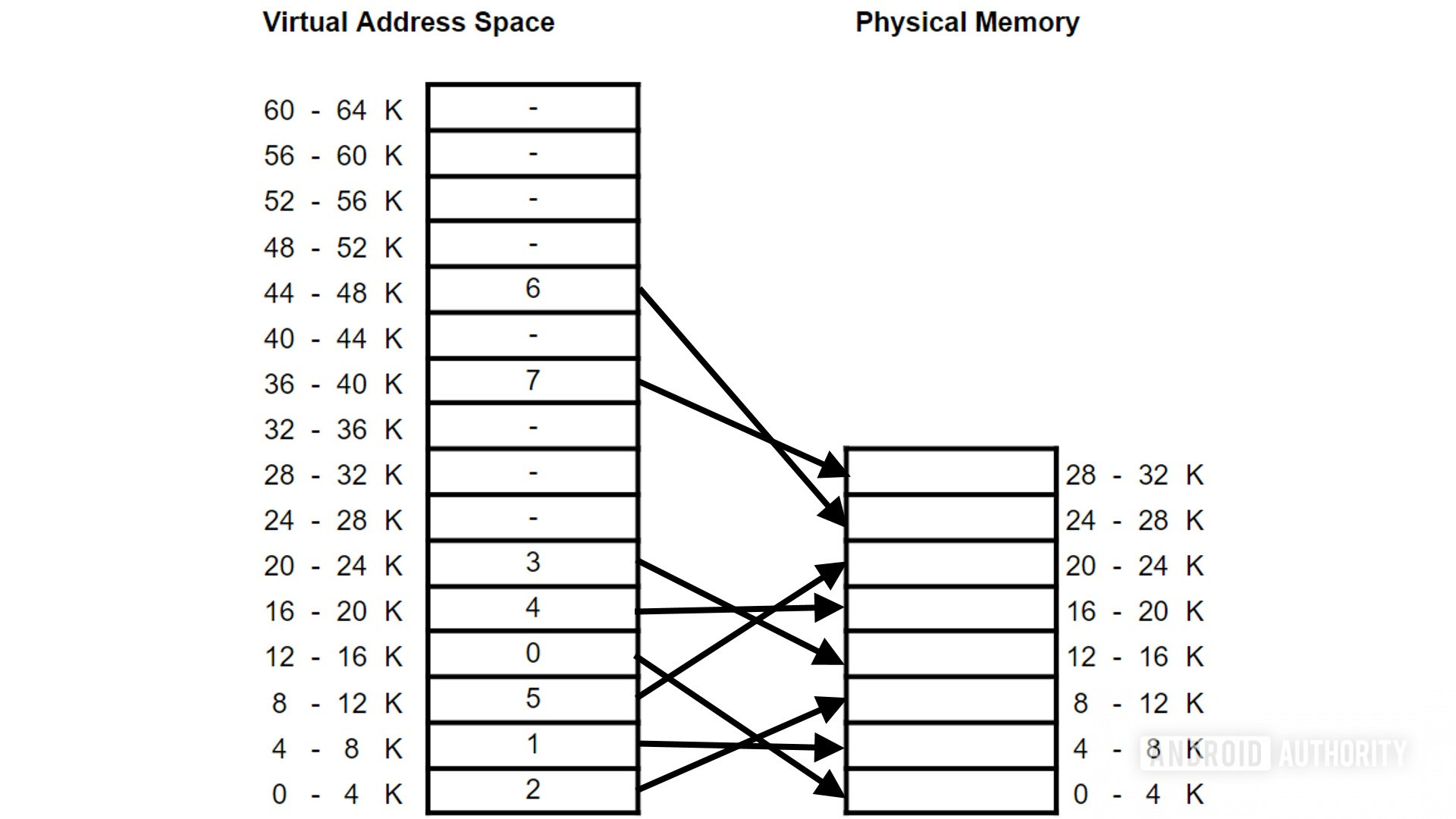

Virtual memory and pages

To aid the mapping from virtual to physical, both address spaces are divided into sections, called pages. Pages in the virtual and physical space need to be the same size and are generally 4K in length. To differentiate between the virtual pages and the physical ones, the latter are called page frames rather than just pages. Here is a simplified diagram showing the mapping of 64K of virtual space to 32K of physical RAM.

Page zero (from 0 to 4095) in the virtual memory (VM) is mapped to page frame two (8192 to 12287) in the physical memory. Page one (4096 to 8191) in VM is mapped to page frame one (also 4096 to 8191), page two is mapped to page frame five, and so on.

One thing to note is that not all virtual pages need to be mapped. Since each app is given ample address space, there will be gaps that don’t need to be mapped. Sometimes these gaps can be gigabytes in size.

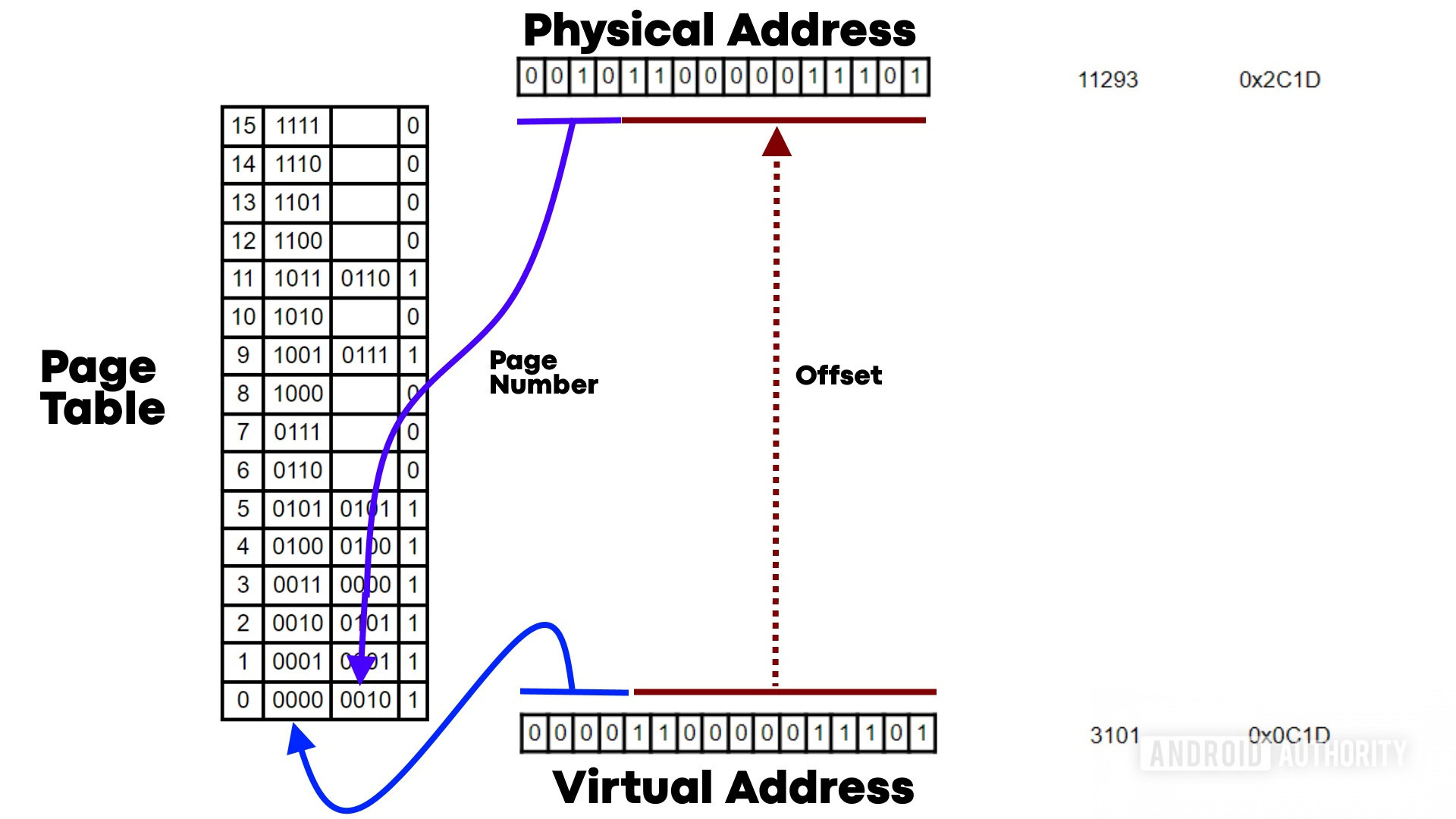

If an app wants to access the virtual address 3101 (that is in page zero), it is translated to an address in physical memory in page frame two, specifically physical address 11293.
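
To make the arithmetic concrete, here is a minimal Python sketch of that lookup. It assumes 4K pages and uses only the three mappings mentioned above (page zero to frame two, page one to frame one, page two to frame five). The names `PAGE_TABLE` and `translate` are made up for illustration, and a real kernel does not store its tables as a Python dictionary.

```python
PAGE_SIZE = 4096  # 4K pages, as in the example above

# Virtual page number -> page frame number, taken from the example mapping.
# Pages missing from the dictionary are simply not mapped.
PAGE_TABLE = {0: 2, 1: 1, 2: 5}


def translate(virtual_address):
    """Return the physical address for a virtual address, or raise if unmapped."""
    page, offset = divmod(virtual_address, PAGE_SIZE)
    if page not in PAGE_TABLE:
        raise LookupError(f"virtual page {page} is not mapped")
    return PAGE_TABLE[page] * PAGE_SIZE + offset


print(translate(3101))  # 11293: page zero, offset 3101, lands in page frame two
print(translate(4096))  # 4096: page one maps to page frame one
```

Asking for an address in a page that has no entry, such as `translate(40000)`, raises a `LookupError`, which mirrors the gaps in the address space described above.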

Memory Management Unit (MMU) is here to help

Modern processors have a dedicated piece of hardware that handles the mapping between the VM and the physical memory. It is called the Memory Management Unit (MMU). The MMU uses a table that maps pages to page frames. This means that the OS doesn’t need to do the translation itself. It happens automatically in the CPU, which is much faster and more efficient. The CPU knows that the apps are trying to access virtual addresses and it automatically translates them into physical addresses. The job of the OS is to manage the tables that are used by the MMU.

How does the MMU translate the addresses?

The MMU uses the page table set up by the OS to translate virtual addresses to physical addresses. Sticking with our example of address 3101, which is 0000 1100 0001 1101 in binary, the MMU translates it to 11293 (or 0010 1100 0001 1101). It does it like this:

- The first four bits (0000) are the virtual page number. It is used to look up the page frame number in the table.

- The entry for page zero is page frame two, or 0010 in binary.

- The bits 0010 are used to create the first four bits of the physical address.

- The remaining twelve bits, called the offset, are copied directly to the physical address.
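
The same steps can be sketched with shifts and masks, which is closer to how the hardware treats the address. This is only an illustration in Python, assuming the 16-bit addresses and 12-bit offset of the worked example. The function name `mmu_translate` is hypothetical.

```python
OFFSET_BITS = 12                       # 4K pages need a 12-bit offset
OFFSET_MASK = (1 << OFFSET_BITS) - 1   # 0000 1111 1111 1111

# Page table from the example: page 0000 maps to page frame 0010.
PAGE_TABLE = {0b0000: 0b0010}


def mmu_translate(virtual_address):
    page = virtual_address >> OFFSET_BITS      # top four bits: virtual page number
    offset = virtual_address & OFFSET_MASK     # bottom twelve bits: copied unchanged
    frame = PAGE_TABLE[page]                   # look up the page frame number
    return (frame << OFFSET_BITS) | offset     # frame bits followed by the offset


physical = mmu_translate(3101)
print(f"{3101:016b} -> {physical:016b}")
# prints: 0000110000011101 -> 0010110000011101
```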

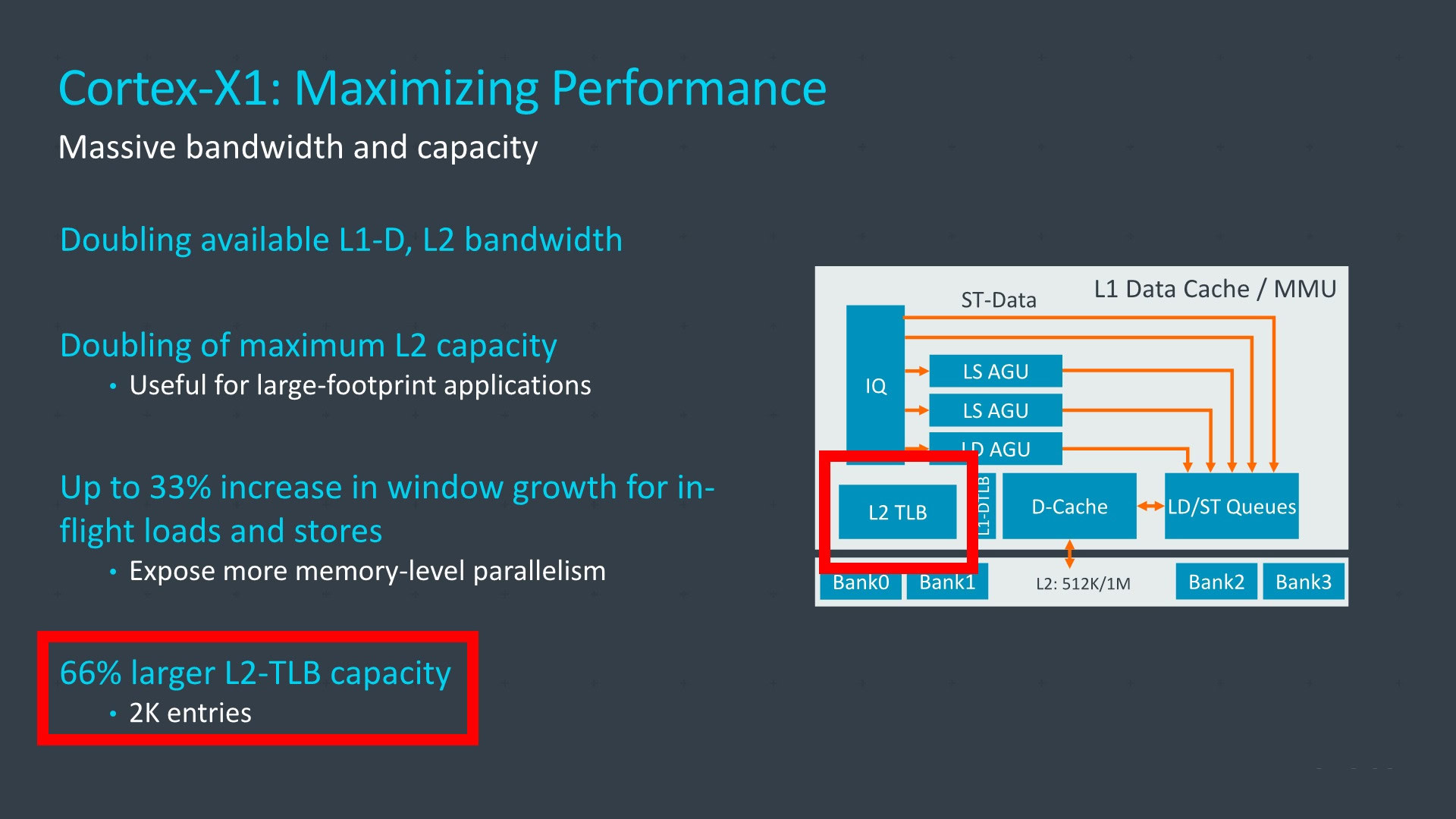

The only difference between 3101 and 11293 is that the first four bits changed to represent the page in physical memory, rather than the page in virtual memory. The advantage of using pages is that the next address, 3102, uses the same page frame as 3101. Only the offset changes, so when the addresses stay inside the 4K page the MMU has an easy time doing the translations. In fact, the MMU uses a cache called the Translation Lookaside Buffer (TLB) to speed up the translations.

Translation Lookaside Buffer explained

The Translation Lookaside Buffer (TLB) is a cache of recent translations performed by the MMU. Before an address is translated, the MMU checks to see if the page-to-page-frame translation is already cached in the TLB. If the requested page lookup is available (a hit) then the translation of the address is immediately available.

Each TLB entry typically contains not just the page and page frames but also attributes such as memory type, cache policies, access permissions, and so on. If the TLB does not contain a valid entry for the virtual address (a miss) then the MMU is forced to look up the page frame in the page table. Since the page table is itself in memory, this means the MMU is required to access memory again to resolve the ongoing memory access. Dedicated hardware within the MMU enables it to read the translation table in memory quickly. Once the new translation is performed it can be cached for possible future reuse.
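
The hit and miss behavior can be modeled in a few lines of Python. This is a toy sketch, not a description of any real MMU: the TLB here is an unbounded dictionary, whereas a hardware TLB has a small fixed number of entries and an eviction policy, and it ignores the attributes mentioned above. The names `tlb`, `stats`, and `translate` are assumptions for the example.

```python
OFFSET_BITS = 12
OFFSET_MASK = (1 << OFFSET_BITS) - 1

PAGE_TABLE = {0: 2, 1: 1, 2: 5}   # the "slow" table that lives in memory
tlb = {}                          # cache of recent page -> page frame lookups
stats = {"hits": 0, "misses": 0}


def translate(virtual_address):
    page = virtual_address >> OFFSET_BITS
    offset = virtual_address & OFFSET_MASK
    if page in tlb:
        stats["hits"] += 1
    else:
        stats["misses"] += 1
        tlb[page] = PAGE_TABLE[page]   # walk the page table, then cache the result
    return (tlb[page] << OFFSET_BITS) | offset


translate(3101)   # miss: page zero has not been seen yet
translate(3102)   # hit: same page, only the offset differs
translate(4096)   # miss: first access to page one
print(stats)      # {'hits': 1, 'misses': 2}
```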

Is it just as simple as that?

At one level the translations performed by the MMU seem quite simple. Do a lookup and copy over some bits. However, there are a few problems that complicate matters.

My examples have been dealing with 64K of memory, but in the real world, apps can use hundreds of megabytes, even a gigabyte or more of RAM. A full 32-bit page table is around 4MB in size (including frames, absent/present, modified, and other flags). Each app needs its own page table. If you have 100 tasks running (including apps, background services, and Android services) then that is 400MB of RAM just to hold the page tables.
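
The 4MB figure can be checked with some quick arithmetic. The sketch below assumes 4K pages and four bytes per page table entry. The entry size is my assumption, chosen because it matches the "around 4MB" figure, and is not a statement about any particular processor.

```python
ADDRESS_BITS = 32
PAGE_SIZE = 4096    # 4K pages
ENTRY_BYTES = 4     # assumed size of one page table entry, flags included
TASKS = 100         # apps, background services, and Android services

entries = 2**ADDRESS_BITS // PAGE_SIZE   # one entry per virtual page
table_bytes = entries * ENTRY_BYTES      # size of one flat page table
total_bytes = table_bytes * TASKS        # one table per task

print(f"{entries:,} entries per table")            # 1,048,576 entries per table
print(f"{table_bytes // 2**20} MB per table")      # 4 MB per table
print(f"{total_bytes // 2**20} MB for all tasks")  # 400 MB for all tasks
```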

Things get worse if you go over 32 bits. The page tables must stay in RAM all the time, and they can’t be swapped out or compressed. On top of that, the page table needs an entry for every page even if it is not being used and has no corresponding page frame.

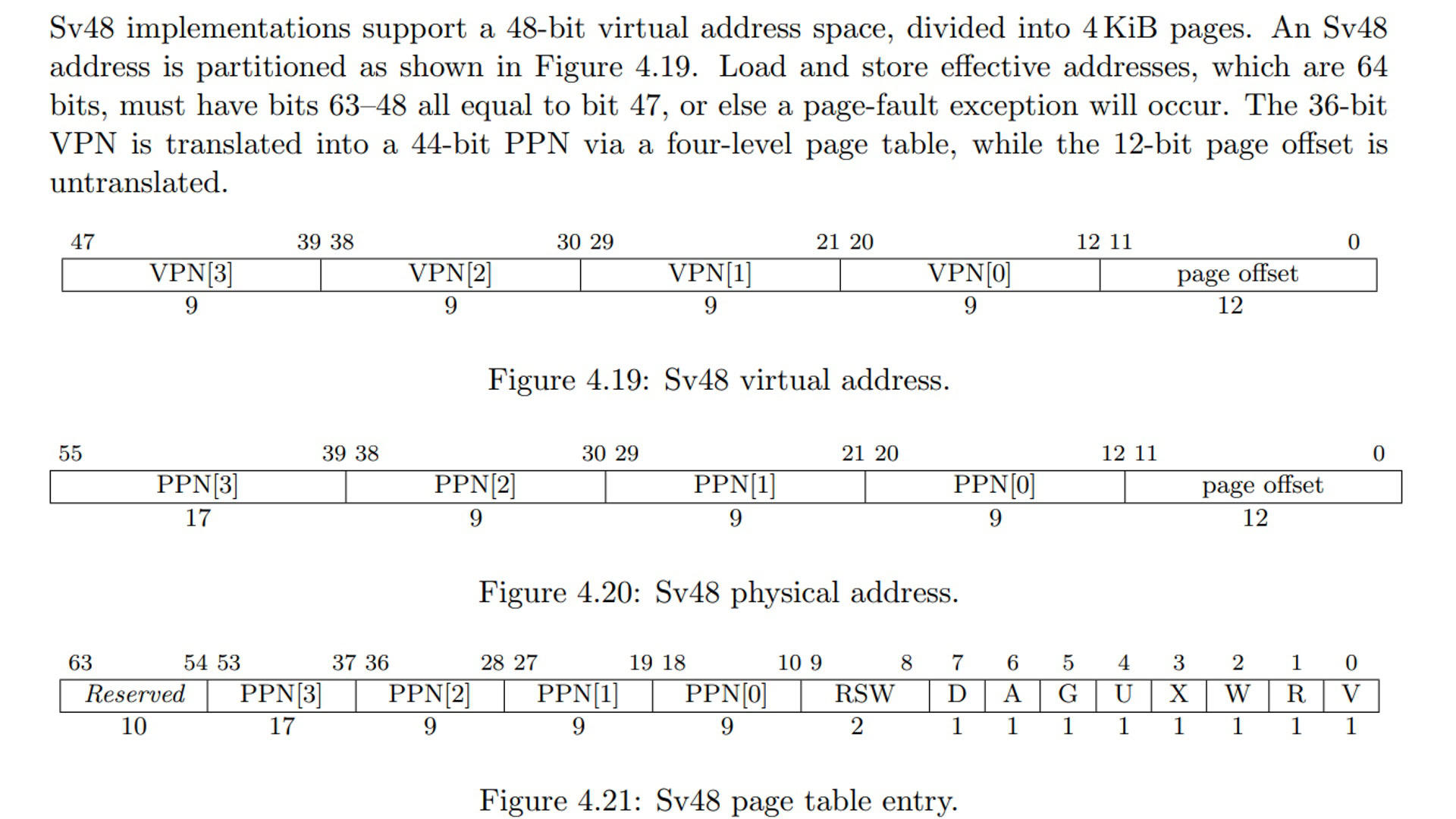

The solution to these problems is to use a multilevel page table. In our working example above we saw that four bits were used as page numbers. It is possible to split the table into multiple parts. The first two bits can be used as a reference to another table that contains the page table for all addresses starting with those two bits. So there would be a page table for all addresses starting with 00, another for 01, and 10, and finally 11. So now there are four page tables, plus a top-level table.

The top-level table must remain in memory but the other four can be swapped out if needed. Likewise, if there are no addresses starting with 11 then no page table is needed for that range. In a real-world implementation, these tables can be four or five levels deep. Each table points to another one, according to the relevant bits in the address.
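
Here is a small Python sketch of the two-level scheme using the 16-bit example: the first two bits of the page number pick a second-level table, and the next two bits pick the entry inside it. The contents of `TOP_LEVEL` are partly made up (the entry for addresses starting with 01 is hypothetical), and `None` stands in for a second-level table that was never created.

```python
OFFSET_BITS = 12
OFFSET_MASK = (1 << OFFSET_BITS) - 1

# Top-level table: first two bits of the page number -> second-level table.
TOP_LEVEL = {
    0b00: {0b00: 2, 0b01: 1, 0b10: 5},  # pages zero to two from the earlier example
    0b01: {0b00: 3},                    # hypothetical mapping for page four
    0b10: None,                         # nothing mapped, so no table exists
    0b11: None,
}


def translate(virtual_address):
    page = virtual_address >> OFFSET_BITS
    offset = virtual_address & OFFSET_MASK
    top_index = page >> 2        # first two bits of the page number
    low_index = page & 0b11      # last two bits of the page number
    second_level = TOP_LEVEL.get(top_index)
    if second_level is None or low_index not in second_level:
        raise LookupError(f"virtual page {page} is not mapped")
    return (second_level[low_index] << OFFSET_BITS) | offset


print(translate(3101))   # 11293, the same answer as the single-level table
```

Only two of the four second-level tables exist here, which is the memory saving the multilevel design is after.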

Above is a diagram from the RISC-V documentation showing how that architecture implements 48-bit virtual addressing. Each Page Table Entry (PTE) has some flags in the space that would be used by the offset. The permission bits, R, W, and X, indicate whether the page is readable, writable, and executable, respectively. When all three are zero, the PTE is a pointer to the next level of the page table; otherwise, it is a leaf PTE and the lookup can be performed.
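
As a rough illustration, the Python sketch below splits a 48-bit virtual address into its table indexes and applies the leaf test described above. The details that are not in this article (four 9-bit indexes above a 12-bit offset, and the V, R, W, and X flags sitting in bits 0 to 3 of the PTE) come from my reading of the RISC-V privileged specification, so treat them as assumptions to check against the spec. The function names are invented for the example.

```python
# Flag positions within a PTE (assumed from the RISC-V privileged specification).
PTE_V = 1 << 0   # valid
PTE_R = 1 << 1   # readable
PTE_W = 1 << 2   # writable
PTE_X = 1 << 3   # executable


def split_sv48(virtual_address):
    """Split a 48-bit virtual address into four 9-bit indexes and a 12-bit offset."""
    offset = virtual_address & 0xFFF
    # vpn[3] indexes the top-level table, vpn[0] the last-level table.
    vpn = [(virtual_address >> (12 + 9 * level)) & 0x1FF for level in range(4)]
    return vpn, offset


def is_leaf(pte):
    """A PTE with R, W, and X all zero points to the next table level."""
    return bool(pte & (PTE_R | PTE_W | PTE_X))


vpn, offset = split_sv48((1 << 39) | (2 << 30) | (3 << 21) | (4 << 12) | 5)
print(vpn, offset)                       # [4, 3, 2, 1] 5
print(is_leaf(PTE_V))                    # False: pointer to the next level
print(is_leaf(PTE_V | PTE_R | PTE_X))    # True: readable, executable leaf
```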

How Android handles a page fault

When the MMU and the OS are in perfect harmony then all is well. But there can be errors. What happens when the MMU tries to look up a virtual address and it can’t be found in the page table?

This is known as a page fault. And there are three types of page fault:

- Hard page fault: The page is not in memory and needs to be loaded from swap or from zRAM.

- Soft page fault: The page is already loaded in memory when the fault is generated, but it is not marked in the memory management unit as being loaded. This is also called a minor page fault. The page fault handler in the operating system needs to make the entry for that page in the MMU. This could happen if the memory is shared by different apps and the page has already been brought into memory, or when an app has requested some new memory and it has been lazily allocated, waiting for the first page access.

- Invalid page fault: The program is trying to access memory that isn’t in its address space, or to use memory in a way it isn’t allowed to. This leads to a segmentation fault or access violation. This can happen if the program tries to write to read-only memory, or it dereferences a null pointer, or due to buffer overflows.
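
The three cases can be told apart with a simple decision procedure. The Python sketch below is a toy model and not how the Linux kernel implements its page fault handler. The `pages` dictionary, its `state` values, and the `classify` function are all hypothetical names invented for this example.

```python
from enum import Enum


class Fault(Enum):
    NONE = "no fault"
    SOFT = "soft page fault"
    HARD = "hard page fault"
    INVALID = "invalid page fault"


# Hypothetical bookkeeping the OS keeps for each page in an app's address space.
pages = {
    0: {"state": "mapped", "writable": True},               # in RAM and in the page table
    1: {"state": "resident_not_mapped", "writable": True},  # in RAM, no MMU entry yet
    2: {"state": "swapped_out", "writable": True},          # held in swap or zRAM
    3: {"state": "mapped", "writable": False},              # read-only page
}


def classify(page, write=False):
    info = pages.get(page)
    if info is None:
        return Fault.INVALID    # outside the app's address space
    if write and not info["writable"]:
        return Fault.INVALID    # write to read-only memory
    if info["state"] == "mapped":
        return Fault.NONE       # the MMU can translate this without help
    if info["state"] == "resident_not_mapped":
        return Fault.SOFT       # the OS only needs to add the table entry
    return Fault.HARD           # the page must be brought back from swap or zRAM


print(classify(1).value)               # soft page fault
print(classify(2).value)               # hard page fault
print(classify(3, write=True).value)   # invalid page fault
```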

The benefits of Virtual Memory

As we have discovered, Virtual Memory is a way to map the physical memory so that apps can use the RAM independently, without worrying about how other apps are using the memory. It allows Android to multitask as well as use swapping.

Without Virtual Memory, our phones would be limited to running one app at a time, apps couldn’t be swapped out, and any attempts to hold more than one app at a time in memory would need some fancy programming.

The next time you start an app, you’ll now be able to ponder all that’s going on inside the processor and inside of Android to make your smartphone experience as smooth as possible.
