Responding to user activity with the Activity Recognition API

Published on January 12, 2018

Smartphones have become one of those essentials that we carry with us everywhere, so your typical mobile app is going to be used in all kinds of situations and locations.

The more your app knows about this changing context, the better it can adapt to the user’s current situation. There’s a huge range of contextual information that your app can access and then use to provide a more engaging user experience: it might detect the user’s location and display it on a map, reverse-geocode the device’s coordinates into a street address, or use hardware sensors to respond to changes in light levels or user proximity.

The Activity Recognition API is a unique way of adding contextual awareness to your application, by letting you detect whether the user is currently walking, running, cycling, travelling in a car, or engaged in a range of other physical activities.

This information is essential for many fitness applications, but even if you don’t dream of conquering Google Play’s Health & Fitness category, this is still valuable information that you can use in a huge range of applications.

In this article, I’m going to show you how to build an application that uses the Activity Recognition API to detect a range of physical activities, and then display this information to the user.

What is the Activity Recognition API?

The Activity Recognition API is an interface that periodically wakes the device, reads bursts of data from the device’s sensors, and then analyzes this data using powerful machine learning models.

Activity detection isn’t an exact science, so rather than returning a single activity that the user is definitely performing, the Activity Recognition API returns a list of activities that the user may be performing, with a confidence property for each activity. This confidence property is always an integer, ranging from 0 to 100. If an activity is accompanied by a confidence property of 75% or higher, then it’s generally safe to assume that the user is performing this activity, and adjust your application’s behaviour accordingly (although it’s not impossible for multiple activities to have a high confidence percentage, especially activities that are closely related, such as running and walking).
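As a rough illustration of the 75% rule of thumb, here is a minimal plain-Java sketch. The Candidate class is a hypothetical stand-in for DetectedActivity so that the example runs without Google Play Services, and ConfidenceFilter and its THRESHOLD constant are names invented for this sketch, not part of the API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for DetectedActivity, so the sketch runs without Google Play Services.
final class Candidate {
    final String name;     // e.g. "WALKING"
    final int confidence;  // integer from 0 to 100

    Candidate(String name, int confidence) {
        this.name = name;
        this.confidence = confidence;
    }
}

final class ConfidenceFilter {
    // The 75% rule of thumb; tune this for your own app.
    static final int THRESHOLD = 75;

    // Returns the names of every candidate at or above the threshold, in input order.
    static List<String> likelyActivities(List<Candidate> candidates) {
        List<String> likely = new ArrayList<>();
        for (Candidate c : candidates) {
            if (c.confidence >= THRESHOLD) {
                likely.add(c.name);
            }
        }
        return likely;
    }

    public static void main(String[] args) {
        List<Candidate> update = Arrays.asList(
                new Candidate("ON_FOOT", 92),
                new Candidate("WALKING", 88),
                new Candidate("STILL", 5));
        System.out.println(likelyActivities(update)); // prints [ON_FOOT, WALKING]
    }
}
```

Note that two closely related activities both clear the threshold here, which is exactly the situation described above.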

We’re going to display this confidence percentage in our application’s UI, so you’ll be able to see exactly how this property updates, in response to changing user activity.

The Activity Recognition API can detect the following activities:

- IN_VEHICLE. The device is in a vehicle, such as a car or bus. The user may be the one behind the wheel, or they could be the passenger.

- ON_BICYCLE. The device is on a bicycle.

- ON_FOOT. The device is being carried by someone who is walking or running.

- WALKING. The device is being carried by someone who is walking. WALKING is a sub-activity of ON_FOOT.

- RUNNING. The device is being carried by someone who is running. RUNNING is a sub-activity of ON_FOOT.

- TILTING. The device’s angle relative to gravity has changed significantly. This activity is often detected when the device is lifted from a flat surface such as a desk, or when it’s inside someone’s pocket, and that person has just moved from a sitting to a standing position.

- STILL. The device is stationary.

- UNKNOWN. The Activity Recognition API is unable to detect the current activity.
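Because WALKING and RUNNING are sub-activities of ON_FOOT, an app may want to report the more specific one whenever it’s available. The following is only a sketch of one possible approach: the ActivityKind enum is a hypothetical stand-in for the DetectedActivity constants, and OnFootResolver is a name made up for this example.

```java
import java.util.EnumMap;
import java.util.Map;

// Hypothetical stand-in for the DetectedActivity type constants listed above.
enum ActivityKind { IN_VEHICLE, ON_BICYCLE, ON_FOOT, WALKING, RUNNING, TILTING, STILL, UNKNOWN }

final class OnFootResolver {
    // If the top-level guess is ON_FOOT, prefer whichever sub-activity
    // (WALKING or RUNNING) has the higher confidence; otherwise keep the guess.
    static ActivityKind refine(ActivityKind mostProbable, Map<ActivityKind, Integer> confidences) {
        if (mostProbable != ActivityKind.ON_FOOT) {
            return mostProbable;
        }
        int walking = confidences.getOrDefault(ActivityKind.WALKING, 0);
        int running = confidences.getOrDefault(ActivityKind.RUNNING, 0);
        if (walking == 0 && running == 0) {
            return ActivityKind.ON_FOOT; // no sub-activity was reported
        }
        return walking >= running ? ActivityKind.WALKING : ActivityKind.RUNNING;
    }

    public static void main(String[] args) {
        Map<ActivityKind, Integer> confidences = new EnumMap<>(ActivityKind.class);
        confidences.put(ActivityKind.ON_FOOT, 90);
        confidences.put(ActivityKind.WALKING, 20);
        confidences.put(ActivityKind.RUNNING, 70);
        System.out.println(refine(ActivityKind.ON_FOOT, confidences)); // prints RUNNING
    }
}
```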

How can I use the Activity Recognition API?

Google Play’s Health & Fitness category is packed with apps dedicated to measuring and analyzing your day-to-day physical activities, which makes it a great place to get some inspiration about how you might use Activity Recognition in your own projects. For example, you could use the Activity Recognition API to create an app that motivates the user to get up and stretch when they’ve been stationary for an extended period of time, or an application that tracks the user’s daily run and prints their route on a map, ready for them to post to Facebook (because if Facebook isn’t aware that you got up early and went for a run before work, then did it even really happen?).

While you could deliver the same functionality without the Activity Recognition API, this would require the user to notify your app whenever they’re about to start a relevant activity. You can provide a much better user experience by monitoring these activities, and then performing the desired action automatically.

Although fitness applications are the obvious choice, there’s lots of ways that you can use Activity Recognition in applications that don’t fall into the Health & Fitness category. For example, your app might switch to a “hands-free” mode whenever it detects that the user is cycling; request location updates more frequently when the user is walking or running; or display the quickest way to reach a destination by road when the user is travelling in a vehicle.

Create your project

We’re going to build an application that uses the Activity Recognition API to retrieve a list of possible activities and percentages, and then display this information to the user.

The Activity Recognition API requires Google Play Services. To help keep the number of methods in our project under control, I’m only adding the section of this library that’s required to deliver the Activity Recognition functionality. I’m also adding Gson as a dependency, as we’ll be using this library throughout the project:

dependencies {

compile 'com.google.android.gms:play-services-location:11.8.0'

compile 'com.google.code.gson:gson:2.8.1'

...

...

...

Next, add the com.google.android.gms.permission.ACTIVITY_RECOGNITION permission to your Manifest:

<uses-permission android:name="com.google.android.gms.permission.ACTIVITY_RECOGNITION" />

Create your user interface

Let’s get the easy stuff out of the way and create the layouts we’ll be using throughout this project:

- activity_main. This layout contains a button that the user will press when they want to start recording their activity, plus the ListView where we’ll display the results.

- detected_activity. Eventually, we’ll display each detected activity in a ListView, so this layout provides a View hierarchy that the adapter can use for each data entry.

Open the automatically-generated activity_main.xml file, and add the following:

<?xml version="1.0" encoding="utf-8"?>

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:orientation="vertical"

android:paddingBottom="@dimen/layout_padding"

android:paddingLeft="@dimen/layout_padding"

android:paddingRight="@dimen/layout_padding"

android:paddingTop="@dimen/layout_padding">

<Button

android:id="@+id/get_activity"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:onClick="requestUpdatesHandler"

android:text="@string/track" />

<ListView

android:id="@+id/activities_listview"

android:layout_width="match_parent"

android:layout_height="wrap_content" />

</LinearLayout>

Next, create a detected_activity file:

- Control-click your project’s ‘res/layout’ folder.

- Select ‘New > Layout resource file.’

- Name this file ‘detected_activity’ and click ‘OK.’

Open this file and define the layout for each item in our data set:

<?xml version="1.0" encoding="utf-8"?>

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:orientation="vertical">

<RelativeLayout

android:layout_width="match_parent"

android:layout_height="wrap_content">

<TextView

android:id="@+id/activity_type"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_marginTop="@dimen/textview_margin"

android:text="@string/still" />

<TextView

android:id="@+id/confidence_percentage"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_alignParentRight="true"

android:layout_alignParentEnd="true"

android:layout_marginTop="@dimen/textview_margin" />

</RelativeLayout>

</LinearLayout>

These layouts reference a few different resources, so open your project’s strings.xml file and define the button’s label, plus all the strings we’ll eventually display in our ListView:

<resources>

<string name="app_name">Activity Recognition</string>

<string name="track">Track Activity</string>

<string name="percentage">%1$d%%</string>

<string name="bicycle">On a bicycle</string>

<string name="foot">On foot</string>

<string name="running">Running</string>

<string name="still">Still</string>

<string name="tilting">Tilting</string>

<string name="unknown_activity">Unknown activity</string>

<string name="vehicle">In a vehicle</string>

<string name="walking">Walking</string>

</resources>

We also need to define a few dimens.xml values. If your project doesn’t already contain a res/values/dimens.xml file, then you’ll need to create one:

- Control-click your ‘res/values’ folder.

- Select ‘New > Values resource file.’

- Enter the name ‘dimens’ and then click ‘OK.’

Open your dimens.xml file and add the following:

<resources>

<dimen name="layout_padding">20dp</dimen>

<dimen name="textview_margin">10dp</dimen>

</resources>

Create your IntentService

Many applications use the Activity Recognition API to monitor activities in the background and then perform an action whenever a certain activity is detected.

Since leaving a service running in the background is a good way to use up precious system resources, the Activity Recognition API delivers its data via an intent, which contains a list of activities the user may be performing at this particular time. By creating a PendingIntent that’s called whenever your app receives this intent, you can monitor the user’s activities without having to create a persistently running service. Your app can then extract the ActivityRecognitionResult from this intent, and convert this data into a more user-friendly string, ready to display in your UI.

Create a new class (I’m using ActivityIntentService) and then implement the service that’ll receive these Activity Recognition updates:

import java.util.ArrayList;

import java.lang.reflect.Type;

import android.content.Context;

import com.google.gson.Gson;

import android.content.Intent;

import android.app.IntentService;

import android.preference.PreferenceManager;

import android.content.res.Resources;

import com.google.gson.reflect.TypeToken;

import com.google.android.gms.location.ActivityRecognitionResult;

import com.google.android.gms.location.DetectedActivity;

//Extend IntentService//

public class ActivityIntentService extends IntentService {

protected static final String TAG = "Activity";

//Call the super IntentService constructor with the name for the worker thread//

public ActivityIntentService() {

super(TAG);

}

@Override

public void onCreate() {

super.onCreate();

}

//Define an onHandleIntent() method, which will be called whenever an activity detection update is available//

@Override

protected void onHandleIntent(Intent intent) {

//Check whether the Intent contains activity recognition data//

if (ActivityRecognitionResult.hasResult(intent)) {

//If data is available, then extract the ActivityRecognitionResult from the Intent//

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

//Copy the probable activities into a list of DetectedActivity objects//

ArrayList<DetectedActivity> detectedActivities = new ArrayList<>(result.getProbableActivities());

PreferenceManager.getDefaultSharedPreferences(this)

.edit()

.putString(MainActivity.DETECTED_ACTIVITY,

detectedActivitiesToJson(detectedActivities))

.apply();

}

}

//Convert the code for the detected activity type, into the corresponding string//

static String getActivityString(Context context, int detectedActivityType) {

Resources resources = context.getResources();

switch(detectedActivityType) {

case DetectedActivity.ON_BICYCLE:

return resources.getString(R.string.bicycle);

case DetectedActivity.ON_FOOT:

return resources.getString(R.string.foot);

case DetectedActivity.RUNNING:

return resources.getString(R.string.running);

case DetectedActivity.STILL:

return resources.getString(R.string.still);

case DetectedActivity.TILTING:

return resources.getString(R.string.tilting);

case DetectedActivity.WALKING:

return resources.getString(R.string.walking);

case DetectedActivity.IN_VEHICLE:

return resources.getString(R.string.vehicle);

default:

return resources.getString(R.string.unknown_activity);

}

}

static final int[] POSSIBLE_ACTIVITIES = {

DetectedActivity.STILL,

DetectedActivity.ON_FOOT,

DetectedActivity.WALKING,

DetectedActivity.RUNNING,

DetectedActivity.IN_VEHICLE,

DetectedActivity.ON_BICYCLE,

DetectedActivity.TILTING,

DetectedActivity.UNKNOWN

};

static String detectedActivitiesToJson(ArrayList<DetectedActivity> detectedActivitiesList) {

Type type = new TypeToken<ArrayList<DetectedActivity>>() {}.getType();

return new Gson().toJson(detectedActivitiesList, type);

}

static ArrayList<DetectedActivity> detectedActivitiesFromJson(String jsonArray) {

Type listType = new TypeToken<ArrayList<DetectedActivity>>(){}.getType();

ArrayList<DetectedActivity> detectedActivities = new Gson().fromJson(jsonArray, listType);

if (detectedActivities == null) {

detectedActivities = new ArrayList<>();

}

return detectedActivities;

}

}

Don’t forget to register the service in your Manifest:

</activity>

<service

android:name=".ActivityIntentService"

android:exported="false" />

</application>

</manifest>

Retrieving Activity Recognition updates

Next, you need to decide how frequently your app should receive new activity recognition data.

Longer update intervals will minimize the impact your application has on the device’s battery, but if you set these intervals too far apart then it could result in your application performing actions based on significantly out-of-date information.

Smaller update intervals mean your application can respond to activity changes more quickly, but it also increases the amount of battery your application consumes. And if a user identifies your application as being a bit of a battery hog, then they may decide to uninstall it.

Note that the Activity Recognition API will try to minimize battery use automatically by suspending reporting if it detects that the device has been stationary for an extended period of time, on devices that support the Sensor.TYPE_SIGNIFICANT_MOTION hardware.

Your project’s update interval also affects the amount of data your app has to work with. Frequent detection events will provide more data, which increases your app’s chances of correctly identifying user activity. If further down the line you discover that your app’s activity detection isn’t as accurate as you’d like, then you may want to try reducing this update interval.

Finally, you should be aware that various factors can interfere with your app’s update interval, so there’s no guarantee that your app will receive every single update at this exact frequency. Your app may receive updates ahead of schedule if the API has reason to believe the activity state is about to change, for example if the device has just been unplugged from a charger. At the other end of the scale, your app might receive updates after the requested interval if the Activity Recognition API requires additional data in order to make a more accurate assessment.
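Since updates can arrive late, one defensive option is to check how old a result is before acting on it. The sketch below is plain Java and makes several assumptions: UpdateFreshness and its tolerance parameter are invented for this example, and the timestamps are simply passed in as milliseconds (in a real app you could take them from the result’s getTime() value).

```java
// Hypothetical helper for deciding whether an activity result is too old to act on.
final class UpdateFreshness {
    // Treats a result as stale once its age exceeds `tolerance` requested intervals.
    static boolean isStale(long resultTimeMillis, long nowMillis,
                           long intervalMillis, int tolerance) {
        long age = nowMillis - resultTimeMillis;
        return age > intervalMillis * tolerance;
    }

    public static void main(String[] args) {
        long interval = 3000L; // a 3 second detection interval
        // A result that is 4 seconds old is within two intervals, so it is still usable.
        System.out.println(isStale(0L, 4000L, interval, 2));  // prints false
        // A result that is 10 seconds old is older than two intervals.
        System.out.println(isStale(0L, 10000L, interval, 2)); // prints true
    }
}
```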

I’m going to define this update interval (alongside some other functionality) in the MainActivity class:

import android.support.v7.app.AppCompatActivity;

import android.os.Bundle;

import android.content.Context;

import android.content.Intent;

import android.widget.ListView;

import android.app.PendingIntent;

import android.preference.PreferenceManager;

import android.content.SharedPreferences;

import android.view.View;

import com.google.android.gms.location.ActivityRecognitionClient;

import com.google.android.gms.location.DetectedActivity;

import com.google.android.gms.tasks.OnSuccessListener;

import com.google.android.gms.tasks.Task;

import java.util.ArrayList;

public class MainActivity extends AppCompatActivity

implements SharedPreferences.OnSharedPreferenceChangeListener {

private Context mContext;

public static final String DETECTED_ACTIVITY = ".DETECTED_ACTIVITY";

//Define an ActivityRecognitionClient//

private ActivityRecognitionClient mActivityRecognitionClient;

private ActivitiesAdapter mAdapter;

@Override

public void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

mContext = this;

//Retrieve the ListView where we’ll display our activity data//

ListView detectedActivitiesListView = (ListView) findViewById(R.id.activities_listview);

ArrayList<DetectedActivity> detectedActivities = ActivityIntentService.detectedActivitiesFromJson(

PreferenceManager.getDefaultSharedPreferences(this).getString(

DETECTED_ACTIVITY, ""));

//Bind the adapter to the ListView//

mAdapter = new ActivitiesAdapter(this, detectedActivities);

detectedActivitiesListView.setAdapter(mAdapter);

mActivityRecognitionClient = new ActivityRecognitionClient(this);

}

@Override

protected void onResume() {

super.onResume();

PreferenceManager.getDefaultSharedPreferences(this)

.registerOnSharedPreferenceChangeListener(this);

updateDetectedActivitiesList();

}

@Override

protected void onPause() {

PreferenceManager.getDefaultSharedPreferences(this)

.unregisterOnSharedPreferenceChangeListener(this);

super.onPause();

}

public void requestUpdatesHandler(View view) {

//Set the activity detection interval. I’m using 3 seconds//

Task<Void> task = mActivityRecognitionClient.requestActivityUpdates(

3000,

getActivityDetectionPendingIntent());

task.addOnSuccessListener(new OnSuccessListener<Void>() {

@Override

public void onSuccess(Void result) {

updateDetectedActivitiesList();

}

});

}

//Get a PendingIntent//

private PendingIntent getActivityDetectionPendingIntent() {

//Send the activity data to our ActivityIntentService class//

Intent intent = new Intent(this, ActivityIntentService.class);

return PendingIntent.getService(this, 0, intent, PendingIntent.FLAG_UPDATE_CURRENT);

}

//Process the list of activities//

protected void updateDetectedActivitiesList() {

ArrayList<DetectedActivity> detectedActivities = ActivityIntentService.detectedActivitiesFromJson(

PreferenceManager.getDefaultSharedPreferences(mContext)

.getString(DETECTED_ACTIVITY, ""));

mAdapter.updateActivities(detectedActivities);

}

@Override

public void onSharedPreferenceChanged(SharedPreferences sharedPreferences, String s) {

if (s.equals(DETECTED_ACTIVITY)) {

updateDetectedActivitiesList();

}

}

}

Displaying the activity data

In this class, we’re going to retrieve the confidence percentage for each activity, by calling getConfidence() on the DetectedActivity instance. We’ll then populate the detected_activity layout with the data retrieved from each DetectedActivity object.

Since each activity’s confidence percentage will change over time, we need to populate our layout at runtime, using an Adapter. This Adapter will retrieve data from the Activity Recognition API, return a TextView for each entry in the data set, and then insert these TextViews into our ListView.

Create a new class, called ActivitiesAdapter, and add the following:

import android.support.annotation.NonNull;

import android.support.annotation.Nullable;

import java.util.ArrayList;

import java.util.HashMap;

import android.widget.ArrayAdapter;

import android.content.Context;

import android.view.LayoutInflater;

import android.widget.TextView;

import android.view.View;

import android.view.ViewGroup;

import com.google.android.gms.location.DetectedActivity;

class ActivitiesAdapter extends ArrayAdapter<DetectedActivity> {

ActivitiesAdapter(Context context,

ArrayList<DetectedActivity> detectedActivities) {

super(context, 0, detectedActivities);

}

@NonNull

@Override

public View getView(int position, @Nullable View view, @NonNull ViewGroup parent) {

//Retrieve the data item//

DetectedActivity detectedActivity = getItem(position);

if (view == null) {

view = LayoutInflater.from(getContext()).inflate(

R.layout.detected_activity, parent, false);

}

//Retrieve the TextViews where we’ll display the activity type, and percentage//

TextView activityName = (TextView) view.findViewById(R.id.activity_type);

TextView activityConfidenceLevel = (TextView) view.findViewById(

R.id.confidence_percentage);

//If an activity is detected...//

if (detectedActivity != null) {

activityName.setText(ActivityIntentService.getActivityString(getContext(),

//...get the activity type...//

detectedActivity.getType()));

//..and the confidence percentage//

activityConfidenceLevel.setText(getContext().getString(R.string.percentage,

detectedActivity.getConfidence()));

}

return view;

}

//Process the list of detected activities//

void updateActivities(ArrayList<DetectedActivity> detectedActivities) {

HashMap<Integer, Integer> detectedActivitiesMap = new HashMap<>();

for (DetectedActivity activity : detectedActivities) {

detectedActivitiesMap.put(activity.getType(), activity.getConfidence());

}

ArrayList<DetectedActivity> temporaryList = new ArrayList<>();

for (int i = 0; i < ActivityIntentService.POSSIBLE_ACTIVITIES.length; i++) {

int confidence = detectedActivitiesMap.containsKey(ActivityIntentService.POSSIBLE_ACTIVITIES[i]) ?

detectedActivitiesMap.get(ActivityIntentService.POSSIBLE_ACTIVITIES[i]) : 0;

//Add the object to a temporaryList//

temporaryList.add(new

DetectedActivity(ActivityIntentService.POSSIBLE_ACTIVITIES[i],

confidence));

}

//Remove all existing elements from the adapter//

this.clear();

//Add the refreshed items, which also prompts the ListView to redraw//

for (DetectedActivity detectedActivity: temporaryList) {

this.add(detectedActivity);

}

}

}

Testing your app

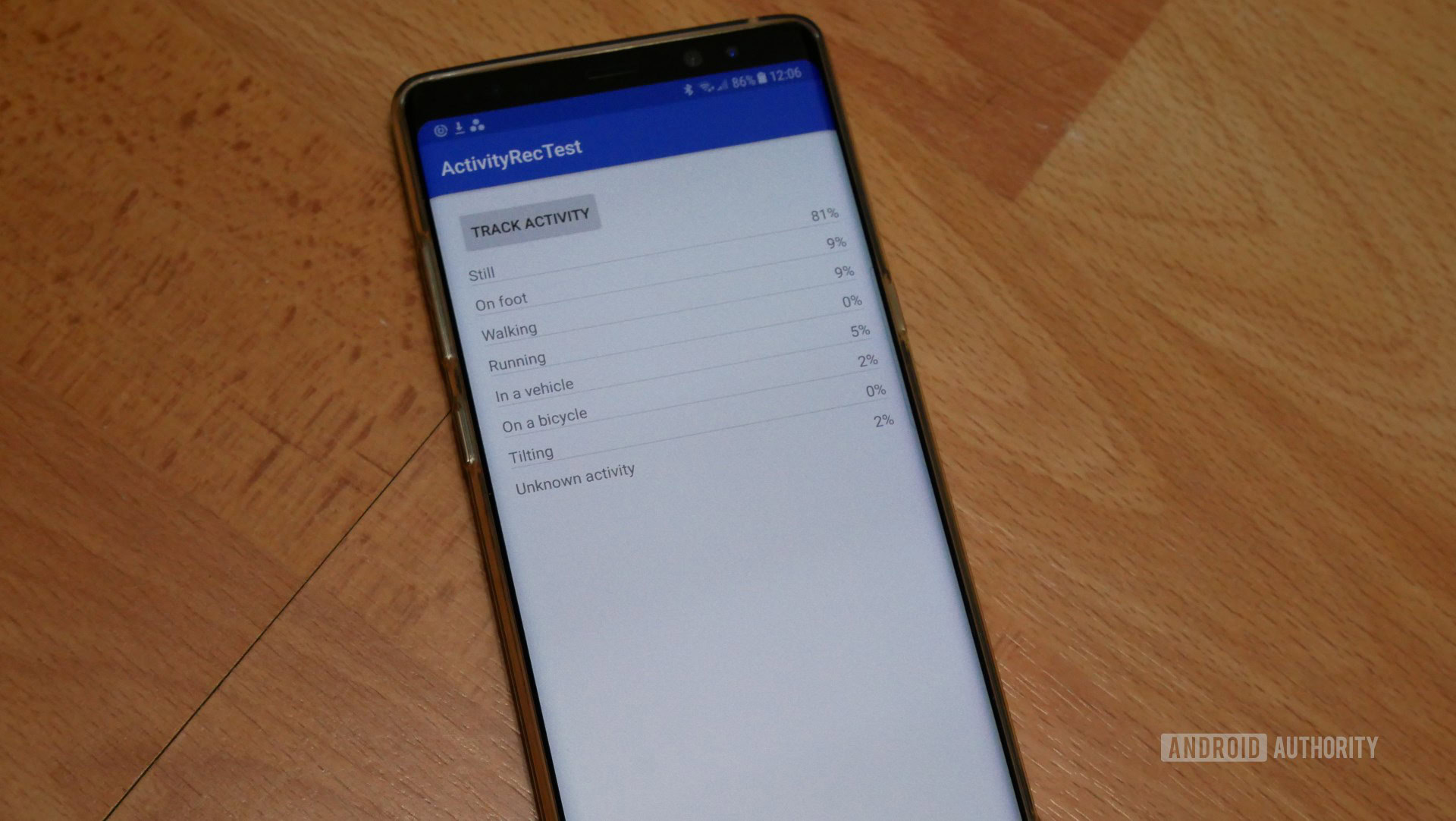

It’s time to put this app to the test! Install your project on an Android device and tap the ‘Track Activity’ button to start receiving activity updates.

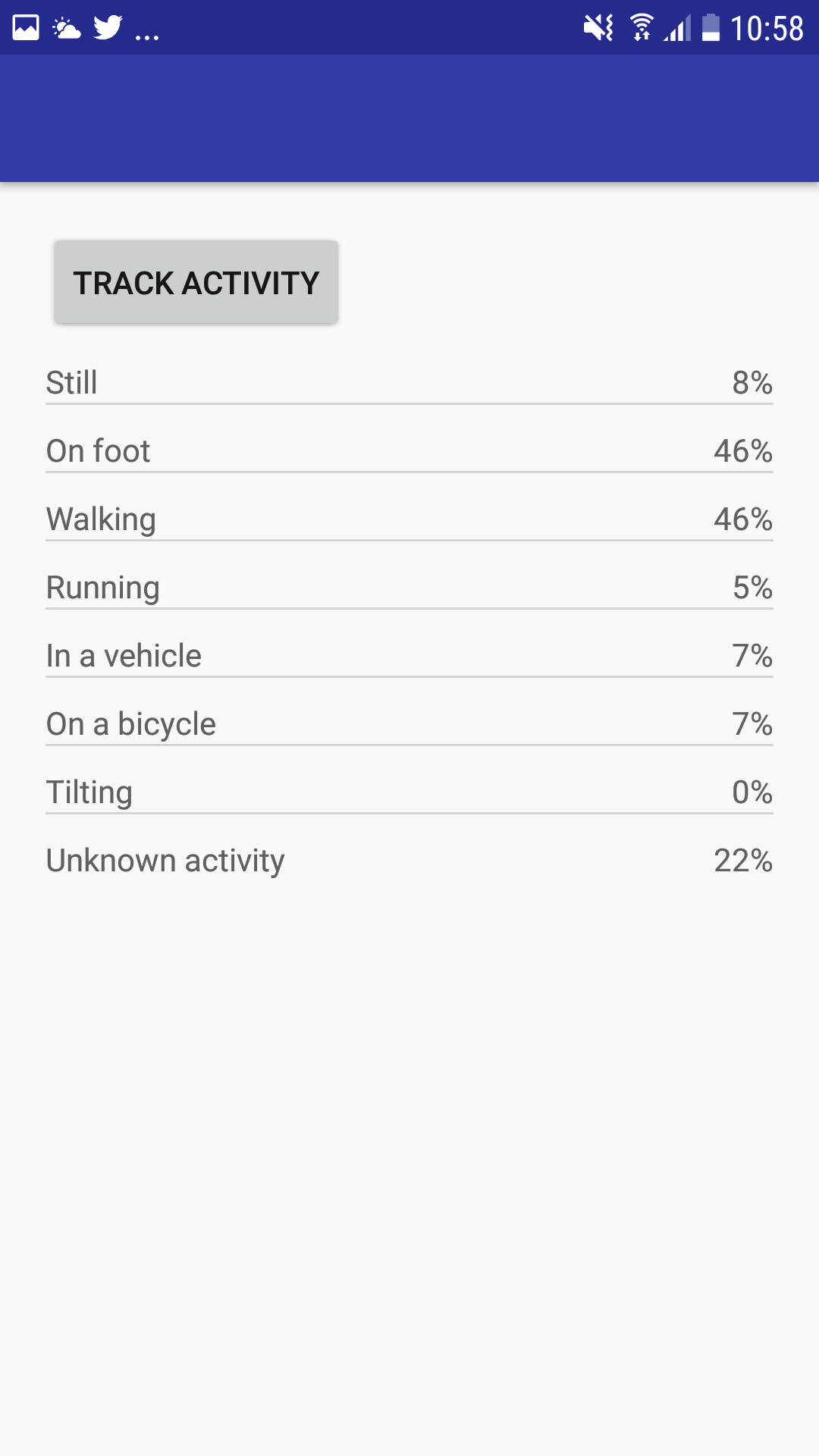

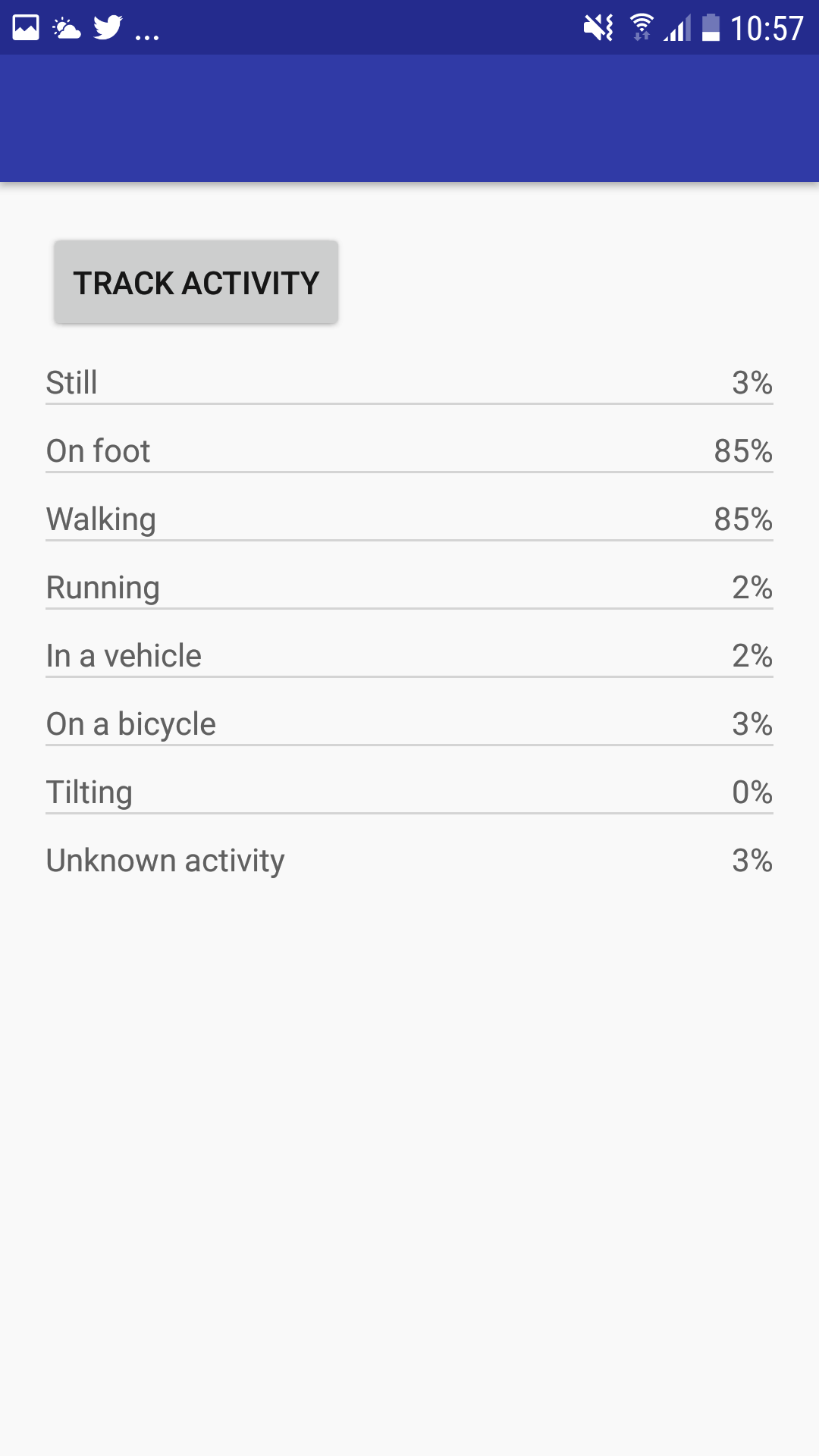

Since this data is never going to change while your Android device is sitting on your desk, now’s the perfect time to get up and go for a walk (even if it is just around your house!). Keep in mind that it’s not unusual to see percentages across multiple activities; for example, the following screenshot was taken while I was walking.

Although there’s apparently a 2-3% chance that I’m stationary, running, travelling in a vehicle, on a bicycle, or performing some unknown activity, the highest percentage is walking/on foot, so the app has detected the current activity successfully.

Using the Activity Recognition API in real-life projects

In this tutorial we’ve built an application that retrieves activity recognition data and displays a probability percentage for each activity. However, this API returns far more data than most applications actually need, so when you use Activity Recognition in your own projects you’ll typically want to filter this data in some way.

One method is to retrieve the activity that has the highest probability percentage:

@Override

protected void onHandleIntent(Intent intent) {

//Check whether the Intent contains activity recognition data//

if (ActivityRecognitionResult.hasResult(intent)) {

//If data is available, then extract the ActivityRecognitionResult from the Intent//

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

DetectedActivity mostProbableActivity

= result.getMostProbableActivity();

//Get the confidence percentage//

int confidence = mostProbableActivity.getConfidence();

//Get the activity type//

int activityType = mostProbableActivity.getType();

//Do something//

...

...

...

Alternatively, you may want your app to respond to specific activities only, for example requesting location updates more frequently when the user is walking or running. To make sure your app doesn’t perform this action every single time there’s a 1% or higher probability that the user is on foot, you should specify a minimum percentage that this activity must meet, before your application responds:

//If ON_FOOT has an 80% or higher probability percentage...//

for (DetectedActivity activity : result.getProbableActivities()) {

if (activity.getType() == DetectedActivity.ON_FOOT && activity.getConfidence() >= 80) {

//...then do something//

}

}

Wrapping up

In this article, we created an application that uses the Activity Recognition API to monitor user activity, and display this information in a ListView. We also covered some potential ways of filtering this data, ready for you to use in your applications.

Are you going to try using this API in your own projects? Let us know in the comments below!