
Pichai says AI is like fire, but will we get burnt?

The impact of artificial intelligence and machine learning on all of our lives over the next decade and beyond cannot be overstated. The technology could greatly improve our quality of life and catapult our understanding of the world, but many are worried about the risks posed by unleashing AI, including leading figures at the world’s biggest tech companies.

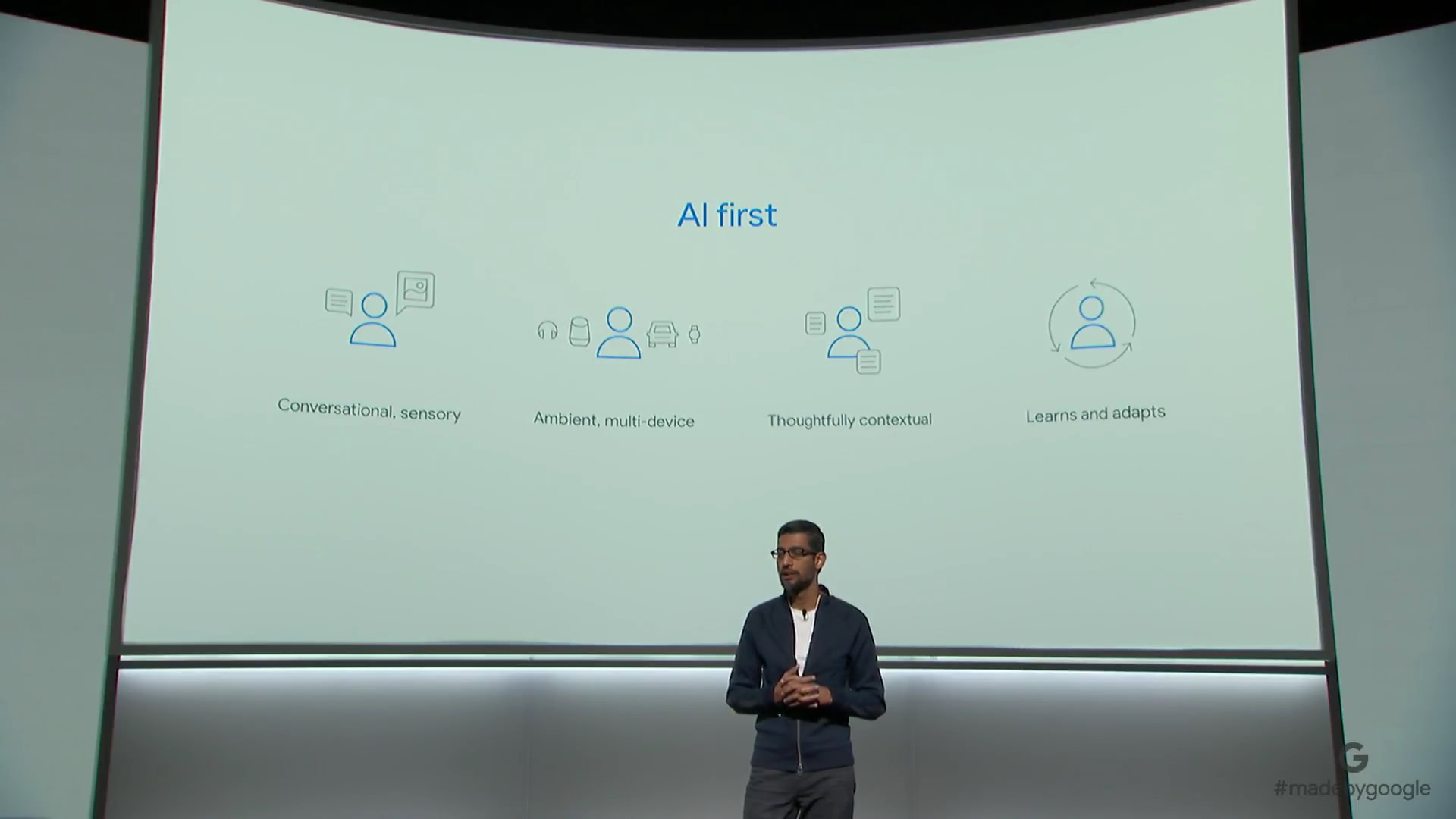

In an excerpt from an upcoming interview with Recode and MSNBC, Google’s Sundar Pichai provocatively compared AI to fire, noting its potential to harm as well as help those who wield it and live with it. If humanity is to embrace and rely on capabilities that exceed our own abilities, this is an important commentary worth exploring in more depth.

Rise of the machines

Before going any further, we should shake off any notion that Pichai is warning exclusively about the technological singularity or some post-apocalyptic sci-fi scenario where man is enslaved by machine, or ends up locked in a zoo for our own protection. There are merits to warning about over-dependence on or control exerted through a “rogue” sophisticated synthetic intelligence, but any form of artificial consciousness capable of such a feat is still very much theoretical. Even so, there are reasons to be concerned about even some less sophisticated current ML applications and some AI uses just around the corner.

The acceleration of machine learning has opened up a new paradigm in computing, exponentially extending capabilities ahead of human abilities. Today’s machine learning algorithms are able to crunch through huge amounts of data millions of times faster than us and correct their own behavior to learn more efficiently. This makes computing more human-like in its approach, but paradoxically tougher for us to follow exactly how such a system comes to its conclusions (a point we’ll explore more in depth later on).

“AI is one of the most important things humans are working on, it's more profound than electricity or fire ... AI holds the potential for some of the biggest advances we are going to see ... but we have to overcome its downsides too.”

Sundar Pichai

Sticking with the imminent future and machine learning, the obvious threat comes from who wields such power and for what purposes. While big data analysis may help cure diseases like cancer, the same technology can be used equally well for more nefarious purposes.

Government organizations like the NSA already chew through obscene amounts of information, and machine learning is probably already helping to refine these security techniques further. Although innocent citizens probably don’t like the thought of being profiled and spied upon, ML is already enabling more invasive monitoring of our lives. Big data is also a valuable asset in business, facilitating better risk assessment but also enabling deeper scrutiny of customers for loans, mortgages, or other important financial services.

Various details of our lives are already being used to draw conclusions about our likely political affiliations, probability of committing a crime or reoffending, purchasing habits, proclivity for certain occupations, and even our likelihood of academic and financial success. The problem with profiling is that it may not be accurate or fair, and in the wrong hands the data can be misused.

This places a lot of knowledge and power in the hands of very select groups, which could severely affect politics, diplomacy, and economics. Notable minds like Stephen Hawking, Elon Musk, and Sam Harris have raised similar concerns and opened up similar debates, so Pichai is not alone.

Big data can draw accurate conclusions about our political affiliations, probability of committing a crime, purchasing habits, and proclivity for certain occupations.

There’s also a more mundane risk to placing faith in systems based on machine learning. As people play a smaller role in producing the outcomes of a machine learning system, predicting and diagnosing faults becomes more difficult. Outcomes may change unexpectedly if erroneous inputs make their way into the system, and it could be even easier to miss them. Machine learning can be manipulated.

City-wide traffic management systems based on vision processing and machine learning might perform unexpectedly in an unanticipated regional emergency, or could be susceptible to abuse or hacking simply by interacting with the monitoring and learning mechanism. Alternatively, consider the potential abuse of algorithms that display selected news pieces or advertisements in your social media feed. Any system built on machine learning needs to be very well thought out if people are going to depend on it.

Stepping outside of computing, the very nature of the power and influence machine learning offers can be threatening. All of the above is a potent mix for social and political unrest, even ignoring the threat to power balances between states that an explosion in AI and machine-assisted systems poses. It’s not just the nature of AI and ML that could be a threat, but human attitudes and reactions towards them.

Utility and what defines us

Pichai seemed mostly convinced that AI will be used for the benefit and utility of humankind. He spoke quite specifically about solving problems like climate change, and the importance of coming to a consensus on the issues affecting humans that AI could solve.

It’s certainly a noble intent, but there’s a deeper issue with AI that Pichai doesn’t seem to touch on here: human influence.

AI appears to have gifted humanity with the ultimate blank canvas, yet it’s not clear if it’s possible or even wise for us to treat the development of artificial intelligence as such. It seems a given humans will create AI systems reflecting our needs, perceptions, and biases, all of which are shaped by our societal views and biological nature; after all, we are the ones programming them with our knowledge of color, objects, and language. At a basic level, programming is a reflection of the way humans think about problem solving.

It seems axiomatic that humans will create AI systems that reflect our needs, perceptions, and biases, which are shaped by both our societal views and our biological nature.

We may eventually also provide computers with concepts of human nature and character, justice and fairness, right and wrong. The very perception of issues that we use AI to solve can be shaped by both the positive and negative traits of our social and biological selves, and the proposed solutions could equally come into conflict with them.

How would we react if AI offered us solutions to problems that stood in contrast with our own morals or nature? We certainly can’t pass the complex ethical questions of our time to machines without due diligence and accountability.

Pichai is correct to identify the need for AI to focus on solving human problems, but this quickly runs into issues when we try to offload more subjective issues. Curing cancer is one thing, but prioritizing the allocation of limited emergency service resources on any given day is a more subjective task to teach a machine. Who can be certain we would like the results?

Given our tendencies towards ideology, cognitive dissonance, self-service, and utopianism, reliance on human-influenced algorithms to solve some ethically complex issues is a dangerous proposition. Tackling such problems will require a renewed emphasis on, and better public understanding of, morality, cognitive science, and, perhaps most importantly, the very nature of being human. That’s tougher than it sounds, as Google and Pichai himself recently split opinion with their handling of gender ideology versus inconvenient biological evidence.

Into the unknown

Pichai’s observation is an accurate and nuanced one. At face value, machine learning and synthetic intelligence have tremendous potential to enhance our lives and solve some of the most difficult problems of our time, or in the wrong hands create new problems which could spiral out of control. Under the surface, the power of big data and the increasing influence of AI in our lives present new issues in the realms of economics, politics, philosophy, and ethics, which have the potential to shape intelligent computing as either a positive or negative force for humanity.

The Terminators might not be coming for you, but the attitudes towards AI and the decisions being made about it and machine learning today certainly have the possibility to burn us in the future.