Sony eyes a future in 3D despite Project Tango's failure

Sony is, apparently, sticking around in the smartphone market to ensure it’s ready to pounce when the next big thing arrives. Perhaps it’s expecting the industry’s next leap to involve 3D imaging and augmented reality, as this is what Sony’s engineers are working on at its Atsugi Technology Center near Tokyo.

The company is developing infrared 3D sensors designed for smartphones and augmented reality products, as well as for applications ranging from industrial equipment to self-driving cars. These sensors are said to be able to map 3D environments and even detect objects and people in a scene. According to an October 2017 report from Bloomberg, Sony intends to begin mass production of these sensors later this year, and they could arrive in products before the end of 2018 or in early 2019. The market for them is estimated to be worth around $4.5 billion by 2022, according to Yole Développement.

Sony isn’t the first to start work on smart 3D mapping sensors and technology. Google’s Tango technology appeared inside two commercial smartphones, though the search giant is now focused on its more universal ARCore technology instead. Intel has also continued to invest in its RealSense hardware and platform, which provides similar functionality.

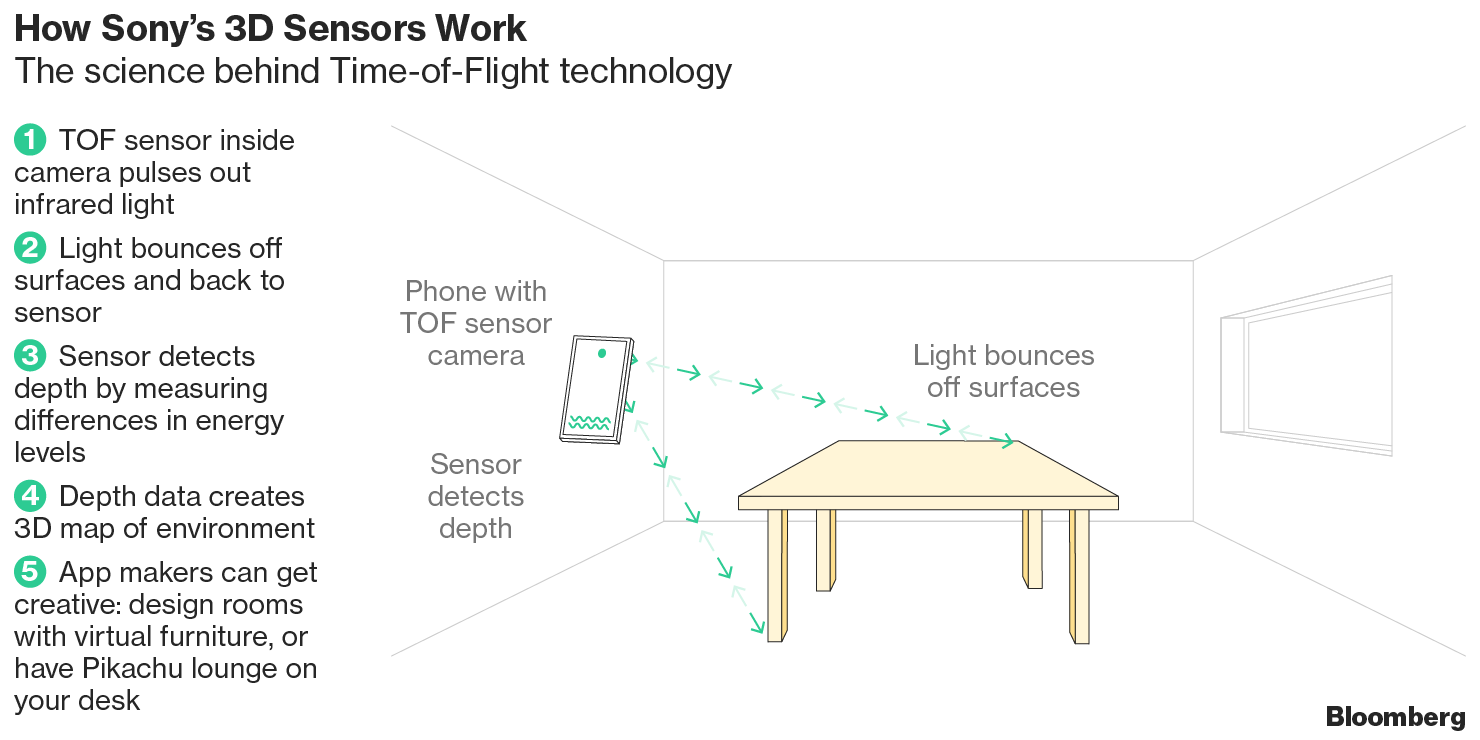

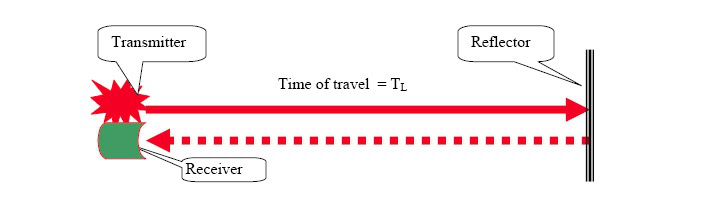

Much like RealSense and Tango, Sony’s implementation relies on a combination of infrared light pulses and complementary sensors that record the time taken for a pulse to bounce back in order to calculate its distance. These are known as time-of-flight (TOF) sensors.

In its most basic implementation, such as a rangefinder, a single infrared illumination unit is paired with a receiving photodiode to record when the light returns. Because the pulse travels to the object and back, the distance of an object from the TOF sensor is calculated by multiplying the round-trip time by the speed of light and halving the result (d = ct/2). In a sensor like the one Sony is developing, this is scaled up to combine multiple infrared transmitters and receiving photodiodes in a single package or TOF camera, allowing for the capture of more complex scenes.
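
As a rough illustration of the d = ct/2 relationship, here is a minimal Python sketch that converts a pulse's round-trip time into a distance. The function name and the sample timing are assumptions chosen for illustration, not details of Sony's sensor.

```python
# Speed of light in a vacuum, in metres per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Return the distance to a target given the pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is half of
    the total path length: d = c * t / 2.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    # Hypothetical reading: a pulse that returns after 10 nanoseconds.
    print(f"{tof_distance_m(10e-9):.3f} m")
```

A 10-nanosecond round trip works out to roughly 1.5 meters, which gives a sense of how precise the timing has to be at room-scale distances.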

“Instead of making images for the eyes of human beings, we’re creating them for the eyes of machines ... Whether it’s AR in smartphones or sensors in self-driving cars, computers will have a way of understanding their environment.”

Satoshi Yoshihara - Manager of Sony Sensor Division

The downside of TOF sensors is they typically don’t offer the same resolution as the traditional “2D” camera found on your smartphone. High-resolution TOF cameras are more expensive, but even low-resolution ones are capable of mapping objects with a reasonable degree of accuracy. Furthermore, by pinging thousands of pulses back to the sensor, often at more than 100 frames per second, it’s possible to combine readings to build a more accurate picture and even track movement through space in real time.
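
One simple way to picture "combining readings" is to average several noisy depth frames pixel by pixel. The sketch below uses only the Python standard library. The frame layout (a list of rows of distances in metres), the function name, and the use of a plain mean are all assumptions for illustration; real sensors likely apply more sophisticated filtering.

```python
from statistics import fmean
from typing import List

DepthFrame = List[List[float]]  # rows of per-pixel distances in metres


def average_depth_frames(frames: List[DepthFrame]) -> DepthFrame:
    """Combine several same-sized depth frames by averaging each pixel."""
    if not frames:
        raise ValueError("need at least one frame")
    rows = len(frames[0])
    cols = len(frames[0][0]) if rows else 0
    # Every frame must share the same shape so pixels line up.
    for frame in frames:
        if len(frame) != rows or any(len(row) != cols for row in frame):
            raise ValueError("all frames must have the same dimensions")
    return [
        [fmean(frame[r][c] for frame in frames) for c in range(cols)]
        for r in range(rows)
    ]


if __name__ == "__main__":
    # Three hypothetical 1x2 frames with slightly different readings.
    noisy = [[[1.0, 2.0]], [[1.2, 2.2]], [[0.8, 1.8]]]
    print(average_depth_frames(noisy))
```

Averaging trades some responsiveness for stability, so a system tracking fast motion would likely average fewer frames than one mapping a static room.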

Apple is using infrared for its Face ID technology. Many smartphones use a more basic version for fast camera focusing. These are all similar to the underlying idea of Microsoft’s Kinect accessory for the Xbox as well. Sony is leveraging its expertise in the image sensor industry, both on the research and manufacturing side, to improve the technology and carve out its place as one of the market’s most influential players. The company’s TOF sensors are smaller than existing models and able to calculate depth over greater distances. Apple’s technology may be good for a face right in front of the sensor, but Sony’s technology can map objects farther away in the room.

It’s possible that Apple and others may adopt Sony’s TOF technology in the future, especially if the company puts all of its manufacturing might behind it. Currently, STMicroelectronics is selling its FlightSense sensors to Apple and other smartphone OEMs for camera focusing.

Sony’s focus on a dedicated hardware approach to augmented reality and world mapping comes as Apple and Google, the two leaders in smartphone AR, prefer to support conventional camera hardware through their ARKit and ARCore platforms. Google’s Tango, which required dedicated infrared hardware, was simply too costly and time-consuming to convince smartphone OEMs to invest.

Sony may well face similar difficulties, at least when it comes to smartphone AR, if OEMs opt for solutions that work with traditional camera hardware, even if the results aren’t necessarily as good. However, Sony is targeting a much bigger market than just smartphones, and won’t be in short supply of customers for its sensors.

Dedicated hardware didn't work for Google's Tango, but Sony has the manufacturing clout and is targeting more than just phones

The use cases for TOF sensors are far-reaching. The automotive market is likely to offer a big opportunity for Sony, as are industrial and commercial applications that rely on high-accuracy 3D measurements. On the consumer side, TOF sensors are likely to find homes in simple devices that we interact with through 3D gestures, as well as in smartphones and other products with enough processing power to overlay augmented reality onto the real world.

Many of these use cases are going to rely on intelligent software too, particularly when it comes to image recognition situations powered by machine learning. There’s no word on how much software Sony is developing to complement its hardware products, or if it will be left mostly to the companies buying and implementing the sensors.

Sony is making a big bet on powering upcoming 3D mapping technologies, and possibly even a future full of augmented reality products too. The company seems convinced that we’re moving into a world of 3D interactions, but do you agree? Sound off in the comments!