
ML Kit Image Labeling: Determine an image’s content with machine learning

Machine learning (ML) can be a powerful addition to your Android projects. It helps you create apps that intelligently identify text, faces, objects, famous landmarks, and much more, and use that information to deliver compelling experiences to your users. However, getting started with machine learning isn’t exactly easy!

Even if you’re a seasoned ML expert, sourcing enough data to train your own machine learning models, and adapting and optimizing them for mobile devices, can be complex, time-consuming, and expensive.

ML Kit is a new machine learning SDK that aims to make machine learning accessible to everyone — even if you have zero ML experience!

Google’s ML Kit offers APIs and pre-trained models for common mobile use cases, including text recognition, face detection, and barcode scanning. In this article we’ll be focusing on the Image Labeling model and API. We’ll be building an Android app that can process an image and return labels for all the different entities it identifies within that image, like locations, products, people, activities, and animals.

Image Labeling is available on-device and in the cloud, and both approaches have strengths and weaknesses. To help you choose the approach that works best in your own Android applications, I’ll show you how to process an image on-device, using a local ML model that your app downloads at install-time, and how to perform Image Labeling in the cloud.

What is Image Labeling?

ML Kit’s Image Labeling is an API and model that can recognize entities in an image, and supply information about those entities in the form of labels.

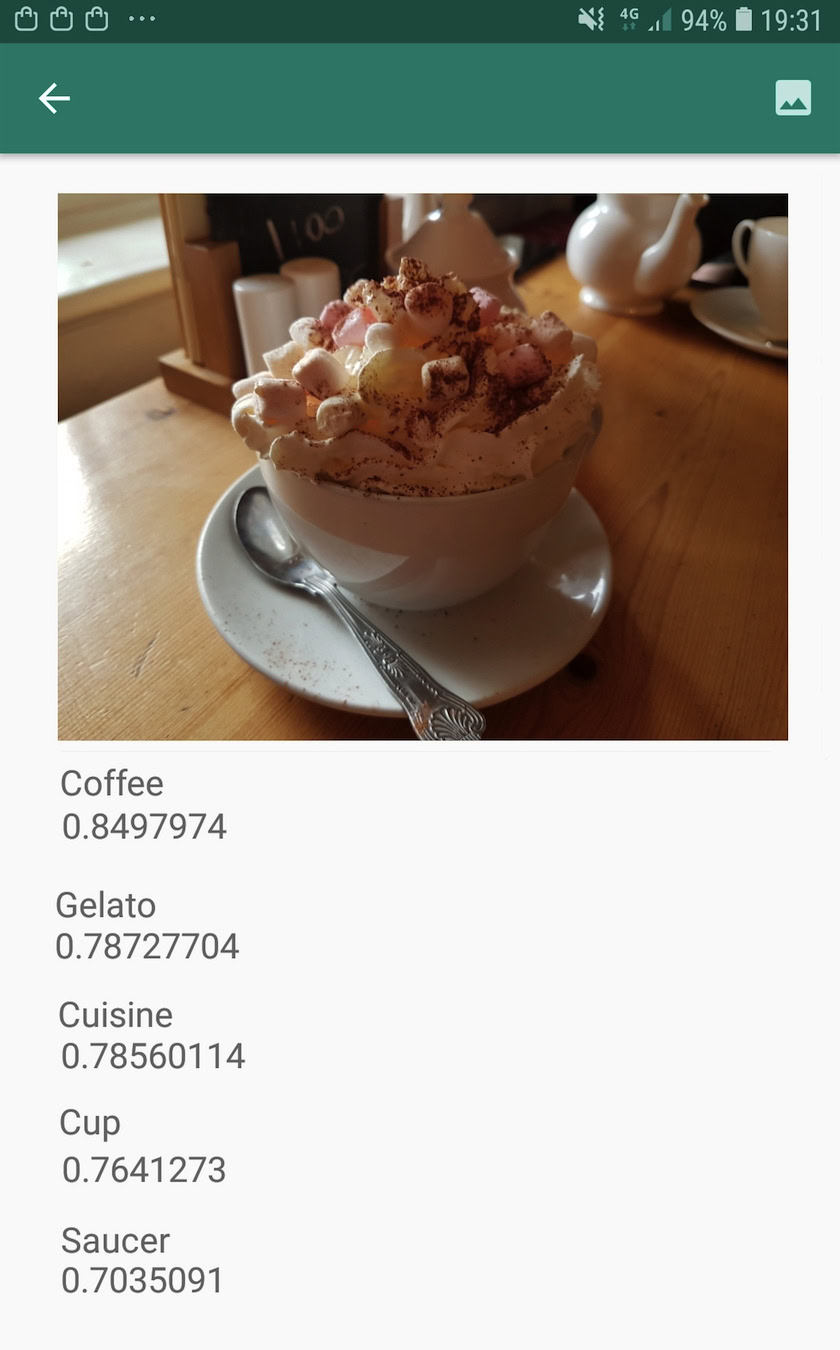

Each label has an accompanying score indicating how certain ML Kit is about this particular label. For example, if you provide ML Kit with an image of a fancy latte, then it might return labels such as “gelato,” “dessert,” and “coffee,” all with varying confidence scores. Your app must then decide which label is most likely to accurately reflect the image’s content — hopefully, in this scenario “coffee” will have the highest confidence score.
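To make the "pick the most likely label" step concrete, here is a minimal plain-Java sketch. The `Label` class is a hypothetical stand-in for whatever label object ML Kit returns, and the scores are made-up illustration values, not real ML Kit output.

```java
import java.util.Arrays;
import java.util.List;

final class BestLabelPicker {
    // Hypothetical stand-in for an ML Kit label: some text plus a confidence score from 0 to 1.
    static final class Label {
        final String text;
        final float confidence;

        Label(String text, float confidence) {
            this.text = text;
            this.confidence = confidence;
        }
    }

    // Returns the label with the highest confidence score, or null if the list is empty.
    static Label pickBest(List<Label> labels) {
        Label best = null;
        for (Label label : labels) {
            if (best == null || label.confidence > best.confidence) {
                best = label;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Made-up scores for the latte example.
        List<Label> labels = Arrays.asList(
                new Label("gelato", 0.52f),
                new Label("dessert", 0.61f),
                new Label("coffee", 0.93f));
        System.out.println(pickBest(labels).text); // prints "coffee"
    }
}
```

In a real app you may prefer to keep several high-scoring labels rather than a single winner, since the top label isn’t guaranteed to be the right one.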

Once you’ve identified an image’s content, you can use this information in all kinds of ways. You might tag photos with useful metadata, or automatically organize the user’s images into albums based on their subject matter.
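As a rough illustration of the album idea, the sketch below groups photos by their top label. It assumes you have already worked out one top label per photo; the file names and labels are invented for the example.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class AlbumOrganizer {
    // Takes a map of photo name -> top label, and returns a map of album name -> photos.
    // A TreeMap keeps the album names in alphabetical order.
    static Map<String, List<String>> groupByTopLabel(Map<String, String> topLabelByPhoto) {
        Map<String, List<String>> albums = new TreeMap<>();
        for (Map.Entry<String, String> entry : topLabelByPhoto.entrySet()) {
            albums.computeIfAbsent(entry.getValue(), key -> new ArrayList<>())
                    .add(entry.getKey());
        }
        return albums;
    }

    public static void main(String[] args) {
        // Hypothetical photos and labels; LinkedHashMap preserves insertion order.
        Map<String, String> photos = new LinkedHashMap<>();
        photos.put("IMG_1.jpg", "coffee");
        photos.put("IMG_2.jpg", "dog");
        photos.put("IMG_3.jpg", "coffee");
        System.out.println(groupByTopLabel(photos));
    }
}
```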

This API can also be handy for content moderation. If you give users the option to upload their own avatars, Image Labeling can help you filter out inappropriate images before they’re posted to your app.
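A moderation check along these lines could be sketched as follows. The blocked label names, the threshold, and the `Label` class are all assumptions made for illustration; the labels a real model returns may be worded differently, so treat this as a starting point rather than a working filter.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

final class AvatarModerator {
    // Hypothetical stand-in for a label returned by the Image Labeling API.
    static final class Label {
        final String text;
        final float confidence;

        Label(String text, float confidence) {
            this.text = text;
            this.confidence = confidence;
        }
    }

    // Rejects the image if any sufficiently confident label appears in the blocked set.
    // The blocked set is expected to contain lower-case entries.
    static boolean shouldReject(List<Label> labels, Set<String> blocked, float minConfidence) {
        for (Label label : labels) {
            boolean confident = label.confidence >= minConfidence;
            if (confident && blocked.contains(label.text.toLowerCase(Locale.ROOT))) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Example blocklist only; choose entries that match your own content policy.
        Set<String> blocked = new HashSet<>(Arrays.asList("weapon"));
        List<Label> labels = Arrays.asList(
                new Label("Person", 0.91f),
                new Label("Weapon", 0.85f));
        System.out.println(shouldReject(labels, blocked, 0.7f)); // prints "true"
    }
}
```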

The Image Labeling API is available both on-device and in the cloud, so you can pick and choose which approach makes the most sense for your particular app. You could implement both methods and let the user decide, or even switch between local and cloud-powered Image Labeling based on factors like whether the device is connected to a free Wi-Fi network or using its mobile data.
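One possible way to express that switching logic is a small decision function like the sketch below. The `Mode` enum, the method name, and the policy itself are assumptions for illustration; on Android, the "metered" flag could come from something like `ConnectivityManager.isActiveNetworkMetered()`.

```java
final class LabelingModeChooser {
    enum Mode { ON_DEVICE, CLOUD }

    // Hypothetical policy: only use the cloud model when the device is online,
    // the connection is not metered, and the user has opted in to cloud processing.
    static Mode choose(boolean isOnline, boolean isMetered, boolean userAllowsCloud) {
        if (isOnline && !isMetered && userAllowsCloud) {
            return Mode.CLOUD;
        }
        return Mode.ON_DEVICE;
    }

    public static void main(String[] args) {
        System.out.println(choose(true, false, true));  // prints "CLOUD"
        System.out.println(choose(true, true, true));   // prints "ON_DEVICE"
        System.out.println(choose(false, false, true)); // prints "ON_DEVICE"
    }
}
```

Falling back to the on-device model by default keeps the feature working offline and avoids surprising the user with extra data usage.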

If you’re making this decision, you’ll need to know the differences between on-device and cloud Image Labeling:

On device, or in the cloud?

There are several benefits to using the on-device model:

- It’s free – No matter how many requests your app submits, you won’t be charged for performing Image Labeling on-device.

- It doesn’t require an Internet connection – By using the local Image Labeling model, you can ensure your app’s ML Kit features remain functional, even when the device doesn’t have an active Internet connection. In addition, if you suspect your users might need to process a large number of images, or process high-resolution images, then you can help preserve their mobile data by opting for on-device image analysis.

- It’s faster – Since everything happens on-device, local image processing will typically return results quicker than the cloud equivalent.

The major drawback is that the on-device model has much less information to consult than its cloud-based counterpart. According to the official docs, on-device Image Labeling gives you access to over 400 labels covering the most commonly used concepts in photos. The cloud model has access to over 10,000 labels.

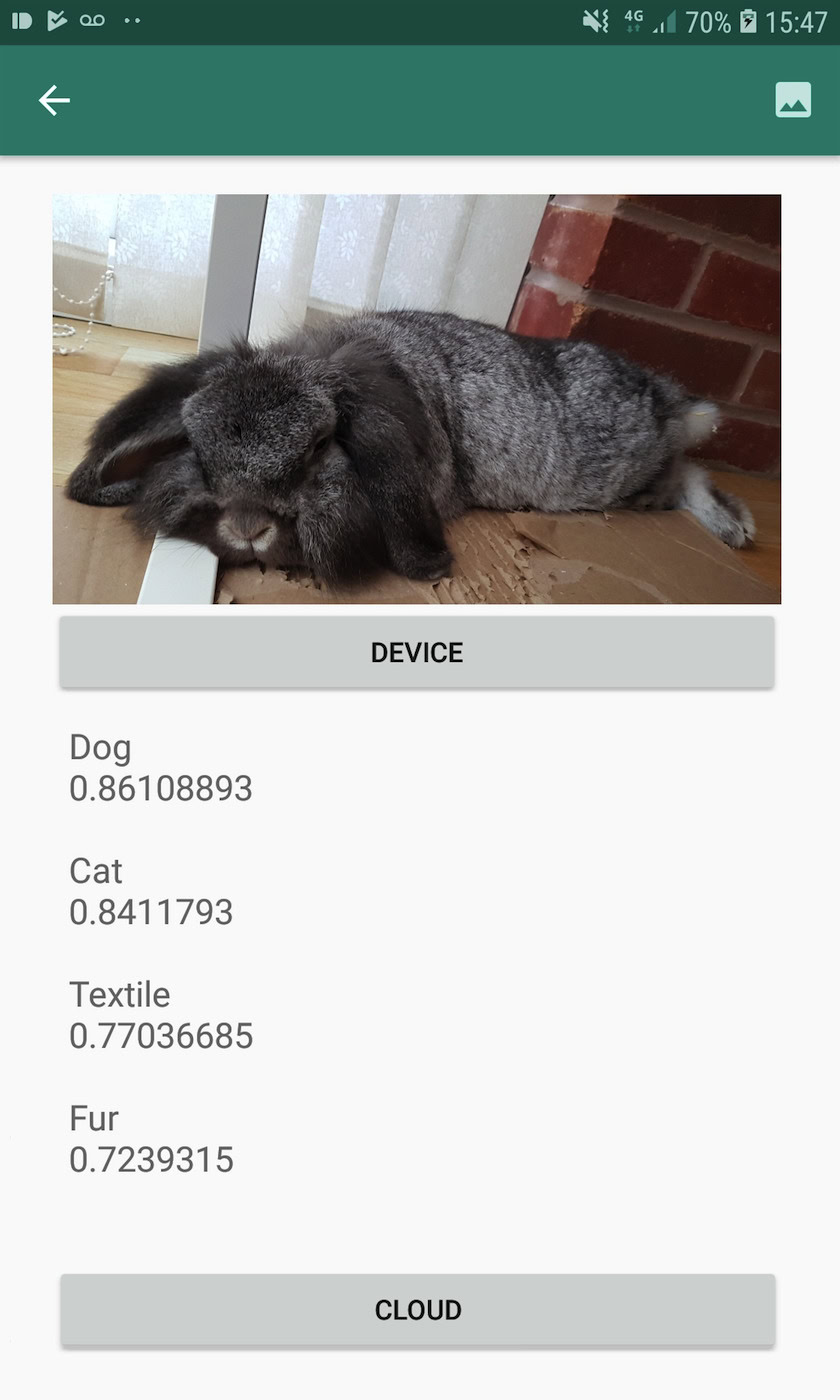

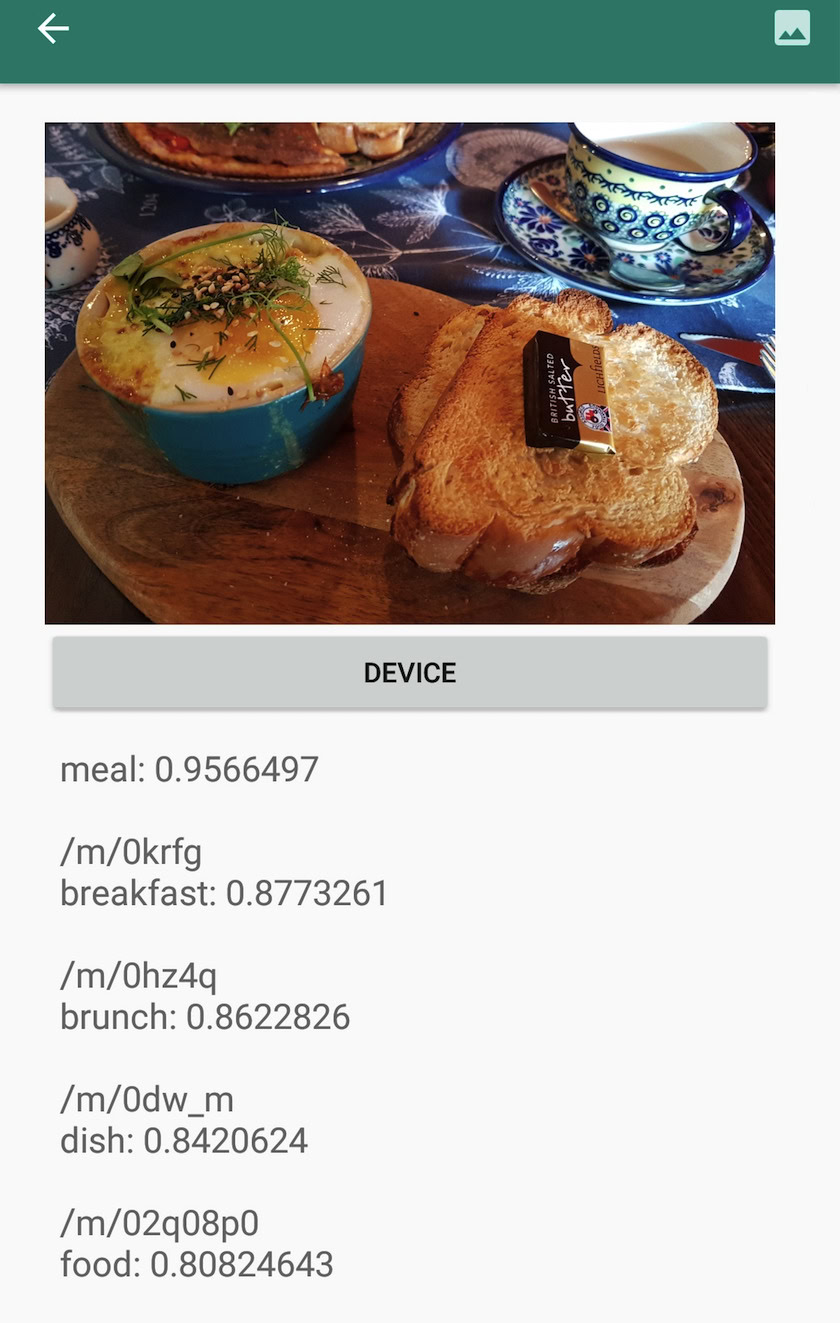

While accuracy will vary between images, you should be prepared to receive less accurate results when using Image Labeling’s on-device model. The following screenshot shows the labels and corresponding confidence scores for an image processed using the on-device model.

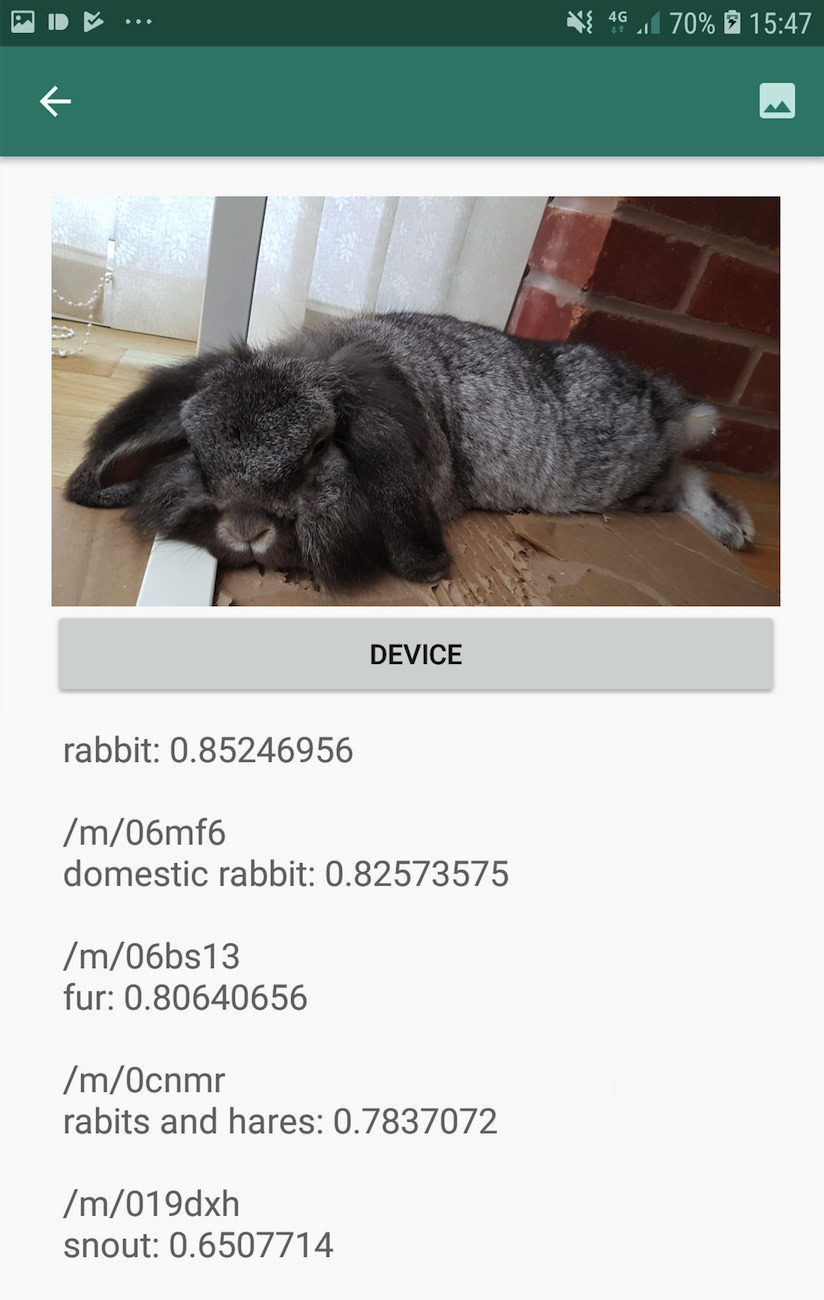

Now here are the labels and confidence scores retrieved using the cloud model.

As you can see, these labels are much more accurate, but this increased accuracy comes at a price!

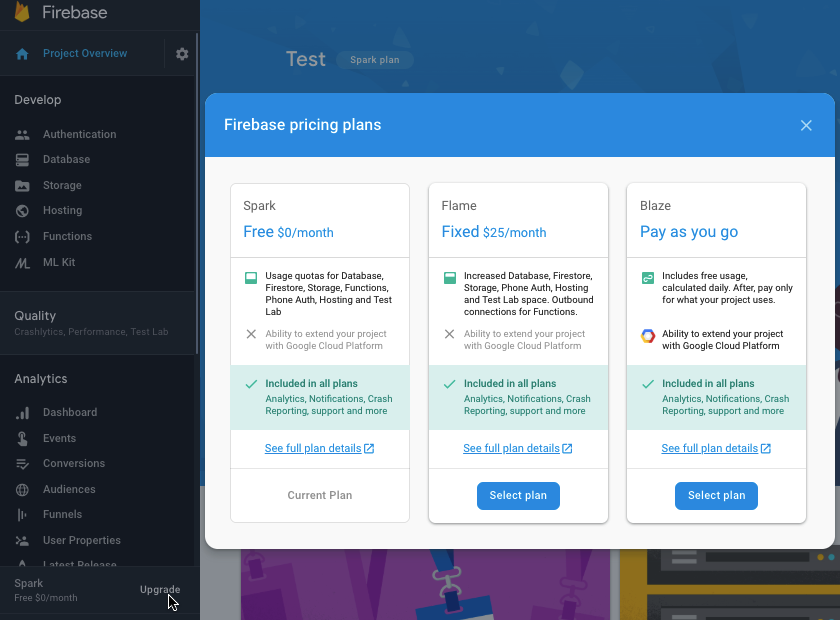

The cloud-based Image Labeling API is a premium service that requires upgrading your Firebase project to the pay-as-you-go Blaze plan. It also requires an internet connection, so if the user goes offline they’ll lose access to all parts of your app that rely on the Image Labeling API.

Which are we using, and will I need to enter my credit card details?

In our app, we’ll be implementing both the on-device and cloud Image Labeling models, so by the end of this article you’ll know how to harness the full power of ML Kit’s cloud-based processing, and how to benefit from the real-time capabilities of the on-device model.

Although the cloud model is a premium feature, there’s a free quota in place. At the time of writing, you can perform Image Labeling on up to 1,000 images per month for free. This free quota should be more than enough to complete this tutorial, but you will need to enter your payment details into the Firebase Console.

If you don’t want to hand over your credit card information, just skip this article’s cloud sections — you’ll still end up with a complete app.

Create your project and connect to Firebase

To start, create a new Android project with the settings of your choice.

Since ML Kit is a Firebase service, we need to create a connection between your Android Studio project, and a corresponding Firebase project:

- In your web browser, head over to the Firebase Console.

- Select “Add project” and give your project a name.

- Read the terms and conditions, and then select “I accept…” followed by “Create project.”

- Select “Add Firebase to your Android app.”

- Enter your project’s package name, and then click “Register app.”

- Select “Download google-services.json.” This file contains all the necessary Firebase metadata.

- In Android Studio, drag and drop the google-services.json file into your project’s “app” directory.

- Next, open your project-level build.gradle file and add Google Services:

classpath 'com.google.gms:google-services:4.0.1'

- Open your app-level build.gradle file, and apply the Google Services plugin, plus the dependencies for ML Kit, which allow you to integrate the ML Kit SDK into your app:

apply plugin: 'com.google.gms.google-services'

…

…

…

dependencies {

implementation fileTree(dir: 'libs', include: ['*.jar'])

//Add the following//

implementation 'com.google.firebase:firebase-core:16.0.5'

implementation 'com.google.firebase:firebase-ml-vision:18.0.1'

implementation 'com.google.firebase:firebase-ml-vision-image-label-model:17.0.2'

}

- To make sure all these dependencies are available to your app, sync your project when prompted.

- Next, let the Firebase Console know you’ve successfully installed Firebase. Run your application on either a physical Android smartphone or tablet, or an Android Virtual Device (AVD).

- Back in the Firebase Console, select “Run app to verify installation.”

- Firebase will now check that everything is working correctly. Once Firebase has successfully detected your app, it’ll display a “Congratulations” message. Select “Continue to the console.”

On-device Image Labeling: Downloading Google’s pre-trained models

To perform on-device Image Labeling, your app needs access to a local ML Kit model. By default, ML Kit only downloads local models as and when they’re required, so your app will download the Image Labeling model the first time it needs to use that particular model. This could potentially result in the user trying to access one of your app’s features, only to then be left waiting while your app downloads the model(s) necessary to deliver that feature.

To provide the best on-device experience, you should take a proactive approach and download the required local model(s) at install-time. You can enable install-time downloads by adding “com.google.firebase.ml.vision.DEPENDENCIES” metadata to your app’s Manifest.

While we have the Manifest open, I’m also going to add the WRITE_EXTERNAL_STORAGE permission, which we’ll be using later in this tutorial.

<?xml version="1.0" encoding="utf-8"?>

<manifest xmlns:android="http://schemas.android.com/apk/res/android"

package="com.jessicathornsby.imagelabelling">

<!-- Add the WRITE_EXTERNAL_STORAGE permission -->

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

<application

android:allowBackup="true"

android:icon="@mipmap/ic_launcher"

android:label="@string/app_name"

android:roundIcon="@mipmap/ic_launcher_round"

android:supportsRtl="true"

android:theme="@style/AppTheme">

<activity android:name=".MainActivity">

<intent-filter>

<action android:name="android.intent.action.MAIN" />

<category android:name="android.intent.category.LAUNCHER" />

</intent-filter>

</activity>

<!-- Add the following metadata -->

<meta-data

android:name="com.google.firebase.ml.vision.DEPENDENCIES"

android:value="label" />

</application>

</manifest>

Now, as soon as our app is installed from the Google Play Store, it’ll automatically download the ML models specified by “android:value.”

Building our Image Labeling layout

I want my layout to consist of the following:

- An ImageView – Initially, this will display a placeholder, but it’ll update once the user selects an image from their device’s gallery.

- A “Device” button – This is how the user will submit their image to the local Image Labeling model.

- A “Cloud” button – This is how the user will submit their image to the cloud-based Image Labeling model.

- A TextView – This is where we’ll display the retrieved labels and their corresponding confidence scores.

- A ScrollView – Since there’s no guarantee the image and all of the labels will fit neatly on-screen, I’m going to display this content inside a ScrollView.

Here’s my completed activity_main.xml file:

<?xml version="1.0" encoding="utf-8"?>

<RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:padding="20dp"

tools:context=".MainActivity">

<ScrollView

android:layout_width="match_parent"

android:layout_height="match_parent"

android:layout_alignParentTop="true"

android:layout_centerHorizontal="true">

<LinearLayout

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:orientation="vertical">

<ImageView

android:id="@+id/imageView"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:adjustViewBounds="true"

android:src="@drawable/ic_placeholder" />

<Button

android:id="@+id/btn_device"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:text="Device" />

<TextView

android:id="@+id/textView"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:layout_marginTop="20dp"

android:textAppearance="@style/TextAppearance.AppCompat.Medium" />

<Button

android:id="@+id/btn_cloud"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:text="Cloud" />

</LinearLayout>

</ScrollView>

</RelativeLayout>

This layout references an “ic_placeholder” drawable, which we’ll need to create:

- Select File > New > Image Asset from the Android Studio toolbar.

- Open the “Icon Type” dropdown and select “Action Bar and Tab Icons.”

- Make sure the “Clip Art” radio button is selected.

- Give the “Clip Art” button a click.

- Select the image that you want to use as your placeholder; I’m using “Add to photos.”

- Click “OK.”

- In the “Name” field, enter “ic_placeholder.”

- Click “Next.” Read the on-screen information, and if you’re happy to proceed then click “Finish.”

Action bar icons: Choosing an image

Next, we need to create an action bar item, which will launch the user’s gallery, ready for them to select an image.

You define action bar icons inside a menu resource file, which lives inside a “res/menu” directory. If your project doesn’t already contain a “menu” directory, then you’ll need to create one:

- Control-click your project’s “res” directory and select New > Android Resource Directory.

- Open the “Resource type” dropdown and select “menu.”

- The “Directory name” should update to “menu” automatically, but if it doesn’t then you’ll need to rename it manually.

- Click “OK.”

Next, create the menu resource file:

- Control-click your project’s “menu” directory and select New > Menu resource file.

- Name this file “my_menu.”

- Click “OK.”

- Open the “my_menu.xml” file, and add the following:

<menu xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

tools:context="com.firebase.gde.MainActivity">

<!-- Create an item element for every action -->

<item

android:id="@+id/action_gallery"

android:orderInCategory="102"

android:title="@string/action_gallery"

android:icon="@drawable/ic_gallery"

app:showAsAction="ifRoom"/>

</menu>

The menu file references an “action_gallery” string, so open your project’s res/values/strings.xml file and create this resource. While I’m here, I’m also defining all the other strings we’ll be using throughout this project:

<resources>

<string name="app_name">ImageLabelling</string>

<string name="action_gallery">Gallery</string>

<string name="permission_request">This app needs to access files on your device</string>

</resources>

Next, we need to create the action bar’s “ic_gallery” icon:

- Select File > New > Image Asset from the Android Studio toolbar.

- Set the “Icon Type” dropdown to “Action Bar and Tab Icons.”

- Click the “Clip Art” button.

- Choose a drawable; I’m using “image.”

- Click “OK.”

- To ensure this icon is clearly visible in your app’s action bar, open the “Theme” dropdown and select “HOLO_DARK.”

- Name this icon “ic_gallery.”

- Click “Next,” followed by “Finish.”

Handling permission requests and click events

I’m going to perform all the tasks that aren’t directly related to the Image Labeling API in a separate BaseActivity class. This includes instantiating the menu, handling action bar click events, requesting access to the device’s storage and then using onRequestPermissionsResult to check the user’s response to this permission request.

- Select File > New > Java class from the Android Studio toolbar.

- Name this class “BaseActivity.”

- Click “OK.”

- Open BaseActivity and add the following:

import android.Manifest;

import android.content.Intent;

import android.content.pm.PackageManager;

import android.os.Bundle;

import android.provider.MediaStore;

import android.support.annotation.NonNull;

import android.support.annotation.Nullable;

import android.support.v4.app.ActivityCompat;

import android.support.v7.app.ActionBar;

import android.support.v7.app.AppCompatActivity;

import android.view.Menu;

import android.view.MenuItem;

import java.io.File;

public class BaseActivity extends AppCompatActivity {

public static final int RC_STORAGE_PERMS1 = 101;

public static final int RC_SELECT_PICTURE = 103;

public static final String ACTION_BAR_TITLE = "action_bar_title";

public File imageFile;

@Override

protected void onCreate(@Nullable Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

ActionBar actionBar = getSupportActionBar();

if (actionBar != null) {

actionBar.setDisplayHomeAsUpEnabled(true);

actionBar.setTitle(getIntent().getStringExtra(ACTION_BAR_TITLE));

}

}

@Override

public boolean onCreateOptionsMenu(Menu menu) {

getMenuInflater().inflate(R.menu.my_menu, menu);

return true;

}

@Override

public boolean onOptionsItemSelected(MenuItem item) {

switch (item.getItemId()) {

//If “action_gallery” is selected, then...//

case R.id.action_gallery:

//...check we have the WRITE_EXTERNAL_STORAGE permission//

checkStoragePermission(RC_STORAGE_PERMS1);

break;

}

return super.onOptionsItemSelected(item);

}

@Override

public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {

super.onRequestPermissionsResult(requestCode, permissions, grantResults);

switch (requestCode) {

case RC_STORAGE_PERMS1:

//If the permission request is granted, then...//

if (grantResults.length > 0 && grantResults[0] == PackageManager.PERMISSION_GRANTED) {

//...call selectPicture//

selectPicture();

//If the permission request is denied, then...//

} else {

//...display the “permission_request” string//

MyHelper.needPermission(this, requestCode, R.string.permission_request);

}

break;

}

}

//Check whether the user has granted the WRITE_EXTERNAL_STORAGE permission//

public void checkStoragePermission(int requestCode) {

switch (requestCode) {

case RC_STORAGE_PERMS1:

int hasWriteExternalStoragePermission = ActivityCompat.checkSelfPermission(this, Manifest.permission.WRITE_EXTERNAL_STORAGE);

//If we have access to external storage...//

if (hasWriteExternalStoragePermission == PackageManager.PERMISSION_GRANTED) {

//...call selectPicture, which launches an Activity where the user can select an image//

selectPicture();

//If permission hasn’t been granted, then...//

} else {

//...request the permission//

ActivityCompat.requestPermissions(this, new String[]{Manifest.permission.WRITE_EXTERNAL_STORAGE}, requestCode);

}

break;

}

}

private void selectPicture() {

imageFile = MyHelper.createTempFile(imageFile);

Intent intent = new Intent(Intent.ACTION_PICK, MediaStore.Images.Media.EXTERNAL_CONTENT_URI);

startActivityForResult(intent, RC_SELECT_PICTURE);

}

}

Don’t waste time processing large images!

Next, create a new “MyHelper” class, where we’ll resize the user’s chosen image. By scaling the image down before passing it to ML Kit’s detectors, we can accelerate the image processing tasks.
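Before looking at the full class, the scaling arithmetic can be sketched on its own in plain Java. This is an illustrative helper, not part of the project: the method name is hypothetical, and the guards for an unmeasured view and a minimum factor of 1 are additions that the tutorial code doesn’t include.

```java
final class SampleSizeCalculator {
    // Works out how many times smaller the decoded image can be while still
    // covering the view. Returns 1 (no scaling) if the view has not been
    // measured yet, or if the photo is already smaller than the view.
    static int computeSampleSize(int photoW, int photoH, int viewW, int viewH) {
        if (viewW <= 0 || viewH <= 0) {
            return 1; // Avoids dividing by zero for an unmeasured view
        }
        int ratio = Math.min(photoW / viewW, photoH / viewH);
        return Math.max(1, ratio);
    }

    public static void main(String[] args) {
        // A 4000x3000 photo shown in a 1000x1000 view
        System.out.println(computeSampleSize(4000, 3000, 1000, 1000)); // prints "3"
        // A photo that is smaller than the view is left at full size
        System.out.println(computeSampleSize(800, 600, 1080, 1920));   // prints "1"
    }
}
```

The MyHelper class that follows feeds the same kind of ratio into BitmapFactory.Options.inSampleSize. Note that the decoder rounds this value down to the nearest power of two, so a ratio of 3 is effectively treated as 2.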

import android.app.Activity;

import android.app.Dialog;

import android.content.Context;

import android.content.DialogInterface;

import android.content.Intent;

import android.database.Cursor;

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.net.Uri;

import android.os.Environment;

import android.provider.MediaStore;

import android.provider.Settings;

import android.support.v7.app.AlertDialog;

import android.widget.ImageView;

import android.widget.LinearLayout;

import android.widget.ProgressBar;

import java.io.File;

import java.io.FileNotFoundException;

import java.io.FileOutputStream;

import java.io.IOException;

import static android.graphics.BitmapFactory.decodeFile;

import static android.graphics.BitmapFactory.decodeStream;

public class MyHelper {

private static Dialog mDialog;

public static String getPath(Context context, Uri uri) {

String path = "";

String[] projection = {MediaStore.Images.Media.DATA};

Cursor cursor = context.getContentResolver().query(uri, projection, null, null, null);

int column_index;

if (cursor != null) {

column_index = cursor.getColumnIndexOrThrow(MediaStore.Images.Media.DATA);

cursor.moveToFirst();

path = cursor.getString(column_index);

cursor.close();

}

return path;

}

public static File createTempFile(File file) {

File dir = new File(Environment.getExternalStorageDirectory().getPath() + "/com.example.mlkit");

if (!dir.exists() || !dir.isDirectory()) {

dir.mkdirs();

}

if (file == null) {

file = new File(dir, "original.jpg");

}

return file;

}

public static void showDialog(Context context) {

mDialog = new Dialog(context);

mDialog.addContentView(

new ProgressBar(context),

new LinearLayout.LayoutParams(LinearLayout.LayoutParams.WRAP_CONTENT, LinearLayout.LayoutParams.WRAP_CONTENT)

);

mDialog.setCancelable(false);

if (!mDialog.isShowing()) {

mDialog.show();

}

}

public static void dismissDialog() {

if (mDialog != null && mDialog.isShowing()) {

mDialog.dismiss();

}

}

public static void needPermission(final Activity activity, final int requestCode, int msg) {

AlertDialog.Builder alert = new AlertDialog.Builder(activity);

alert.setMessage(msg);

alert.setPositiveButton(android.R.string.ok, new DialogInterface.OnClickListener() {

@Override

public void onClick(DialogInterface dialogInterface, int i) {

dialogInterface.dismiss();

Intent intent = new Intent(Settings.ACTION_APPLICATION_DETAILS_SETTINGS);

intent.setData(Uri.parse("package:" + activity.getPackageName()));

activity.startActivityForResult(intent, requestCode);

}

});

alert.setNegativeButton(android.R.string.cancel, new DialogInterface.OnClickListener() {

@Override

public void onClick(DialogInterface dialogInterface, int i) {

dialogInterface.dismiss();

}

});

alert.setCancelable(false);

alert.show();

}

public static Bitmap resizeImage(File imageFile, Context context, Uri uri, ImageView view) {

BitmapFactory.Options options = new BitmapFactory.Options();

try {

//Read only the image’s dimensions on the first pass//

options.inJustDecodeBounds = true;

decodeStream(context.getContentResolver().openInputStream(uri), null, options);

int photoW = options.outWidth;

int photoH = options.outHeight;

//Decode the actual pixels on the second pass, scaled down to suit the ImageView//

options.inJustDecodeBounds = false;

options.inSampleSize = Math.min(photoW / view.getWidth(), photoH / view.getHeight());

return compressImage(imageFile, BitmapFactory.decodeStream(context.getContentResolver().openInputStream(uri), null, options));

} catch (FileNotFoundException e) {

e.printStackTrace();

return null;

}

}

public static Bitmap resizeImage(File imageFile, String path, ImageView view) {

BitmapFactory.Options options = new BitmapFactory.Options();

options.inJustDecodeBounds = true;

decodeFile(path, options);

int photoW = options.outWidth;

int photoH = options.outHeight;

options.inJustDecodeBounds = false;

options.inSampleSize = Math.min(photoW / view.getWidth(), photoH / view.getHeight());

return compressImage(imageFile, BitmapFactory.decodeFile(path, options));

}

private static Bitmap compressImage(File imageFile, Bitmap bmp) {

try {

FileOutputStream fos = new FileOutputStream(imageFile);

bmp.compress(Bitmap.CompressFormat.JPEG, 80, fos);

fos.close();

} catch (IOException e) {

e.printStackTrace();

}

return bmp;

}

}

Displaying the user’s chosen image

Next, we need to grab the image the user selected from their gallery, and display it as part of our ImageView.

import android.content.Intent;

import android.graphics.Bitmap;

import android.net.Uri;

import android.os.Bundle;

import android.view.View;

import android.widget.ImageView;

import android.widget.TextView;

public class MainActivity extends BaseActivity implements View.OnClickListener {

private Bitmap mBitmap;

private ImageView mImageView;

private TextView mTextView;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

mTextView = findViewById(R.id.textView);

mImageView = findViewById(R.id.imageView);

}

@Override

protected void onActivityResult(int requestCode, int resultCode, Intent data) {

super.onActivityResult(requestCode, resultCode, data);

if (resultCode == RESULT_OK) {

switch (requestCode) {

case RC_STORAGE_PERMS1:

checkStoragePermission(requestCode);

break;

case RC_SELECT_PICTURE:

Uri dataUri = data.getData();

String path = MyHelper.getPath(this, dataUri);

if (path == null) {

mBitmap = MyHelper.resizeImage(imageFile, this, dataUri, mImageView);

} else {

mBitmap = MyHelper.resizeImage(imageFile, path, mImageView);

}

if (mBitmap != null) {

mTextView.setText(null);

mImageView.setImageBitmap(mBitmap);

}

break;

}

}

}

@Override

public void onClick(View view) {

}

}

Teaching an app to label images on-device

We’ve laid the groundwork, so we’re ready to start labeling some images!

Customize the image labeler

While you could use ML Kit’s image labeler out of the box, you can also customize it by creating a FirebaseVisionLabelDetectorOptions object, and applying your own settings.

I’m going to create a FirebaseVisionLabelDetectorOptions object, and use it to tweak the confidence threshold. By default, ML Kit only returns labels with a confidence score of 0.5 or higher. I’m going to raise the bar, and enforce a confidence threshold of 0.7.

FirebaseVisionLabelDetectorOptions options = new FirebaseVisionLabelDetectorOptions.Builder()

.setConfidenceThreshold(0.7f)

.build();

Create a FirebaseVisionImage object

ML Kit can only process images when they’re in the FirebaseVisionImage format, so our next task is converting the user’s chosen image into a FirebaseVisionImage object.

Since we’re working with Bitmaps, we need to call the fromBitmap() utility method of the FirebaseVisionImage class, and pass it our Bitmap:

FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(mBitmap);

Instantiate the FirebaseVisionLabelDetector

ML Kit has different detector classes for each of its image recognition operations. Since we’re working with the Image Labeling API, we need to create an instance of FirebaseVisionLabelDetector.

If we were using the detector’s default settings, then we could instantiate the FirebaseVisionLabelDetector using getVisionLabelDetector(). However, since we’ve made some changes to the detector’s default settings, we instead need to pass the FirebaseVisionLabelDetectorOptions object during instantiation:

FirebaseVisionLabelDetector detector = FirebaseVision.getInstance().getVisionLabelDetector(options);

The detectInImage() method

Next, we need to pass the FirebaseVisionImage object to the FirebaseVisionLabelDetector’s detectInImage method, so it can scan and label the image’s content. We also need to register an OnSuccessListener and an OnFailureListener, so we’re notified whenever results become available, and implement the related onSuccess and onFailure callbacks.

detector.detectInImage(image).addOnSuccessListener(new OnSuccessListener<List<FirebaseVisionLabel>>() {

@Override

public void onSuccess(List<FirebaseVisionLabel> labels) {

//Do something if a label is detected//

}

}).addOnFailureListener(new OnFailureListener() {

@Override

public void onFailure(@NonNull Exception e) {

//Task failed with an exception//

}

});

Retrieving the labels and confidence scores

Assuming the image labeling operation is a success, a list of FirebaseVisionLabel objects will be passed to our app’s OnSuccessListener. Each FirebaseVisionLabel object contains the label plus its associated confidence score, so the next step is retrieving this information and displaying it as part of our TextView:

@Override

public void onSuccess(List<FirebaseVisionLabel> labels) {

for (FirebaseVisionLabel label : labels) {

mTextView.append(label.getLabel() + "\n");

mTextView.append(label.getConfidence() + "\n\n");

}

}

At this point, your MainActivity should look something like this:

import android.content.Intent;

import android.graphics.Bitmap;

import android.net.Uri;

import android.os.Bundle;

import android.support.annotation.NonNull;

import android.view.View;

import android.widget.ImageView;

import android.widget.TextView;

import com.google.android.gms.tasks.OnFailureListener;

import com.google.android.gms.tasks.OnSuccessListener;

import com.google.firebase.ml.vision.FirebaseVision;

import com.google.firebase.ml.vision.common.FirebaseVisionImage;

import com.google.firebase.ml.vision.label.FirebaseVisionLabel;

import com.google.firebase.ml.vision.label.FirebaseVisionLabelDetector;

import com.google.firebase.ml.vision.label.FirebaseVisionLabelDetectorOptions;

import java.util.List;

public class MainActivity extends BaseActivity implements View.OnClickListener {

private Bitmap mBitmap;

private ImageView mImageView;

private TextView mTextView;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

mTextView = findViewById(R.id.textView);

mImageView = findViewById(R.id.imageView);

findViewById(R.id.btn_device).setOnClickListener(this);

findViewById(R.id.btn_cloud).setOnClickListener(this);

}

@Override

public void onClick(View view) {

mTextView.setText(null);

switch (view.getId()) {

case R.id.btn_device:

if (mBitmap != null) {

//Configure the detector//

FirebaseVisionLabelDetectorOptions options = new FirebaseVisionLabelDetectorOptions.Builder()

//Set the confidence threshold//

.setConfidenceThreshold(0.7f)

.build();

//Create a FirebaseVisionImage object//

FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(mBitmap);

//Create an instance of FirebaseVisionLabelDetector//

FirebaseVisionLabelDetector detector =

FirebaseVision.getInstance().getVisionLabelDetector(options);

//Register an OnSuccessListener//

detector.detectInImage(image).addOnSuccessListener(new OnSuccessListener<List<FirebaseVisionLabel>>() {

@Override

//Implement the onSuccess callback//

public void onSuccess(List<FirebaseVisionLabel> labels) {

for (FirebaseVisionLabel label : labels) {

//Display the label and confidence score in our TextView//

mTextView.append(label.getLabel() + "\n");

mTextView.append(label.getConfidence() + "\n\n");

}

}

//Register an OnFailureListener//

}).addOnFailureListener(new OnFailureListener() {

@Override

public void onFailure(@NonNull Exception e) {

mTextView.setText(e.getMessage());

}

});

}

}

}

@Override

protected void onActivityResult(int requestCode, int resultCode, Intent data) {

super.onActivityResult(requestCode, resultCode, data);

if (resultCode == RESULT_OK) {

switch (requestCode) {

case RC_STORAGE_PERMS1:

checkStoragePermission(requestCode);

break;

case RC_SELECT_PICTURE:

Uri dataUri = data.getData();

String path = MyHelper.getPath(this, dataUri);

if (path == null) {

mBitmap = MyHelper.resizeImage(imageFile, this, dataUri, mImageView);

} else {

mBitmap = MyHelper.resizeImage(imageFile, path, mImageView);

}

if (mBitmap != null) {

mTextView.setText(null);

mImageView.setImageBitmap(mBitmap);

}

break;

}

}

}

}

Analyze an image with ML Kit

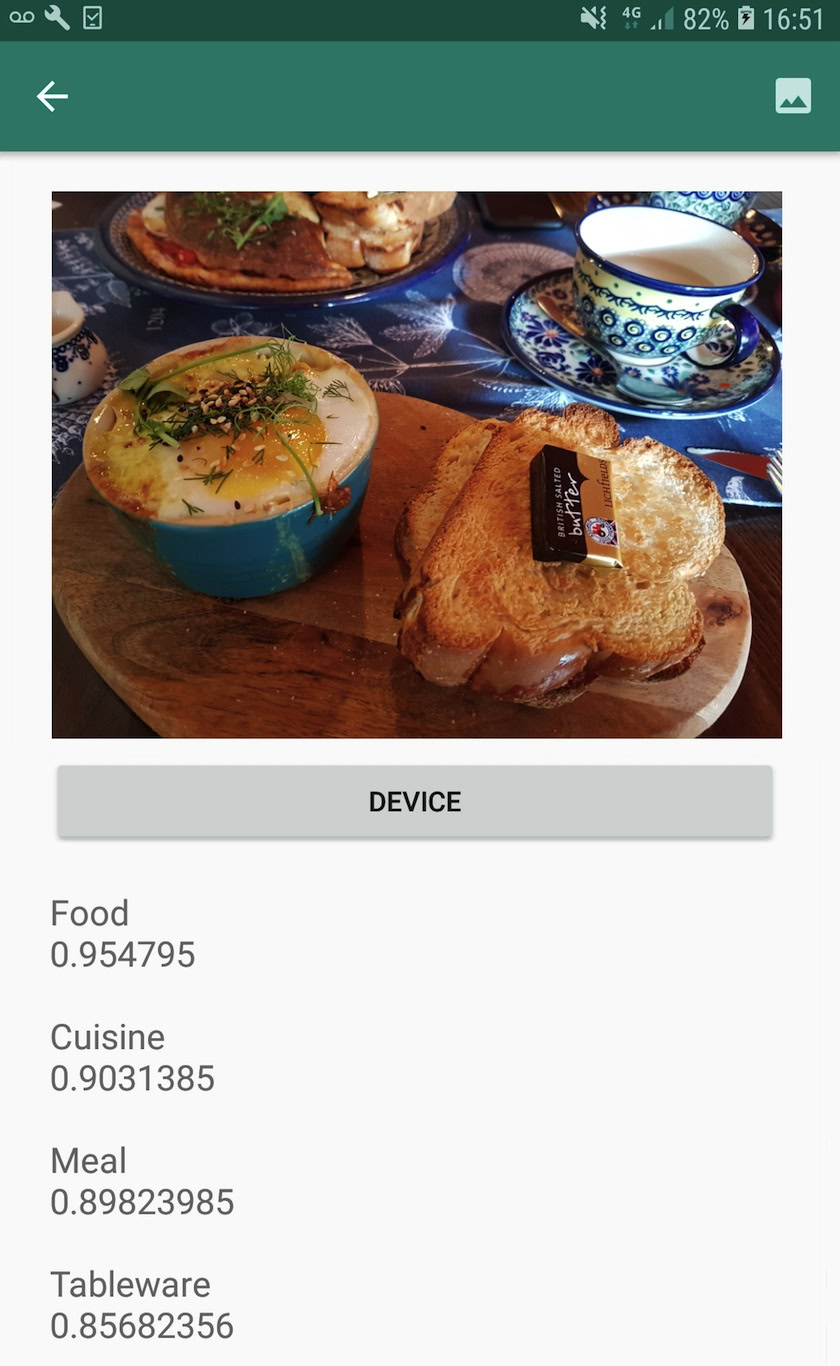

At this point, our app can download ML Kit’s Image Labeling model, process an image on-device, and then display the labels and corresponding confidence scores for that image. It’s time to put our application to the test:

- Install this project on your Android device, or AVD.

- Tap the action bar icon to launch your device’s Gallery.

- Select the image that you want to process.

- Give the “Device” button a tap.

This app will now analyze your image using the on-device ML Kit model, and display a selection of labels and confidence scores for that image.
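To get a feel for what a confidence threshold does to the results you see, here is a plain-Java sketch that filters a list of labels and formats the survivors for display. The `Label` class, the sample scores, and the percentage formatting are assumptions for illustration; ML Kit applies its own threshold inside the detector, so you wouldn’t normally need to repeat this filtering yourself.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Locale;

final class LabelFormatter {
    // Hypothetical stand-in for a label returned by the detector.
    static final class Label {
        final String text;
        final float confidence;

        Label(String text, float confidence) {
            this.text = text;
            this.confidence = confidence;
        }
    }

    // Keeps labels scoring at or above the threshold, formatted as "label: NN%".
    static List<String> describe(List<Label> labels, float threshold) {
        List<String> lines = new ArrayList<>();
        for (Label label : labels) {
            if (label.confidence >= threshold) {
                int percent = Math.round(label.confidence * 100);
                lines.add(String.format(Locale.ROOT, "%s: %d%%", label.text, percent));
            }
        }
        return lines;
    }

    public static void main(String[] args) {
        // Made-up scores; "dessert" falls below the 0.7 threshold and is dropped.
        List<Label> labels = Arrays.asList(
                new Label("coffee", 0.93f),
                new Label("dessert", 0.65f),
                new Label("cup", 0.70f));
        for (String line : describe(labels, 0.7f)) {
            System.out.println(line);
        }
    }
}
```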

Analyzing images in the cloud

Now that our app can process images on-device, let’s move on to the cloud-based API.

The code for processing an image using ML Kit’s cloud model is very similar to the code we used to process an image on-device. Most of the time, you simply need to add the word “Cloud” to your code. For example, we’ll be replacing FirebaseVisionLabelDetector with FirebaseVisionCloudLabelDetector.

Once again, we can use the default image labeler or customize it. By default, the cloud detector uses the stable model, and returns a maximum of 10 results. You can tweak these settings by building a FirebaseVisionCloudDetectorOptions object.
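If you’re wondering what a cap on results amounts to, the sketch below shows a client-side equivalent: sort by confidence and keep the top few. This is only an analogy in plain Java with a hypothetical `Label` class and invented scores; it says nothing about how the cloud service selects its results internally.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class TopResults {
    // Hypothetical stand-in for a label returned by the cloud detector.
    static final class Label {
        final String text;
        final float confidence;

        Label(String text, float confidence) {
            this.text = text;
            this.confidence = confidence;
        }
    }

    // Returns at most maxResults labels, highest confidence first.
    // The input list is left untouched.
    static List<Label> topN(List<Label> labels, int maxResults) {
        List<Label> sorted = new ArrayList<>(labels);
        sorted.sort((a, b) -> Float.compare(b.confidence, a.confidence));
        int count = Math.max(0, Math.min(maxResults, sorted.size()));
        return new ArrayList<>(sorted.subList(0, count));
    }

    public static void main(String[] args) {
        List<Label> labels = Arrays.asList(
                new Label("cup", 0.71f),
                new Label("coffee", 0.93f),
                new Label("latte", 0.88f));
        for (Label label : topN(labels, 2)) {
            System.out.println(label.text); // prints "coffee" then "latte"
        }
    }
}
```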

Here, I’m using the latest available model (LATEST_MODEL) and returning a maximum of five labels for each image:

FirebaseVisionCloudDetectorOptions options = new FirebaseVisionCloudDetectorOptions.Builder()

.setModelType(FirebaseVisionCloudDetectorOptions.LATEST_MODEL)

.setMaxResults(5)

.build();

Next, create a FirebaseVisionImage object from the Bitmap, which we’ll later pass to the FirebaseVisionCloudLabelDetector’s detectInImage method:

FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(mBitmap);

Then we need to get an instance of FirebaseVisionCloudLabelDetector:

FirebaseVisionCloudLabelDetector detector = FirebaseVision.getInstance().getVisionCloudLabelDetector(options);

Finally, we pass the image to the detectInImage method, and implement our onSuccess and onFailure listeners:

detector.detectInImage(image).addOnSuccessListener(new OnSuccessListener<List<FirebaseVisionCloudLabel>>() {

@Override

public void onSuccess(List<FirebaseVisionCloudLabel> labels) {

//Do something with the detected labels//

}

}).addOnFailureListener(new OnFailureListener() {

@Override

public void onFailure(@NonNull Exception e) {

//Task failed with an exception//

}

});

If the image labeling operation succeeds, a list of FirebaseVisionCloudLabel objects is passed to our app’s success listener. We can then retrieve each label, its confidence score, and its entity ID, and display them in our TextView:

@Override

public void onSuccess(List<FirebaseVisionCloudLabel> labels) {

MyHelper.dismissDialog();

for (FirebaseVisionCloudLabel label : labels) {

mTextView.append(label.getLabel() + ": " + label.getConfidence() + "\n\n");

mTextView.append(label.getEntityId() + "\n");

}

}

At this point, your MainActivity should look something like this:

import android.content.Intent;

import android.graphics.Bitmap;

import android.net.Uri;

import android.os.Bundle;

import android.support.annotation.NonNull;

import android.view.View;

import android.widget.ImageView;

import android.widget.TextView;

import com.google.android.gms.tasks.OnFailureListener;

import com.google.android.gms.tasks.OnSuccessListener;

import com.google.firebase.ml.vision.FirebaseVision;

import com.google.firebase.ml.vision.cloud.FirebaseVisionCloudDetectorOptions;

import com.google.firebase.ml.vision.cloud.label.FirebaseVisionCloudLabel;

import com.google.firebase.ml.vision.cloud.label.FirebaseVisionCloudLabelDetector;

import com.google.firebase.ml.vision.common.FirebaseVisionImage;

import com.google.firebase.ml.vision.label.FirebaseVisionLabel;

import com.google.firebase.ml.vision.label.FirebaseVisionLabelDetector;

import com.google.firebase.ml.vision.label.FirebaseVisionLabelDetectorOptions;

import java.util.List;

public class MainActivity extends BaseActivity implements View.OnClickListener {

private Bitmap mBitmap;

private ImageView mImageView;

private TextView mTextView;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

mTextView = findViewById(R.id.textView);

mImageView = findViewById(R.id.imageView);

findViewById(R.id.btn_device).setOnClickListener(this);

findViewById(R.id.btn_cloud).setOnClickListener(this);

}

@Override

public void onClick(View view) {

mTextView.setText(null);

switch (view.getId()) {

case R.id.btn_device:

if (mBitmap != null) {

//Configure the detector//

FirebaseVisionLabelDetectorOptions options = new FirebaseVisionLabelDetectorOptions.Builder()

//Set the confidence threshold//

.setConfidenceThreshold(0.7f)

.build();

//Create a FirebaseVisionImage object//

FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(mBitmap);

//Create an instance of FirebaseVisionLabelDetector//

FirebaseVisionLabelDetector detector = FirebaseVision.getInstance().getVisionLabelDetector(options);

//Register an OnSuccessListener//

detector.detectInImage(image).addOnSuccessListener(new OnSuccessListener<List<FirebaseVisionLabel>>() {

@Override

//Implement the onSuccess callback//

public void onSuccess(List<FirebaseVisionLabel> labels) {

for (FirebaseVisionLabel label : labels) {

//Display the label and confidence score in our TextView//

mTextView.append(label.getLabel() + "\n");

mTextView.append(label.getConfidence() + "\n\n");

}

}

//Register an OnFailureListener//

}).addOnFailureListener(new OnFailureListener() {

@Override

public void onFailure(@NonNull Exception e) {

mTextView.setText(e.getMessage());

}

});

}

break;

case R.id.btn_cloud:

if (mBitmap != null) {

MyHelper.showDialog(this);

FirebaseVisionCloudDetectorOptions options = new FirebaseVisionCloudDetectorOptions.Builder()

.setModelType(FirebaseVisionCloudDetectorOptions.LATEST_MODEL)

.setMaxResults(5)

.build();

FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(mBitmap);

FirebaseVisionCloudLabelDetector detector = FirebaseVision.getInstance().getVisionCloudLabelDetector(options);

detector.detectInImage(image).addOnSuccessListener(new OnSuccessListener<List<FirebaseVisionCloudLabel>>() {

@Override

public void onSuccess(List<FirebaseVisionCloudLabel> labels) {

MyHelper.dismissDialog();

for (FirebaseVisionCloudLabel label : labels) {

mTextView.append(label.getLabel() + ": " + label.getConfidence() + "\n\n");

mTextView.append(label.getEntityId() + "\n");

}

}

}).addOnFailureListener(new OnFailureListener() {

@Override

public void onFailure(@NonNull Exception e) {

MyHelper.dismissDialog();

mTextView.setText(e.getMessage());

}

});

}

break;

}

}

@Override

protected void onActivityResult(int requestCode, int resultCode, Intent data) {

super.onActivityResult(requestCode, resultCode, data);

if (resultCode == RESULT_OK) {

switch (requestCode) {

case RC_STORAGE_PERMS1:

checkStoragePermission(requestCode);

break;

case RC_SELECT_PICTURE:

Uri dataUri = data.getData();

String path = MyHelper.getPath(this, dataUri);

if (path == null) {

mBitmap = MyHelper.resizeImage(imageFile, this, dataUri, mImageView);

} else {

mBitmap = MyHelper.resizeImage(imageFile, path, mImageView);

}

if (mBitmap != null) {

mTextView.setText(null);

mImageView.setImageBitmap(mBitmap);

}

break;

}

}

}

}

Activating Google’s cloud-based APIs

ML Kit’s cloud-based APIs are all premium services, so you’ll need to upgrade your Firebase project to a Blaze plan before your cloud-based code actually returns any image labels.

Although you’ll need to enter your payment details and commit to a pay-as-you-go Blaze plan, at the time of writing you can upgrade, experiment with the ML Kit features within the free quota limit of 1,000 requests, and switch back to the free Spark plan without being charged. However, there’s no guarantee the terms and conditions won’t change at some point, so before upgrading your Firebase project, always read all the available information, particularly the AI & Machine Learning Products and Firebase pricing pages.

If you’ve scoured the fine print, here’s how to upgrade to Firebase Blaze:

- Head over to the Firebase Console.

- In the left-hand menu, find the section that displays your current pricing plan, and then click its accompanying “Upgrade” link.

- A popup should now guide you through the payment process. Make sure you read all the information carefully, and you’re happy with the terms and conditions before you upgrade.

You can now enable ML Kit’s cloud-based APIs:

- In the Firebase Console’s left-hand menu, select “ML Kit.”

- Push the “Enable Cloud-based APIs” slider into the “On” position.

- Read the subsequent popup, and if you’re happy to proceed then click “Enable.”

Testing your completed machine learning app

That’s it! Your app can now process images on-device and in the cloud. Here’s how to put this app to the test:

- Install the updated project on your Android device, or AVD.

- Make sure you have an active internet connection.

- Choose an image from your device’s Gallery.

- Give the “Cloud” button a tap.

Your app will now run this image against the cloud-based ML Kit model, and return a selection of labels and confidence scores.
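The listeners in this project append raw confidence floats to the TextView, which can be hard to scan. As an optional refinement, here is a rough sketch that sorts labels by confidence and formats each score as a percentage. It is plain Java, and the ScoredLabel class is a hypothetical stand-in for ML Kit’s label objects rather than anything from the Firebase SDK:

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;
import java.util.Locale;

// Hypothetical stand-in for a detected label and its confidence score.
final class ScoredLabel {
    final String text;
    final float confidence;

    ScoredLabel(String text, float confidence) {
        this.text = text;
        this.confidence = confidence;
    }
}

final class LabelFormatter {
    private LabelFormatter() {}

    // Builds one display string, highest confidence first, one label per line.
    static String format(List<ScoredLabel> labels) {
        // Copy the list so the caller's ordering is left untouched
        List<ScoredLabel> sorted = new ArrayList<>(labels);
        Collections.sort(sorted, new Comparator<ScoredLabel>() {
            @Override
            public int compare(ScoredLabel a, ScoredLabel b) {
                return Float.compare(b.confidence, a.confidence);
            }
        });

        StringBuilder builder = new StringBuilder();
        for (ScoredLabel label : sorted) {
            // Convert the 0..1 score to a percentage with one decimal place
            builder.append(String.format(Locale.US, "%s: %.1f%%\n",
                    label.text, label.confidence * 100f));
        }
        return builder.toString();
    }
}
```

You could then pass the resulting string to mTextView.setText() in a single call, instead of appending to the TextView once per label.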

You can download the completed ML Kit project from GitHub, although you will still need to connect the application to your own Firebase project.

Keep an eye on your spending

Since the cloud API is a pay-as-you-go service, you should monitor how your app uses it. The Google Cloud Platform has a dashboard where you can view the number of requests your application processes, so you don’t get hit by any unexpected bills!
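Alongside the dashboard, you may want a simple guard inside the app itself. The sketch below is one possible approach, not an official Firebase feature: a hypothetical CloudQuotaTracker class that counts cloud requests and refuses new ones once a limit you choose is reached. It only counts in memory, so a real app would need to persist the count and reset it for each billing period:

```java
// Hypothetical helper that caps how many cloud requests this app instance sends.
final class CloudQuotaTracker {
    private final int limit;
    private int used;

    CloudQuotaTracker(int limit) {
        if (limit < 0) {
            throw new IllegalArgumentException("limit must not be negative");
        }
        this.limit = limit;
    }

    // Records one request if quota remains; returns false once the limit is reached.
    synchronized boolean tryAcquire() {
        if (used >= limit) {
            return false;
        }
        used++;
        return true;
    }

    // Number of requests that can still be sent before the limit is hit.
    synchronized int remaining() {
        return limit - used;
    }
}
```

For example, you might create the tracker with a limit comfortably below the free quota, call tryAcquire() before each detectInImage call on the cloud detector, and fall back to the on-device detector whenever it returns false.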

You can also downgrade your project from Blaze back to the free Spark plan at any time:

- Head over to the Firebase Console.

- In the left-hand menu, find the “Blaze: Pay as you go” section and click its accompanying “Modify” link.

- Select the free Spark plan.

- Read the on-screen information. If you’re happy to proceed, type “Downgrade” into the text field and click the “Downgrade” button.

You should receive an email confirming that your project has been downgraded successfully.

Wrapping up

You’ve now built your own machine learning-powered application, capable of recognizing entities in an image using both on-device and in-the-cloud machine learning models.

Have you used any of the ML Kit APIs we’ve covered on this site?