Interested in how Google Tensor's photography smarts work? Google's got you.

- Google just posted a bunch of info related to the photography smarts of the Google Tensor chip.

- Some of this is new info that wasn’t explained during the launch of the Google Pixel 6 series.

As exciting as the Pixel 6 series is in its own right, one of the most exciting aspects of the phones is the chipset inside. Google Tensor is the company’s first attempt at designing its own silicon, and so far, it appears to be a success (check our Pixel 6 Pro review for more).

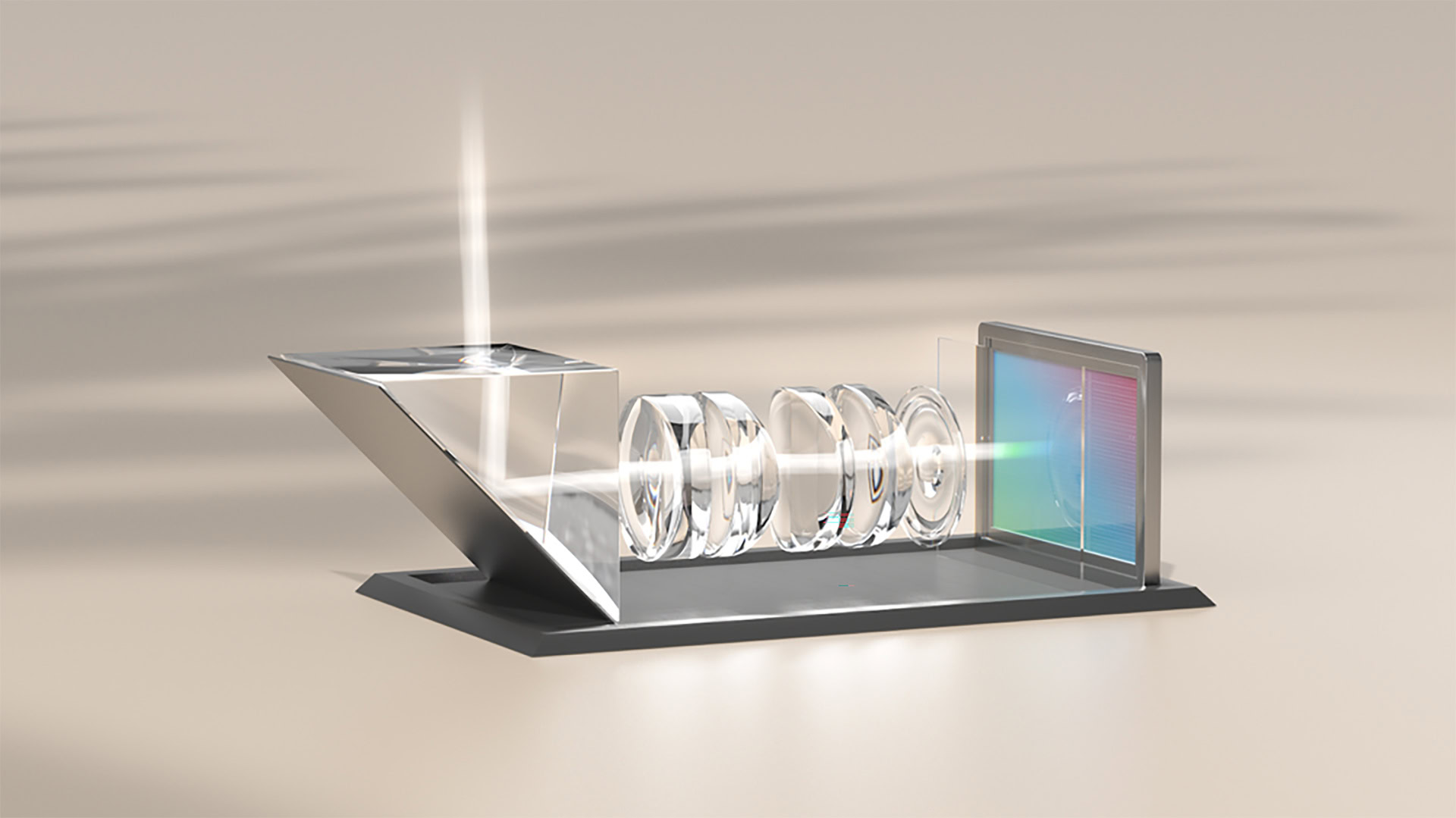

Now, Google has posted a blog entry that details just how smart Google Tensor is, at least when it comes to photography. The company gives some details on Tensor’s Image Signal Processor (ISP) and how its machine learning smarts make your photos and videos better.

For example, the Tensor ISP incorporates Live HDR+ support right into the chip, which allows for 4K/60fps HDR video not only within the native camera app but also in third-party apps. Tensor also makes Google’s Night Sight work better and faster in tandem with the new laser auto-focus system.

Google also discusses the hardware and software behind the Google Pixel 6 Pro’s telephoto lens as well as how Tensor is making computational photography more inclusive for people of color.

Some of this we already saw during the Pixel 6 launch, but some of it is brand new. If you’re excited about putting the photography chops of a Pixel 6 phone to the test, Google’s full blog post is a good read.