Google Lens offers a snapshot of the future for augmented reality and AI

There are a ton of exciting new technologies on the way in the near future. These include the likes of virtual reality, augmented reality, artificial intelligence, the Internet of Things (IoT), personal assistants and more. Google Lens is a part of that future. We’re taking tentative steps into it, and the next few years promise to be very exciting indeed for tech enthusiasts (that’s you!).

But when looking at these kinds of paradigm shifts, what’s more important is the technology that lies beneath them. The underlying breakthroughs are what drive the innovations that ultimately end up changing our lives. Keeping your ear to the ground and looking out for examples of new technology can therefore help you to better understand what might be around the corner.

This is certainly the case with the recently unveiled Google Lens, which provides us with some very big hints as to the future of Google and perhaps tech as a whole. Lens is powered by advanced computer vision, which enables such things as augmented reality, certain forms of artificial intelligence and even ‘inside-out motion tracking’ for virtual reality.

In fact, Google Lens encapsulates a number of recent technological advances and is, in many ways, the perfect example of Google’s new direction as an ‘AI first’ company. It may just provide a snapshot of the future.

What is Google Lens?

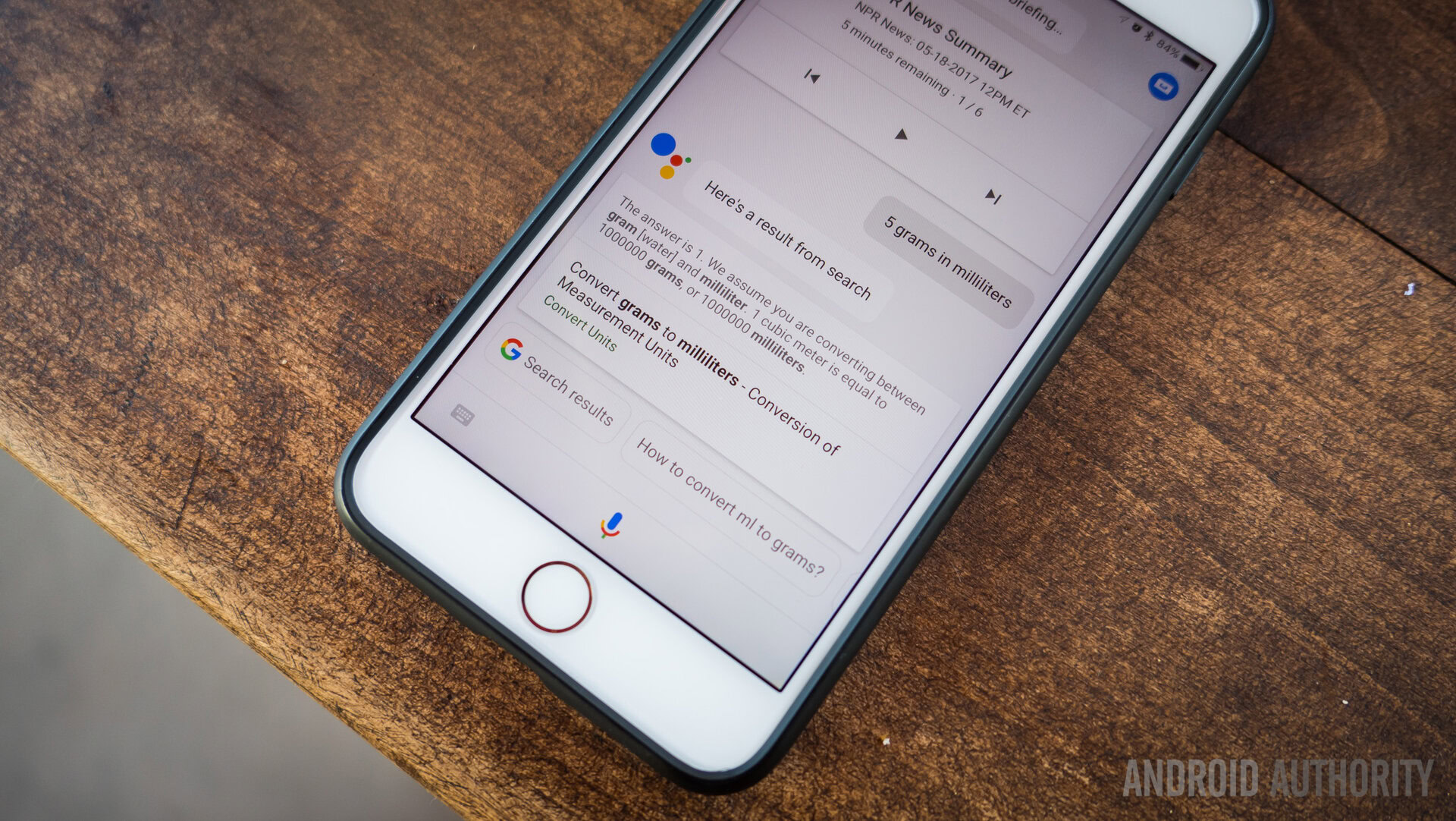

Google Lens is a tool that effectively brings search into the real world. The idea is simple: you point your phone at something around you that you want more information on and Lens will provide that information.

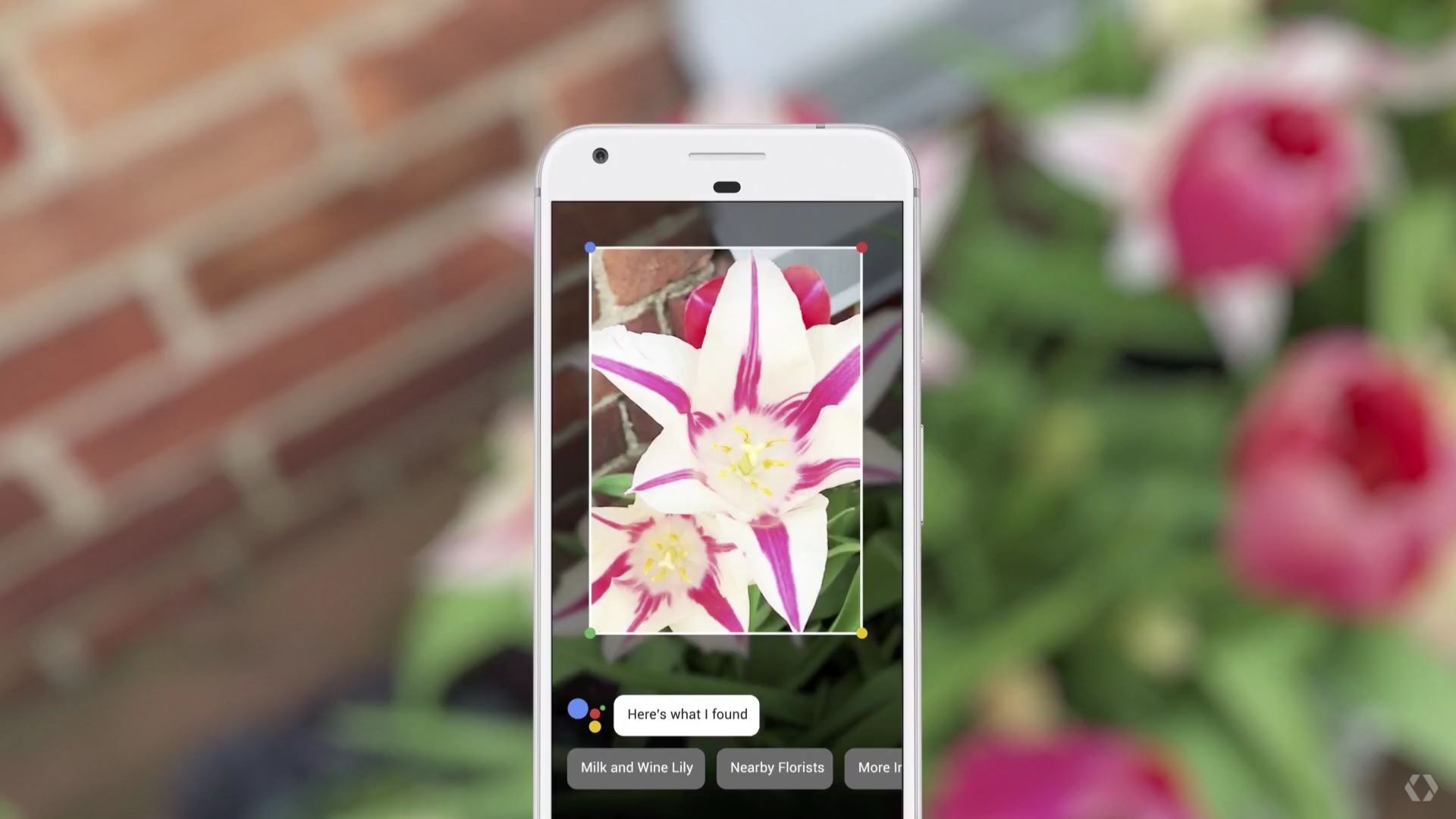

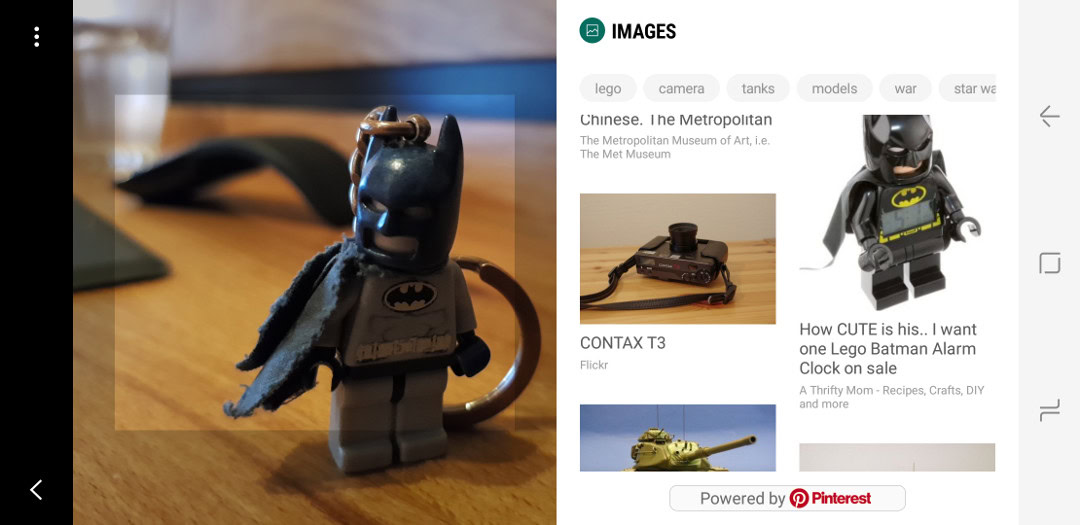

So yes, it sounds a lot like Google Goggles. It might also sound familiar to anyone who has tried out Bixby on their Galaxy S8. Only it’s, you know, much better than either of those things. In fact, it is supposedly so good that it can now identify the species of any flower you point it at. It can also do OCR tricks (Optical Character Recognition – i.e. reading) and a whole lot besides.

At the recent I/O 2017, Google stated that we were at an inflexion point with vision. In other words, it’s now more possible than ever before for a computer to look at a scene, dig out the details and understand what’s going on. Hence: Google Lens.

This improvement comes courtesy of machine learning, which allows companies like Google to acquire huge amounts of data and then create systems that utilize that data in useful ways. This is the same technology underlying voice assistants and even your recommendations on Spotify to a lesser extent.

More technologies that use computer vision

The same computer vision used by Google Lens will play a big role in many aspects of our future. Computer vision is surprisingly instrumental in VR, for instance. Not for your Samsung Gear VR, but for the HTC Vive and certainly for the new standalone headset for Daydream from HTC. These devices allow the user to actually walk around and explore the virtual worlds they are in. To do this, they need to be able to ‘see’ either the user or the world around the user, and then use that information to tell if the user is walking forward or leaning sideways.

Of course, this is also important for high-quality augmented reality. In order for a program like Pokémon Go to be able to place a character into the camera image in a realistic manner, it needs to understand where the ground is and how the user is moving. Pokémon Go’s AR is actually incredibly rudimentary, but the filters seen in Snapchat are surprisingly advanced.

This is something that we know Google is also working on, with its Project Tango. This is an initiative to bring advanced computer vision to handsets through a standardized selection of sensors that can provide depth perception and more. The Lenovo Phab 2 Pro and ASUS ZenFone AR are two Tango-ready phones that are already commercially available!

But Google started life as a search engine, and computer vision is really useful for the company in this regard. Currently, if you search Google Images for ‘Books’, you’ll be presented with a series of images from websites that use the word books. That is to say that Google isn’t really searching images at all; it is just searching for text and then showing you ‘relevant’ images. With advanced computer vision though, it will be able to search the actual content of the images.
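
To make that difference concrete, here is a toy sketch in Python. Everything in it is an assumption made up for illustration: the three image records, their `page_text`, and the `vision_labels` a computer vision model might hypothetically assign. It is not how Google Images works, just a picture of the two approaches side by side.

```python
# Toy image index. Every entry here is invented for illustration.
IMAGES = [
    {"file": "a.jpg", "page_text": "our favourite books of the year",
     "vision_labels": {"bookshelf", "books"}},
    {"file": "b.jpg", "page_text": "holiday photos from the lake",
     "vision_labels": {"books", "table"}},
    {"file": "c.jpg", "page_text": "cheap books and stationery",
     "vision_labels": {"storefront"}},
]


def search_by_page_text(query):
    """Match the query against the words surrounding each image."""
    return [img["file"] for img in IMAGES if query in img["page_text"].split()]


def search_by_content(query):
    """Match the query against labels a vision model might assign (hypothetical)."""
    return [img["file"] for img in IMAGES if query in img["vision_labels"]]


print(search_by_page_text("books"))  # images whose page mentions books
print(search_by_content("books"))    # images that actually show books
```

The text-based search returns the storefront photo because the page mentions books, and it misses the holiday photo that happens to have books in it. The content-based search does the opposite.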

So, Google Lens is really just an impressive example of a rapidly progressing technology that is, as we speak, opening a whole floodgate of new possibilities for apps and hardware. And with its huge bank of data, there is really no company better poised to make this happen than Google.

Google as an AI first company

But what does this all have to do with AI? Is it a coincidence that the same conference brought us news that the company would be using ‘neural nets to build better neural nets’? Or the quote from Sundar Pichai about a shift from ‘mobile first’ to ‘AI first’?

What does ‘AI first’ mean? Isn’t Google primarily still a search company?

Well yes, but in many ways, AI is the natural evolution of search. Traditionally, when you searched for something on Google, it would bring up responses by looking for exact matches in the content. If you type ‘fitness tips’ then that becomes a ‘keyword’ and Google would provide content with repetitious use of that word. You’ll even see it highlighted in the text.

But this isn’t really ideal. The ideal scenario would be for Google to actually understand what you’re saying and then provide results on that basis. That way, it could offer relevant additional information, it could suggest other useful things and become an even more indispensable part of your life (good for Google and for Google’s advertisers!).

And this is what Google has been very much pushing for with its algorithm updates (changes to the way it searches). Internet marketers and search engine optimizers now know that they need to use synonyms and relevant terms in order for Google to show their websites: it’s no longer good enough for them to just include the same word repeatedly. ‘Latent semantic indexing’ allows Google to understand context and gain a deeper knowledge of what is being said.
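
As a rough illustration of why exact keywords fall short, here is a minimal Python sketch. It is not latent semantic indexing, which learns relationships between terms statistically; it swaps in a much simpler hand-written table of related words (the `RELATED` table, the function names and the sample page are all hypothetical) just to show the gap between the two kinds of matching.

```python
import re

# Hand-written table of related terms (an assumption for this sketch).
# A real system would learn these relationships from data.
RELATED = {
    "fitness": {"fitness", "exercise", "workout", "training"},
    "tips": {"tips", "advice", "guide"},
}


def tokens(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def exact_match(query, page):
    """True only if every query word appears verbatim in the page."""
    return tokens(query) <= tokens(page)


def related_match(query, page):
    """True if each query word, or one of its related terms, appears in the page."""
    page_words = tokens(page)
    return all(RELATED.get(word, {word}) & page_words for word in tokens(query))


page = "A beginner's workout guide: exercise advice that actually works"
print(exact_match("fitness tips", page))    # False: neither word appears
print(related_match("fitness tips", page))  # True: related terms appear
```

The page never uses the words ‘fitness’ or ‘tips’, so strict keyword matching skips it, even though it is plainly what the searcher wanted.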

And this lends itself perfectly to other initiatives that the company has been pushing recently. It’s this natural language interpretation for instance that allows something like Google Assistant to exist.

When you ask a virtual assistant for information, you say:

“When was Sylvester Stallone born?”

You don’t say:

“Sylvester Stallone birth date”

We talk differently from how we write and this is where Google starts to work more like an AI. Other initiatives like ‘structured markup’ ask publishers to highlight key information in their content like ingredients in a recipe and dates of events. This makes life very easy for Google Assistant when you ask it ‘when is Sonic Mania coming out?’.
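
Here is a small sketch of what that kind of markup can look like, using the schema.org `Event` vocabulary in JSON-LD form and built in Python. The event name and date are made up, and `answer_when` is a hypothetical stand-in for whatever an assistant does internally; the point is only that a labeled field is far easier to read than free text.

```python
import json

# Hypothetical event page; the name and date are invented for illustration.
event_markup = {
    "@context": "https://schema.org",
    "@type": "Event",
    "name": "Example Game Launch Party",
    "startDate": "2030-01-15",
}

# A publisher would embed this JSON in the page inside a
# <script type="application/ld+json"> tag.
json_ld = json.dumps(event_markup, indent=2)


def answer_when(markup_json):
    """Read the date straight from the labeled field; no text parsing needed."""
    data = json.loads(markup_json)
    return f'{data["name"]} starts on {data["startDate"]}'


print(answer_when(json_ld))
```

A recipe page works the same way, with the schema.org `Recipe` type listing its ingredients under `recipeIngredient`.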

Google has been leaning on publishers and webmasters to create their content with this direction in mind (even if it hasn’t always been transparent about its motivations – internet marketers are a sensitive bunch) and in that way, it is actually helping to make the entire web more ‘AI’ friendly – ready for Google Assistant, Siri and Alexa to step in.

Now with advancements in computer vision, this advanced ‘AI search’ can further enhance Google’s ability to search the real world around you and to provide even more useful information and responses as a result. Imagine being able to say ‘Okay Google, what’s that?’.

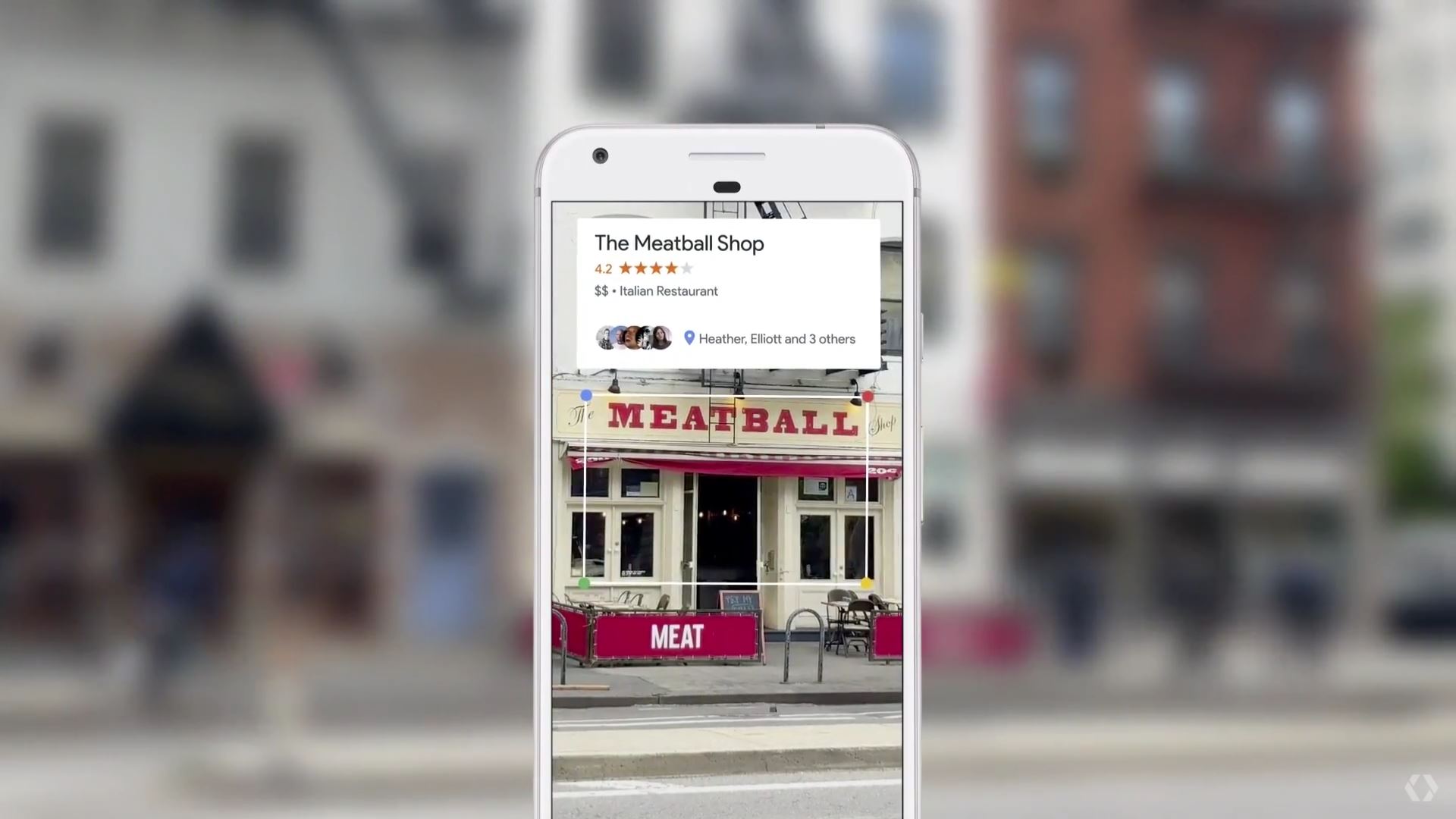

And imagine combining this with location awareness and depth perception. Imagine when you combine this with AR or VR. Google Lens can reportedly even show you reviews of a restaurant when you point your phone at it, which is as much an example of AR as it is AI. All these technologies are coming together in fantastically interesting ways and even starting to blur the line between the physical and digital worlds.

As Pichai put it:

“All of Google was built because we started understanding text and web pages. So the fact that computers can understand images and videos has profound implications for our core mission.”

Closing thoughts

Technology has been moving in this direction for a while. Bixby technically beat Google Lens to the punch, except it loses points for not working quite as advertised. No doubt many more companies will be getting involved as well.

But Google’s tech is a clear statement from the company: a commitment to AI, to computer vision and to machine learning. It is a clear indication of the direction the company will be taking in the coming years and likely the direction of technology in general.

The singularity, brought to you by Google!