Affiliate links on Android Authority may earn us a commission.

Top Android performance problems faced by app developers

From a traditional “software engineering” point of view, there are two aspects to optimization. The first is local optimization, where a particular part of a program’s functionality is improved: the implementation is refined and sped up. Such optimizations can include changes to the algorithms used and to the program’s internal data structures. The second type of optimization happens at a higher level, the level of design. If a program is badly designed, it will be hard to get good levels of performance or efficiency. Design-level problems are much harder (perhaps impossible) to fix late in the development lifecycle, so they should really be resolved during the design stage.

When it comes to developing Android apps, there are several key areas where app developers tend to trip up. Some are design-level issues and some are implementation-level issues; either way, they can drastically reduce the performance or efficiency of an app. Here is our list of the top 4 Android performance problems faced by app developers:

Battery

Most developers learnt their programming skills on computers connected to mains electricity. As a result, software engineering classes teach little about the energy costs of certain activities. A study performed by Purdue University showed that “most of the energy in smartphone apps is spent in I/O,” mainly network I/O. When writing for desktops or servers, the energy cost of I/O operations is rarely, if ever, considered. The same study also showed that 65% to 75% of the energy in free apps is spent in third-party advertisement modules.

The reason is that the radio parts of a smartphone (i.e. Wi-Fi or 3G/4G) use a lot of energy to transmit the signal. By default the radio is off (asleep). When a network I/O request occurs, the radio wakes up, handles the packets and then stays awake; it doesn’t go back to sleep immediately. Only after a keep-awake period with no other activity does it finally switch off again. Unfortunately, waking the radio isn’t “free”: it uses power.

As you can imagine, the worst-case scenario is when there is some network I/O, followed by a pause (just longer than the keep-awake period), then some more I/O, and so on. As a result, the radio uses power when it is switched on, power when it does the data transfer and power while it waits idle; then it goes to sleep, only to be woken again shortly afterwards to do more work.
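To make the worst case concrete, here is a toy Java model of the radio behavior described above. It is a sketch under simplifying assumptions: transfers are treated as instantaneous, the keep-awake ("tail") period is a single made-up constant rather than a real network value, and the class name `RadioModel` is hypothetical. It counts how many times the radio must wake up, and how long it stays awake, for a given list of request times.

```java
// Toy radio model with hypothetical timings: a request wakes the radio if it
// is asleep, and every request restarts a fixed keep-awake "tail" timer.
// Transfers are treated as instantaneous.
final class RadioModel {
    /** Number of sleep-to-awake transitions for ascending request times (ms). */
    static int wakeUps(long[] requestTimesMs, long tailMs) {
        int wakes = 0;
        long awakeUntil = Long.MIN_VALUE;
        for (long t : requestTimesMs) {
            if (t > awakeUntil) {
                wakes++;              // radio was asleep: pay the wake-up cost
            }
            awakeUntil = t + tailMs;  // stay awake for the tail after this request
        }
        return wakes;
    }

    /** Total time (ms) the radio spends awake for the same request pattern. */
    static long awakeMs(long[] requestTimesMs, long tailMs) {
        long total = 0;
        long start = 0;
        long awakeUntil = Long.MIN_VALUE;
        for (long t : requestTimesMs) {
            if (t > awakeUntil) {
                if (awakeUntil != Long.MIN_VALUE) {
                    total += awakeUntil - start; // close the previous awake period
                }
                start = t;                       // a new awake period begins
            }
            awakeUntil = t + tailMs;
        }
        if (awakeUntil != Long.MIN_VALUE) {
            total += awakeUntil - start;         // close the final awake period
        }
        return total;
    }
}
```

With a hypothetical 10-second tail, three requests spaced 11 seconds apart cause three wake-ups and 30 seconds of awake time, while the same three requests sent together cause one wake-up and 10 seconds awake.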

Rather than sending the data piecemeal, it is better to batch up these network requests and deal with them as a block.

There are three different types of network request that an app will make. The first is the “do now” stuff: something has happened (like the user manually refreshing a news feed) and the data is needed now. If it isn’t presented as soon as possible, the user will think that the app is broken. There is little that can be done to optimize “do now” requests.

The second type of network traffic is pulling down stuff from the cloud, e.g. an article has been updated or there is a new item for the feed. The third type is the opposite of the pull: the push, where your app wants to send some data up to the cloud. These two types of network traffic are perfect candidates for batch operations. Rather than sending the data piecemeal, which causes the radio to switch on and then stay idle, it is better to batch up these network requests and deal with them in a timely manner as a block. That way the radio is activated once, the network requests are made, the radio stays awake and then finally sleeps again without the worry that it will be woken again just after it has gone back to sleep. For more information on batching network requests, you should look into the GcmNetworkManager API.
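A minimal, framework-free sketch of the batching idea follows. It is not the GcmNetworkManager API: `RequestBatcher`, `defer`, `sendNow` and `flush` are hypothetical names, and `transmit` merely records batches in place of real networking. Deferrable pull/push requests wait in a queue until some scheduler calls `flush`, so several of them share a single radio wake-up, while “do now” requests still go out immediately.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical batcher: "do now" requests are sent at once; deferrable
// pull/push requests are queued and sent together as one block.
final class RequestBatcher {
    private final List<String> pending = new ArrayList<>();
    private final List<List<String>> sentBatches = new ArrayList<>();

    /** "Do now" request (e.g. a manual refresh): goes out immediately. */
    void sendNow(String request) {
        List<String> single = new ArrayList<>();
        single.add(request);
        transmit(single);
    }

    /** Deferrable pull/push request: queued, nothing is sent yet. */
    void defer(String request) {
        pending.add(request);
    }

    /** Called periodically by some scheduler: sends everything queued as one block. */
    void flush() {
        if (pending.isEmpty()) {
            return; // nothing queued, so don't wake the radio at all
        }
        transmit(new ArrayList<>(pending));
        pending.clear();
    }

    // Stand-in for real networking: each call represents one radio wake-up.
    private void transmit(List<String> batch) {
        sentBatches.add(batch);
    }

    int radioWakeUps() {
        return sentBatches.size();
    }

    List<List<String>> batches() {
        return sentBatches;
    }
}
```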

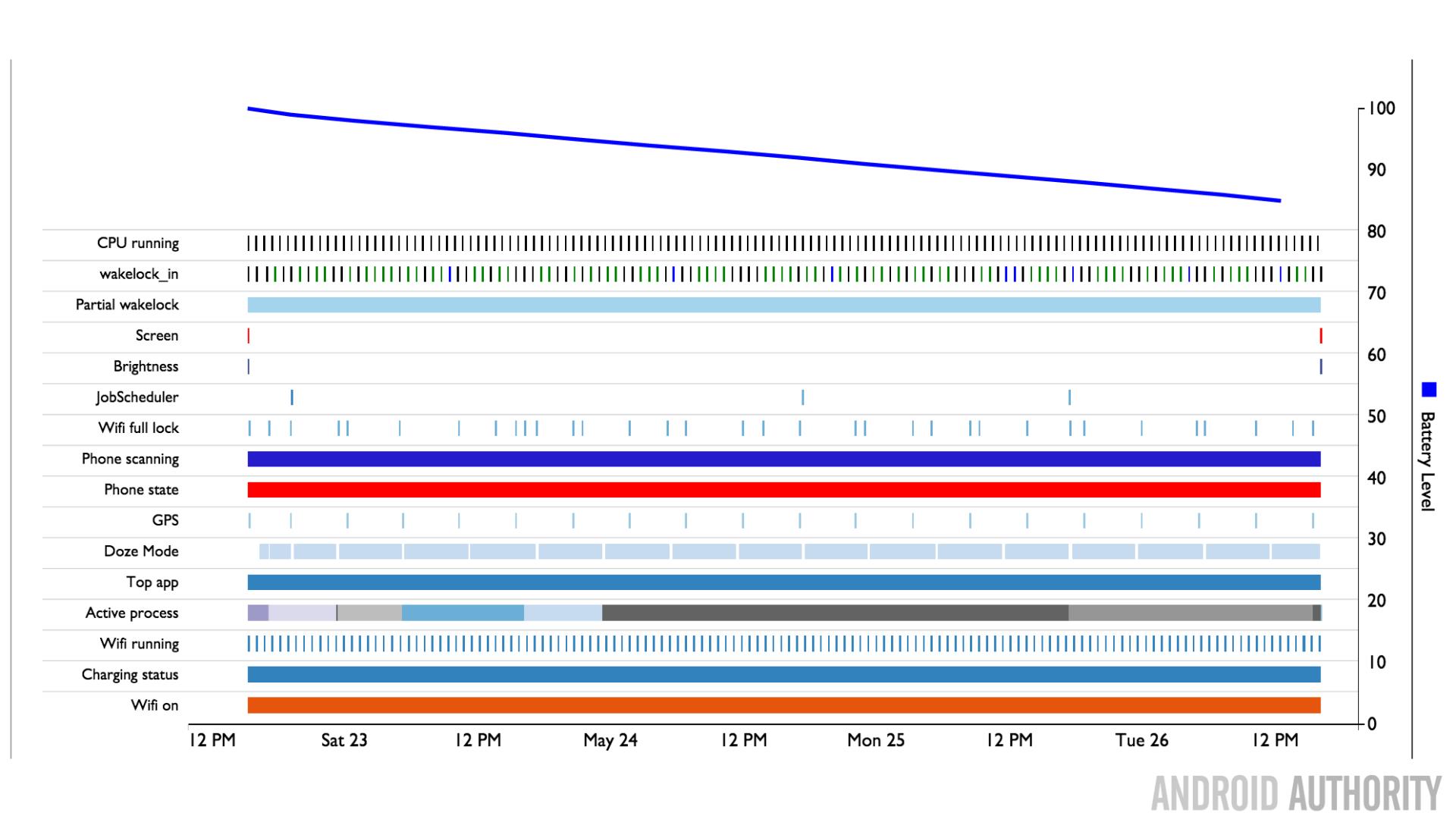

To help you diagnose any potential battery issues in your app, Google has a special tool called the Battery Historian. It records battery-related information and events on an Android device (Android 5.0 Lollipop and later: API level 21+) while the device is running on battery. It then allows you to visualize system and application level events on a timeline, along with various aggregated statistics since the device was last fully charged. Colt McAnlis has a convenient, but unofficial, Guide to Getting started with Battery Historian.

Memory

Depending on which programming language you are most comfortable with, C/C++ or Java, your attitude to memory management is going to be either “memory management, what is that?” or “malloc is my best friend and my worst enemy.” In C, allocating and freeing memory is a manual process, but in Java the task of freeing memory is handled automatically by the garbage collector (GC). This means that Android developers tend to forget about memory. They tend to be a gung-ho bunch who allocate memory all over the place and sleep safely at night thinking that the garbage collector will handle it all.

And to some extent they are right, but… running the garbage collector can have an unpredictable impact on your app’s performance. In fact, on versions of Android prior to 5.0 Lollipop, a garbage collection could pause all the other work in your app until it finished. If you are writing a game and want 60 fps, the app needs to render each frame in about 16 ms (1000 ms / 60 ≈ 16.7 ms). If you are too audacious with your memory allocations, you can inadvertently trigger a GC event every frame, or every few frames, and this will cause your game to drop frames.
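As a hedged illustration of keeping the per-frame path allocation-free, the hypothetical `ParticleSystem` below allocates its arrays once, up front, and only mutates them inside `update`, so steady-state frames create no garbage for the GC to collect. The class and its fields are invented for this sketch; the 60 fps budget constant is just 1000 / 60.

```java
import java.util.Arrays;

// Hypothetical game-loop helper: all memory is allocated in the constructor,
// and the per-frame update reuses it instead of allocating new arrays.
final class ParticleSystem {
    /** Time available per frame at 60 fps: 1000 / 60, roughly 16.67 ms. */
    static final double FRAME_BUDGET_MS = 1000.0 / 60.0;

    // Allocated once; reused on every frame.
    private final float[] positions;
    private final float[] velocities;

    ParticleSystem(int count, float velocity) {
        positions = new float[count];
        velocities = new float[count];
        Arrays.fill(velocities, velocity);
    }

    /** Per-frame update: mutates the existing arrays in place and allocates nothing. */
    void update(float dtSeconds) {
        // Avoid e.g. `float[] next = new float[positions.length];` here: a fresh
        // array every frame is exactly the kind of garbage that triggers GC.
        for (int i = 0; i < positions.length; i++) {
            positions[i] += velocities[i] * dtSeconds;
        }
    }

    float position(int i) {
        return positions[i];
    }
}
```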

For example, using bitmaps can trigger GC events. Even if an image file is compressed (say, as a JPEG) over the network or on disk, decoding it into memory requires memory for its full decompressed size. So a social media app will be constantly decoding and expanding images and then throwing them away. The first thing your app should do is re-use the memory already allocated to bitmaps. Rather than allocating new bitmaps and waiting for the GC to free the old ones, your app should use a bitmap cache. Google has a great article on Caching Bitmaps over on the Android developer site.
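The sketch below shows the core of a bitmap cache in plain Java, under stated assumptions: `byte[]` stands in for `Bitmap`, and the class name `ImageCache` is hypothetical. On Android itself, `android.util.LruCache` provides this behavior. The cache is bounded by bytes rather than by entry count, because a decoded image costs width * height * bytes-per-pixel no matter how small its JPEG was.

```java
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.Map;

// Plain-Java sketch of a byte-bounded LRU cache for decoded images.
// byte[] stands in for a decoded Bitmap's pixel memory.
final class ImageCache {
    private final long maxBytes;
    private long currentBytes = 0;
    // accessOrder = true: iteration runs from least- to most-recently used.
    private final LinkedHashMap<String, byte[]> entries = new LinkedHashMap<>(16, 0.75f, true);

    ImageCache(long maxBytes) {
        this.maxBytes = maxBytes;
    }

    /** Memory a decoded image needs, independent of its compressed file size. */
    static long decodedBytes(int width, int height, int bytesPerPixel) {
        return (long) width * height * bytesPerPixel;
    }

    byte[] get(String key) {
        return entries.get(key); // also marks the entry as recently used
    }

    void put(String key, byte[] pixels) {
        byte[] previous = entries.put(key, pixels);
        if (previous != null) {
            currentBytes -= previous.length;
        }
        currentBytes += pixels.length;
        // Evict least-recently-used images until we are back within budget.
        Iterator<Map.Entry<String, byte[]>> it = entries.entrySet().iterator();
        while (currentBytes > maxBytes && it.hasNext()) {
            Map.Entry<String, byte[]> eldest = it.next();
            currentBytes -= eldest.getValue().length;
            it.remove();
        }
    }

    int size() {
        return entries.size();
    }

    long bytes() {
        return currentBytes;
    }
}
```

For example, a 1024 x 768 image decoded at 4 bytes per pixel occupies 3,145,728 bytes, which is why the budget is expressed in bytes.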

Also, to halve the memory footprint of each bitmap compared with the default ARGB_8888 format (4 bytes per pixel), you should consider using the RGB 565 format. Each pixel is stored in 2 bytes and only the RGB channels are encoded (there is no alpha channel): red is stored with 5 bits of precision, green with 6 bits and blue with 5 bits. This is especially useful for thumbnails.
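To show where the 2-byte figure comes from, here is a small Java sketch that packs 8-bit red, green and blue values into one 16-bit RGB 565 value by keeping the top 5, 6 and 5 bits respectively. The class name `Rgb565` is hypothetical; on Android you would normally ask the decoder for this format by setting `BitmapFactory.Options.inPreferredConfig = Bitmap.Config.RGB_565` rather than packing pixels yourself.

```java
// Illustrative RGB 565 packing: 5 bits red, 6 bits green, 5 bits blue = 16 bits.
// Inputs are assumed to be 0-255; the discarded low bits are lost for good.
final class Rgb565 {
    /** Packs 8-bit channels into 16 bits laid out as RRRRRGGG GGGBBBBB. */
    static int pack(int r, int g, int b) {
        return ((r >> 3) << 11)   // keep top 5 bits of red
             | ((g >> 2) << 5)    // keep top 6 bits of green
             | (b >> 3);          // keep top 5 bits of blue
    }

    /** Bytes for an uncompressed image at 2 (RGB 565) or 4 (ARGB 8888) bytes per pixel. */
    static long bytesFor(int width, int height, boolean rgb565) {
        return (long) width * height * (rgb565 ? 2 : 4);
    }
}
```

A 1024 x 768 image needs 3,145,728 bytes at 4 bytes per pixel but 1,572,864 bytes in RGB 565. The trade-off is reduced color precision and no transparency.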

Data Serialization

Data serialization seems to be everywhere nowadays. Passing data to and from the cloud, storing user preferences on disk and passing data from one process to another all seem to be done via data serialization. Therefore, the serialization format you use, and the encoder/decoder that handles it, will affect both the performance of your app and the amount of memory it uses.

The problem with the “standard” ways of data serialization is that they aren’t particularly efficient. For example, JSON is a great format for humans: it is easy enough to read, it is nicely formatted and you can even edit it by hand. However, most JSON is never read by humans; it is produced and consumed by computers. And all that nice formatting, all the white space, the commas and the quotation marks make it inefficient and bloated. If you aren’t convinced, check out Colt McAnlis’ video on why these human-readable formats are bad for your app.
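A quick way to see the overhead is to encode the same record both ways. In this Java sketch the record and its field names (`id`, `temperature`, `active`) are hypothetical. The binary version uses `java.io.DataOutputStream` and relies on reader and writer agreeing on the field order in advance, which is exactly the schema knowledge that JSON spells out in every message.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Encodes one hypothetical record as JSON text and as raw binary to compare sizes.
final class FormatSizes {
    /** Human-readable: every message repeats field names, quotes and punctuation. */
    static byte[] asJson(int id, double temperature, boolean active) {
        String json = "{\"id\":" + id
                + ",\"temperature\":" + temperature
                + ",\"active\":" + active + "}";
        return json.getBytes(StandardCharsets.UTF_8);
    }

    /** Binary: just the values, in an order both sides agree on in advance. */
    static byte[] asBinary(int id, double temperature, boolean active) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeInt(id);              // 4 bytes
            out.writeDouble(temperature);  // 8 bytes
            out.writeBoolean(active);      // 1 byte
        } catch (IOException e) {
            throw new UncheckedIOException(e); // not expected for an in-memory stream
        }
        return bytes.toByteArray();
    }
}
```

For `(42, 21.5, true)` the JSON text is 42 bytes, while the binary encoding is 13 bytes.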

Many Android developers probably just make their classes implement Serializable in the hope of getting serialization for free. However, in terms of performance this is actually quite a bad approach. A better approach is to use a binary serialization format. Two of the best binary serialization libraries (and their respective formats) are Nano Proto Buffers and FlatBuffers.
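To see one visible cost of the `Serializable` shortcut, the sketch below writes a hypothetical record with `java.io.ObjectOutputStream`. Besides the 13 bytes of field values, Java serialization writes a stream header and a class descriptor (class name, `serialVersionUID`, field names and types), so the output is several times larger than the data itself. The reflection it uses at runtime is a further cost that this size check does not measure.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

final class SerializableCost {
    /** Hypothetical record using the "free" Serializable route. */
    static final class Reading implements Serializable {
        private static final long serialVersionUID = 1L;
        final int id;
        final double temperature;
        final boolean active;

        Reading(int id, double temperature, boolean active) {
            this.id = id;
            this.temperature = temperature;
            this.active = active;
        }
    }

    /** Bytes produced by Java serialization, including header and class descriptor. */
    static int serializedSize(Serializable value) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.size();
    }
}
```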

Nano Proto Buffers is a special slimline version of Google’s Protocol Buffers designed specifically for resource-restricted systems, like Android. It is resource-friendly in terms of both code size and runtime overhead.
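Nano Proto Buffers produce the standard Protocol Buffers wire format, and part of what makes that format compact is the base-128 varint used for integers: small numbers take fewer bytes. The Java sketch below implements just that encoding as an illustration. `Varint` is a hypothetical name, and this is not the nano library’s generated API.

```java
import java.io.ByteArrayOutputStream;

// Base-128 varint as used by the Protocol Buffers wire format: 7 payload bits
// per byte, least-significant group first, high bit set on all but the last byte.
final class Varint {
    static byte[] encode(long value) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        while ((value & ~0x7FL) != 0) {
            out.write((int) ((value & 0x7F) | 0x80)); // more bytes follow
            value >>>= 7;                              // unsigned shift
        }
        out.write((int) value);                        // final byte, high bit clear
        return out.toByteArray();
    }

    static long decode(byte[] bytes) {
        long result = 0;
        int shift = 0;
        for (byte b : bytes) {
            result |= (long) (b & 0x7F) << shift;
            if ((b & 0x80) == 0) {
                break; // high bit clear: this was the last byte
            }
            shift += 7;
        }
        return result;
    }
}
```

For example, 1 encodes to the single byte 0x01 and 300 to the two bytes 0xAC 0x02.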

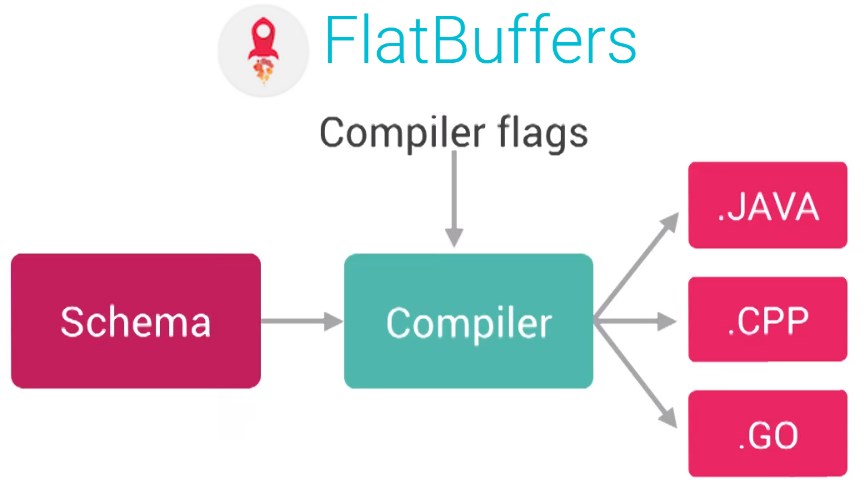

FlatBuffers is an efficient cross-platform serialization library for C++, Java, C#, Go, Python and JavaScript. It was originally created at Google for game development and other performance-critical applications. The key thing about FlatBuffers is that it represents hierarchical data in a flat binary buffer in such a way that it can still be accessed directly without parsing/unpacking. As well as the included documentation, there are lots of other online resources, including this video: Game On! – Flatbuffers and this article: FlatBuffers in Android – An introduction.
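The following Java sketch illustrates the “access without parsing” idea using `java.nio.ByteBuffer`. It is deliberately simplified and is not the FlatBuffers format or API: real FlatBuffers use vtables and offsets to support optional fields and schema evolution, whereas the hypothetical `FlatReading` assumes a fixed layout of an `int`, a `double` and a one-byte boolean at known offsets. Each accessor reads straight from the buffer, so nothing is unpacked into objects until a field is actually requested.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Simplified zero-copy accessor over a fixed, hypothetical layout:
// [int id @0][double temperature @4][byte active @12]
final class FlatReading {
    private static final int ID_OFFSET = 0;
    private static final int TEMP_OFFSET = 4;
    private static final int ACTIVE_OFFSET = 12;
    static final int SIZE = 13;

    private final ByteBuffer buf;

    FlatReading(ByteBuffer buf) {
        // duplicate() shares the bytes (no copy); little-endian, as FlatBuffers also uses.
        this.buf = buf.duplicate().order(ByteOrder.LITTLE_ENDIAN);
    }

    // Each accessor reads directly at a known offset; nothing is parsed up front.
    int id() {
        return buf.getInt(ID_OFFSET);
    }

    double temperature() {
        return buf.getDouble(TEMP_OFFSET);
    }

    boolean active() {
        return buf.get(ACTIVE_OFFSET) != 0;
    }

    /** Writer side: lays the values out at their fixed offsets. */
    static ByteBuffer write(int id, double temperature, boolean active) {
        ByteBuffer b = ByteBuffer.allocate(SIZE).order(ByteOrder.LITTLE_ENDIAN);
        b.putInt(ID_OFFSET, id);
        b.putDouble(TEMP_OFFSET, temperature);
        b.put(ACTIVE_OFFSET, (byte) (active ? 1 : 0));
        return b;
    }
}
```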

Threading

Threading is important for getting great responsiveness from your app, especially in the era of multi-core processors. However, it is very easy to get threading wrong. Complex threading solutions require lots of synchronization, which in turn implies the use of locks (mutexes, semaphores, etc.), and the delays introduced by one thread waiting on another can actually slow your app down.

By default, an Android app runs its code on a single main thread, including any UI interaction and any drawing that you need to do for the next frame to be displayed. Going back to the 16 ms rule, the main thread has to do all the drawing plus anything else you want to achieve within that budget. Sticking to one thread is fine for simple apps, but once things start to get a little more sophisticated, it is time to use threading. If the main thread is busy loading a bitmap, the UI is going to freeze.

Things that can be done in a separate thread include (but aren’t limited to) bitmap decoding, network requests, database access and file I/O. Once you move these types of operation onto another thread, the main thread is free to handle the drawing without becoming blocked by synchronous operations.
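Here is a minimal plain-Java sketch of moving slow work off the calling thread with `CompletableFuture.supplyAsync` and a background `Executor`. The `decodeAsync` method and its fake pixel work are hypothetical placeholders for real bitmap decoding or file I/O. In an Android app you would then hand the result back to the UI thread, for example with `new Handler(Looper.getMainLooper()).post(...)`, because views may only be touched from the main thread.

```java
import java.util.Arrays;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executor;

final class Offload {
    /** Runs a (fake) decode on the background executor; the caller is not blocked. */
    static CompletableFuture<int[]> decodeAsync(Executor background, int width, int height) {
        return CompletableFuture.supplyAsync(() -> {
            // Placeholder for expensive work such as bitmap decoding or file I/O.
            int[] pixels = new int[width * height];
            Arrays.fill(pixels, 0xFF000000); // opaque black
            return pixels;
        }, background);
    }
}
```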


For simple threading, many Android developers will be familiar with AsyncTask. It is a class that allows an app to perform background operations and publish results on the UI thread without the developer having to manipulate threads and/or handlers. Great… But here is the thing: by default, all AsyncTask jobs are executed on the same single thread. From Android 1.6 until Android 3.0, Google actually implemented AsyncTask with a pool of threads, which allowed multiple tasks to operate in parallel. However, this seemed to cause too many problems for developers, so starting with Android 3.0 Google changed it back to a single thread “to avoid common application errors caused by parallel execution.”

What this means is that if you issue two or three AsyncTask jobs simultaneously, they will in fact execute serially. The first AsyncTask will be executed while the second and third jobs wait. When the first task is done, the second will start, and so on.
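The serial behavior is easy to reproduce outside Android. In this sketch, a single-thread `ExecutorService` stands in for AsyncTask’s default serial executor (an assumption for illustration; it is not AsyncTask itself), and the hypothetical helper `peakConcurrency` records the largest number of jobs ever running at the same moment.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.atomic.AtomicInteger;

final class SerialVsPool {
    /** Runs `tasks` short jobs and reports the most that were ever running at once. */
    static int peakConcurrency(ExecutorService executor, int tasks) {
        AtomicInteger running = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(tasks);
        for (int i = 0; i < tasks; i++) {
            executor.execute(() -> {
                peak.accumulateAndGet(running.incrementAndGet(), Math::max);
                try {
                    Thread.sleep(100); // simulate a slow background job
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    running.decrementAndGet();
                    done.countDown();
                }
            });
        }
        try {
            done.await(); // wait for every job to finish
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        executor.shutdown();
        return peak.get();
    }
}
```

With `Executors.newSingleThreadExecutor()` the peak is always 1, because the jobs run one after another; with `Executors.newFixedThreadPool(3)` the three jobs normally overlap.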

The solution is to use a pool of worker threads plus some named threads that do specific tasks. If your app has those two, it likely won’t need any other type of threading. If you need help setting up your worker threads, Google has some great Processes and Threads documentation.
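A hedged sketch of that arrangement in plain Java: one general-purpose worker pool plus one dedicated, named thread for a specific job. The names `AppThreads`, `WORKERS` and `DB_WRITER`, and the choice of a database writer as the specific task, are hypothetical. Naming threads through a `ThreadFactory` makes them easy to identify in debuggers and profilers; on Android, `HandlerThread` is another way to get a named thread with its own message loop.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.atomic.AtomicInteger;

final class AppThreads {
    /** Creates threads named prefix-1, prefix-2, ... */
    static ThreadFactory named(String prefix) {
        AtomicInteger count = new AtomicInteger(1);
        return runnable -> {
            Thread t = new Thread(runnable, prefix + "-" + count.getAndIncrement());
            t.setDaemon(true); // demo only: don't keep the JVM alive
            return t;
        };
    }

    // General-purpose pool for short background jobs, sized to the CPU count.
    static final ExecutorService WORKERS = Executors.newFixedThreadPool(
            Math.max(2, Runtime.getRuntime().availableProcessors()), named("worker"));

    // One dedicated, named thread for a specific job, e.g. serialized database writes.
    static final ExecutorService DB_WRITER = Executors.newSingleThreadExecutor(named("db-writer"));
}
```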

Wrap-up

There are of course other performance pitfalls for Android app developers to avoid; however, getting these four right will help ensure that your app performs well and doesn’t use too many system resources. If you want more tips on Android performance, I can recommend Android Performance Patterns, a collection of videos focused entirely on helping developers write faster, more efficient Android apps.