Affiliate links on Android Authority may earn us a commission.

Everything you need to know about ARM’s DynamIQ

ARM unveiled its new DynamIQ technology back in March, but with the announcement of the company’s new Cortex-A75 and Cortex-A55 CPU cores, we now have a much clearer picture of the capabilities offered by ARM’s next-generation multi-core SoC solution.

Starting with the basics, DynamIQ is a new take on multi-core processing for ARM’s CPU cores. Previously, SoC designers using ARM’s big.LITTLE technology had to use multiple core clusters to mix CPU core micro-architectures, and these designs could suffer a slight performance penalty when moving data between clusters across the Cache Coherent Interconnect (CCI). In other words, a big.LITTLE CPU is built from a number of clusters, typically two, with up to four cores in each, and every core within a cluster must be the same type. So an octa-core design might pair 4x Cortex-A73 in the first cluster with 4x Cortex-A53 in the second, while a hexa-core design might pair 2x Cortex-A72 with 4x Cortex-A53.

Multi-core redefined

DynamIQ changes this substantially, allowing Cortex-A75 and Cortex-A55 CPU cores to be mixed and matched, with up to eight cores in total in a single cluster. So rather than building a typical octa-core design from two clusters, DynamIQ can achieve this with one. This brings a number of benefits, both for performance and for the cost effectiveness of certain designs.
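As a rough illustration of the difference, the Python sketch below encodes only the constraints described here: a DynamIQ cluster holds up to eight cores drawn from Cortex-A75 and Cortex-A55 in any mix, while a classic big.LITTLE cluster holds up to four cores of a single type. The function names and core labels are hypothetical, and real SoC design rules are far more involved.

```python
# Hypothetical model: the only rules encoded are those described in the
# article (up to eight mixed A75/A55 cores per DynamIQ cluster, versus up to
# four identical cores per classic big.LITTLE cluster).
DYNAMIQ_CORES = {"Cortex-A75", "Cortex-A55"}
MAX_DYNAMIQ_CORES = 8
MAX_CLASSIC_CORES = 4


def fits_single_cluster(cores: list[str]) -> bool:
    """Return True if this core list could form one DynamIQ cluster."""
    if not cores or len(cores) > MAX_DYNAMIQ_CORES:
        return False
    # Mixing core types is allowed, but every core must be DynamIQ-capable.
    return all(core in DYNAMIQ_CORES for core in cores)


def fits_classic_cluster(cores: list[str]) -> bool:
    """Classic big.LITTLE cluster: up to four cores, all of one type."""
    return 0 < len(cores) <= MAX_CLASSIC_CORES and len(set(cores)) == 1


octa = ["Cortex-A75"] * 2 + ["Cortex-A55"] * 6
print(fits_single_cluster(octa))   # True: one DynamIQ cluster is enough
print(fits_classic_cluster(octa))  # False: the old way needs two clusters
```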

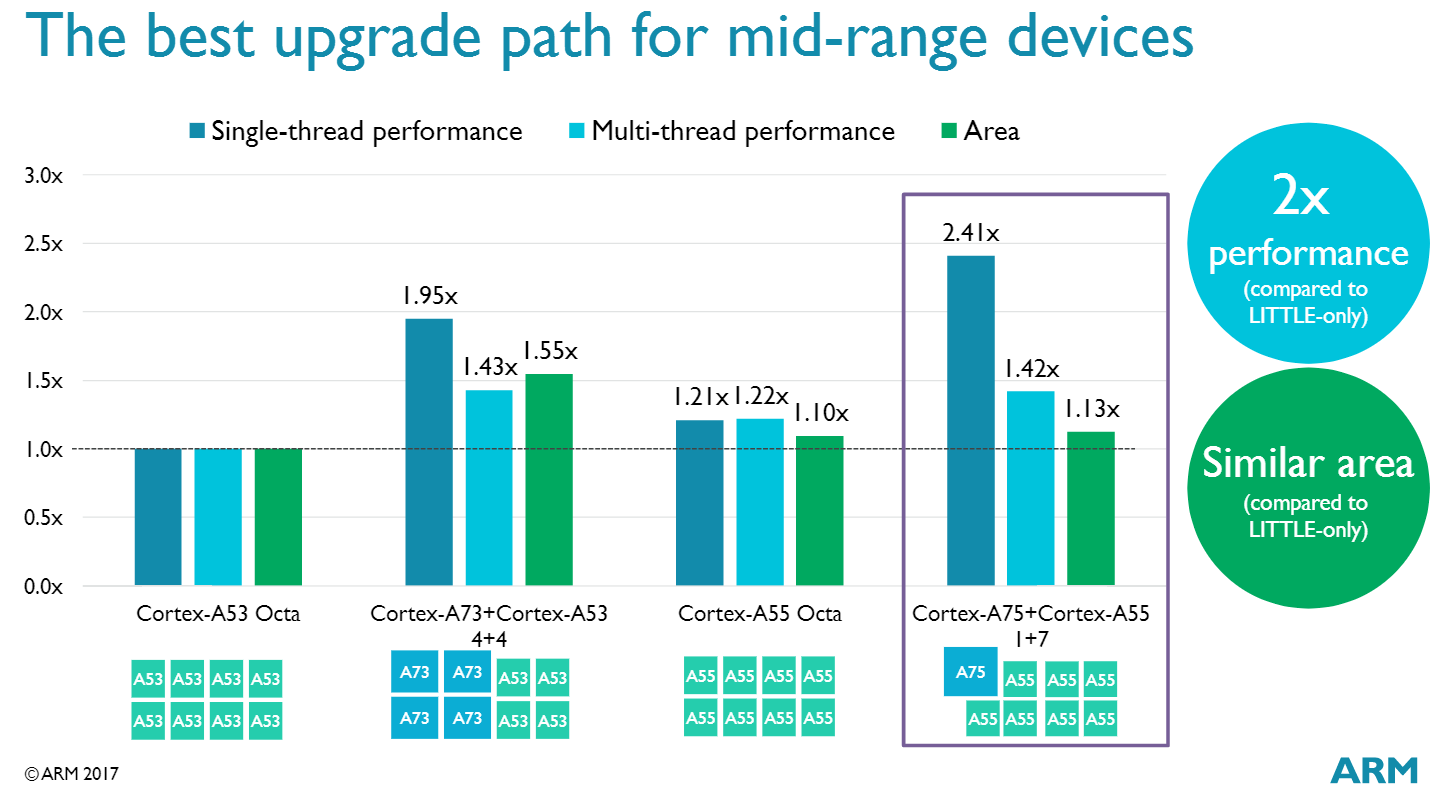

ARM points out that the cost of adding a big core, the Cortex-A75, to a DynamIQ arrangement is relatively low, especially compared with the old method of having to implement a second cluster. Even a single core with strong single-threaded performance can have a huge impact on the user experience, speeding up loading times and offering up to 2x extra performance in occasional heavy-duty situations compared with existing A53-only multi-core designs. DynamIQ could therefore allow low-end and mid-range chips to implement more flexible and powerful CPU designs more cost effectively. We could end up seeing 1+3, 1+4, 1+6, or 2+6 DynamIQ CPU designs that offer better single-threaded performance than today’s low- and mid-tier SoCs.
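The layouts listed above are a small subset of what a single eight-core cluster permits. This hypothetical Python helper simply enumerates every split with at least one big and one LITTLE core; it is plain arithmetic under that assumption and says nothing about which layouts vendors will actually ship.

```python
# Enumerate every big+LITTLE split that fits in one eight-core DynamIQ
# cluster, requiring at least one core of each type (an assumption made
# for illustration). Pure combinatorics, not a product roadmap.
MAX_CORES = 8


def big_little_layouts(max_cores: int = MAX_CORES) -> list[tuple[int, int]]:
    layouts = []
    for big in range(1, max_cores):
        # Leave room for the big cores already placed.
        for little in range(1, max_cores - big + 1):
            layouts.append((big, little))
    return layouts


layouts = big_little_layouts()
print(len(layouts))  # 28
article_examples = [(1, 3), (1, 4), (1, 6), (2, 6)]
print(all(example in layouts for example in article_examples))  # True
```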

It’s important to note that DynamIQ still functions as a cluster that is connected to the SoC’s interconnect. This means that a DynamIQ cluster can be paired with other DynamIQ clusters in higher-end systems, or even with the more familiar quad-core clusters that we see in today’s designs. However, the move to this technology has also required some major changes on the CPU side. DynamIQ cores use the ARMv8.2 architecture and DynamIQ Shared Unit hardware, which is currently only supported by the new Cortex-A75 and Cortex-A55. Furthermore, an entire SoC must use cores that understand exactly the same instruction set, so using DynamIQ requires ARMv8.2-compatible cores across the whole system. DynamIQ therefore cannot be paired with current Cortex-A73, A72, A57, or A53 cores, even if they sit in a separate cluster.
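The instruction-set rule can be expressed as a simple system-wide check. In this sketch, `CORE_ISA` and `soc_is_consistent` are hypothetical names; the table maps each core to the architecture version it implements (ARMv8.2 for the A75 and A55, the original ARMv8.0 for the older cores), and an SoC passes only if every core in every cluster reports the same version.

```python
# Hypothetical model of the rule above: every core in an SoC must implement the
# same architecture version, so ARMv8.2 DynamIQ cores cannot share a chip with
# ARMv8.0 cores, even when the older cores sit in a separate cluster.
CORE_ISA = {
    "Cortex-A75": "ARMv8.2",
    "Cortex-A55": "ARMv8.2",
    "Cortex-A73": "ARMv8.0",
    "Cortex-A72": "ARMv8.0",
    "Cortex-A57": "ARMv8.0",
    "Cortex-A53": "ARMv8.0",
}


def soc_is_consistent(clusters: list[list[str]]) -> bool:
    """True if all cores across all clusters implement one ISA version.

    Raises KeyError for a core that is missing from CORE_ISA.
    """
    versions = {CORE_ISA[core] for cluster in clusters for core in cluster}
    return len(versions) <= 1


two_dynamiq = [["Cortex-A75"] * 2 + ["Cortex-A55"] * 6, ["Cortex-A55"] * 4]
with_a53 = [["Cortex-A75"] * 2 + ["Cortex-A55"] * 2, ["Cortex-A53"] * 4]
print(soc_is_consistent(two_dynamiq))  # True
print(soc_is_consistent(with_a53))     # False
```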


This has some very interesting implications for ARM’s licensees, as it presents a tougher choice between an architectural license and ARM’s latest “Built on ARM Cortex Technology” option. An architectural licensee does not receive CPU design resources from ARM, only the right to design a CPU that is compatible with ARM’s instruction set. It therefore gets no access to DynamIQ or to the essential DSU design inside the A75 and A55.

So a company like Samsung, which uses an architectural license for its M1 and M2 cores, may end up sticking with a more familiar dual-cluster design. However, I should point out that using an architectural license doesn’t prevent a licensee from creating its own solution that works in a similar way to DynamIQ. We will have to wait and see what companies actually announce, but this move seems to give custom CPU designs an extra feature to compete against.

Meanwhile, a company that uses a Built on ARM Cortex Technology license can tweak an A75 or A55 and put its own branding on the CPU core, while retaining the DSU and compatibility with DynamIQ. So the likes of Qualcomm could make use of DynamIQ while keeping its own branding on the core types. The implication is that we could see even greater differentiation in future heterogeneous SoC CPU designs, even if the core count is the same between chips.

Meet the DynamIQ Shared Unit

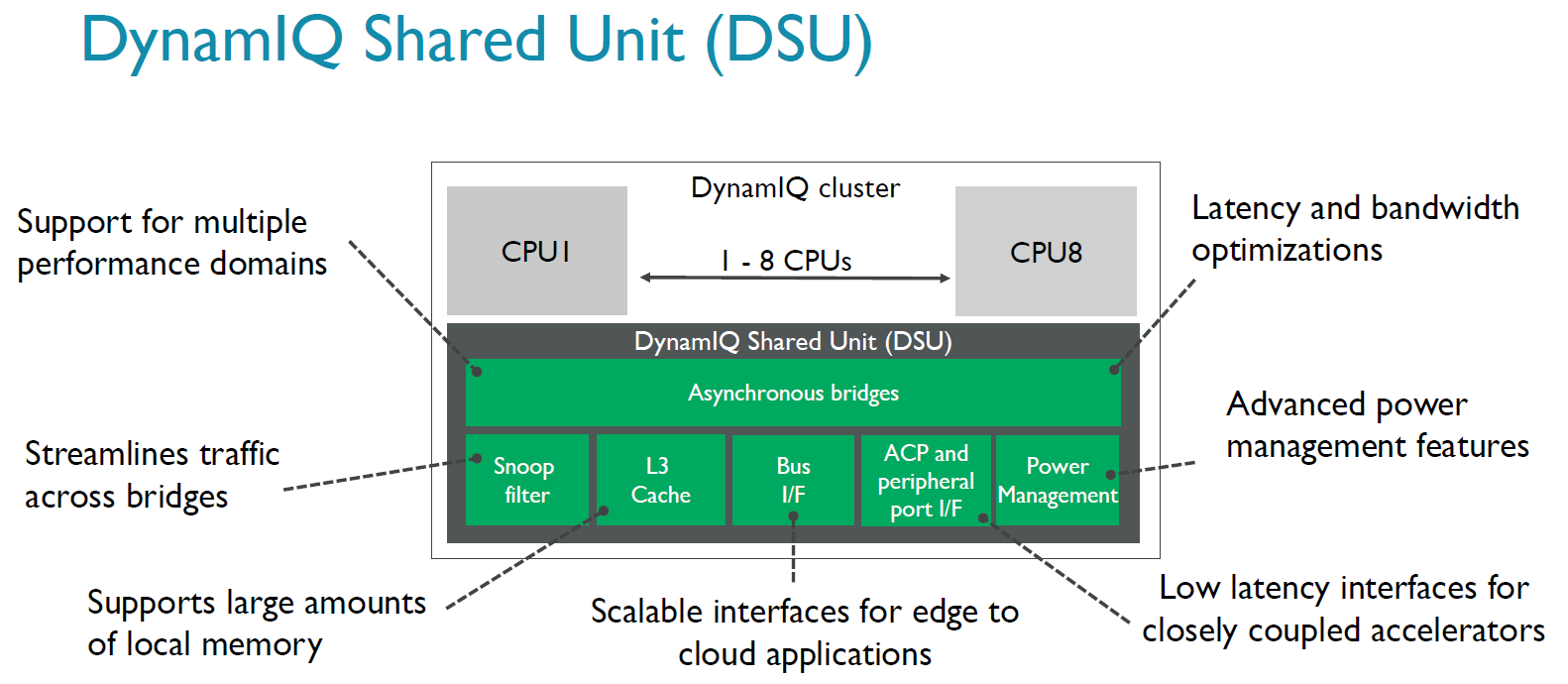

Going back to performance and the nuts and bolts of DynamIQ, we’ve mentioned one of the requirements of the new system: the DynamIQ Shared Unit (DSU). This unit is not optional; it’s integrated into the new CPU design and houses many of the key new features available with DynamIQ. The DSU contains new asynchronous bridges to each CPU, a snoop filter, the L3 cache, buses for peripherals and interfaces, and power management features.

First up, DynamIQ represents a first for ARM, as it allows designers to build ARM-based mobile SoCs with an L3 cache. This pool of memory is shared across all of the cores within the cluster, and the main benefit is shared memory across both big and LITTLE cores, which simplifies task sharing between cores and greatly reduces memory latency. LITTLE cores are particularly sensitive to memory latency, so this change can give Cortex-A55 performance a big boost in certain scenarios.

This L3 cache is 16-way set associative and is configurable from 0KB up to 4MB in size. The memory setup is designed to be highly exclusive, with very little data duplicated across the L1, L2, and L3 caches. The L3 cache can also be partitioned into a maximum of four groups. This can be used to avoid cache thrashing or to dedicate memory to different processes or to external accelerators connected to the Accelerator Coherency Port (ACP) or the interconnect. These partitions are dynamic and can be reapportioned at runtime via software.
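To put the cache geometry into numbers, the sketch below computes the set count for a given L3 size and shows what a four-group split of the cache might look like. The 16-way associativity, 4MB ceiling, and four-partition limit come from the text; the 64-byte line size, the idea of allocating partitions in whole ways, and the group names are assumptions made for illustration.

```python
# Hypothetical sketch of DynamIQ L3 geometry and partitioning.
# From the article: 16-way set associative, 0 KB to 4 MB, up to four partitions.
# Assumptions (not from the article): 64-byte cache lines, and partitions
# carved out in whole ways.
WAYS = 16
LINE_BYTES = 64  # assumed line size
MAX_L3_BYTES = 4 * 1024 * 1024
MAX_PARTITIONS = 4


def l3_sets(size_bytes: int) -> int:
    """Number of sets for a given L3 size (0 bytes means no L3)."""
    if not 0 <= size_bytes <= MAX_L3_BYTES:
        raise ValueError("L3 size must be between 0 KB and 4 MB")
    return size_bytes // (WAYS * LINE_BYTES)


def partition_capacity(size_bytes: int, shares: dict[str, int]) -> dict[str, int]:
    """Map each group's share of the 16 ways to a capacity in bytes.

    Calling this again with different shares models software
    reapportioning the partitions at runtime.
    """
    if len(shares) > MAX_PARTITIONS:
        raise ValueError("the L3 supports at most four partitions")
    if any(w < 1 for w in shares.values()) or sum(shares.values()) > WAYS:
        raise ValueError("each group needs at least one way; total must be <= 16")
    bytes_per_way = l3_sets(size_bytes) * LINE_BYTES
    return {name: ways * bytes_per_way for name, ways in shares.items()}


print(l3_sets(4 * 1024 * 1024))  # 4096
plan = partition_capacity(4 * 1024 * 1024,
                          {"os": 8, "apps": 4, "dsp": 2, "accel": 2})
print({name: size // 1024 for name, size in plan.items()})
# {'os': 2048, 'apps': 1024, 'dsp': 512, 'accel': 512}
```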


This also allows ARM to implement power gating inside the L3, which can shut down part or all of the cache when it is not in use. So when your smartphone is performing very basic tasks or sleeping, the L3 cache can be left off. The pseudo-exclusive nature of these caches also means that waking a single core for a short process doesn’t require the entire memory system to be powered up, again saving power. L3 cache power control is supported as part of Energy Aware Scheduling.
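A power-gating decision of this kind could look something like the following. This is a hypothetical policy, not ARM’s implementation or the Energy Aware Scheduling algorithm: it assumes a 2MB L3 that can be gated in four equal portions, keeps just enough portions powered to cover an estimated working set, and switches the L3 off entirely when no cores are active.

```python
import math

# Hypothetical L3 power-gating policy, for illustration only. The article
# says the L3 can be partly or fully powered down when not in use; the
# 2 MB size, the four equal power portions, and the working-set heuristic
# below are assumptions, not ARM's or Energy Aware Scheduling's logic.
L3_BYTES = 2 * 1024 * 1024
POWER_PORTIONS = 4


def portions_to_power(active_cores: int, working_set_bytes: int) -> int:
    """Return how many L3 portions to keep powered."""
    if active_cores == 0 or working_set_bytes <= 0:
        return 0  # sleeping or trivial work: leave the L3 off entirely
    portion_bytes = L3_BYTES // POWER_PORTIONS
    needed = math.ceil(working_set_bytes / portion_bytes)
    return min(POWER_PORTIONS, needed)


print(portions_to_power(0, 1024 * 1024))      # 0: phone asleep
print(portions_to_power(1, 100 * 1024))       # 1: one core, small task
print(portions_to_power(8, 3 * 1024 * 1024))  # 4: heavy load, full L3
```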

The introduction of an L3 cache has also facilitated the move to private L2 caches. This in turn allows the use of higher-latency asynchronous bridges, as requests aren’t sent out to the L3 as often. ARM has also reduced L2 memory latency, with 50% faster access to the L2 compared with the Cortex-A73.
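A standard average memory access time (AMAT) calculation shows why a slower path to the L3 can be acceptable once most requests are served by private L1 and L2 caches. Every latency and miss rate below is a made-up illustrative value rather than a published Cortex-A75 or A55 figure, and the asynchronous bridge is modeled crudely as a fixed number of extra cycles on each trip to the L3.

```python
# Average memory access time (AMAT) for a three-level cache hierarchy.
# All latencies (in cycles) and miss rates are made-up illustrative values,
# not published Cortex-A75/A55 figures.
def amat(l1_hit, l1_miss, l2_hit, l2_miss, l3_hit, l3_miss, dram):
    return l1_hit + l1_miss * (l2_hit + l2_miss * (l3_hit + l3_miss * dram))


base = dict(l1_hit=4, l1_miss=0.10, l2_hit=10, l2_miss=0.30,
            l3_hit=30, l3_miss=0.50, dram=200)
# Model an asynchronous bridge as 5 extra cycles on every trip to the L3.
bridged = dict(base, l3_hit=base["l3_hit"] + 5)

print(f"{amat(**base):.2f}")     # 8.90
print(f"{amat(**bridged):.2f}")  # 9.05
```

Because the extra bridge cycles are only paid on L2 misses, their cost is scaled by the product of the L1 and L2 miss rates (0.1 × 0.3 = 0.03 here), so five extra cycles add just 0.15 cycles to the average access.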

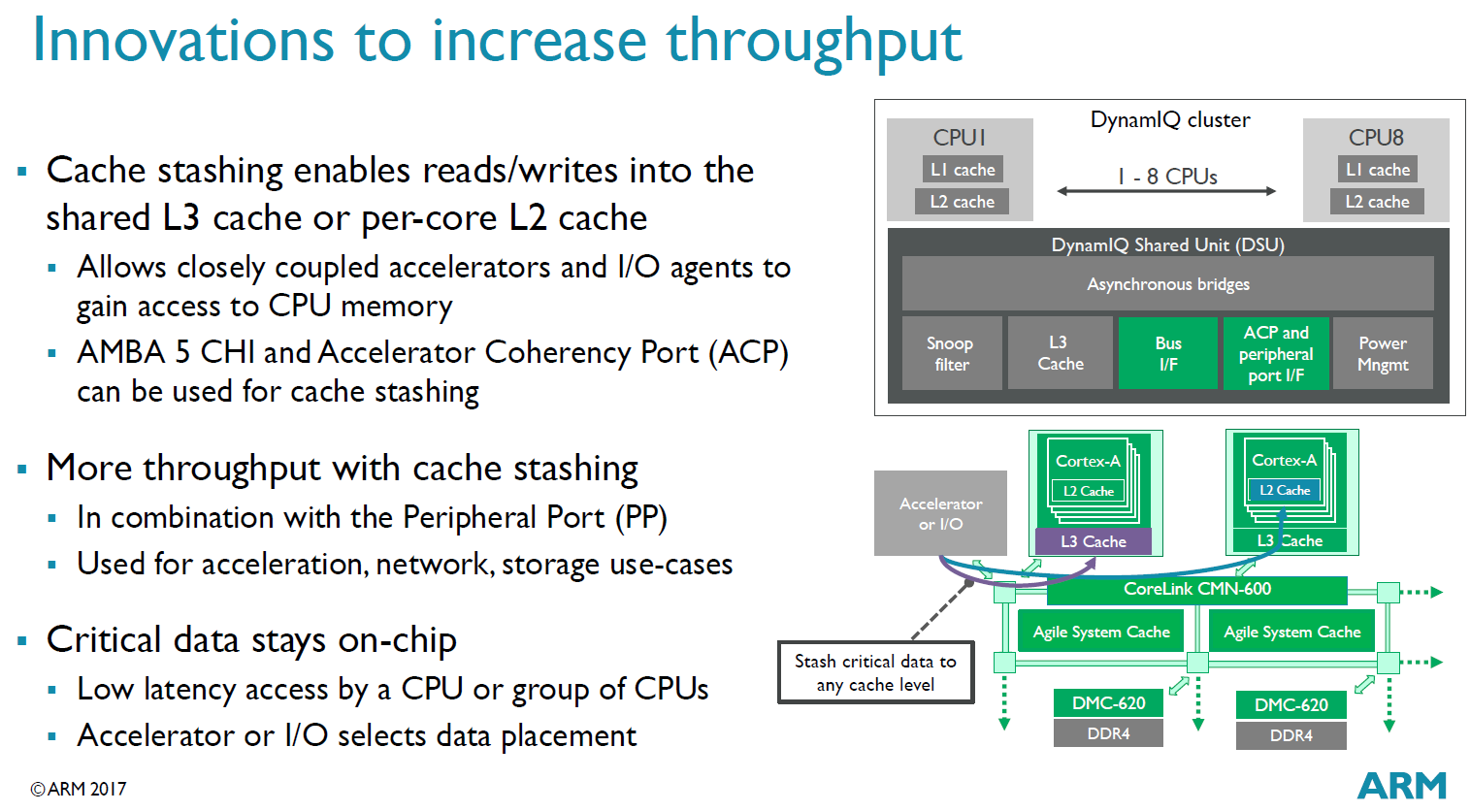

In order to increase performance and make the most of its new memory sub-system, ARM has also introduced cache stashing inside the DSU. Cache stashing grants closely coupled accelerators and I/O agents direct access to parts of the CPU memory, enabling direct reads and writes into the shared L3 cache and the L2 caches of each core.

The idea is that information from accelerators and peripherals that requires quick processing in the CPU can be injected directly into CPU memory with minimal latency, rather than having to be written to and read from much higher latency main RAM or relying on prefetching. Examples could include packet processing in network systems, communicating with a DSP or visual accelerators, or data coming from an eye tracking chip for virtual reality applications. This is much more application-specific than many of ARM’s other new features, but offers up greater flexibility and potential performance gains for SoC and system designers.
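The latency argument behind cache stashing can be shown with a toy model. In this sketch an accelerator either leaves its output in DRAM or stashes it into the shared L3 or a specific core’s L2, and a later CPU read is charged the latency of wherever the data sits. The class, its method names, and the cycle counts are all hypothetical, and the model ignores the L1, coherency, and snooping.

```python
# Toy latency model contrasting a DRAM hand-off with cache stashing into the
# shared L3 or a core's private L2. Cycle counts are made-up illustrative
# values and the class/method names are hypothetical.
LATENCY = {"L2": 10, "L3": 30, "DRAM": 200}


class ToyCluster:
    def __init__(self):
        self.l2 = {}     # core id -> set of addresses stashed in that core's L2
        self.l3 = set()  # addresses resident in the shared L3

    def stash(self, addr, target="L3", core=0):
        """Accelerator injects a line directly into CPU-side cache."""
        if target == "L2":
            self.l2.setdefault(core, set()).add(addr)
        else:
            self.l3.add(addr)

    def cpu_read(self, addr, core=0):
        """Return the cycles the given core waits to read addr."""
        if addr in self.l2.get(core, set()):
            return LATENCY["L2"]
        if addr in self.l3:
            return LATENCY["L3"]
        return LATENCY["DRAM"]  # data only ever reached main memory


cluster = ToyCluster()
print(cluster.cpu_read(0x1000))            # 200: packet sits in DRAM
cluster.stash(0x1000, target="L2", core=0)
print(cluster.cpu_read(0x1000, core=0))    # 10: stashed into core 0's L2
cluster.stash(0x2000)                      # default target: shared L3
print(cluster.cpu_read(0x2000, core=3))    # 30: any core hits in the L3
```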

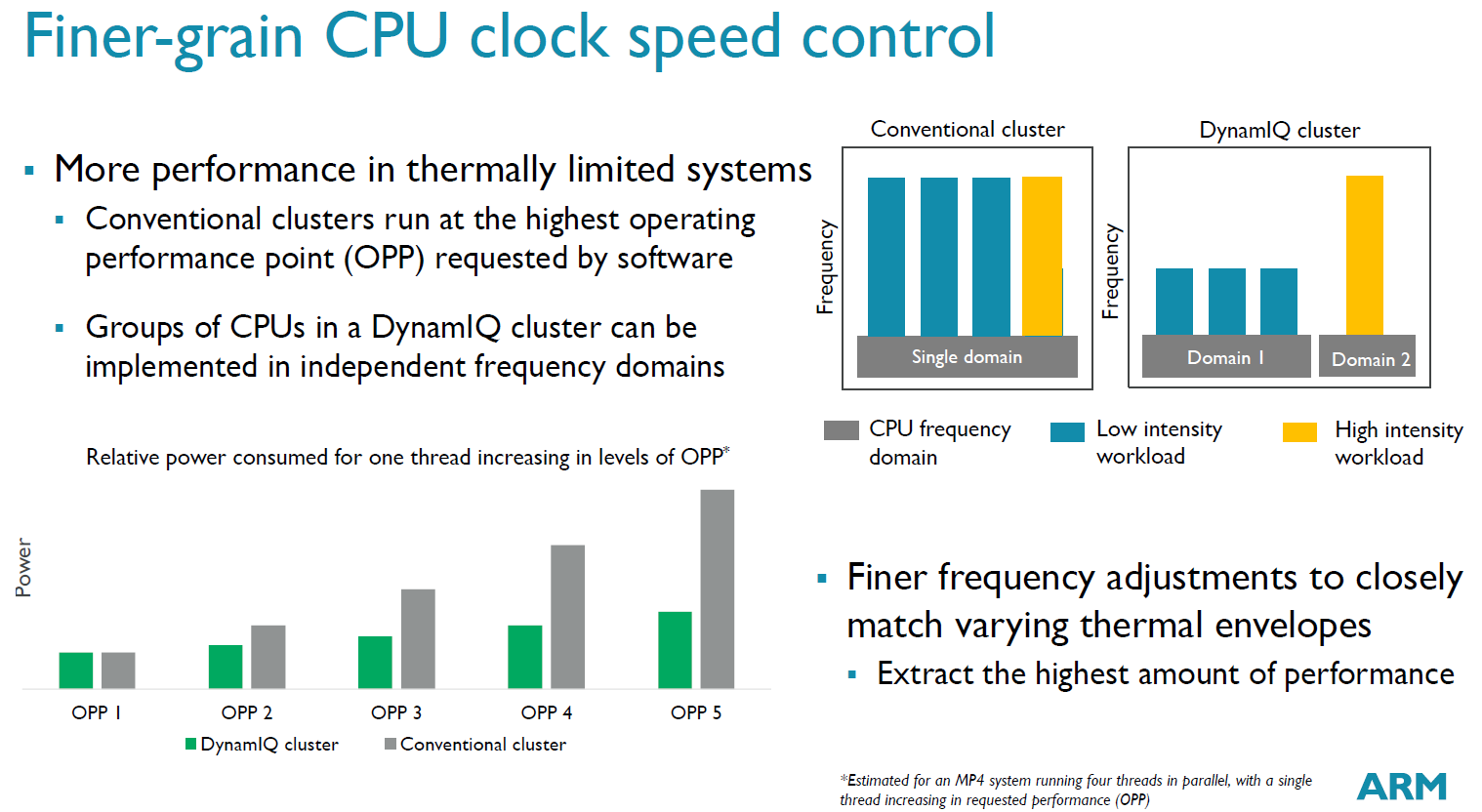


Going back to power, putting different CPU core types into a single cluster has required a rethink of how power and clock frequencies are managed with DynamIQ. Optional asynchronous bridges allow CPU clock domains to be configured on a per-core basis, whereas this was previously limited to a per-cluster basis. Designers can also choose to tie a core’s frequency synchronously to the DSU’s clock.

In other words, each CPU core can theoretically run at its own independently controlled frequency with DynamIQ. In reality, cores of the same type are more likely to be tied into domain groups, which control the frequency, voltage, and therefore power for a group of cores rather than for each core individually. ARM states that DynamIQ big.LITTLE requires that groups of big cores and groups of LITTLE cores can each scale voltage and frequency dynamically and independently.
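The domain-group arrangement can be sketched as a mapping from cores to frequency domains, where scaling a domain changes the clock of every core in it. The classes and the 2+6 layout below are hypothetical and the frequencies are made up; fully per-core control is simply the special case of one core per domain.

```python
from dataclasses import dataclass, field

# Hypothetical model of per-domain DVFS in a DynamIQ cluster. Domain names,
# core layout, and frequencies are illustrative assumptions.


@dataclass
class FreqDomain:
    name: str
    freq_mhz: int
    cores: list[int] = field(default_factory=list)


class Cluster:
    def __init__(self, domains: list[FreqDomain]):
        self.domains = {d.name: d for d in domains}
        self.core_domain = {c: d.name for d in domains for c in d.cores}

    def set_domain_freq(self, name: str, freq_mhz: int) -> None:
        # Scales every core in the domain together, leaving other domains alone.
        self.domains[name].freq_mhz = freq_mhz

    def core_freq(self, core: int) -> int:
        return self.domains[self.core_domain[core]].freq_mhz


# A 2+6 layout: big cores 0-1 in one domain, LITTLE cores 2-7 in another.
cluster = Cluster([
    FreqDomain("big", 2000, [0, 1]),
    FreqDomain("little", 1200, [2, 3, 4, 5, 6, 7]),
])
cluster.set_domain_freq("big", 1000)  # e.g. throttle the big cores when hot
print(cluster.core_freq(0), cluster.core_freq(5))  # 1000 1200
```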

This is particularly useful in thermally limited use cases, such as smartphones, as it ensures that big and LITTLE cores can continue to be power scaled depending on the workload, while still occupying the same cluster. Theoretically, SoC designers could use multiple domains for targeting different CPU power points, similar to what MediaTek has attempted to do with its tri-cluster designs, although this increases complexity and cost.

With DynamIQ, ARM has also simplified its power-down sequences when using hardware controls, which should mean that unused cores can turn off that little bit faster. By moving cache and coherency management, previously handled in software, into hardware, ARM has been able to remove time-consuming steps related to disabling and flushing caches upon power down.

Wrap up

DynamIQ represents a notable step forward for mobile multi-core processing, and it makes a number of important changes to the current formula that will have some interesting implications for future mobile products. Not only does DynamIQ offer potential performance improvements for multi-core systems, but it also empowers SoC developers to implement new big.LITTLE arrangements and heterogeneous compute solutions, both for mobile and beyond.

We will likely see products announced that make use of DynamIQ technology and ARM’s latest CPU cores towards the end of 2017 or perhaps in early 2018.
