
Best of Android: How we score

After reading about all our winners this year, you’re probably wondering how we score each candidate. That’s a great question to have! We actually redid everything this year, and I think even the most nitpicky readers out there will appreciate how we improved our processes. There will never be a perfect scoring algorithm, but we’re proud of what we have.

As the eponymous Gary Sims would say: Let me explain.

Objective testing

Last year we debuted a system of objective testing to determine the quality of smartphones, and admittedly it wasn’t as great as it could be. Specifically, the system we used to rank phones was too simplistic, and led to some unexpected results. Nothing wrong, mind you, but we can do better. This year, we generated a ton more data, all with the goal of being able to better contextualize performance instead of merely ranking it. You may have noticed our deep dive reviews here and there — that’s just a taste of what we can do now.

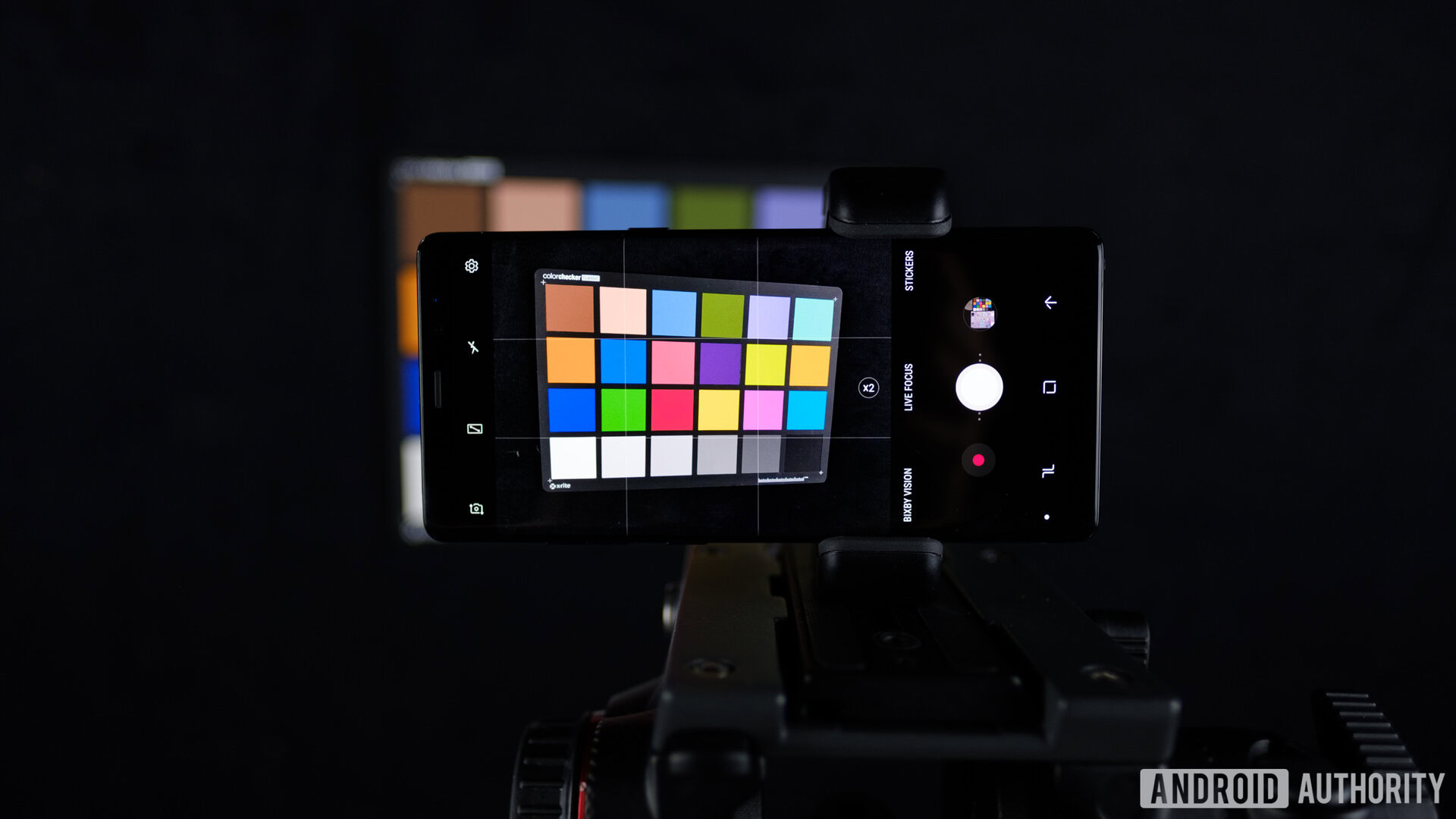

As a refresher, all of our tests are performed in a lab run by our employees, using turnkey solutions that are time-tested by industry professionals. For example, we reached out to our friends at Imatest and SpectraCal to create our camera testing and display testing suites, respectively. Both Imatest’s proprietary imaging analysis software and SpectraCal’s CalMAN software are what bigger manufacturers use, so when we publish data from our test units, it’s very similar to what they’re seeing.

For our processor tests, we gather an array of scores from several different benchmarks, each meant to capture relevant performance data in a different situation. For example, we use Geekbench to test the CPU, 3DMark to test the GPU, and so on. We run a large battery of benchmarks across the audio, display, camera, battery, and processor categories to get a complete picture of the phone. If you’d like to know more about how we test and what we look for, you can check it out here.

After all these tests, we’re left with a huge pile of data to sift through. How do we know what’s good? How do we know what’s bad? How do we fairly score each test?

What does the data mean?

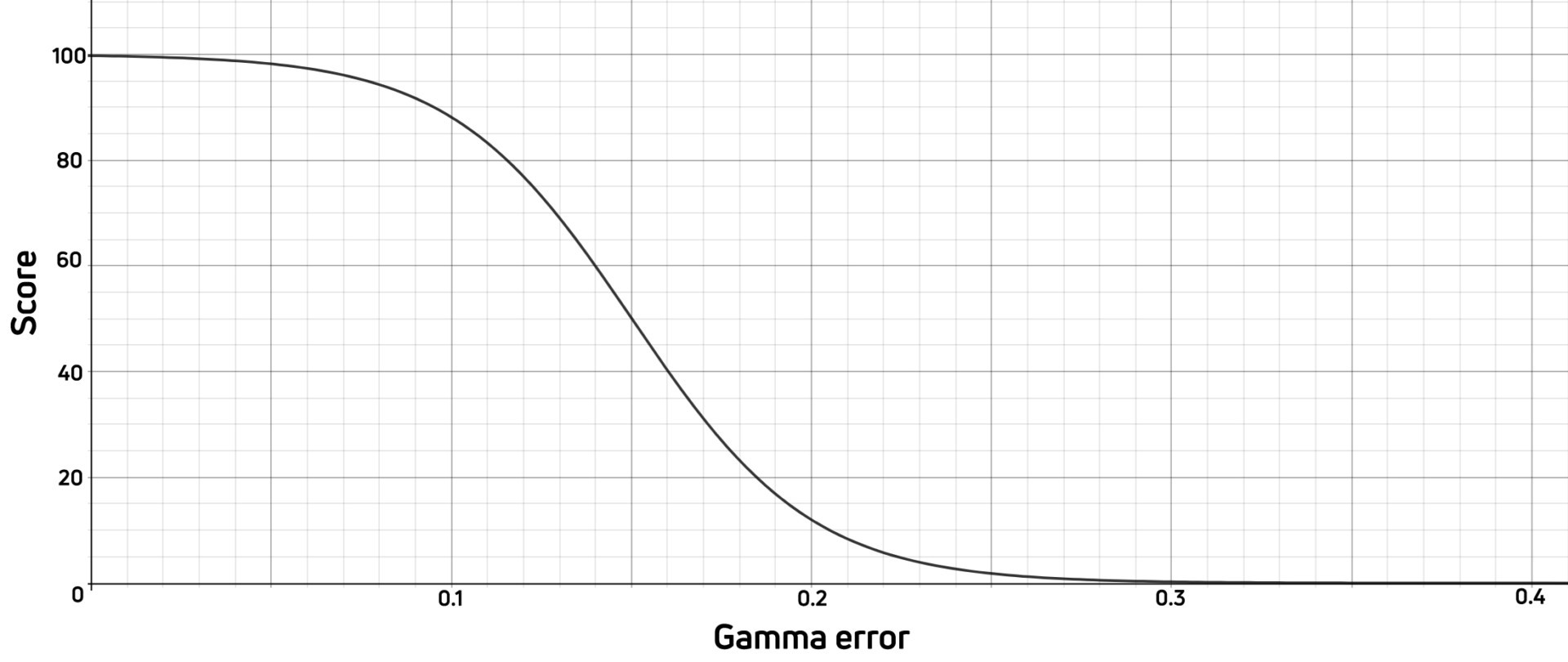

For each metric that could be limited by human perception (screen brightness, color accuracy, etc.), we spent countless hours researching what those limits were, and added them to our master spreadsheet. Then we determined if there were any other philosophical tweaks needed to accommodate how people use their phones. Essentially, we want to reward devices for their performance in relation to how a human perceives it, but we don’t want any outliers in any one measure to tip the scales too far one way or another. If you can’t tell the difference, it shouldn’t be reflected in our scores, right?

For each data point, we applied an equation to assign the results a score from 0-100, but the scale rewards and punishes outliers at an exponentially decreasing rate. This way, phones with infinitesimally small audio distortion wouldn’t get a boost if you can’t hear the difference, and phones with one really low score wouldn’t be sunk if they had lots of other bright spots. Once we applied these curves to each minor data point for every major category, we normalized the scores to make every major category (camera, display, audio, etc.) worth the same overall. For our purposes, a score below 10 is bad, a score of 50 is right dead-center between our limits, and a score of 90 exceeds most people’s perception. Consequently, a score of 100 or 0 is nearly impossible to achieve.
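To make that curve a little more concrete, here is a rough Python sketch of how such a scale could behave. We aren't publishing the actual equation, so treat everything here as an assumption for illustration: the logistic (S-shaped) curve, the choice to pin the two perceptual limits at 10 and 90, the function names, and the brightness limits in the example are all hypothetical.

```python
import math


def perceptual_score(value, bad_limit, good_limit):
    """Map a raw measurement onto a 0-100 scale using a logistic curve.

    Assumptions (not the real formula): bad_limit scores 10,
    good_limit scores 90, and the midpoint between them scores 50.
    If lower readings are better (e.g. distortion), pass a bad_limit
    that is larger than good_limit.
    """
    midpoint = (bad_limit + good_limit) / 2
    half_range = (good_limit - bad_limit) / 2
    # ln(9) is the steepness that makes the limits land on 10 and 90.
    steepness = math.log(9) / half_range
    # Clamp the exponent so extreme outliers cannot overflow math.exp.
    exponent = max(-700.0, min(700.0, -steepness * (value - midpoint)))
    return 100 / (1 + math.exp(exponent))


def category_score(minor_scores):
    """Average the minor data points so every category shares one 0-100 range."""
    return sum(minor_scores) / len(minor_scores)


if __name__ == "__main__":
    # Hypothetical brightness limits in nits, purely for illustration.
    for nits in (200, 400, 600, 800):
        print(nits, round(perceptual_score(nits, 200, 600), 1))
```

In this sketch, moving from the midpoint to the upper limit is worth 40 points, while overshooting the upper limit by the same amount adds fewer than 10. That is the diminishing-returns behavior described above: past the point where people can perceive a difference, extra performance barely moves the score.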

While we won’t publish our internal scores for everything, we may refer to them from time to time to drive certain points home. There’s a lot of hyperbole out there, and we’d like to put your minds at ease: even the worst smartphones are objectively pretty decent most of the time. If something scores well against our algorithms, it means that you probably won’t be able to tell the difference between it and the one “best” product for that test.

How do you turn the data into a score?

Once we collect all our data and contextualize it with our equations, we can then derive a score to show you. For each score we display, the formula used to determine it is: Score = ((product score)/(max score))*10. But don’t worry: the overall score shows exactly how the phone stacks up to the rest of the field at any given point in time.

Our site will then take all the cumulative scores for every review of that product type, and assign the highest-scoring device a score of 10. Everything else will then scale down accordingly. As you can imagine, this has two benefits:

- Scores will always reflect the position of any particular phone in the market regardless of time

- Scores will always be able to accommodate newer, better models in a fair manner

Neat, huh? Even if you were to look up an old phone that might be on clearance, you can see exactly how well that device compares to the other devices you’re researching.
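If you'd like to see the rescaling in action, here is a minimal Python sketch of the formula Score = ((product score)/(max score))*10. The phone names and internal scores are made up, and the function name is our own invention for this example, not anything from the site's actual code.

```python
def rescale_to_ten(internal_scores):
    """Apply Score = (product score / max score) * 10 to every device.

    internal_scores maps a device name to its internal score.
    The highest-scoring device always ends up with exactly 10.
    """
    if not internal_scores:
        raise ValueError("need at least one device to rescale")
    top = max(internal_scores.values())
    if top <= 0:
        raise ValueError("the highest internal score must be positive")
    return {name: score / top * 10 for name, score in internal_scores.items()}


if __name__ == "__main__":
    # Hypothetical devices and internal scores, for illustration only.
    field = {"Phone A": 80, "Phone B": 72, "Phone C": 60}
    print(rescale_to_ten(field))

    # A newer, better model arrives and everything else scales down.
    field["Phone D"] = 100
    print(rescale_to_ten(field))
```

In the first run Phone A is the best of the bunch and gets the 10. Once the hypothetical Phone D shows up with a higher internal score, it takes the 10 and Phone A slides down to 8, even though nothing about Phone A itself has changed.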

While you may not agree with some of our scores, that usually means that your constellation of needs is unique to you, which is totally fine! You may find that if you were able to play with our weightings to reflect your needs, our data would agree with you. However, we have to serve the needs of all our readers here, and we decided that our new method was preferable to the old way of doing things.