Beware of the benchmarks, how to know what to look for

As regular followers of the wonderful world of Android you have probably glanced through numerous benchmarks already this year, especially when it comes to stacking new devices up against one another. However, after numerous scandals, odd results and the closed nature of many benchmarking tools, many are skeptical about their actual value. At ARM’s Tech Day last week we were treated to an interesting talk on the subject of benchmarking, and a heated discussion ensued. We think many of the points raised are well worth sharing.

Benchmarks as a tool

There are plenty of benchmarks out there, looking to score everything from CPU and GPU performance to battery life and display quality. After all, if we’re shelling out hundreds of dollars for a piece of technology, it better perform well.

However, it’s quite widely accepted that benchmark tests don’t often accurately reflect real world applications. Even those that attempt to imitate an average user’s demands don’t always follow particularly scientific and repeatable methods. Let me share some examples.

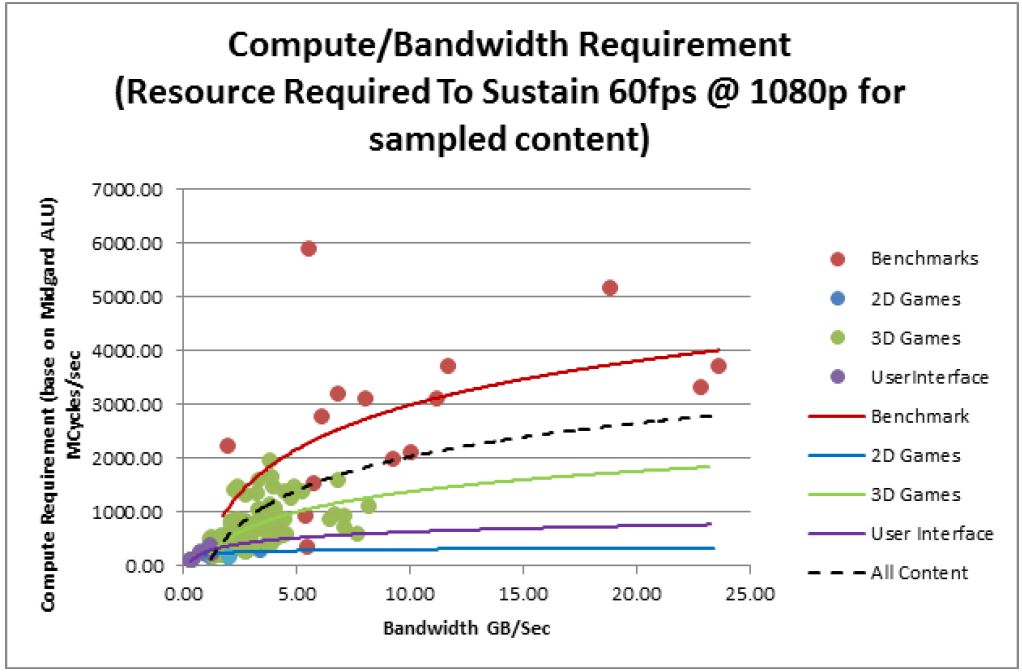

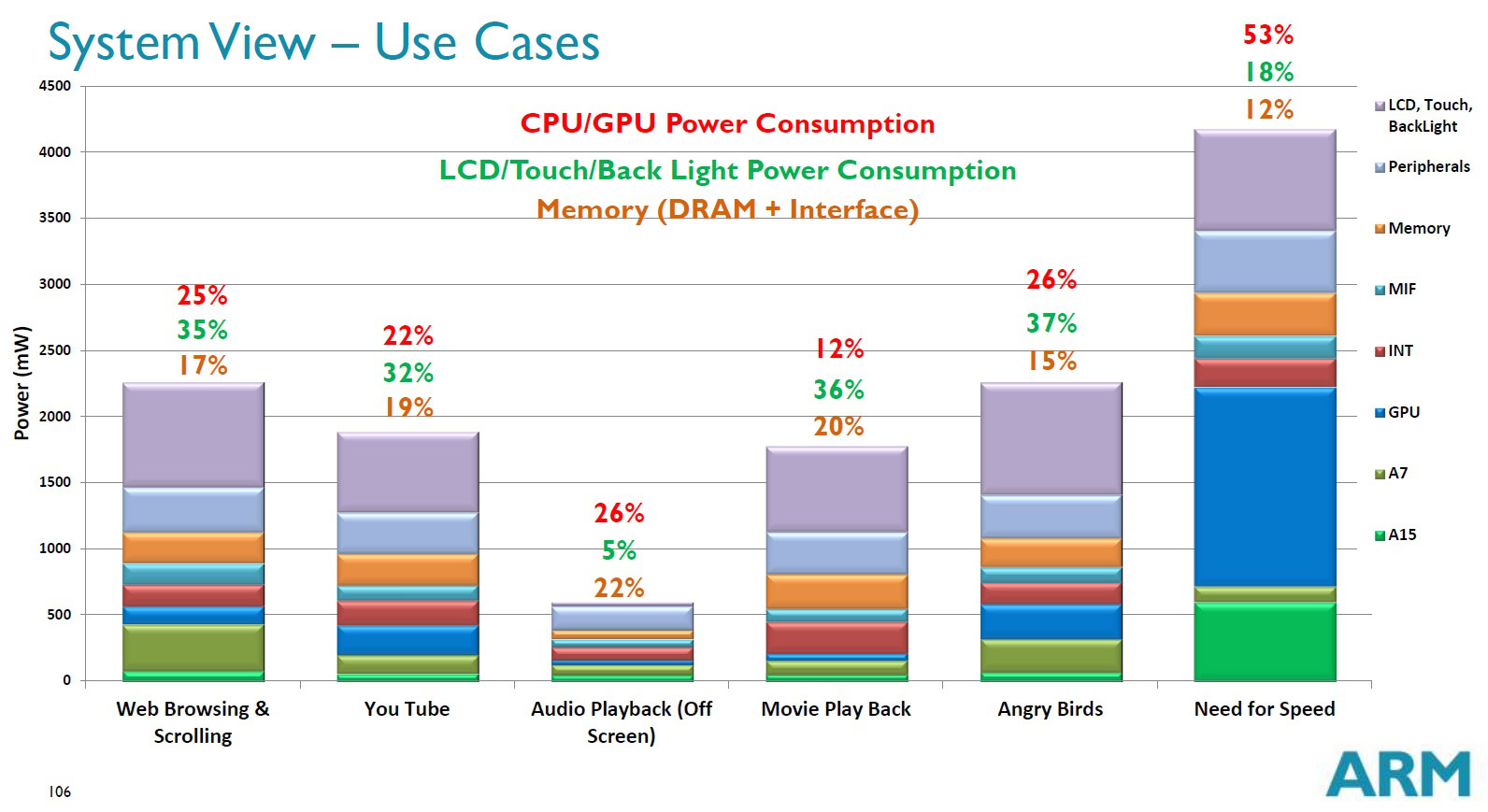

The graph above, collated by ARM, shows the compute and memory bandwidth required by a number of popular Android benchmarks, a selection of 2D and 3D games available from the Play Store, and general user interface requirements. The lines show the general trend of each group, depending on if they are leaning more towards bandwidth or compute workloads. More on that in a minute.

Clearly, the majority of the benchmarks are testing hardware far in excess of anything that users will experience with an actual app. Only three or four fall into the cluster of actual 3D games, making the rest not that useful if you want to know how well your new phone or tablet will cope in the real world. There are browser-based suites that can vary widely based on nothing more than the underlying browser code, and others that far exceed the memory bandwidth capacity of most devices. It’s tricky to find many that closely resemble a real-world scenario.

But suppose we just want to compare the potential peak performance of two or more devices. Apps could always become more demanding in the future, right? Well, there are two problems with this too: bottlenecking, and the difficulty of simulating higher workloads.

Looking at the graph again, we see a number of tests pushing peak memory bandwidth, but this is the biggest bottleneck in terms of mobile performance. We’re not going to see accurate results for performance metric A if the system is bottlenecked by memory speeds. Memory is also a huge drain on the battery, so it’s tricky to compare power consumption under various loads if they’re all making different demands on memory.
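One general way to reason about this kind of bottleneck is the roofline model. It is not something taken from ARM's talk, just a widely used rule of thumb: attainable throughput is capped either by peak compute or by memory bandwidth multiplied by the amount of work done per byte fetched, whichever is lower. The sketch below uses made-up device numbers and test names purely for illustration.

```python
def attainable_gflops(peak_gflops, bandwidth_gb_s, flops_per_byte):
    """Roofline estimate: the lower of the compute ceiling and the memory ceiling."""
    memory_ceiling = bandwidth_gb_s * flops_per_byte
    return min(peak_gflops, memory_ceiling)


def is_memory_bound(peak_gflops, bandwidth_gb_s, flops_per_byte):
    """True when memory bandwidth, not compute, limits the workload."""
    return bandwidth_gb_s * flops_per_byte < peak_gflops


# Hypothetical device figures, not measurements of any real phone.
PEAK_GFLOPS = 100.0
BANDWIDTH_GB_S = 10.0

# Hypothetical workloads: operations performed per byte read from memory.
workloads = {"bandwidth-heavy test": 2.0, "compute-heavy test": 20.0}

for name, intensity in workloads.items():
    limit = attainable_gflops(PEAK_GFLOPS, BANDWIDTH_GB_S, intensity)
    bound = "memory" if is_memory_bound(PEAK_GFLOPS, BANDWIDTH_GB_S, intensity) else "compute"
    print(f"{name}: at most {limit} GFLOPS ({bound}-bound)")
```

In this toy example the bandwidth-heavy test can never exceed a fifth of the chip's compute peak, so its score says more about the memory system than about the processor it claims to measure.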

To try and sidestep this issue, you’ll find that some benchmarks split workloads up to test different parts, but then this isn’t a particularly good view of how the system performs as a whole.

Furthermore, how do you go about accurately predicting and simulating workloads that are more demanding than what is already out there? Some 3D benchmarks throw a ton of triangles into a scene to simulate a heavier load, but GPUs are not designed for solely that type of workload. In this sort of situation, the results are potentially testing a particular attribute of a GPU or CPU more than another, which will of course produce quite different results from other tests and can vary widely for different bits of hardware. It’s just not as reliable as a real world workload, which is what mobile processors are designed for, but testing basic games doesn’t always give us a good indication of peak performance.

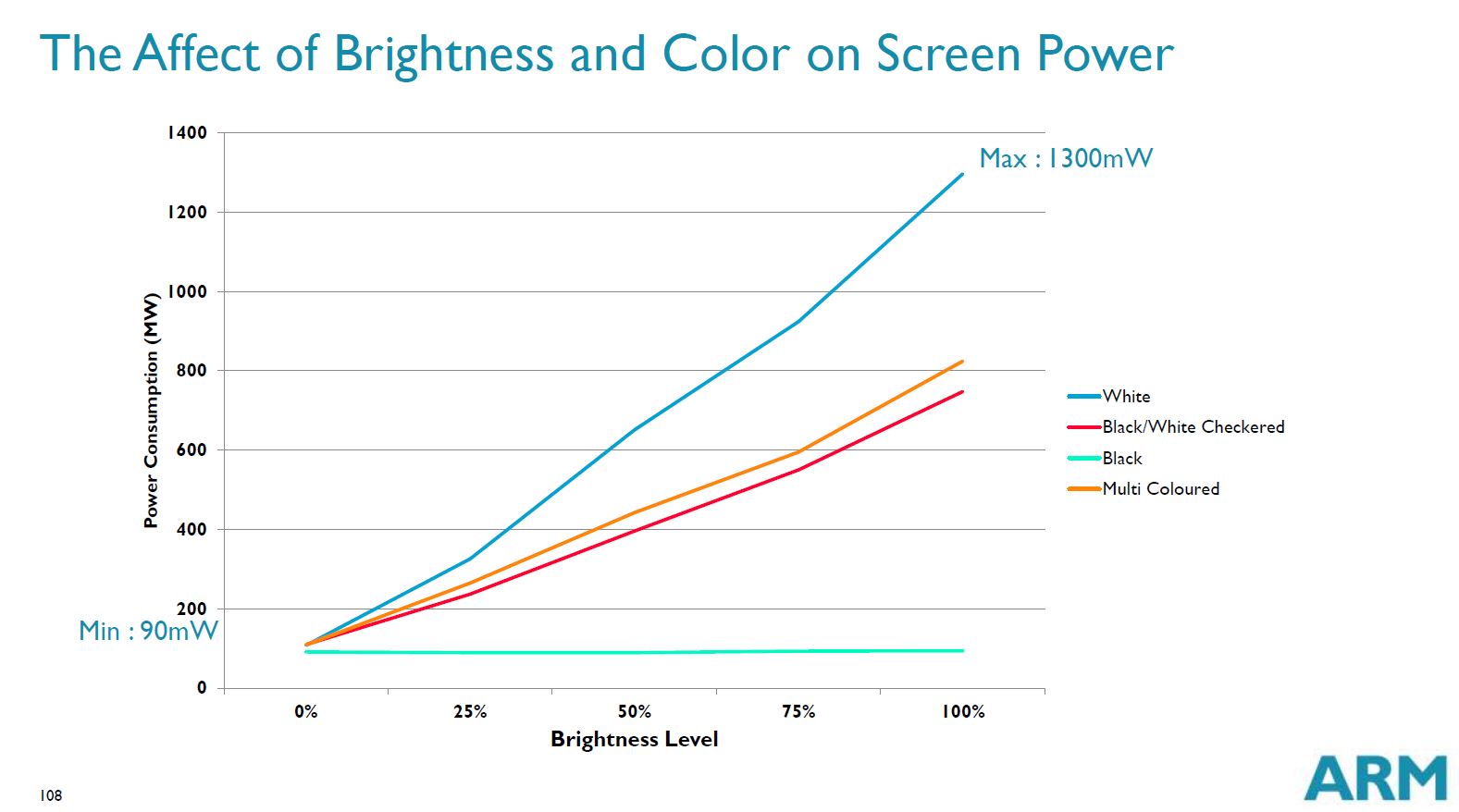

Even if we chuck benchmarking suites out of the window, we are left with issues when it comes to running tests using existing games and loads. Screen brightness can have a huge effect in battery tests, and not all 0% settings are the same. Running different videos can even have an effect on power consumption, particularly with an AMOLED display. Gaming scenarios can vary from play-through to play-through, especially in games with dynamic physics and gameplay.

As you can see, there’s plenty of room for variance and loads of possible things that we can test.
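A simple way to put a number on that variance is to repeat the same test several times and look at the spread rather than a single score. The sketch below reports the mean and the coefficient of variation (sample standard deviation divided by the mean). The scores are invented for illustration, and the 2% threshold is an arbitrary assumption, not an accepted standard.

```python
from statistics import mean, stdev


def run_spread(scores):
    """Return (mean, coefficient of variation) for repeated runs of one test."""
    avg = mean(scores)
    cv = stdev(scores) / avg  # sample standard deviation relative to the mean
    return avg, cv


# Hypothetical scores from five runs of the same benchmark on the same device.
runs = [41250, 40980, 42100, 39870, 41500]

avg, cv = run_spread(runs)
print(f"mean score: {avg:.0f}, run-to-run variation: {cv:.1%}")

# Assumed rule of thumb: differences smaller than the spread are just noise.
if cv > 0.02:
    print("Spread is above 2%, so small gaps between devices mean little.")
```

If two phones differ by less than the run-to-run variation of either one, the comparison is not telling us much.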

The trouble with numbers

Unfortunately, testing is made even more complicated by simple score results and “black-box” testing methods that prevent us from knowing what is really going on.

As we mentioned before, if we don’t know exactly what’s been tested we can’t really relate a score to the hardware differences between products. Fortunately, some benchmarks are more open than others about exactly what they test, but even then it’s tough to compare test A to test B for a more rounded picture.

Not to mention that the increasing reliance on unrelated numbers has led to companies trying to game the results, by boosting speeds and optimizing for popular test scenarios. Not too long ago companies were caught out overclocking their parts while benchmarks were running, and sadly software is still open to trickery.

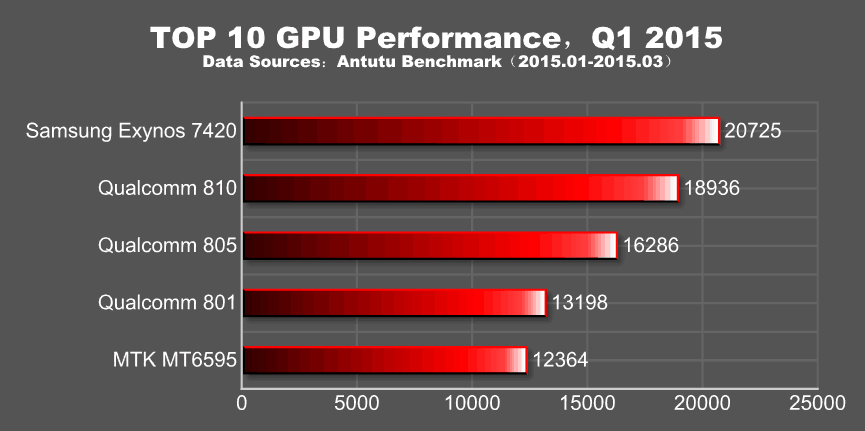

This certainly isn’t an issue solely related to benchmarking software, but it’s tougher for companies to get away with stressing their hardware when consumers might be running a game or task for a long period of time. However, there are still problems with “real-world” tests too. FPS for gaming is an overly generalized score. It doesn’t tell us about frame pacing or stuttering, and there’s still the amount of power consumed to consider. Is it worth grabbing a 60,000 AnTuTu score if your battery drains flat in less than an hour?
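To see why an average FPS figure can hide stuttering, consider two made-up frame-time traces that produce exactly the same average. The sketch below is only an illustration: the traces are invented, the 99th-percentile calculation uses a simple nearest-rank method, and counting a "stutter" as any frame taking more than twice the average is an assumption, not an industry definition.

```python
import math
from statistics import mean


def summarize_frame_times(frame_times_ms):
    """Summarize a list of per-frame render times given in milliseconds."""
    ordered = sorted(frame_times_ms)
    avg_ms = mean(ordered)
    # Nearest-rank 99th percentile: the frame time that 99% of frames stay under.
    idx = max(0, math.ceil(0.99 * len(ordered)) - 1)
    # Assumed definition of a stutter: a frame slower than twice the average.
    stutters = sum(1 for t in ordered if t > 2 * avg_ms)
    return {
        "avg_fps": 1000 / avg_ms,
        "p99_ms": ordered[idx],
        "stutters": stutters,
    }


# Two hypothetical 100-frame traces that both take 2,000 ms in total.
smooth = [20.0] * 100
stuttery = [16.0] * 96 + [116.0] * 4

for name, trace in [("smooth", smooth), ("stuttery", stuttery)]:
    print(name, summarize_frame_times(trace))
```

Both traces average 50 fps, yet the second one contains four frames that each hang for 116 ms, which a player would notice as visible hitches.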

Is the situation hopeless?

OK, so up until now I have been quite negative about benchmarks, which maybe isn’t really fair. Although there are problems with benchmarking, there isn’t really an alternative, and as long as we are aware of the shortcomings we can be discerning about the results and methods that we base opinions on.

A healthy sample of scores from a variety of sources is a good place to start. Ideally we take in a healthy mix of performance-pushing benchmarks, understand any hardware weaknesses, and top it off with a good sample of repeatable real-world tests. We should always remember that power consumption is the other half of the argument. Mobile users constantly bemoan battery life yet demand ever faster devices.

Ultimately, we need to take in a good sample of results, from a variety of sources and test types and combine them together to form the most accurate assessment of a device’s performance.
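One possible way to combine results from different tests, offered here as an assumption rather than a method from ARM's talk, is to express each score relative to a reference device and take the geometric mean of those ratios, so that no single benchmark with large numbers dominates. The test names and scores below are invented, and the sketch assumes that higher is better in every test.

```python
from statistics import geometric_mean


def relative_score(device, baseline):
    """Geometric mean of per-test ratios against a baseline device.

    Both arguments map test name -> score, where higher is assumed better.
    A result above 1.0 means the device beats the baseline on balance.
    """
    ratios = [device[test] / baseline[test] for test in baseline]
    return geometric_mean(ratios)


# Hypothetical scores for two phones across three unrelated tests.
phone_x = {"cpu_suite": 42000, "gpu_suite": 1800, "browser_suite": 950}
phone_y = {"cpu_suite": 38000, "gpu_suite": 2100, "browser_suite": 1000}

print(f"phone Y relative to phone X: {relative_score(phone_y, phone_x):.2f}")
```

A combined figure like this is still only as good as the tests that feed it, so it complements the individual results rather than replacing them.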

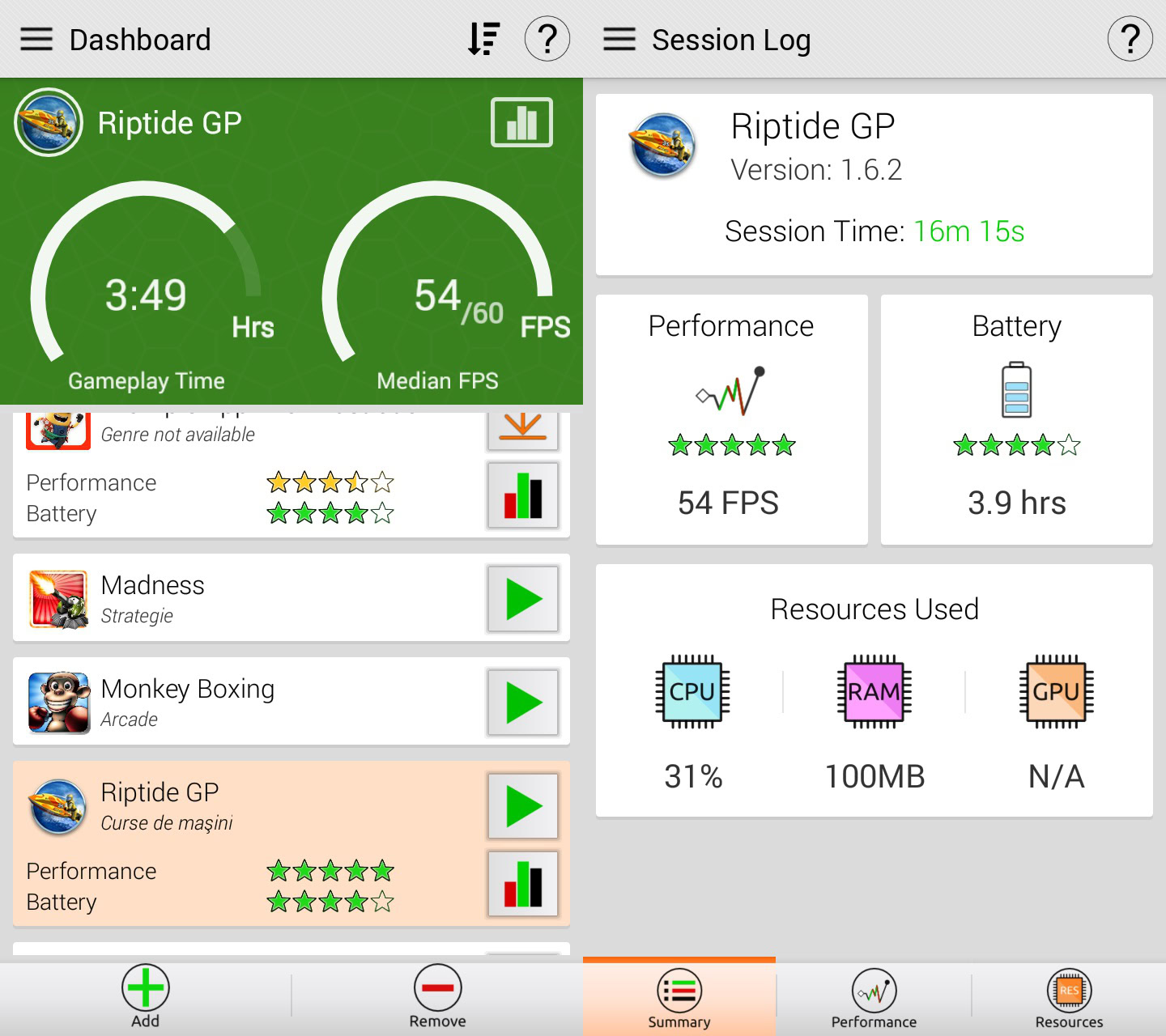

One possible light in this otherwise dark and murky field is GameBench. Rather than creating artificial tests, GameBench uses real-world games and applications to judge the performance of a device. This means that the results actually reflect what real users experience with real apps. If you want to know if Riptide GP2 will work better on phone X or phone Y, then GameBench can tell you. However, there are some drawbacks. As I mentioned above, gameplay tests aren’t repeatable. If I play a game for 20 minutes and keep failing to get to the end of level 1, then the results will be different to playing levels 1 to 5 in the same time frame. Also, for the free version at least, the main metric is frames per second, which isn’t that helpful. On the plus side, GameBench automatically measures battery life. This means that if phone X plays Riptide GP2 at 58 fps for 2.5 hours, but phone Y plays it at 51 fps for 3.5 hours, then I would pick phone Y even though its fps is slightly lower.
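The Riptide GP2 comparison above can be worked through with a little arithmetic. The sketch below uses the figures from the example. "Frames per charge" is a made-up illustrative metric, not something GameBench reports, and it assumes the quoted hours are how long each phone lasts on a full battery.

```python
def frames_per_charge(fps, hours):
    """Total frames rendered before the battery runs out (illustrative metric)."""
    return fps * hours * 3600


def percent_change(old, new):
    """Percentage change going from old to new."""
    return (new - old) / old * 100


# Figures from the phone X / phone Y example.
x_fps, x_hours = 58, 2.5
y_fps, y_hours = 51, 3.5

print(f"phone X: {frames_per_charge(x_fps, x_hours):,.0f} frames per charge")
print(f"phone Y: {frames_per_charge(y_fps, y_hours):,.0f} frames per charge")
print(f"fps change X -> Y: {percent_change(x_fps, y_fps):.1f}%")
print(f"play time change X -> Y: {percent_change(x_hours, y_hours):.1f}%")
```

Phone Y gives up roughly 12% of the frame rate in exchange for 40% more play time, and renders 642,600 frames on a charge against 522,000 for phone X.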

Benchmarking like a pro

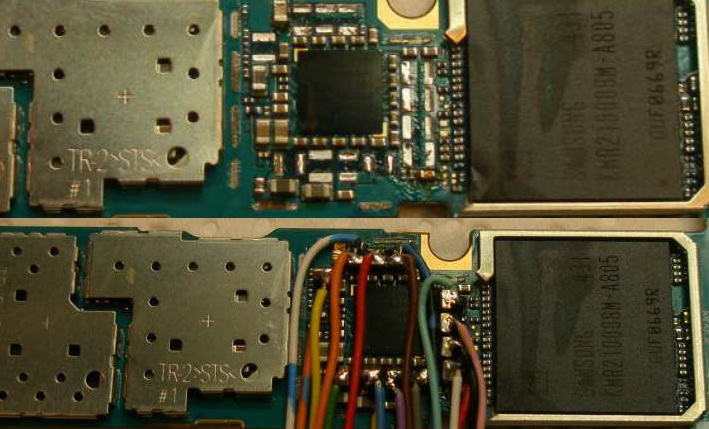

If you want an extremely detailed example of accurate benchmarking, ARM’s Rod Watt took us through his impressive test setup, which involves stripping down the phone and actually soldering current-sensing resistors onto the Power Management Integrated Circuit (PMIC) so he could accurately measure the power consumed by each component during testing.

From this type of setup it is possible to produce detailed results about exactly which component is drawing power during different types of tests, and how much power each one consumes.
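The principle behind a current-sensing resistor is plain Ohm's law: measure the small voltage drop across a known resistance to get the current, then multiply by the rail voltage to get power. The sketch below shows only that arithmetic. The resistor value, rail voltage, readings and sample interval are hypothetical, and nothing here describes the actual values or tooling used in ARM's setup.

```python
def rail_power_watts(shunt_drop_volts, shunt_ohms, rail_volts):
    """Power drawn on one supply rail, from the voltage drop across a sense resistor."""
    current_amps = shunt_drop_volts / shunt_ohms  # Ohm's law: I = V / R
    return rail_volts * current_amps              # P = V * I


def energy_joules(power_samples_watts, sample_interval_s):
    """Approximate energy used, treating each sample as constant over its interval."""
    return sum(power_samples_watts) * sample_interval_s


# Hypothetical readings: voltage drops (in volts) across a 10 milliohm resistor
# on a 1.0 V rail, sampled once per second.
SHUNT_OHMS = 0.010
RAIL_VOLTS = 1.0
drops = [0.005, 0.008, 0.012, 0.006]

powers = [rail_power_watts(d, SHUNT_OHMS, RAIL_VOLTS) for d in drops]
print("power per sample (W):", [round(p, 3) for p in powers])
print("energy over the run (J):", round(energy_joules(powers, 1.0), 3))
```

Repeating this for each rail gives a per-component power breakdown of the kind described here.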

If gaming is stuttering or draining the battery, we can see exactly how much power is being drawn by each component, to better assess the work being performed by the CPU or GPU compared with other tests, or whether the screen is sucking down all the juice.

While this may or may not be exactly what you’re looking for in a quick benchmark comparison, it just goes to show the level of detail and accuracy that can be achieved by going above and beyond just comparing numbers churned out by a benchmark suite.

Where do you stand on the benchmarking issue? Are they completely pointless, semi-useful, or do you make your purchasing decisions based almost solely on them?